Author:

Dai Yifei, PhD, Assistant Researcher, Ministry of Education Examination Center.

Original article published in “China Examination” 2016, Issue 11.

Abstract: In the era of the holistic view of validity, research on test validity has come to mean supporting the effectiveness of an examination with as much “evidence” as possible, and validity argumentation has become the basis of validity verification. “Evidence-Centered Design” (ECD) serves validity argumentation: relying on computer technology and computational thinking, and using the principles of educational measurement and statistics as its methods, it modularizes the test design process so that all evidence related to test validity is connected as fully as possible, forming an “evidence chain” for the test. The upgrade of China’s national question bank for educational examinations can draw on ECD’s validity argumentation framework by emphasizing validity, prioritizing evidence, and establishing examinee-centered and modular thinking, thereby fundamentally improving the scientific quality of educational examinations.

Keywords: Validity; Validity Verification; ECD; Question Bank

The question bank is an imported concept. It originated in the UK in the 1960s and is now widely accepted and adopted by major examination organizations worldwide as a psychometric technique and working method. The question bank is also a means of reforming China’s examination industry, improving evaluation mechanisms, and advancing theoretical innovation, serving as a “tool” for transforming examinations. Construction of the national question bank for educational examinations in China began in 2006, marked by the Ministry of Education Examination Center undertaking the national question bank construction project. To date, all subjects of the national unified educational examinations, including the college entrance examination, the graduate entrance examination, self-study examinations, and adult college entrance examinations, have been brought into question bank construction, forming Class A question banks, which store individual test items, and Class B question banks, which store complete test papers, each of a certain scale. Many examination programs have begun to accumulate question bank reserves, question setting is now managed as a routine process, and the capacity to respond to risk has improved significantly. However, constrained by political, social, and security factors, large-scale high-stakes educational examinations, represented by the college entrance examination, still adopt the traditional “closed” question-setting method: question setters cannot leave sequestration until the examination has ended, and the risk of leaked questions is controlled mainly by this isolation. Objectively speaking, question bank construction in China is still in its infancy, and there is still some distance to a modern question bank that integrates item management, test assembly, and score reporting.
Strictly speaking, if a question bank lacks validity standards, the examination products it outputs cannot explain the meaning of scores, and there is indeed room for improvement in the design of validity in our question banks. The new round of college entrance examination reforms requires that examinations primarily serve the admission of undergraduate institutions, emphasizing the assessment of abilities and qualities, adjusting the number of subjects, and allowing foreign language tests to be taken multiple times a year; graduate entrance examinations, self-study examinations, and other large-scale educational examinations are also undergoing reforms, with new ideas being brewed and top-level designs imminent; the rapid development of online media has led to unprecedented public attention to national educational examinations, and the power of social oversight cannot be underestimated. Facing these challenges, what can the national question bank do? How should it act?

The author believes that improving and upgrading the national question bank should no longer focus on hardware updates or pursuing an increase in the number of test papers stored, but should return to theoretical construction, integrating important psychological measurement concepts such as validity, reliability, equivalence, and score interpretation into the question bank. Among these, establishing the concept of validity is the most urgent. An examination without validity guarantees carries significant risks. Focusing on validity, on the statistical properties of educational measurement, and on the argumentation process based on score interpretation, building a “theory-driven” national question bank is the goal and significance of the next phase of educational examination question bank upgrades, and it is also the focus of this article. The theory of validity has now developed to a new stage, with the validity argumentation paradigm influenced by the holistic view of validity leading to the proposal of numerous testing models. This article intends to select the evidence-centered design framework (ECD) currently guiding the testing and evaluation work of the Educational Testing Service (ETS) in the United States as the research object, to analyze its working methods, dissect the role of this framework in validity argumentation, and point out the significance of the ideas and methods contained in ECD for upgrading the national question bank.

1 Development of Validity Theory

Whether a test effectively measures what it intends to measure is the most important indicator for evaluating that test, known as validity, while that “thing,” i.e., a certain “concept or attribute,” is referred to as a construct. Nowadays, the discourse in psychometrics no longer simply describes how valid a test is, whether it is high or low, because such discussions are neither correct nor meaningful without premises or limitations. Validity is more associated with keywords such as “degree,” “evaluation,” and “judgment,” and validity verification has gradually replaced the observation of validity in a static sense. Looking back at the development of validity theory, it has generally gone through three stages: the period of single validity view before the 1950s, the period of classification validity view from the 1950s to the mid-1980s, and the period of holistic validity view from the mid-1980s to the present.

Proponents of the single validity view equate validity with correlation coefficients; the larger the coefficient, the stronger the predictive ability, and thus the better the validity. During the American standardization testing movement, research on educational tests such as GRE and LSAT typically reported the correlation between examinee scores and their first-year final grades to illustrate the effectiveness of the tests. Validity was then presented as an objective concept in the form of coefficients. Since the 1950s, the understanding of validity has entered the “Trinitarian doctrine” phase, defining validity as three types: content validity, criterion-related validity, and construct validity. Among them, criterion-related validity is an integration of the previously proposed predictive and concurrent validity concepts. The term “construct” was first introduced as a criterion for identifying types of validity. The classification validity view has had a profound impact on the subsequent development of validity theory, and the three types of “validity” have been used to this day. In the mid-1980s, the understanding of validity entered the holistic view period. Initially, construct validity dominated the “Trinitarian doctrine,” and later, construct replaced construct validity. Lee J. Cronbach pointed out in the second edition of “Educational Measurement” that “it is not the test itself that is validated for validity but the interpretation of the data formed during the specific testing process.” Since then, the connotation of validity has shifted from merely “the validity of a test” to “the validity of score interpretation.” In the 1985 edition of “Standards for Educational and Psychological Testing,” the definition of validity was revised to “whether the inferences made based on scores are appropriate, meaningful, and practical. 
Validity verification of tests is the process of collecting evidence to support the above inferences.” It is noteworthy that at this time, the “types of validity” were replaced by “types of evidence,” where content evidence and criterion evidence were seen as supplements to “construct-related evidence,” and reliability also became a form of validity evidence. Samuel J. Messick, a representative of the holistic view of validity, proposed the question: “To what extent do empirical evidence and theoretical rationale indicate whether the inferences or actions based on test scores or other assessment methods are sufficient and appropriate?” The comprehensive evaluative judgment of this question is validity. This definition is very close to the 2014 version of the “Standards” explanation of validity — “the degree to which the accumulation of evidence and theory supports the interpretation of scores in specific uses.” Thus, the logic of validity research has evolved into “supporting examinations with as much evidence as possible.”

2 Validity Verification Based on Argumentation

Using as much “evidence” as possible to prove the effectiveness of examinations has become the basis for validity verification, where evidence is validity. Under the influence of this view of validity, the content of validity verification has also changed: validity verification under the single validity view involves the calculation of correlation coefficients; validity verification under the classification validity view is a process of validating multiple standards, both empirically and theoretically; validity verification under the holistic view of validity manifests as a process of argumentation for validity as a unified whole, focusing not only on formal logic and mathematical reasoning but also on repeated questioning and justification of premise acceptability.

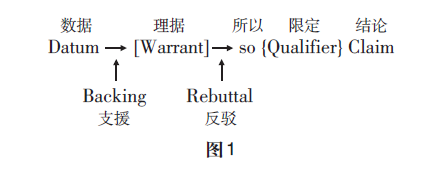

If Messick’s definition of validity in the third edition of “Educational Measurement” was a significant breakthrough in validity theory, then in the fourth edition, Michael T. Kane’s use of “validity verification” rather than “validity” as the title to explain validity theory can be seen as a further development of Messick’s focus on “evaluation,” emphasizing the process of evidence collection. Kane pointed out that validity verification includes two types of arguments: interpretive argument and validity argument. The former uses the “If-Then” rule to provide specific explanations for score interpretations and applications supported by evidence, while the latter evaluates the interpretive argument to confirm that the interpretation or application of scores is valid, first requiring that its interpretive argument is relevant, and that reasoning is reasonable, and that premise assumptions are acceptable. From the perspective of formal logic, as long as the conclusion is drawn according to logical rules, the reasoning is valid; interpretive arguments use this reasoning logic, but the problem is that the establishment of interpretive argument evidence relies not only on formal logic but sometimes also on hypothetical reasoning or informal logic. Therefore, validity verification must not only consider the “reasonable interpretation of scores” but also the “acceptability of score interpretations,” providing justification for those seemingly true premises. Validity verification is no longer a closed step or link but a process of continuously collecting various evidences, “revisiting” between conclusions and evidence in the validity argumentation process. Based on the reality of informal logic existing in validity verification, the psychometric community has begun to seek methods for validity argumentation. The argumentation model proposed by Stephen E. Toulmin, the founder of informal logic, provides a basis for validity argumentation. 
Kane constructed a specific validity argumentation model using Toulmin’s model, attempting to solve the problem of establishing the validity of the reasoning evidence itself. Toulmin believes that before drawing a certain conclusion, sufficient justification for that conclusion must be provided, and when the conclusion is challenged, it must be defended. Figure 1 shows the six basic elements of Toulmin’s model and their interrelationships.

Data (Datum) is the starting point for deriving conclusions (Claim) using this model; the records of examinees’ responses are the data, and the scores are the conclusions. Between the examinees’ responses and the scores, there exists a justification process (Warrant), and the evidence supporting the justification is the backing (Backing). In this process, on one hand, it is necessary to enhance the acceptability of the conclusion through qualifiers (Qualifier), and on the other hand, it is necessary to exclude exceptions that may rebut the conclusion (Rebuttal). Each interpretive argument in validity argumentation undergoes several rounds of justification from data to conclusion, with the conclusion of the previous round becoming the data for the next round of justification.
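The six elements and the chaining of rounds described above can be pictured as a small data structure. The following is only an illustrative sketch: the class name `ToulminStep`, the helper `is_chained`, and the example strings are assumptions made for this illustration and do not come from Toulmin or Kane.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ToulminStep:
    """One round of justification with Toulmin's six elements."""
    datum: str                        # starting point of the inference
    claim: str                        # conclusion drawn from the datum
    warrant: str                      # rule that licenses the step from datum to claim
    backing: str                      # evidence supporting the warrant
    qualifier: Optional[str] = None   # how strongly the claim is asserted
    rebuttals: List[str] = field(default_factory=list)  # exceptions that would defeat the claim


def is_chained(steps: List[ToulminStep]) -> bool:
    """Return True if every claim is reused as the datum of the next round."""
    return all(prev.claim == nxt.datum for prev, nxt in zip(steps, steps[1:]))


# Hypothetical two-round interpretive argument.
steps = [
    ToulminStep(
        datum="record of the examinee's responses",
        claim="observed score",
        warrant="scoring rubric",
        backing="rater training and scoring audits",
    ),
    ToulminStep(
        datum="observed score",
        claim="interpretation of the score",
        warrant="statistical generalization",
        backing="evidence that the sampled tasks are representative",
        qualifier="reported with a standard error",
        rebuttals=["the examinee had seen the items beforehand"],
    ),
]
```

Here `is_chained(steps)` checks only the structural property that the conclusion of one round becomes the data of the next; whether each warrant is acceptable remains a matter of judgment.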

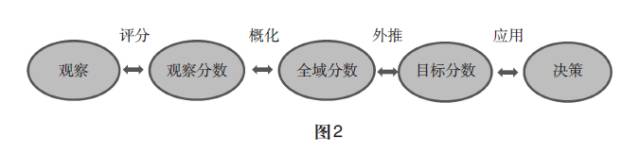

Based on the chain of validity verification arguments depicted by Kane and others, language testing expert Lyle F. Bachman added an inference, namely decisions based on scores. Here, the extended argumentation chain proposed by Bachman (Figure 2) is used to illustrate the specific application of Toulmin’s argumentation model in validity argumentation.

How can it be shown that the conclusions drawn from observations of examinees’ responses, and the uses made of the scores, are justified? The justification (warrant) for inferring universe scores from observed scores is a statistical generalization from the sample mean to its expected value, and the support (backing) for this step includes evidence that the sample is representative. The justification for inferring target scores from universe scores is a regression equation, and its support includes empirical studies of the relationship between test scores and criterion scores. Inferences made when interpreting scores often require explicit qualifiers, such as standard errors and confidence intervals, which express the uncertainty in reasoning from observed scores to universe scores, or the standard error of the correlation coefficient when inferring from test scores to criterion scores. Decisions based on target scores also often gain persuasiveness through qualifiers; for example, the requirements for a test used in employment decisions differ markedly from those for a class placement test or an entrance examination. In some cases, even with qualifiers, the step from data through justification to conclusion cannot be made, and a rebuttal applies: the reasoning is not accepted, and the interpretive argument for the scores cannot be established.
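As an illustration of the qualifiers mentioned above, the sketch below computes a standard error of measurement and a confidence interval around an observed score. It assumes the classical test theory formula SEM = SD × √(1 − reliability) and a normal approximation; the source does not prescribe a particular formula, and the numbers are invented for the example.

```python
import math
from typing import Tuple


def standard_error_of_measurement(sd: float, reliability: float) -> float:
    """Classical test theory SEM: sd * sqrt(1 - reliability)."""
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must lie between 0 and 1")
    return sd * math.sqrt(1.0 - reliability)


def confidence_interval(observed: float, sem: float, z: float = 1.96) -> Tuple[float, float]:
    """Normal-approximation interval around an observed score."""
    return observed - z * sem, observed + z * sem


# Hypothetical test: score SD of 10 and reliability of 0.91.
sem = standard_error_of_measurement(sd=10.0, reliability=0.91)  # approximately 3.0
low, high = confidence_interval(observed=75.0, sem=sem)         # 95% interval
```

Reporting the interval rather than the single score of 75 is one way to make explicit how strongly the inference from observed score to the score being estimated is asserted.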

The connotation of validity is continuously developing, and validating validity through argumentation is an inevitable trend for the future. However, merely having argumentation models and conceptual terminology is not sufficient for designing and implementing tests; examination agencies need clearer and more specific “tools,” i.e., a certain structure or framework to integrate argumentation models and terminology, making the entire process from design to evaluation clearer and more operational. ECD is such a framework choice and ideological method.

3 ECD: Implementation Framework for Validity Argumentation

ECD began as a research and development project established by ETS in 1997 and led by Robert J. Mislevy, Linda S. Steinberg, and Russell G. Almond. Its most direct theoretical basis is Mislevy’s 1994 account of evidence and inference in testing, in which he pointed out that educational assessment of any type is essentially about making inferences about students’ knowledge, skills, and achievements, so that assessment can be seen as a process of “reasoning based on evidence.” In the following years, Mislevy and colleagues published research on the framework and explained it more systematically. ECD has since become one of the major application models in educational assessment in the United States and serves as the platform guiding ETS in developing and implementing specific assessment products. Messick’s construct-centered view of validity directly influenced Mislevy’s development of ECD, and advances in evidentiary reasoning, statistical modeling, and probabilistic reasoning, together with the application of computer technology in psychometrics, made the emergence of ECD both possible and necessary. Under the influence of the holistic view of validity, ECD attempts to integrate multiple pieces of evidence and coordinate the connections among them so that all evidence points toward the ultimate goal of validity verification. At the micro level, ECD comprises a conceptual framework and four delivery phases: the conceptual framework is a combination of six models, and the delivery phases describe how each model is dynamically embedded in the different stages of test operation. At the macro level, ECD also implies a hierarchy of five layers, as well as a testing cycle that integrates these steps with various kinds of information.

3.1 Operational Mode

3.1.1 Conceptual Assessment Framework

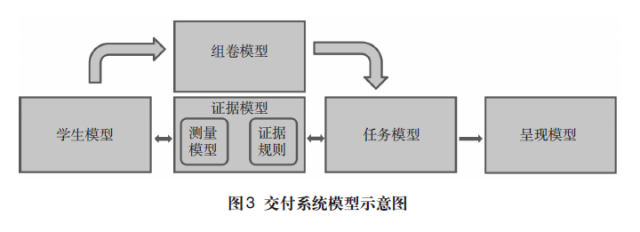

The Conceptual Assessment Framework (CAF) reflects the blueprint for designing a test, specifically including six models: Student Model, Evidence Model, Task Model, Assembly Model, Presentation Model, and Delivery System Model. Each model in CAF revolves around the questions “What to measure” and “How to measure what is intended to be measured.”

The Student Model is a collection of unobservable variables describing the examinee, whose values are adjusted probabilistically in real time according to the examinee’s responses. During testing, examinees respond differently to different test items, and the computer selects the next task based on the latest response. The Student Model thus holds the current information about the examinee inferred from those responses, represented as probability distributions.

The Evidence Model consists of evidence rules and measurement models. Evidence rules specify how to infer the examinee’s level from their current responses (observable variables), which belong to response scoring data. Measurement models provide information about the relationships between the variables of the Student Model and observable variables. Classical measurement theory, item response theory, cognitive diagnostic models, etc., all belong to measurement models, guiding the process of summative scoring, which is the accumulation and integration of evidence throughout the testing task.
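The two parts of the Evidence Model can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the ETS implementation: the evidence rule is a simple answer-key match, the measurement model is the one-parameter (Rasch) item response model, and proficiency is restricted to three hypothetical levels. All function names are invented for the example.

```python
import math
from typing import Dict


def evidence_rule(response: str, key: str) -> int:
    """Evidence rule: turn a raw response into an observable variable (1 = correct)."""
    return int(response.strip().lower() == key.strip().lower())


def p_correct(theta: float, difficulty: float) -> float:
    """Measurement model (Rasch): probability of a correct response at proficiency theta."""
    return 1.0 / (1.0 + math.exp(-(theta - difficulty)))


def update(prior: Dict[float, float], observable: int, difficulty: float) -> Dict[float, float]:
    """Bayes update of a discrete probability distribution over proficiency."""
    unnormalized = {
        theta: weight * (p_correct(theta, difficulty) if observable else 1.0 - p_correct(theta, difficulty))
        for theta, weight in prior.items()
    }
    total = sum(unnormalized.values())
    return {theta: value / total for theta, value in unnormalized.items()}


# Hypothetical Student Model variable with three proficiency levels, uniform prior.
prior = {-1.0: 1 / 3, 0.0: 1 / 3, 1.0: 1 / 3}
observable = evidence_rule(" B ", "b")           # the response matches the key
posterior = update(prior, observable, difficulty=0.0)
```

After a correct response the posterior places more weight on the higher proficiency levels, which is the sense in which the measurement model links observable variables to Student Model variables.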

The Task Model addresses how to provide evidence to the Evidence Model. It determines what testing tasks the examinee will see and what results will be produced after responses. The Task Model includes various task model variables, reflecting the attributes of each task and how these attributes are related to the testing tasks seen by examinees and the feedback received after responses. The Task Model can assist item writers in drafting test item content and help measurement experts allocate the number of items. Different task models generate different task combinations, presenting different test paper content. This modular approach to tasks systematically controls the evidence materials and statistical parameters required to provide a set of test papers, with the advantage that these task combinations can be directly evaluated and provide a pathway for open-ended task combinations.
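One way to picture task model variables is as named attributes with a set of allowed values, from which concrete task specifications are enumerated. The sketch below is purely illustrative; the class `TaskModel`, the variable names, and the values are hypothetical and not taken from the ECD literature.

```python
from dataclasses import dataclass
from itertools import product
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class TaskModel:
    """A family of tasks described by task model variables and their allowed values."""
    name: str
    variables: Dict[str, Tuple[str, ...]]

    def task_specs(self) -> List[Dict[str, str]]:
        """Enumerate every combination of variable values as a task specification."""
        names = list(self.variables)
        value_sets = (self.variables[n] for n in names)
        return [dict(zip(names, combo)) for combo in product(*value_sets)]


# Hypothetical reading task model with two task model variables.
reading = TaskModel(
    name="reading_comprehension",
    variables={
        "text_type": ("narrative", "expository"),
        "response_format": ("multiple_choice", "short_answer", "essay"),
    },
)
specs = reading.task_specs()
```

An item writer could draft one item per specification, and a measurement expert could decide how many items of each specification a test paper should contain.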

The Assembly Model links the Student Model, Evidence Model, and Task Model together. This model aims to measure the variables of the Student Model accurately while coordinating the interrelationships between different tasks, appropriately reflecting the depth and breadth of the assessment content. This model can assist the computer in answering the question of “to what extent does the examinee need to be measured?”

The Presentation Model specifies how the test paper is presented. The same test content can be presented in a paper-and-pencil format or through computer networks; the difference lies in that the former only requires sending a command to the printer, while the latter requires writing code to implement it. The Delivery System Model integrates the student, evidence, task, assembly, and presentation models, standardizing the common content of each model, such as platform, security, and time control, assisting different types of models in pairing to achieve different testing purposes.

According to Mislevy and others, these six models serve as a bridge connecting the validity argumentation of testing with the operational aspects. Through these models, the knowledge levels and skills assessed by a test, measurement conditions, and various evidences can be visualized. Figure 3 is a schematic diagram of the Delivery System Model, visually reflecting the relationships between the models.

3.1.2 Delivery Phases

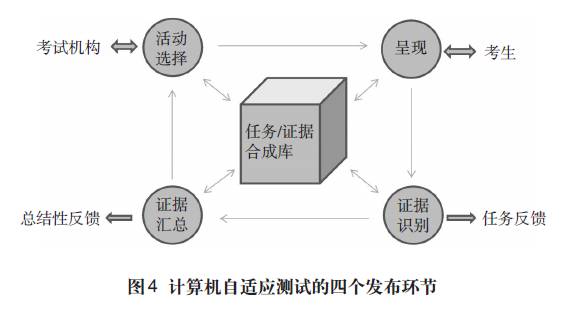

If the models above form the static framework of test design, the delivery phases (the four-process architecture for assessment delivery) are the dynamic processes that link that framework together. The operation of a computer-adaptive test relies on four phases: Presentation, Evidence Identification (also known as Response Processing), Evidence Accumulation (also known as Summary Scoring), and Activity Selection. Each phase is linked to the Task/Evidence Composite Library, from which it reads data and to which it writes data. The Activity Selection phase selects test tasks from the task library and sends the instruction to present each task to the Presentation phase, which displays the test item to the examinee; these tasks are designed according to the Task Model. After the Presentation phase collects the examinee’s response, it passes the result to the Evidence Identification phase, which processes the response by the methods specified in the Evidence Model and produces the values of the observable variables defined by that model’s evaluation procedures. These values are passed to the Evidence Accumulation phase, which uses them to update the probability distributions of the Student Model variables, yielding summary scoring feedback that is stored immediately for later score reporting. Evidence Accumulation also provides information to the Activity Selection phase, helping it decide which task to select next. Figure 4 shows the interrelationships among these four phases.
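The cycle through the four phases can be sketched as a loop. This is a simplified illustration under several assumptions that are not in the source: a Rasch measurement model, three discrete proficiency levels, selection of the unused item whose difficulty is closest to the current posterior mean, and a simulated examinee. All names (`Item`, `run_test`, and so on) are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Item:
    item_id: str
    difficulty: float
    key: str


Belief = Dict[float, float]  # proficiency level -> probability


def activity_selection(pool: List[Item], used: List[str], belief: Belief) -> Optional[Item]:
    """Pick the unused item whose difficulty is closest to the posterior mean."""
    remaining = [item for item in pool if item.item_id not in used]
    if not remaining:
        return None
    mean = sum(theta * p for theta, p in belief.items())
    return min(remaining, key=lambda item: abs(item.difficulty - mean))


def presentation(item: Item, examinee: Callable[[Item], str]) -> str:
    """Show the item and collect the raw response."""
    return examinee(item)


def evidence_identification(item: Item, response: str) -> int:
    """Score the response into an observable variable (1 = correct)."""
    return int(response == item.key)


def evidence_accumulation(belief: Belief, item: Item, observable: int) -> Belief:
    """Update the distribution over proficiency with a Rasch likelihood."""
    def p(theta: float) -> float:
        return 1.0 / (1.0 + math.exp(-(theta - item.difficulty)))
    unnorm = {t: w * (p(t) if observable else 1.0 - p(t)) for t, w in belief.items()}
    total = sum(unnorm.values())
    return {t: v / total for t, v in unnorm.items()}


def run_test(pool: List[Item], examinee: Callable[[Item], str],
             belief: Belief, max_items: int) -> Tuple[Belief, List[str]]:
    """Cycle through the four phases until max_items tasks have been delivered."""
    used: List[str] = []
    for _ in range(max_items):
        item = activity_selection(pool, used, belief)
        if item is None:
            break
        response = presentation(item, examinee)
        observable = evidence_identification(item, response)
        belief = evidence_accumulation(belief, item, observable)
        used.append(item.item_id)
    return belief, used


pool = [Item("easy", -1.0, "a"), Item("medium", 0.0, "b"), Item("hard", 1.0, "c")]
# Simulated examinee: answers correctly only when the item is not harder than 0.
examinee = lambda item: item.key if item.difficulty <= 0.0 else "?"
final_belief, order = run_test(pool, examinee, {-1.0: 1 / 3, 0.0: 1 / 3, 1.0: 1 / 3}, max_items=2)
```

With a uniform starting belief the loop first delivers the medium item; the correct response raises the posterior mean, so the hard item is delivered next. Each function stands in for one phase, which keeps the selection rule or the measurement model replaceable without touching the others.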

3.1.3 ECD Layers

The ECD layers were proposed in 2005 by Mislevy and others on the basis of ECD’s Conceptual Assessment Framework and the four delivery phases, as an enhancement and expansion of the ECD framework. According to Table 1, CAF and the delivery phases are just two of the ECD layers: content analysis and content models are added as two layers before CAF, and a test implementation layer is added between CAF and test delivery. Judging from the roles and core contents shown in Table 1, content analysis and content models correspond to construct validity and validity argumentation theory and are thereby indirectly linked to score interpretation. Content analysis determines what is to be assessed and is carried out by experts and scholars from the relevant professional fields, while content models are primarily the responsibility of test designers; the two groups work together to define the content and structure of the test. Test implementation involves actually producing each part of the test described in CAF, including item writers drafting examination tasks, fitting measurement models, establishing scoring criteria, and programming simulations.

3.2 Design Features

3.2.1 Evidence-Centered, All Designs Serve Validity Argumentation

Under the influence of the notion that evidence equals validity, the “calculation” of test validity has shifted to the collection, organization, interpretation, and argumentation of evidence related to the test, and the design of ECD revolves entirely around this theme. The way ECD handles and organizes evidence is not entirely separate but mutually supportive and causative, providing a complete evidence chain for validity argumentation. ECD is a problem-oriented design, constantly seeking to answer the following questions: What kind of score report should ultimately be formed? How should scores explain and interpret this report? What are the reasons for explanation and interpretation? What evidence exists? How can this evidence be collected and processed? How can this evidence support the conclusions? Is the evidence reliable? In the continuous process of answering these questions, the working model of ECD is formed.

The 2014 version of the “Standards” lists five types of validity evidence: test content, response processes, the internal structure of the test, relationships with other variables, and the consequences of testing. ECD connects these five types of evidence and provides an embedded model for each of them.

Test content relates to the evidence about the measurement object, i.e., the construct, primarily reflected in the Task Model. Examination agencies will place various types of test items into the question bank according to specific needs, combining items in terms of quantity and type to present the test paper content to examinees. Whether the test content can adequately reflect a certain type of professional competence is judged by experts, correlating with the construct to a certain extent, but it is still insufficient to establish the evidence chain for score interpretation and validity argumentation.

The evidence model of ECD can provide evidence generated during response processes and evidence related to the internal structure of the test paper. The examinee’s response process reflects the matching relationship between the examinee’s ability performance and the construct. Specifically, the evidence collected during the examination is not whether the examinee can answer a particular question, but whether they can indeed reflect the level of ability that the question intends to assess. The response phase can collect evidence including writing habits, response times, and the allocation of visual attention, which current computer technologies can easily record. The response process can also corroborate the quality of scoring by recording the scoring habits, judging whether the scorers adhere to the scoring standards. Evidence related to the internal structure of the test paper reflects the extent to which the combinations of various test items and parts of the test paper align with the basis for score interpretation (i.e., the construct). When the purpose of the test is singular and the construct is clearly defined, the homogeneity of the items is relatively high, and vice versa. Evidence obtained from the relationships between the test and other variables reflects the degree to which these relationships align with the constructs that explain scores, primarily including convergent and discriminant evidence, correlations with criteria, and validity generalization. Evidence regarding validity and social effects primarily reflects whether the social consequences produced by the examination align with expectations. The response phase and summative scoring phase of ECD continuously collect immediate and conclusive evidence during the actual operation of the test, and the process of handling various data is fully recorded, allowing for on-demand analysis with external variables when necessary.

3.2.2 Based on Computer Technology and Thinking, Modularizing the Test Design Process

Expert systems, software design, and legal argumentation are the main technical and theoretical foundations of the ECD framework. ECD is a design framework based on computer-assisted testing technology, and understanding all aspects of the examination requires considering the application of computers. Traditionally, examination work consists of three aspects: item writing, examination administration, and evaluation. In the item writing phase, item writers create items, assemble tests, and manage them; in the examination administration phase, exam staff store, transport, monitor, collect, score, and release results; in the evaluation phase, evaluators analyze and assess the quality of the test papers. This division is based on the nature of the work. ECD offers another way of thinking about test design, starting with validity verification as the core work, and then breaking down tasks into six modules around this goal, which are based on computer technology, allowing the item writing, examination administration, and evaluation departments to work using different modules and to obtain data and resources from different modules. As the design tasks of the examination have been refined into individual computer instructions or programs, when certain aspects of the examination are revised or updated, only the specific content of the corresponding module needs to be modified, without affecting the operation of other modules, facilitating the convenient and efficient incorporation of new data and materials into the revised examination. The validity verification process of ECD unfolds based on different work modules, characterized by openness and continuity.

4 ECD and the Upgrade of the National Question Bank for Educational Examinations in China

When the restoration of the college entrance examination reached its 30-year mark, experts summarized the innovations in examination technology over that period, identifying four innovations in examination methods and technologies: the rise of online registration, the implementation of online scoring, the implementation of online examinations, and the application of electronic examination room monitoring. Now, nearly 40 years after the restoration of the college entrance examination, the development of examination technology in China has still not moved beyond these four areas. Of the four, online registration, online scoring, and electronic examination room monitoring all belong to examination administration technology; only online examinations relate to item writing and evaluation. Although many examinations in China have adopted online testing technology and accumulated rich experience, high-stakes educational examinations represented by the college entrance examination have, almost without exception, not adopted it. The national educational examination question bank has now begun to take shape, and major national educational examinations such as the college entrance examination are supported by question bank technology. If computer-assisted examinations, online examinations, and the question bank as technical support can truly be applied to these examinations, the quality of the examinations will improve substantially.

The ECD test design framework used by ETS is, strictly speaking, not the construction of the question bank itself, but a test design framework that is above the question bank in structure and governs the paradigm and ideas of test design. It incorporates the question bank, allowing the question bank to function within a test network or test cycle. The ECD framework and operational mode can provide the following three insights for the construction of the national question bank in China.

First, establish a validity mindset, incorporating validity argumentation into the design considerations for upgrading the question bank. In modern educational and psychological measurement, validity is a unified concept with three features: it requires multiple forms of evidence to support the inferences made about examinees’ psychological constructs on the basis of examination results; it concerns the interpretation of examination results, not the examination itself; and it includes an evaluation of the social consequences produced by the use of examination results. Validity is a fundamental requirement of educational and psychological measurement and one of the basic elements that make large-scale educational examinations scientific. Validity argumentation both verifies and demonstrates examination quality. In today’s competitive environment, whether scores and their interpretations hold up depends on the validity of the examination. The question bank can accomplish many things, but its core function is to assist in item writing, and the quality of item writing should be measured by validity standards. Therefore, the design of the question bank should not only ensure the security of stored test items but also help ensure that test scores are interpretable and acceptable.

Second, establish examinee-centered thinking; the design of the question bank should shift from a one-sided perspective to a two-sided perspective. The users of the question bank are examination agencies, but those it serves are by no means limited to examination agencies. As a means, the question bank is an excellent technology-based method for item writing; as to its purpose, the items produced with its assistance are still addressed to examinees, their parents, and society as a whole. This is not merely a methodological issue. If the question bank is built only from the one-sided perspective of examination agencies, it tends to adopt a value judgment that prioritizes efficiency and security, and, especially under China’s educational system, the issue of examination validity is easily overlooked. The greatest concern with this one-sided perspective is that it cannot withstand societal scrutiny: once doubts arise about the effectiveness of a test paper, responding to them becomes the most difficult task. Moreover, it becomes difficult to connect the entire evidence chain of validity argumentation, let alone to interpret the meaning of scores. Establishing a two-sided perspective that includes both test administrators and examinees is critical for the upgrade of the question bank. Ultimately, every examination must report scores or results to examinees, and this result, often overlooked by examination agencies, is precisely what examinees value most. If examination agencies do not adopt an examinee-centered mindset and view examinations from the examinees’ perspective, examinations may be reduced to mere administrative tasks rather than true psychological measurement and educational evaluation. A two-sided perspective requires examination agencies to clarify, from the very beginning of test design, how they will explain scores to examinees and how those scores will be used.

Third, establish modular thinking, allowing the question-setting process to be modularized within the question bank. A question bank lacking scientific statistical and measurement analysis capabilities is not a valuable question bank. ECD provides a pathway for constructing question banks using modular thinking. As previously analyzed, the various models contained within the question bank break down the various aspects of test design. On one hand, this maximizes the application of computer technology in the field of psychometrics; on the other hand, it translates the entire thought process of test design into concrete operational steps, integrating various validation processes for test validity into computer models. Establishing modular thinking is particularly significant for addressing the challenges posed by technological advancements to examination agencies.
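
As a rough illustration of what modularizing the question-setting process could look like in software, the following minimal Python sketch separates a task module, an evidence module, and a student module, and records the link from item to response to score to construct as an “evidence chain”. All class names, function names, item identifiers, and the proportion-correct summary are hypothetical and chosen only for illustration; they are not taken from ETS systems or from the national question bank.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Task:
    """Task module (hypothetical): a stored item and the construct it targets."""
    item_id: str
    construct: str
    key: str  # the keyed (correct) option


def score_response(task: Task, response: str) -> int:
    """Evidence module (hypothetical rule): 1 if the response matches the key, else 0."""
    return 1 if response == task.key else 0


@dataclass
class StudentRecord:
    """Student module (hypothetical): scored observations grouped by construct."""
    observations: Dict[str, List[int]] = field(default_factory=dict)

    def add(self, construct: str, score: int) -> None:
        self.observations.setdefault(construct, []).append(score)

    def proportion_correct(self, construct: str) -> float:
        scores = self.observations[construct]
        return sum(scores) / len(scores)


def build_evidence_chain(
    tasks: List[Task], responses: Dict[str, str]
) -> Tuple[StudentRecord, List[Tuple[str, str, int, str]]]:
    """Link item -> response -> score -> construct and keep the full trail."""
    record = StudentRecord()
    trail = []
    for task in tasks:
        response = responses[task.item_id]
        score = score_response(task, response)
        record.add(task.construct, score)
        trail.append((task.item_id, response, score, task.construct))
    return record, trail


# Illustrative data only: three made-up items and one examinee's responses.
tasks = [
    Task("R1", "reading", "A"),
    Task("R2", "reading", "C"),
    Task("I1", "inference", "B"),
]
responses = {"R1": "A", "R2": "B", "I1": "B"}

record, trail = build_evidence_chain(tasks, responses)
print(record.proportion_correct("reading"))
for link in trail:
    print(link)
```

The proportion-correct summary here is only a stand-in; an operational system would presumably replace it with a proper measurement model. The point of the sketch is the separation of modules and the retained trail, which keeps every reported result traceable back to the items and constructs that produced it.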

5 Conclusion

If high-stakes educational examinations, led by the college entrance examination, still use “closed” methods to avoid risks, with item setters estimating item difficulty independently of one another, raw scores used to set cut-off lines, experts invited to evaluation meetings to confirm content validity, and public opinion guidance used to answer societal doubts about examination fairness, then our examinations can hardly be called scientific, and our examination institutions cannot claim to be professional examination agencies. Western educational measurement has come a long way, from the initial correlation coefficients to various types of validity, followed by the emergence of constructs and the establishment of a holistic view of validity. People no longer understand an examination as merely a score but as the entire examination process. What people need to understand is the meaning behind the scores and how valid and reliable that meaning is; the examination process is a process of accumulating evidence. The ECD framework is designed around the collection of evidence. For this reason, the ECD framework can serve as a reference in the construction of the national educational examination question bank in China: it expresses a test design philosophy that emphasizes validity and prioritizes evidence, starting from constructs, from examinees, and from purposes, and centering everything on the interpretive power of scores. These are precisely the concepts that need to be established in the current construction of the national question bank.