Has anyone else wondered about this?

Large models are so appealing: what if we could bring them from the cloud down to the edge, so that every phone could carry a large model of its own? Wouldn’t that be even better?

We haven’t made that wish come true yet, not because we don’t want to, but because we can’t.

Because edge chips are architected differently, deploying on the edge often forces developers to modify a model’s network structure just to make it fit. After such modifications, performance is hard to guarantee, to say nothing of the long-standing issues of power consumption and cost.

Now, however, a platform has emerged that claims to run open-source models directly, with performance and power consumption comparable to the GPU-based edge chips used in autonomous driving.

It’s called AX650N, from Axiom Intelligence, and it supports the Transformer architecture exceptionally well.

The Transformer needs no introduction; it is the foundational architecture behind popular large models like ChatGPT and Stable Diffusion.

So how effective is it? Let’s go through the parameters one by one.

Deploying the Original Swin Transformer on the Edge in 5 Minutes

AX650N is the third-generation edge chip released by AI chip company Axiom Intelligence.

It combines a CPU and an NPU: the CPU is an octa-core Cortex-A55, and the NPU uses in-house mixed-precision technology to deliver 43.2 TOPS at INT4 or 10.8 TOPS at INT8.

AX650N is mainly used for edge visual perception.

In this field, the industry currently builds applications mainly on CNNs.

The Swin Transformer, by contrast, despite its excellent accuracy and performance, has seen little large-scale deployment and still runs mostly on cloud servers.

According to Axiom Intelligence, this is because GPUs are better suited to computing the MHA structure (the Multi-Head Attention mechanism in Transformers).
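To make the MHA point concrete, here is a minimal pure-Python sketch of standard multi-head (scaled dot-product) attention. The function names, toy sizes, and random weights are our own illustration, not Axiom Intelligence code; Swin Transformer applies this same computation inside local windows of tokens. Note the Q·Kᵀ step: unlike a convolution, which multiplies activations by fixed, pre-known weights, it multiplies two activations computed at runtime and produces a score matrix that grows with the square of the token count, followed by a softmax. Our reading, not the company’s stated explanation, is that this pattern is why accelerators tuned for convolutions handle MHA less efficiently.

```python
import math
import random


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix (lists of lists)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax_rows(m):
    """Numerically stable softmax applied to each row independently."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def multi_head_attention(x, w_q, w_k, w_v, num_heads):
    """Scaled dot-product attention split across `num_heads` heads.

    x is (n_tokens x d_model); w_q, w_k, w_v are (d_model x d_model).
    The final output projection is omitted to keep the sketch short.
    """
    d_model = len(x[0])
    d_head = d_model // num_heads
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    heads = []
    for h in range(num_heads):
        cols = slice(h * d_head, (h + 1) * d_head)
        q_h = [row[cols] for row in q]
        k_h = [row[cols] for row in k]
        v_h = [row[cols] for row in v]
        # Q @ K^T multiplies two runtime activations (no fixed weights involved)
        # and yields an (n_tokens x n_tokens) score matrix.
        scores = matmul(q_h, transpose(k_h))
        scaled = [[s / math.sqrt(d_head) for s in row] for row in scores]
        heads.append(matmul(softmax_rows(scaled), v_h))
    # Concatenate the per-head outputs along the feature dimension.
    return [[val for head in heads for val in head[i]] for i in range(len(x))]


def random_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
n_tokens, d_model, num_heads = 4, 8, 2
x = random_matrix(n_tokens, d_model)
out = multi_head_attention(x, random_matrix(d_model, d_model),
                           random_matrix(d_model, d_model),
                           random_matrix(d_model, d_model), num_heads)
print(len(out), len(out[0]))  # 4 8
```

Doubling the token count quadruples the size of each score matrix, which is one reason Swin restricts attention to windows in the first place.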

Because of architectural limitations, most current edge AI chips are optimized for CNN structures and offer little optimization for MHA. As a result, the Swin Transformer’s network structure has to be modified before it can be deployed on the edge at all.

Modifying the network structure brings its own problems, starting with accuracy degradation. When accuracy drops, the model has to be retrained, which can take weeks or even months.

Liu Jianwei, co-founder and vice president of Axiom Intelligence, explained:

With AX650N, deploying the original Swin Transformer on the edge takes only 5 minutes from receiving the test board to reproducing the demo, and running your own model in your own environment takes only 1 hour.

And it does not merely run: it runs fast, with high performance and low power consumption.

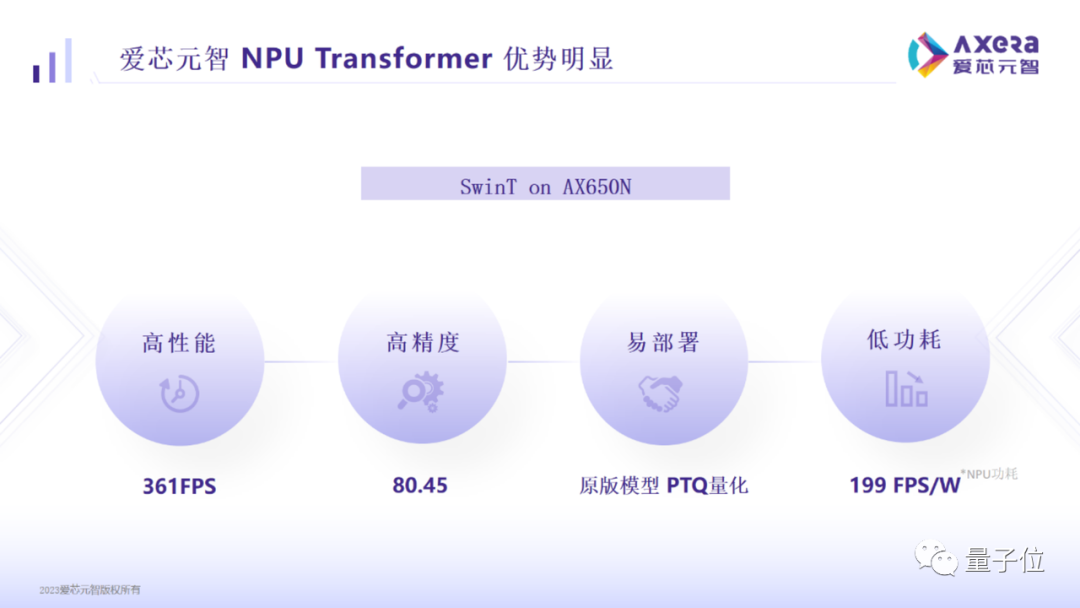

Specifically, Swin Transformer deployed on AX650N reaches 361 FPS.

What does this mean?

A well-known chipmaker’s high-end domain-controller SoC for autonomous driving reaches under 400 FPS, so AX650N is roughly on par with it.

Beyond speed, AX650N also preserves accuracy: it achieves 80.45%, which is above the typical market level.

Power consumption is also very low, which makes it highly energy efficient: it runs at 199 FPS/W, several times the efficiency of the GPU-based high-end domain-controller SoC mentioned above. That chip has an overall TDP of about 15 to 60 W, and its actual incremental power consumption exceeds 20 W, which works out to about 20 FPS/W.
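The efficiency figures can be checked with simple arithmetic. The sketch below uses only the numbers quoted above. Treating “under 400” FPS as 400 and “exceeds 20 W” as 20 W gives the GPU-based SoC its best case, so the resulting ratio is a lower bound. The article does not state the measurement conditions (batch size, whether total or incremental power is counted for each chip), so treat the outputs as rough estimates.

```python
def fps_per_watt(fps: float, watts: float) -> float:
    """Energy efficiency: frames processed per second per watt drawn."""
    return fps / watts


# Figures quoted in the article.
AX650N_FPS = 361
AX650N_FPS_PER_W = 199
GPU_SOC_FPS = 400           # upper bound: the article says "under 400"
GPU_SOC_INCREMENTAL_W = 20  # lower bound: the article says "exceeds 20 W"

implied_ax650n_watts = AX650N_FPS / AX650N_FPS_PER_W    # about 1.8 W
latency_ms = 1000 / AX650N_FPS                          # about 2.8 ms per frame
gpu_soc_efficiency = fps_per_watt(GPU_SOC_FPS, GPU_SOC_INCREMENTAL_W)  # at most 20 FPS/W
ratio = AX650N_FPS_PER_W / gpu_soc_efficiency           # just under 10x

print(f"AX650N implied power: {implied_ax650n_watts:.2f} W")
print(f"AX650N time per frame: {latency_ms:.2f} ms")
print(f"GPU SoC efficiency (best case): {gpu_soc_efficiency:.1f} FPS/W")
print(f"Efficiency ratio (lower bound): {ratio:.1f}x")
```

By these numbers, 199 FPS/W against at most 20 FPS/W is roughly a tenfold gap, and 361 FPS at 199 FPS/W implies only about 1.8 W attributed to this workload.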

AX650N also supports low-bit mixed precision. For models with very large parameter counts, INT4 can be used to reduce memory and bandwidth usage, lowering the cost of deploying large models on the edge.
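Why INT4 saves memory and bandwidth comes down to arithmetic: weight storage scales linearly with bits per weight, so INT4 needs half the bytes of INT8 and one eighth of FP32, and every byte not stored is also a byte not moved over the memory bus. The sketch below is our own illustration: the 1-billion-parameter model is hypothetical, and the packing layout (two signed 4-bit values per byte, low nibble first) is a generic scheme, not AX650N’s actual memory format.

```python
def weight_bytes(num_params, bits):
    """Bytes needed to store `num_params` weights at `bits` bits each, rounded up."""
    return (num_params * bits + 7) // 8


def pack_int4(values):
    """Pack signed 4-bit integers (-8..7) two per byte, low nibble first."""
    if any(not -8 <= v <= 7 for v in values):
        raise ValueError("INT4 values must lie in [-8, 7]")
    nibbles = [v & 0x0F for v in values]  # two's-complement 4-bit encoding
    if len(nibbles) % 2:
        nibbles.append(0)                 # pad odd-length input with a zero nibble
    return bytes(lo | (hi << 4) for lo, hi in zip(nibbles[0::2], nibbles[1::2]))


def unpack_int4(data, count):
    """Inverse of pack_int4: recover the first `count` signed 4-bit integers."""
    out = []
    for byte in data:
        for nibble in (byte & 0x0F, byte >> 4):
            out.append(nibble - 16 if nibble >= 8 else nibble)
    return out[:count]


# Weight footprint of a hypothetical 1-billion-parameter model (weights only).
PARAMS = 1_000_000_000
for name, bits in [("FP32", 32), ("FP16", 16), ("INT8", 8), ("INT4", 4)]:
    print(f"{name}: {weight_bytes(PARAMS, bits) / 2**30:.2f} GiB")

packed = pack_int4([-8, -1, 0, 7, 3])
print(len(packed), unpack_int4(packed, 5))  # 3 [-8, -1, 0, 7, 3]
```

Five INT4 values fit in three bytes, where INT8 would need five and FP32 twenty.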

On this basis, Axiom Intelligence claims that AX650N is currently the best platform for deploying Transformer architectures on the edge.

Beyond the Swin Transformer, AX650N also supports Transformer models such as ViT/DeiT and DETR, and Meta’s recently released vision model DINOv2 runs on it at over 30 FPS.

As a result, downstream tasks such as detection, classification, and segmentation become much easier with AX650N.

Axiom Intelligence reportedly plans to further optimize AX650N for Transformer structures and to explore multimodal Transformer models.

P.S. Axiom Intelligence has published detailed deployment guides for Swin Transformer, DETR, and EfficientViT. We won’t repeat them here; see the links at the end of the article.

△Swin Transformer performance test: over 100 FPS at 3.6 TOPS

Edge and On-device Deployment of Large Models is the Trend

Back to large models: have you ever considered what their rapid development will bring us?

Dr. Lu Qi, CEO of Qi Ji Chuang Tan, mentioned in a recent speech:

When he graduated from CMU, a map cost 3 dollars; today we can find one online in 300 milliseconds, and the cost of accessing information has fallen almost to zero.

Axiom Intelligence says large models will, to some extent, repeat this story: we can imagine a future in which any phone or other device gives us an AI assistant, something like a personal doctor, lawyer, or chef, that knows everything and offers consultations and services at very low cost.

Likewise, if smart speakers could run large models locally, their interactions could become genuinely intelligent rather than mere keyword extraction.

To make this happen, large-model deployment cannot stay confined to the cloud.

△Image source: Axiom Intelligence official website

“In terms of volume, demand at the edge and on devices will certainly exceed demand in the cloud, because there are more edge and end devices.”

As for today’s topic, Transformer deployment on the edge: “any business that uses cameras will be interested in it.”

Axiom Intelligence explains that one of the biggest advantages of deploying Transformers on the edge, compared with CNNs, is that it addresses the high cost of bringing AI to long-tail scenarios.

For example, when a CNN monitors litter in rivers and a new type of litter appears, the system won’t recognize it unless new data is collected and the model retrained, which can take months.

By contrast, Transformer-based large vision models trained with unsupervised learning can recognize new instances directly (zero-shot or one-shot capability), saving considerable time and training cost.
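The river-litter example can be illustrated with a nearest-prototype classifier: a frozen backbone turns each image into an embedding, and adding a new litter category means storing one reference embedding instead of retraining. This is a minimal sketch under our own assumptions: the embeddings are hand-written toy vectors standing in for backbone features (for instance from a model such as DINOv2), and `PrototypeClassifier` and its 0.8 similarity threshold are hypothetical, not part of any Axiom Intelligence tooling. Nearest-prototype matching is only one simple route to one-shot behavior; zero-shot recognition usually relies on other mechanisms, such as matching against text descriptions.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


class PrototypeClassifier:
    """One-shot recognition by nearest prototype in a frozen embedding space.

    Adding a class stores one reference embedding; no weights are retrained.
    """

    def __init__(self, threshold=0.8):
        self.prototypes = {}
        self.threshold = threshold  # below this similarity, report "unknown"

    def add_class(self, label, embedding):
        self.prototypes[label] = embedding

    def classify(self, embedding):
        best_label, best_score = "unknown", self.threshold
        for label, proto in self.prototypes.items():
            score = cosine(embedding, proto)
            if score >= best_score:
                best_label, best_score = label, score
        return best_label


clf = PrototypeClassifier(threshold=0.8)
clf.add_class("plastic bottle", [0.9, 0.1, 0.0])
clf.add_class("foam box", [0.1, 0.9, 0.1])
print(clf.classify([0.85, 0.2, 0.05]))  # plastic bottle
print(clf.classify([0.05, 0.1, 0.9]))   # unknown
# A new litter type appears: register a single example instead of retraining.
clf.add_class("fishing net", [0.0, 0.2, 0.95])
print(clf.classify([0.05, 0.1, 0.9]))   # fishing net
```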

However, developing a platform that allows Transformer models to run efficiently and easily on the edge is not without challenges.

For example:

On the hardware side, the architecture must be targeted correctly from the outset: optimized specifically for Transformers, designed to reduce the bandwidth consumed by models with large parameter counts, and able to load them smoothly.

On the software side, inference must use quantized rather than floating-point computation, and getting quantization right takes considerable experience.
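To illustrate what quantized rather than floating-point inference means, here is a minimal sketch of symmetric per-tensor INT8 quantization, one common scheme among several (per-channel scales, asymmetric zero points, and mixed INT4/INT8 are others). It is a generic illustration, not Axiom Intelligence’s toolchain, and the function names are our own. The experience the article mentions goes into the choices this sketch glosses over, such as calibrating scales on representative data and deciding which layers stay at higher precision.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: q = clamp(round(x / scale), -127, 127)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map integer codes back to approximate real values."""
    return [qi * scale for qi in q]


def int8_dot(qa, qb, scale_a, scale_b):
    """Integer multiply-accumulate, rescaled once at the end (as an integer NPU would)."""
    acc = sum(a * b for a, b in zip(qa, qb))  # exact integer accumulation
    return acc * scale_a * scale_b


weights = [0.42, -1.27, 0.05, 0.9]
acts = [1.0, 0.5, -2.0, 0.25]
qw, sw = quantize_int8(weights)
qa, sa = quantize_int8(acts)
exact = sum(w * a for w, a in zip(weights, acts))
approx = int8_dot(qw, qa, sw, sa)
print(f"float: {exact:.4f}  int8: {approx:.4f}")
```

All the multiply-accumulate work happens on small integers; only the final result is rescaled, which is what lets integer hardware skip floating-point arithmetic in the inner loop.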

……

Judging from current results, AX650N still has many iterations ahead: improving the hardware’s ability to read discrete data and making sure the associated computation keeps pace with the faster reads, as well as hardware-level work on network sparsity, MoE (Mixture of Experts), and low-bit optimization.
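MoE is mentioned only in passing, so for readers unfamiliar with it, here is a minimal sketch of top-k expert routing, its basic mechanism: a gate scores every expert, but only the k best-scoring experts actually run, and their outputs are mixed by renormalized gate weights. This is a generic textbook illustration with toy experts and hypothetical gate weights, not a description of how AX650N would support MoE in hardware.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, k=2):
    """Route input `x` to the top-k experts and mix their outputs.

    gate_weights: one weight vector per expert (gate logit = dot product with x).
    experts: callables mapping a vector to a vector of the same length.
    Only k experts run, so compute per input stays flat as experts are added.
    """
    logits = [sum(w * xi for w, xi in zip(wv, x)) for wv in gate_weights]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])  # renormalize over the chosen experts
    out = [0.0] * len(x)
    for g, i in zip(gates, top):
        y = experts[i](x)
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top


experts = [
    lambda v: [2 * a for a in v],  # expert 0: doubles its input
    lambda v: [-a for a in v],     # expert 1: negates its input
    lambda v: [a + 1 for a in v],  # expert 2: shifts its input
]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
out, chosen = moe_forward([3.0, 1.0], gate_weights, experts, k=2)
print(chosen)  # [0, 1]
```

Here expert 2 never executes for this input, which is the property that makes MoE attractive for large models: parameter count grows with the number of experts while per-input compute does not.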

About Axiom Intelligence

Founded in May 2019, Axiom Intelligence focuses on the research and development of AI visual perception chips and foundational computing platforms.

Founder, chairman, and CEO Qiu Xiaoxin holds a master’s degree in automation from Tsinghua University and a PhD in electronic engineering from the University of Southern California. Before founding Axiom Intelligence, she served as vice president at Broadcom and CTO at Unisoc.

Last year, Axiom Intelligence announced the completion of an 800 million RMB Series A++ round, with investors including Tencent and Meituan. Including earlier rounds, its total financing is approaching 2 billion RMB.

To date, Axiom Intelligence has developed and mass-produced three generations of edge and on-device intelligent visual perception chips, which the company says offer low power consumption and excellent image processing. They are used in smart cities, intelligent transportation, and smart manufacturing.

One More Thing

Axiom Intelligence will soon launch the Axiom Pi Pro development board (similar to a Raspberry Pi) on an e-commerce platform, with tutorials and tools available on GitHub.

Anyone interested in digging deeper into Transformers can get started now.

Links:
[1] https://mp.weixin.qq.com/s/csuIyfvFXPPVX8wdkQ0yOg
[2] https://mp.weixin.qq.com/s/K_RpTAX97jz2Rpk3BpGrGw
[3] https://mp.weixin.qq.com/s/vqtA9czTaYk7kJTPS3Zc4Q
