Artificial Intelligence (AI) has expanded rapidly in recent years. AI tools have moved beyond data centers and high-performance devices into embedded systems, from IoT sensors and portable medical devices to complex industrial applications. Running AI models effectively in embedded environments is difficult, however, because compute and energy resources are limited.

In response, a new generation of hardware accelerators designed specifically for AI is transforming embedded systems. These include Neural Processing Units (NPUs), Graphics Processing Units (GPUs), Digital Signal Processors (DSPs), Field Programmable Gate Arrays (FPGAs), and microcontrollers with integrated AI accelerators. Each has its own advantages, limitations, and areas of application.

Architectures, Frameworks, and Applications

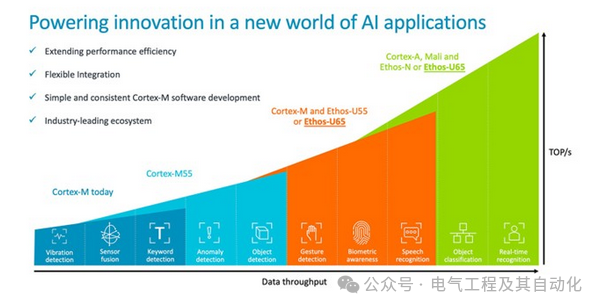

Neural Processing Units (NPUs) are hardware components designed to accelerate the core operations of neural networks, such as convolutions and matrix multiplications. They are often integrated into System on Chip (SoC) designs for embedded devices to improve energy efficiency. Arm's Ethos-U55 and Ethos-U65 are NPUs aimed at accelerating machine learning on microcontroller-class devices, balancing power efficiency and functionality to support inference in battery-powered products. A practical example is the use of NPUs in computer vision applications for real-time object detection in security cameras or drones.

The Ethos-U55 NPU, designed to work alongside a Cortex-M processor, is optimized for low-power inference and supports machine learning models such as Convolutional Neural Networks (CNNs). The Ethos-U65 extends the capabilities of the U55, providing higher performance for more demanding applications. Both NPUs use proprietary architectures to improve memory efficiency and reduce latency.

They are scalable and support standard frameworks like TensorFlow Lite, enabling advanced AI solutions to be implemented in compact, resource-constrained devices.
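To make concrete what "accelerating convolutions" means, the following is a minimal sketch of a 2D convolution written as plain multiply-accumulate (MAC) operations. It is not how any particular NPU is programmed; the function name `conv2d_valid` and the sample data are illustrative assumptions. The point is that even a tiny layer needs many MACs, and those are the operations an NPU executes in parallel in dedicated hardware.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1).

    Returns the output feature map and the number of
    multiply-accumulate (MAC) operations performed.
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = ih - kh + 1, iw - kw + 1
    output = [[0] * ow for _ in range(oh)]
    macs = 0
    for r in range(oh):
        for c in range(ow):
            acc = 0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
                    macs += 1
            output[r][c] = acc
    return output, macs


# Hypothetical 4x4 input and 2x2 kernel, chosen only for illustration.
image = [
    [1, 2, 3, 0],
    [4, 5, 6, 1],
    [7, 8, 9, 2],
    [0, 1, 2, 3],
]
kernel = [
    [1, 0],
    [0, -1],
]

feature_map, mac_count = conv2d_valid(image, kernel)
print(feature_map)
print("MACs:", mac_count)
```

The MAC count grows with output size times kernel size, so realistic layers with many channels quickly reach millions of operations per inference, which is why offloading them from the CPU matters on a microcontroller.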

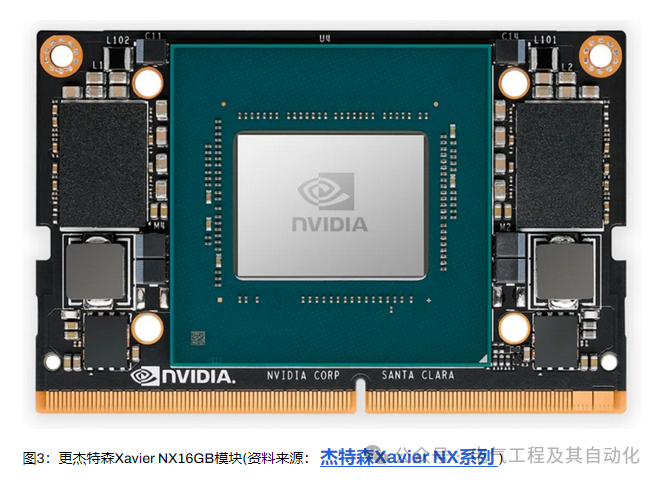

GPUs (Graphics Processing Units), originally designed for graphics processing, have become powerful tools for AI thanks to their ability to perform parallel computations at scale. NVIDIA’s Jetson family provides GPU platforms optimized for embedded applications, such as the Jetson Nano and Xavier, suitable for intensive workloads like facial recognition and real-time video analysis.

However, GPUs can be less energy-efficient than other accelerators, making them better suited to devices with a continuous power supply.

DSPs (Digital Signal Processors) are another popular choice in embedded systems because they can process signals in real time. While DSPs have traditionally been used for audio processing and motor control, their capabilities have been extended to support AI models. For example, Texas Instruments DSPs such as the TMS320 series are used in voice recognition applications for connected devices. DSPs typically provide a good balance of performance and efficiency, which suits applications that require both inference and traditional signal processing.
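As an illustration of the "traditional signal processing" workload mentioned above, here is a minimal sketch of a finite impulse response (FIR) filter, a classic DSP kernel. It is plain Python for readability, not DSP code; the function name `fir_filter` and the sample values are assumptions made for this example. Like a neural network layer, it reduces to repeated multiply-accumulate steps, which helps explain why DSP hardware can also run inference.

```python
def fir_filter(samples, taps):
    """Causal FIR filter: y[n] = sum over k of taps[k] * x[n - k].

    Samples before the start of the signal are treated as zero.
    """
    out = []
    for n in range(len(samples)):
        acc = 0.0
        for k, tap in enumerate(taps):
            if n - k >= 0:
                acc += tap * samples[n - k]
        out.append(acc)
    return out


# Hypothetical alternating signal, smoothed with a 2-tap moving average.
noisy = [0.0, 4.0, 0.0, 4.0, 0.0, 4.0]
smoothed = fir_filter(noisy, [0.5, 0.5])
print(smoothed)
```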

FPGAs (Field Programmable Gate Arrays) are a highly flexible AI acceleration technology for embedded systems, because their logic can be programmed to execute specific tasks efficiently. Unlike NPUs, whose architecture is fixed, FPGAs can be customized to optimize the execution of specific models, making the best use of the available hardware resources. Xilinx, with its Vitis AI framework, provides tools for developing and accelerating machine learning applications on FPGAs.

Vitis AI can optimize TensorFlow or PyTorch models and deploy them on devices like the Xilinx Zynq UltraScale+. This solution is particularly suitable for machine vision and industrial automation applications where low latency and precision are critical. Additionally, high-level synthesis (HLS) tools for FPGAs, which accept languages such as C and C++, allow developers to leverage hardware acceleration without deep hardware design knowledge, lowering the technical barrier to entry.

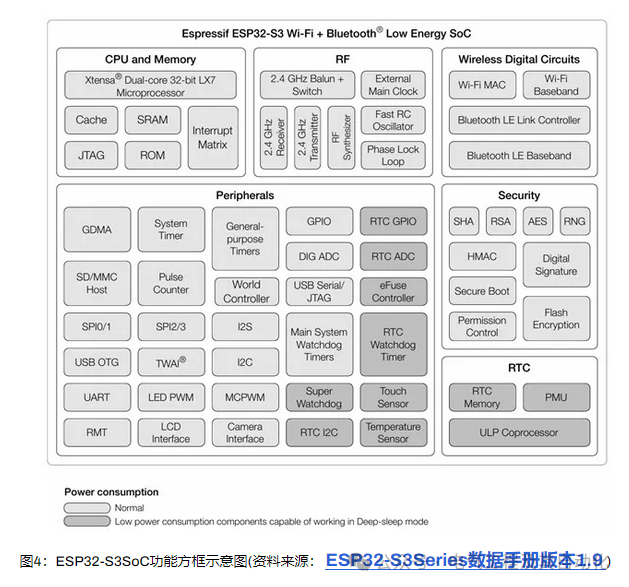

Another significant innovation in the embedded space is microcontrollers with integrated AI acceleration. Devices such as the STMicroelectronics STM32, Espressif ESP32-S3, and NXP i.MX RT families accelerate the execution of models directly on low-power microcontrollers. STM32Cube.AI allows developers to convert TensorFlow Lite models for optimized execution on STM32 devices, which suits connected applications.

The ESP32-S3 system-on-chip (SoC), on the other hand, offers vector instructions that improve performance for machine learning computations, making it a good choice for portable battery-powered devices. All of these microcontrollers are low-cost, high-efficiency options for applications that require simple AI inference, such as signal classification or keyword recognition.

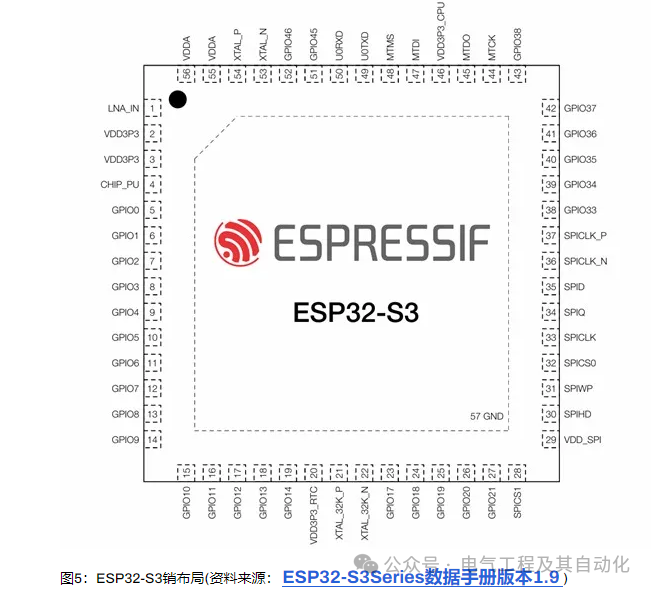

The ESP32-S3 features a dual-core Xtensa LX7 processor running at up to 240 MHz and is designed for AIoT applications. It provides 2.4 GHz Wi-Fi (802.11 b/g/n) and Bluetooth 5 (LE), with long-range support and data rates of up to 2 Mbps. It has 512 KB of SRAM, supports external SPI flash and high-speed PSRAM, and offers 45 GPIOs and a rich set of peripherals such as SPI, I2C, ADC, and UART, along with an integrated RF module. For AI workloads, the ESP32-S3 includes vector instructions that accelerate neural network computations and signal processing. It also has an ultra-low-power core for power-saving modes and supports touch input for user interfaces. The chip is supported by the ESP-IDF platform, which provides regular updates, rigorous testing, and validated tools for application development.

These specifications, together with the large number of programmable inputs and outputs for controlling smart devices, make the ESP32-S3 a powerful and flexible option for secure and efficient device connectivity.

The chip's vector instructions speed up AI-related operations such as neural network computations, and its security features, including hardware encryption and isolation between different operations, help protect data.

The ESP32-S3 is also designed for energy efficiency, with low-power modes suited to battery-powered devices. Developers can rely on a mature and actively maintained software platform, which makes it easier to create new applications or adapt existing ones. In summary, it is a powerful, secure, and flexible chip that is well suited to the next generation of connected devices.
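As a rough illustration of what the 512 KB of SRAM quoted above means for model sizing, the sketch below checks whether a model's weights and working buffers fit on chip. The model size, activation buffer, and reserved application memory are hypothetical numbers chosen for this example, and the helper `fits_in_sram` is not part of ESP-IDF. It also makes the simplifying assumption that weights are held in SRAM; in practice, weights can be kept in external flash or PSRAM, which the chip supports.

```python
SRAM_BYTES = 512 * 1024  # on-chip SRAM figure quoted in the text


def fits_in_sram(num_params, bytes_per_param, activation_bytes, reserved_bytes=0):
    """Return (fits, bytes_needed) for a simple on-chip memory budget.

    Assumption: weights, activation buffers, and the application's own
    memory (reserved_bytes) all have to share the same SRAM.
    """
    needed = num_params * bytes_per_param + activation_bytes + reserved_bytes
    return needed <= SRAM_BYTES, needed


# Hypothetical keyword-spotting model with 80,000 parameters.
PARAMS = 80_000
ACTIVATIONS = 40 * 1024   # assumed working buffers
RESERVED = 200 * 1024     # assumed stack, heap, and network buffers

print("int8   :", fits_in_sram(PARAMS, 1, ACTIVATIONS, RESERVED))
print("float32:", fits_in_sram(PARAMS, 4, ACTIVATIONS, RESERVED))
```

Under these assumed numbers the 8-bit version fits and the 32-bit version does not, which is one reason models for microcontrollers are usually stored in reduced precision.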

How to Choose the Right AI Accelerator

One of the most common questions among developers is how to choose the right accelerator for a specific embedded project. The answer depends on several factors, including the type of application, power consumption requirements, performance needs, and cost constraints. For applications requiring very low latency, such as real-time robotic control, FPGAs can be an ideal choice, whereas GPUs are often preferred for compute-intensive workloads such as complex video analysis.

NPUs, on the other hand, provide a good compromise between performance and energy efficiency for general-purpose applications like voice recognition and language translation. DSPs and microcontrollers with integrated accelerators are particularly suitable for low-power applications, such as wearables and connected sensors.

Performance and power consumption benchmarks are critical for evaluating AI accelerators in embedded systems. NVIDIA’s Jetson Nano offers about 472 GFLOPS (FP16) at a power consumption of about 5 watts, making it a popular choice for computer vision applications. Devices like the Espressif ESP32-S3 have far less computing power but consume less than 1 watt, which makes them well suited to battery-powered applications.
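The rules of thumb in this section can be condensed into a small decision helper. This is only a sketch of the heuristics stated in the text: the `Requirements` fields, the function name `suggest_accelerator`, and especially the order in which the checks are applied are assumptions made for illustration, and a real selection would also weigh cost and measured benchmarks.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical project profile; the field names are illustrative."""
    very_low_latency: bool = False   # e.g. real-time robotic control
    heavy_compute: bool = False      # e.g. complex video analysis
    continuous_power: bool = False   # mains-powered rather than battery
    very_low_power: bool = False     # e.g. wearables, connected sensors


def suggest_accelerator(req: Requirements) -> str:
    """Map a project profile to an accelerator family.

    The order of the checks is an assumption, not something the
    article prescribes.
    """
    if req.very_low_power:
        return "DSP or microcontroller with integrated AI accelerator"
    if req.very_low_latency:
        return "FPGA"
    if req.heavy_compute and req.continuous_power:
        return "GPU"
    # General-purpose compromise between performance and efficiency.
    return "NPU"


examples = {
    "robot arm": Requirements(very_low_latency=True, continuous_power=True),
    "video analytics": Requirements(heavy_compute=True, continuous_power=True),
    "fitness band": Requirements(very_low_power=True),
    "voice assistant": Requirements(),
}

for name, req in examples.items():
    print(f"{name}: {suggest_accelerator(req)}")
```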

FPGAs like the Xilinx Zynq UltraScale+ can be highly energy-efficient, but their power consumption depends heavily on the specific hardware configuration and the complexity of the implementation.

In computer vision, AI accelerators have had a major impact. Smart cameras, for example, use FPGAs to run real-time object detection and tracking algorithms, improving security in transportation and public spaces. In industrial automation, FPGAs are used for visual inspection on production lines, achieving faster and more accurate quality control than traditional methods.

Additionally, microcontrollers with AI acceleration are used in wearable medical devices, such as heart-monitoring watches that run models to detect arrhythmias in real time.

Open-source platforms and development frameworks play a significant role in the widespread adoption of accelerators in embedded systems. TensorFlow Lite and PyTorch Mobile are widely used for developing and optimizing models for embedded devices, while platforms like Edge Impulse provide end-to-end tooling for low-power devices and simplify the development process for IoT applications. In particular, Edge Impulse allows developers to train models on datasets collected from embedded sensors, significantly reducing development time.
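One common form of the model optimization mentioned above is 8-bit quantization, in which floating-point values are mapped to small integers using a scale and a zero point. The sketch below shows that affine mapping in plain Python. It is an illustration of the general idea, not the code used by TensorFlow Lite, PyTorch Mobile, or Edge Impulse, and the function names and the value range are assumptions made for this example.

```python
def quantize_params(min_val, max_val, qmin=-128, qmax=127):
    """Derive (scale, zero_point) so [min_val, max_val] maps onto [qmin, qmax].

    The range is widened to include 0.0 so that zero is exactly representable.
    """
    min_val = min(min_val, 0.0)
    max_val = max(max_val, 0.0)
    scale = (max_val - min_val) / (qmax - qmin)
    zero_point = round(qmin - min_val / scale)
    return scale, zero_point


def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """Map a real value to an 8-bit integer, clamping out-of-range inputs."""
    q = round(x / scale) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(q, scale, zero_point):
    """Recover the approximate real value: x = scale * (q - zero_point)."""
    return scale * (q - zero_point)


# Hypothetical value range observed for one tensor.
scale, zero_point = quantize_params(-1.0, 3.0)

for x in (0.0, 1.0, 2.5, 100.0):
    q = quantize(x, scale, zero_point)
    print(x, "->", q, "->", dequantize(q, scale, zero_point))
```

Each value is stored in one byte instead of four, at the cost of a rounding error of at most half the scale for inputs inside the calibrated range; inputs outside it, like 100.0 here, are clamped.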

Another area of growing interest is the use of RISC-V in embedded AI acceleration. RISC-V is an open instruction set architecture that can be extended with custom instructions, which makes it possible to build AI accelerators tailored to specific applications. Some companies have already developed RISC-V cores optimized for machine learning workloads. This flexibility can give developers more freedom to balance energy consumption and cost than proprietary architectures allow.

Conclusion

AI accelerators in embedded systems offer a wide range of options, each with characteristics that address specific application needs. Choosing the right accelerator depends on a thorough understanding of the project’s requirements, performance expectations, and power consumption constraints. As hardware and software technologies continue to evolve, integrating AI into embedded devices is becoming easier and more widespread, opening up new possibilities for industries from home automation to industrial systems and beyond.