Source: Internet

1. Pandas – For Data Analysis

Pandas is a powerful toolkit for analyzing structured data. It is built on NumPy, which provides high-performance array and matrix operations, and is used for data mining, data analysis, and data cleaning.
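As a rough illustration of the cleaning and analysis steps described above, the sketch below builds a small DataFrame containing a missing value and a duplicate row, cleans it, and totals one column per group. The column names and figures are made up for this example.

```python
import pandas as pd

# Hypothetical sales records with one missing amount and one duplicate row.
raw = pd.DataFrame(
    {
        "city": ["Beijing", "Shanghai", "Beijing", "Shanghai", "Shanghai"],
        "amount": [100.0, 250.0, None, 250.0, 50.0],
    }
)

# Cleaning: drop exact duplicate rows, then drop rows with a missing amount.
clean = raw.drop_duplicates().dropna(subset=["amount"])

# Analysis: total amount per city.
totals = clean.groupby("city")["amount"].sum()
print(totals)
```

Whether to drop or fill missing values depends on the data; `dropna` is used here only because it keeps the example short.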

    # 1. Install the package
    $ pip install pandas
    # 2. Enter the Python interactive interface
    $ python -i
    # 3. Use Pandas
    >>> import pandas as pd
    >>> df = pd.DataFrame()
    >>> print(df)
    # 4. Output result
    Empty DataFrame
    Columns: []
    Index: []

2. Selenium – Automated Testing

Selenium is a tool for testing web applications from the end user's perspective. Running the same tests in different browsers makes it easier to find browser incompatibilities, and Selenium works with most major browsers.

A simple test can be done by opening a browser and visiting Google’s homepage:

    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service
    import time

    # Raw string so the backslashes in the Windows path are not treated as escapes.
    driver_path = r"C:\Program Files (x86)\Google\Chrome\chromedriver.exe"
    # Selenium 4 takes the driver path through a Service object.
    browser = webdriver.Chrome(service=Service(driver_path))

    website_URL = "https://www.google.co.in/"
    browser.get(website_URL)

    refreshrate = 3  # seconds between refreshes

    # Refresh the Google homepage every 3 seconds.
    # This keeps running until you stop the script.
    while True:
        time.sleep(refreshrate)
        browser.refresh()

3. Flask – Micro Web Framework

Flask is a lightweight, customizable web framework written in Python. Its small core and extension mechanism make it flexible and easy to learn, and it is currently a very popular choice for quickly building a website or web service in Python.
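To give a feel for how little code a small web service needs, here is a minimal sketch that defines one JSON endpoint and calls it through Flask's built-in test client, so no server has to be started. The `/ping` route and its payload are hypothetical and chosen only for this example.

```python
from flask import Flask, jsonify

app = Flask(__name__)


@app.route("/ping")  # hypothetical route, chosen for this example
def ping():
    # Return a small JSON payload.
    return jsonify(status="ok")


# The test client sends requests to the app in-process,
# so no server needs to be running.
client = app.test_client()
response = client.get("/ping")
print(response.status_code, response.get_json())
```

The same test-client approach is a convenient way to write automated tests for real routes.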

    from flask import Flask

    app = Flask(__name__)

    @app.route('/')
    def hello_world():
        return 'Hello, World!'

4. Scrapy – Web Scraping

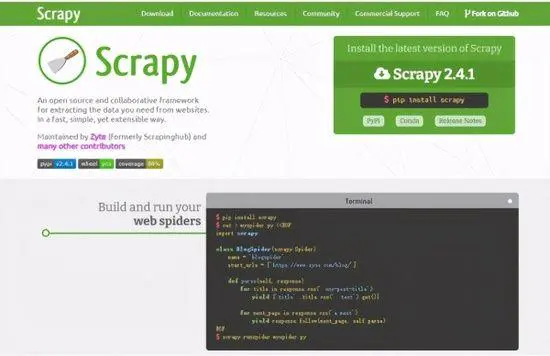

Scrapy provides strong support for extracting precisely the information you need from websites.

Most developers now rely on scraping tools to automate this kind of task, and Scrapy is a practical choice when you need to write a scraper.

Starting the Scrapy shell is also very simple:

    scrapy shell

We can try to extract the text of the search button on the Baidu homepage. First, we need to find the class used by the button, which the browser's Inspect Element view shows as "bt1".

Specifically, run the following in the shell:

    # fetch() downloads the page and updates the shell's `response` object
    fetch("https://baidu.com")
    response.css(".bt1::text").extract_first()
    # ==> "Search"

5. Requests – For API Calls

Requests is a powerful HTTP library. With it, you can easily send requests without manually adding query strings to the URL. It also offers many other features, such as authentication handling, built-in JSON decoding, and session handling.
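The point about query strings can be shown without sending anything over the network. In this sketch a request is only prepared, not sent, so the final URL can be inspected; the host `httpbin.org` and the parameter names are placeholders for illustration.

```python
import requests

# Build (but do not send) a GET request; httpbin.org is only a placeholder host.
request = requests.Request(
    "GET",
    "https://httpbin.org/get",
    params={"q": "python tools", "page": 2},
)
prepared = request.prepare()

# Requests has encoded the dictionary into the query string for us.
print(prepared.url)
```

In everyday use the same `params` argument is passed directly to `requests.get`, which prepares and sends the request in one step.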

Official example:

    >>> import requests
    >>> r = requests.get('https://api.github.com/user', auth=('user', 'pass'))
    >>> r.status_code
    200
    >>> r.headers['content-type']
    'application/json; charset=utf8'
    >>> r.encoding
    'utf-8'
    >>> r.text
    '{"type":"User"...'
    >>> r.json()
    {'private_gists': 419, 'total_private_repos': 77, ...}

6. Faker – For Generating Fake Data

Faker is a Python package that generates fake data for you. Whether you need to bootstrap a database, create good-looking XML documents, fill your persistence layer to stress-test it, or anonymize data taken from a production service, Faker is a good fit.

With it, you can quickly generate fake names, addresses, descriptions, and more. The following script creates a contact entry containing a name, an address, and some descriptive text:

Installation:

    pip install Faker

Usage:

    from faker import Faker

    fake = Faker()
    fake.name()
    fake.address()
    fake.text()

7. Pillow – For Image Processing

Pillow is a powerful image processing library for Python. Whenever you need to open, transform, filter, or save images in code, it is a solid choice.
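As a small sketch of typical operations, the code below creates a test image in memory, so it does not depend on any file on disk, then resizes it, converts it to grayscale, and applies a blur. The sizes, colors, and blur radius are arbitrary choices for illustration.

```python
from PIL import Image, ImageFilter

# Create a small in-memory test image instead of relying on a file on disk:
# a white square on a black background.
image = Image.new("RGB", (64, 64), (0, 0, 0))
image.paste((255, 255, 255), (16, 16, 48, 48))

# Downscale, convert to grayscale, and soften the hard edges.
thumb = image.resize((32, 32)).convert("L")
blurred = thumb.filter(ImageFilter.GaussianBlur(radius=2))

print(thumb.size, thumb.mode)
print(blurred.getpixel((0, 0)), blurred.getpixel((16, 16)))
```

Each operation returns a new image, so steps can be chained without modifying the original.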

Simple example:

    from PIL import Image, ImageFilter

    try:
        # Load the image, blur it, display both versions, and save the result.
        original = Image.open("Lenna.png")
        blurred = original.filter(ImageFilter.BLUR)

        original.show()
        blurred.show()

        blurred.save("blurred.png")
    except OSError:
        # Raised when the file is missing or cannot be read as an image.
        print("Unable to load image")

Effective tools help us get work done faster, so I have shared several that I find useful. I hope these 7 Python efficiency tools help you too.

Editor /Fan Ruiqiang

Reviewer / Fan Ruiqiang

Proofreader / Fan Ruiqiang
