
1. Background of Docker

In common development and project scenarios, the following situations are prevalent:

- Personal Development Environment

  To work on big data projects, you need to install a CDH cluster. The common practice is to set up 3 virtual machines for the chosen CDH version on your computer. Because you may need a clean CDH cluster again later, you usually back up the whole cluster to avoid reinstalling it, so your computer ends up holding 6 virtual machine images. When you then learn another technology, such as an Ambari big data cluster, you have to set up 3 more virtual machines to avoid damaging the existing environment, and your local disk quickly fills up with virtual machine images.

- Internal Company Development Environment

  Companies often run projects in small teams, and the operations department generally allocates virtual machines from the servers it manages for internal development and testing. For example, in a machine learning project, Xiao Ming set up an Ambari cluster on the virtual machine allocated by the operations department to run big data workloads.

- Development/Testing/On-site Environment

  After developers write and test code in the development environment, they submit it to the testing department. Testers run it in the testing environment and find bugs, while developers insist that no bugs appear in the development environment. After repeated discussions between developers and testers, the bugs are resolved and the version is released. Once it is deployed in the production environment, bugs are found again, leading to further disputes between engineers and testers. Sometimes, to accommodate special on-site environments, the code has to be customized and branched, which turns every on-site upgrade into a nightmare.

- Upgrading or Migrating Projects

  Each time a version is released for an on-site upgrade, if multiple Tomcat applications are running, each Tomcat must be stopped, its war package replaced, and then restarted, one by one, which is both cumbersome and error-prone. If serious bugs appear after the upgrade, a manual rollback is required. In addition, if the project moves to the cloud, it must go through another round of testing after cloud deployment. If other cloud vendors are considered, the same tests may have to be repeated (for example, if the data storage component is changed), which is time-consuming and labor-intensive.

The scenarios above share a common problem: no technology existed that could shield applications from operating system differences, without compromising performance, and thereby solve the problem of environmental dependencies. Docker was born to address this.

2. What is Docker?

Docker builds on the Linux kernel's Namespace and CGroup technologies to achieve environment isolation and resource control. Namespace is a kernel-level environment isolation method provided by Linux that allows a process and its child processes to run in a space isolated from the Linux super parent process. Note that Namespace only isolates the running space; physical resources are still shared among all processes. To isolate resources, Linux provides CGroup, which controls the resources (such as CPU, memory, and disk IO) that a group of processes can use. Combining the two technologies produces an independent user-space object with limited resources, and this object is called a container.

Linux Container, abbreviated as LXC, is a containerization technology provided by Linux that combines Namespace and CGroup behind a more user-friendly interface. LXC is only a lightweight containerization technology: it can limit certain resources, but it cannot restrict the network, limit disk space usage, and so on. The company dotCloud combined LXC with the technologies listed below to implement the Docker container engine. Compared to LXC, Docker has more comprehensive resource control capabilities and is an application-level container engine.

- Chroot: This technology can construct a complete Linux file system within a container;
- Veth: This technology can virtually create a network card on the host and bridge it with the eth0 network card in the container, enabling network communication between the container and the host, as well as between containers;
- UnionFS: A union file system. Docker uses this technology's "Copy on Write" feature to achieve fast container startup and minimal resource usage, which will be introduced later;
- Iptables/netfilter: These two technologies are used to control container network access policies;
- TC: This technology is mainly used for traffic isolation and bandwidth limitation;
- Quota: This technology is used to limit the size of disk read/write space;
- Setrlimit: This technology is used to limit the number of processes opened in the container, the number of open files, and so on.

Because Docker relies on these Linux kernel technologies, running Docker containers requires kernel version 3.8 or higher. The official recommendation is kernel version 3.10 or higher.
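The Namespace isolation described in this section can be observed from user space. The following is a minimal sketch, assuming a Linux host that exposes `/proc/<pid>/ns`; the helper names `list_namespaces` and `shared_namespaces` are made up for illustration and are not part of Docker. It only inspects namespaces and does not create containers.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace_type: identifier} for a process, read from /proc.

    On Linux, each entry in /proc/<pid>/ns is a symlink such as
    'pid:[4026531836]'. Two processes that show the same identifier for a
    namespace type share that namespace. If the directory does not exist
    or is not readable, an empty dict is returned.
    """
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        return namespaces
    for name in entries:
        try:
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            namespaces[name] = None  # entry exists but is not readable
    return namespaces


def shared_namespaces(pid_a, pid_b):
    """Return the namespace types that two processes have in common."""
    a, b = list_namespaces(pid_a), list_namespaces(pid_b)
    return sorted(n for n in a if a[n] is not None and a[n] == b.get(n))


if __name__ == "__main__":
    for ns_type, ident in list_namespaces().items():
        print(f"{ns_type:10s} {ident}")
```

Comparing a process on the host with a process inside a container in this way would typically show different identifiers for the isolated namespace types, while two ordinary host processes usually share all of them.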

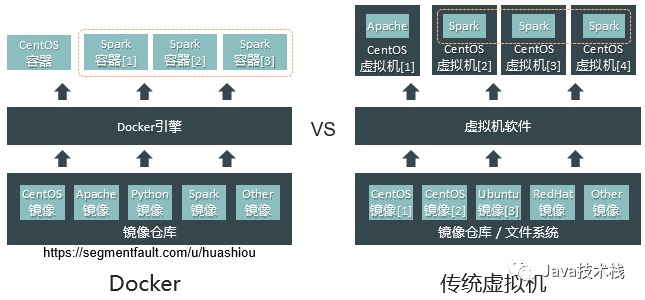

3. Differences from Traditional Virtualization Technologies

Traditional virtualization relies on a Hypervisor, which runs in one of two ways:

- Directly on physical hardware, for example kernel-based KVM virtual machines; this type of virtualization requires CPU support for virtualization technology;
- On top of another operating system, for example VMWare and VirtualBox virtual machines.

Since the operating system running on the virtual machine shares hardware through the Hypervisor, the instructions sent by the VM Guest OS must be captured by the Hypervisor and translated into instructions that the physical hardware or host operating system can recognize. Virtual machines like VMWare and VirtualBox do not perform as well as bare metal, but hardware-based KVM can achieve about 80% of bare metal performance. The advantage of this type of virtualization is that it achieves complete isolation between different virtual machines, ensuring high security, and can run multiple operating systems with different kernels (like Linux and Windows) on a single physical machine. However, each virtual machine is heavy, consumes a lot of resources, and starts slowly.

The Docker engine runs on the operating system and uses kernel-based technologies like LXC, Chroot, etc., to achieve environment isolation and resource control. After the container starts, the processes inside the container interact directly with the kernel without going through the Docker engine, resulting in almost no performance loss and allowing it to utilize the full performance of bare metal. However, since Docker is based on Linux kernel technology for containerization, applications running within containers can only operate on operating systems with the Linux kernel. Currently, the Docker engine installed on Windows actually uses the built-in Hyper-V virtualization tool to automatically create a Linux system, and the operations within the container are indirectly executed using this virtual system.

4. Basic Concepts of Docker

Docker mainly has the following concepts:

- Engine: A tool for creating and managing containers. It generates containers by reading images and is responsible for pulling images from repositories and pushing images to them;
- Image: Similar to a virtual machine image, generally composed of a basic operating system environment and multiple applications. It serves as the template for creating containers;
- Container: Can be seen as a simplified Linux system environment (including root user permissions, process space, user space, and network space) together with the applications running in it, packaged into a box;
- Repository: A place where image files are stored centrally, divided into public and private repositories. The largest public repository is the official Docker Hub; cloud providers in China such as Alibaba Cloud and Tencent Cloud also provide public repositories;
- Host: The server whose operating system runs the engine.

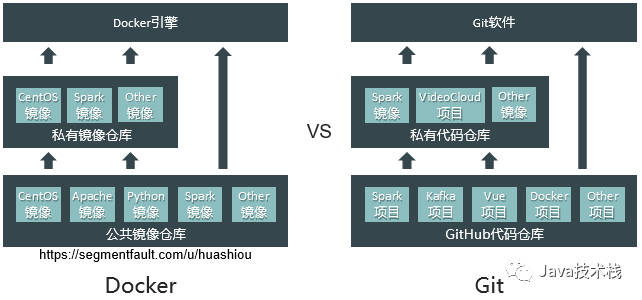

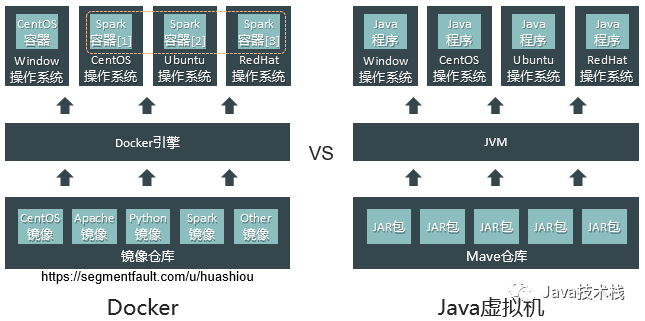

5. Comparison of Docker with Virtual Machines, Git, and JVM

To give everyone a more intuitive understanding of Docker, here are three sets of comparisons:

The repository concept of Docker is similar to that of Git.

Java adapts to the operating system’s characteristics based on the JVM to shield system differences, while Docker utilizes the compatibility features of kernel versions to achieve the effect of building once and running everywhere. As long as the Linux system’s kernel is version 3.8 or higher, containers can run.

Of course, just as in Java, if the application code uses features from JDK10, it cannot run on JDK8. If the application within the container uses features from kernel version 4.18, then when starting the container on CentOS7 (kernel version 3.10), although the container can start, the application’s functions may not operate normally unless the host operating system’s kernel is upgraded to version 4.18.
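The kernel compatibility rule above can be sketched as a simple version comparison. This is an illustration only: the function names are hypothetical, the CentOS 7 release string is just an example value, and the 4.18 requirement stands for the hypothetical application mentioned above. Real distributions sometimes backport kernel features, so a plain version comparison is a rough approximation.

```python
import platform
import re


def parse_kernel_version(release):
    """Extract (major, minor) from a release string like '3.10.0-1160.el7.x86_64'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def kernel_satisfies(host_release, required):
    """True if the host kernel is at least the required (major, minor) version."""
    return parse_kernel_version(host_release) >= required


DOCKER_MINIMUM = (3, 8)    # minimum kernel version stated in this article
APP_REQUIREMENT = (4, 18)  # hypothetical app that needs a 4.18 kernel feature

centos7 = "3.10.0-1160.el7.x86_64"  # example CentOS 7 release string
print(kernel_satisfies(centos7, DOCKER_MINIMUM))   # True: the container can start
print(kernel_satisfies(centos7, APP_REQUIREMENT))  # False: the app's feature is missing
print(platform.release())  # release string of the machine running this script
```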

6. Docker Image File System

UnionFS can combine the contents of multiple independently located directories (also called branches) into a single directory. UnionFS allows control over the read and write permissions of these directories. Additionally, for read-only files and directories, it has the “Copy on Write” feature, meaning that if a read-only file is modified, a copy of the file is made to a writable layer (which may be a directory on disk), and all modification operations are actually performed on this file copy. The original read-only file remains unchanged. One example of using UnionFS is Knoppix, a Linux distribution used for demos, teaching, and commercial product presentations, which combines a CD/DVD and a writable device (e.g., USB) to allow for any changes made to files on the CD/DVD during the demo to be applied to the USB without altering the original content on the CD/DVD.

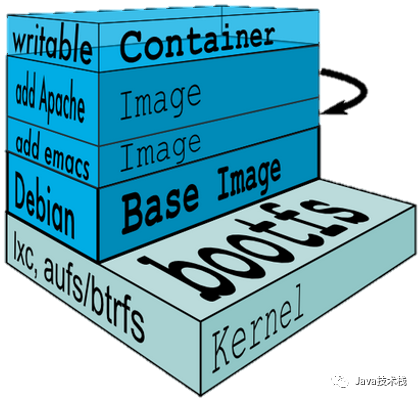

There are many types of UnionFS, among which AUFS is commonly used in Docker. In addition, there are DeviceMapper, Overlay2, ZFS, and VFS. Each layer of the Docker image is stored by default in the /var/lib/docker/aufs/diff directory. When a user starts a container, the Docker engine first creates a writable layer directory in /var/lib/docker/aufs/diff, and then uses UnionFS to mount this writable layer directory along with the specified image’s layers into a directory in /var/lib/docker/aufs/mnt (where the specified image’s layers are mounted in read-only mode). Through technologies like LXC, environment isolation and resource control are achieved, allowing the applications within the container to run relying only on the corresponding mounted directories and files in the mnt directory.

Utilizing the Copy on Write feature of UnionFS, when starting a container, the Docker engine actually only adds a writable layer and constructs a Linux container. Both of these actions consume almost no system resources, allowing Docker containers to start in seconds, enabling thousands of Docker containers to be started on a single server, while traditional virtual machines struggle to start dozens, and they also take a long time to boot. These are two significant advantages of Docker over traditional virtual machines.
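The Copy on Write behavior described above can be modeled in a few lines. The sketch below is a toy in-memory model, not how AUFS or any real storage driver is implemented: the `Layer` and `UnionMount` classes are hypothetical, a "file" is just a dictionary entry, and deletions are not modeled.

```python
class Layer:
    """One directory (branch) in the union: maps file path -> content."""

    def __init__(self, files=None, read_only=True):
        self.files = dict(files or {})
        self.read_only = read_only


class UnionMount:
    """Toy union view: one writable layer stacked over read-only layers."""

    def __init__(self, image_layers):
        # Starting a "container" only adds an empty writable layer on top.
        self.writable = Layer(read_only=False)
        self.lower = list(image_layers)  # ordered bottom -> top

    def read(self, path):
        # Search from the top layer down; the first hit wins.
        for layer in [self.writable] + self.lower[::-1]:
            if path in layer.files:
                return layer.files[path]
        raise FileNotFoundError(path)

    def write(self, path, content):
        # Copy on write: the change lands in the writable layer only,
        # so the read-only image layers are never modified.
        self.writable.files[path] = content


base = Layer({"/etc/hostname": "base", "/bin/app": "v1"})
patch = Layer({"/bin/app": "v2"})

c1 = UnionMount([base, patch])
c2 = UnionMount([base, patch])  # shares the same image layers as c1

c1.write("/etc/hostname", "container-1")
print(c1.read("/etc/hostname"))  # container-1
print(c2.read("/etc/hostname"))  # base
print(c1.read("/bin/app"))       # v2
```

Because both containers reference the same read-only layers and each adds only an empty writable layer, creating another container in this model costs almost nothing, which mirrors the startup argument made above.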

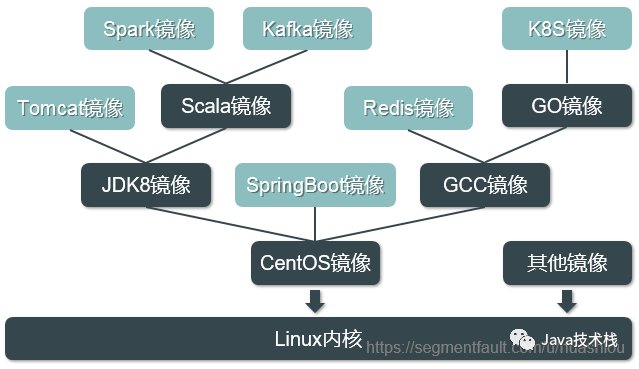

When an application calls only kernel functionality directly, its image can be built directly on top of the kernel. However, since containers isolate the environment, applications within a container cannot access files on the host (unless certain directories or files are mapped into the container), so in this case the application code can use nothing but kernel functionality. The Linux kernel provides only basic, low-level functionality such as process management, memory management, and file system management. In practice, almost all software is developed against an operating system and depends on that system's software and runtime libraries. If the layer directly beneath such an application were the kernel, the application could not run. Therefore, application images are usually built on an operating system image that supplies these runtime dependencies.

The operating system images in Docker differ from the ISO images used for installing systems. ISO images contain the operating system kernel and all directories and software included in that distribution, while Docker’s operating system images do not contain the system kernel, only essential directories (like /etc, /proc, etc.) and commonly used software and runtime libraries. You can think of the operating system image as an application on top of the kernel, a tool that encapsulates kernel functionalities and provides a runtime environment for user-developed applications. Applications built on such images can utilize various software functionalities and runtime libraries of the corresponding operating system. Moreover, since applications are built on operating system images, even if moved to another server, as long as the functionalities used by the application in the operating system image can adapt to the host’s kernel, the application can run normally. This is why it can be built once and run anywhere.

The following image vividly illustrates the relationship between images and containers:

7. Docker Base Operating Systems

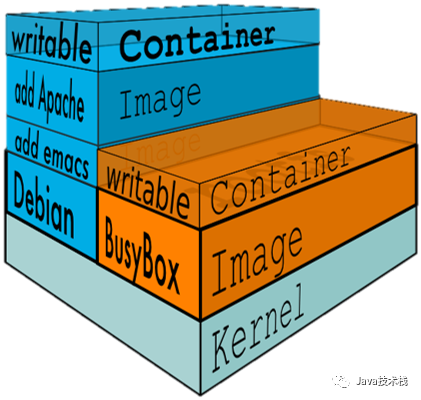

The above system images are suitable for different scenarios:

- BusyBox: An ultra-lightweight Linux system that integrates over 100 common Linux commands, with a size of less than 2MB, known as the "Swiss Army Knife of Linux systems" and suitable for simple testing scenarios;
- Alpine: A security-oriented lightweight Linux distribution with more complete functionality than BusyBox and a size of less than 5MB. It is recommended as the base image by the official website because it contains sufficient basic functionality and is small, making it the most commonly used in production environments;
- Debian/Ubuntu: Debian-based operating systems with complete functionality, approximately 170MB in size, suitable for development environments;
- CentOS/Fedora: Both are Red Hat-based Linux distributions and commonly used operating systems for enterprise-level servers, with high stability and approximately 200MB in size, suitable for production environments.

8. Docker Persistent Storage

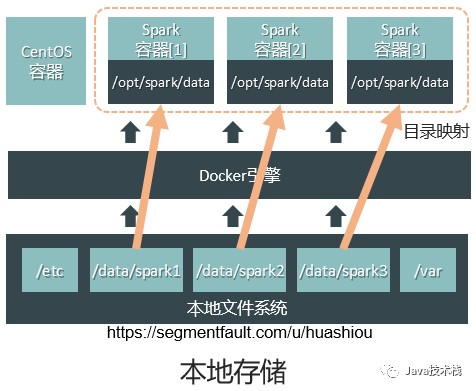

Because of the Copy on Write feature of UnionFS described earlier, adding, deleting, or modifying files within a container actually operates on copies of files in the writable layer. When the container is removed, this writable layer is deleted with it, and all modifications made in the container are lost. The problem of persisting files from within containers therefore needs to be solved. Docker provides two solutions:

-

Mapping directories from the host file system to directories within the container, as shown in the following figure. This way, all files created in that directory within the container are stored in the corresponding directory on the host. After closing the container, the directory on the host still exists, and upon restarting the container, it can still read the files created previously, thus achieving file persistence within the container. Of course, it should be understood that if the files that come with the image are modified, since the image is read-only, this modification cannot be saved upon closing the container unless a new image is built after the modification.

-

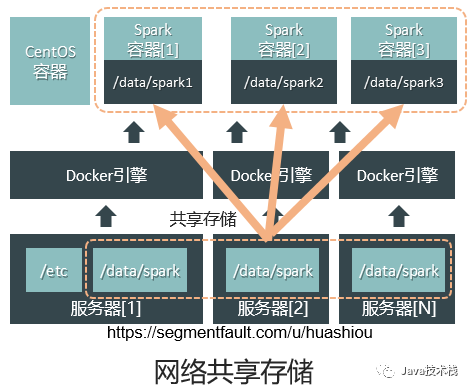

Combining the disk directories of multiple hosts over the network for shared storage, and then mapping specific directories in the shared storage to specific containers, as shown in the following figure. This way, when containers restart, they can still read the files created before they were closed. NFS is commonly used as a shared storage solution in production environments.
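The difference between the container's writable layer and a mapped host directory can be illustrated with a small model. This is a toy sketch under stated assumptions: the `Host` and `Container` classes and the paths `/data` and `/srv/app-data` are hypothetical, and file systems are represented as dictionaries rather than real directories.

```python
class Host:
    """Stands in for the host file system: path -> content."""

    def __init__(self):
        self.files = {}


class Container:
    """Toy container with a private writable layer and optional host mappings."""

    def __init__(self, host, mounts=None):
        self.host = host
        self.mounts = dict(mounts or {})  # container dir -> host dir
        self.writable_layer = {}          # discarded together with the container

    def _resolve(self, path):
        # If the path lies under a mapped directory, translate it to the host path.
        for container_dir, host_dir in self.mounts.items():
            prefix = container_dir.rstrip("/")
            if path.startswith(prefix + "/"):
                return host_dir.rstrip("/") + path[len(prefix):]
        return None

    def write(self, path, content):
        host_path = self._resolve(path)
        if host_path is not None:
            self.host.files[host_path] = content  # stored on the host
        else:
            self.writable_layer[path] = content   # stored in the container only

    def read(self, path):
        host_path = self._resolve(path)
        if host_path is not None:
            return self.host.files.get(host_path)
        return self.writable_layer.get(path)


host = Host()
first = Container(host, mounts={"/data": "/srv/app-data"})
first.write("/data/report.txt", "persisted")
first.write("/tmp/scratch.txt", "lost")
del first  # the container goes away, and its writable layer with it

second = Container(host, mounts={"/data": "/srv/app-data"})
print(second.read("/data/report.txt"))  # persisted
print(second.read("/tmp/scratch.txt"))  # None
```

The same model applies to the shared-storage solution if `Host` is read as the network file system rather than a single machine.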

9. Docker Image Creation Methods

There are two methods for creating images:

-

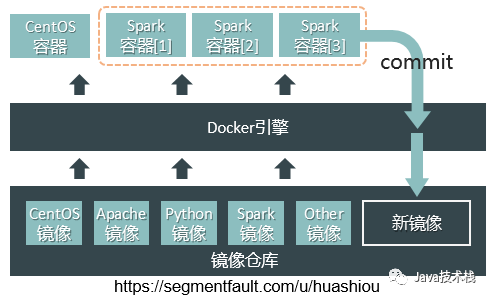

Generating a new image from a running container

This method is relatively simple but does not intuitively allow for setting environment variables, listening ports, etc., and is suitable for use in simple scenarios.

-

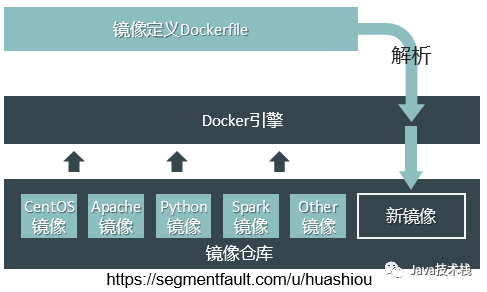

Generating a new image through a Dockerfile

A sample Dockerfile:

    # Base image
    FROM ubuntu:14.04
    # Maintainer signature (MAINTAINER is deprecated in newer Docker releases in favor of LABEL)
    MAINTAINER guest
    # Install the ssh service
    RUN apt-get update && apt-get install -y openssh-server
    # Create the directory sshd needs at runtime
    RUN mkdir -p /var/run/sshd
    # Create the user
    RUN useradd -s /bin/bash -m -d /home/guest guest
    # Set the user's password
    RUN echo 'guest:123456' | chpasswd
    # Set an environment variable
    ENV RUNNABLE_USER_DIR=/home/guest
    # Port the container listens on by default
    EXPOSE 22
    # Start the ssh service in the foreground when the container starts
    CMD ["/usr/sbin/sshd", "-D"]

The Docker engine can construct an Ubuntu image with ssh service based on the steps defined in the above Dockerfile.
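To make the idea of "steps defined in a Dockerfile" concrete, the sketch below splits a shortened Dockerfile into an ordered list of instructions, which is the order in which the engine processes them. This is a simplified illustration and not Docker's actual parser: `parse_dockerfile` is a hypothetical helper that ignores line continuations and parser directives.

```python
def parse_dockerfile(text):
    """Return [(INSTRUCTION, arguments), ...] in file order.

    Simplified: skips blank lines and whole-line '#' comments, and does
    not handle line continuations or parser directives.
    """
    steps = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        instruction, _, arguments = line.partition(" ")
        steps.append((instruction.upper(), arguments.strip()))
    return steps


example = """
# Base image
FROM ubuntu:14.04
RUN mkdir -p /var/run/sshd
EXPOSE 22
CMD ["/usr/sbin/sshd", "-D"]
"""

steps = parse_dockerfile(example)
for number, (instruction, arguments) in enumerate(steps, start=1):
    print(f"Step {number}/{len(steps)}: {instruction} {arguments}")
```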

10. Docker Use Cases

As a lightweight virtualization solution, Docker has a rich array of application scenarios. Below are some common scenarios:

- Using as a lightweight virtual machine

  You can create containers from system images like Ubuntu and use them as virtual machines. Compared to traditional virtual machines, they start faster and use fewer resources, and a single machine can start a large number of operating system containers, which facilitates various tests.

- Using as a cloud host

  Combined with container management systems like Kubernetes, containers can be dynamically allocated and managed across many servers. Within a company, this can even replace virtualization management platforms like VMWare, with Docker containers serving as cloud hosts.

- Application service packaging

  In web application development, the Java runtime environment and the Tomcat server can be packaged as a base image. After the code package is modified, it is added to the base image to create a new image, which makes it easy to upgrade services and control versions.

- Container as a Service (CaaS)

  The emergence of Docker has led many cloud platform providers to offer container cloud services, abbreviated as CaaS. Below is a comparison of IaaS, PaaS, and SaaS:

  IaaS (Infrastructure as a Service): Provides virtual machines or other basic resources as services to users. Users can obtain virtual machines or storage from providers to load related applications, while the cumbersome management of this infrastructure is handled by the IaaS provider. Its main users are system administrators and operations personnel in enterprises.

- Continuous Integration and Continuous Deployment

  The internet industry advocates agile development, and Continuous Integration/Deployment (CI/CD) is its most typical development model. With a Docker container cloud platform, pushing code to Git/SVN can automatically trigger the backend CaaS platform to download the code, compile it, and build a test Docker image, replace the container service in the testing environment, and run unit/integration tests in Jenkins or Hudson. Once the tests pass, the new version image can be rolled out online automatically, completing the service upgrade. The entire process is automated, which simplifies operations as far as possible while ensuring that the online and offline environments are completely consistent and that the online service version matches the Git/SVN release branch.

- Solving the Implementation Challenges of Microservices Architecture

  Frameworks like Spring Cloud can manage microservices, but microservices still need to run on operating systems. An application built with a microservices architecture often has many microservices, so several of them need to be started on a single server to improve resource utilization. However, a microservice may only be compatible with certain operating systems, so even when server resources are ample, servers running a different operating system cannot host it, which wastes resources and complicates operations. Docker's environment isolation allows microservices to run within containers, which solves these issues.

- Executing Temporary Tasks

  Sometimes users only want to execute one-time tasks, but with traditional virtual machines an environment has to be set up first and the resources released after the task finishes, which is quite cumbersome. Docker containers make it possible to construct a temporary runtime environment and close it once the task is completed, which is convenient and quick.

- Multi-Tenant Environments

  Docker's environment isolation makes it possible to provide exclusive containers for different tenants in a simple and cost-effective way.

11. Conclusion

The technology behind Docker is not mysterious; it is merely an application-level containerization technology that integrates various achievements accumulated by predecessors. It utilizes version-compatible kernel containerization technology used in various Linux distributions to achieve the effect of building once and running everywhere, and it leverages the basic operating system image layers within containers to shield the operating system differences of the actual running environment, allowing users to focus solely on ensuring correct operation on the selected operating system and kernel version, greatly improving efficiency and compatibility.

However, as the number of running containers grows, managing them becomes another operational challenge, which calls for container management systems like Kubernetes, Mesos, or Swarm. These will be covered in future articles.
