Thank you for reading. I am Jiang Feng, and I focus on AI tools, intelligent agents, and AI programming.

I am a science fiction movie fan and have watched almost all sci-fi movies, often more than once.

For example, Ex Machina, Chappie, I, Robot, and The Matrix are all excellent films. They look at AI from a science-fiction angle, but they rarely touch on what everyday life with AI, and its ethics, would look like.

However, there is one movie that I believe better reflects the lifestyle after the popularization of AI: Spike Jonze’s “Her”.

Strictly speaking, this is a sci-fi romance film. The theme reflects some ethical issues that arise after artificial intelligence deeply intervenes in our lives.

The male protagonist, Theodore Twombly, is a writer and a very lonely person. By chance, he comes into contact with the latest artificial intelligence system OS1, embodied as Samantha.

Samantha lives on an electronic device, yet her AI capabilities let her discuss all kinds of topics with people. Theodore becomes obsessed with chatting with Samantha and even develops feelings for her.

The movie was released in 2013, but the timeline is set in 2025. At that time, I wondered if humanity could really reach that level by 2025. Would there be an AI device that could chat at any time?

Now it is 2025, and large models like GPT, DeepSeek, and Kimi have already made AI chat a reality. A hardware chat device that combines an operating system with AI, as depicted in the movie, still seems to be missing.

But after successfully running the qwen3:0.6b model on my Raspberry Pi, I realized that the world in the movie is not far off.

Some may wonder, what does it mean that it can run on a Raspberry Pi?

The Raspberry Pi is a small development board widely used in open-source smart home systems, IoT systems, and industrial network systems. These scenarios all fall under one term: IoT, the Internet of Things.

This includes the desktop AI buddy recently developed by Microsoft, which also uses the Raspberry Pi.

Therefore, if large models can run smoothly on the Raspberry Pi, it is foreseeable that the hardware future of the IoT market will be AI-enabled.

With that background, let’s look at how to run large models on the Raspberry Pi and see the results.

01

Raspberry Pi Configuration

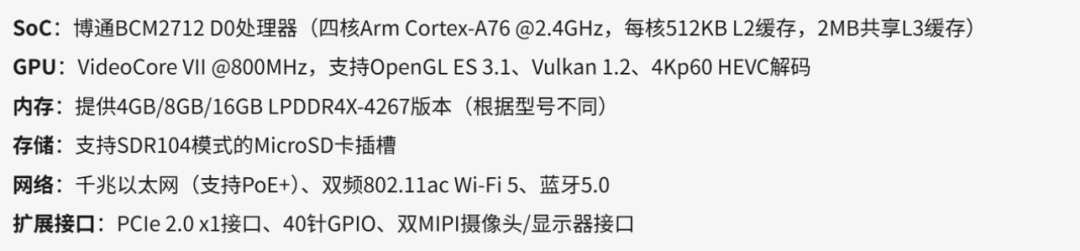

I chose the latest Raspberry Pi 5, with a quad-core 2.4 GHz CPU.

The kit I purchased is shown in the picture; the only difference is that I used a 128 GB SD card, which leaves more room for downloading larger models.

Then download Raspberry Pi Imager, the official imaging tool.

Insert the SD card into the card reader, connect the reader to the laptop, select the SD card and the operating system to write, and start the imaging.

After imaging, insert the SD card into the Raspberry Pi, power it on, and the Raspberry Pi will run normally.

I won’t go into detail about the entire process here; if you have any questions, feel free to message me.

02

Running Large Models

Step 1: Download the Ollama software.

Automatic installation method:

curl -fsSL https://ollama.com/install.sh | sh

However, you may encounter slow network speeds; for example, it took me a long time to download, so I eventually opted for manual installation.

The manual installation steps are described in the official Ollama documentation:

https://github.com/ollama/ollama/blob/main/docs/linux.md

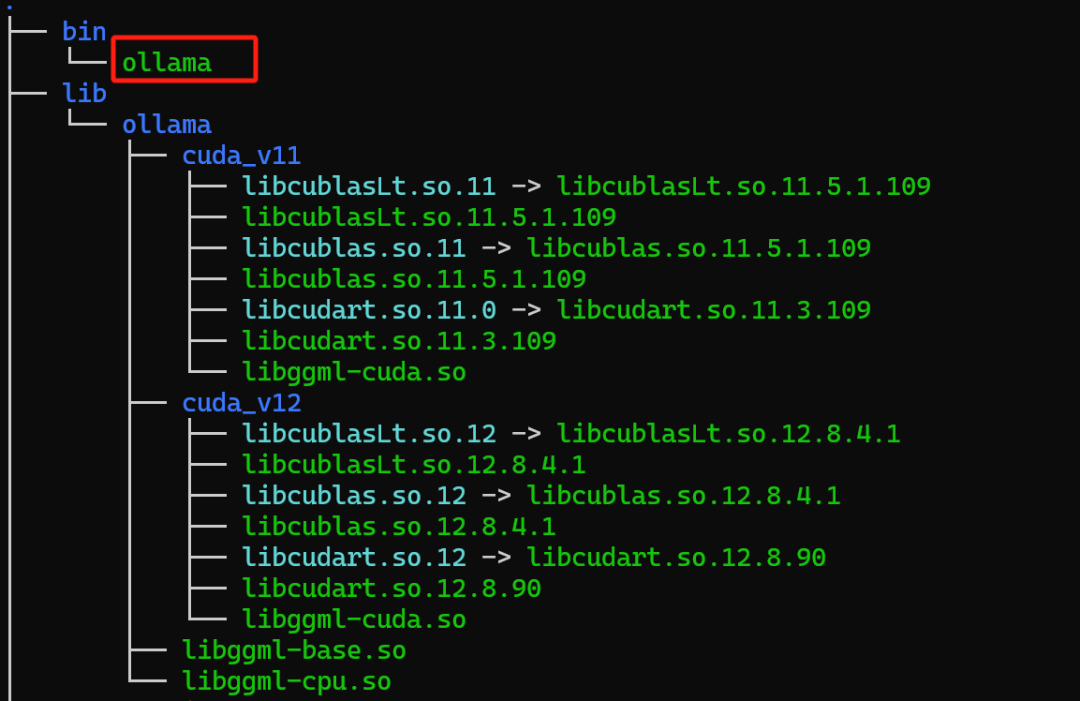

First, download the arm64 version, as the Raspberry Pi uses an Arm Cortex chip and is based on the Arm architecture.

curl -L https://ollama.com/download/ollama-linux-arm64.tgz -o ollama-linux-arm64.tgz
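If you are not sure which archive your board needs, a small helper can pick it from the reported CPU architecture. This is only a sketch: the arm64 file name comes from the command above, while the amd64 file name and the function names are my assumptions for illustration, so check the Ollama download page before relying on them.

```python
# Assumed mapping from platform.machine() values to archive names.
# Only the arm64 name appears in this article; the amd64 name is an assumption.
ARCHIVES = {
    "aarch64": "ollama-linux-arm64.tgz",
    "arm64": "ollama-linux-arm64.tgz",
    "x86_64": "ollama-linux-amd64.tgz",
    "amd64": "ollama-linux-amd64.tgz",
}


def ollama_archive(machine: str) -> str:
    """Return the archive name for a CPU architecture string."""
    try:
        return ARCHIVES[machine.lower()]
    except KeyError:
        raise ValueError(f"no known Ollama archive for architecture {machine!r}")


def download_url(machine: str) -> str:
    """Build the download URL in the same form as the curl command above."""
    return f"https://ollama.com/download/{ollama_archive(machine)}"
```

On the Pi itself you would call download_url(platform.machine()) after importing platform; a 64-bit Raspberry Pi OS typically reports aarch64.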

Extract the archive into /usr.

sudo tar -C /usr -xzf ollama-linux-arm64.tgz

After extraction, you can find the Ollama executable in /usr/bin.

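Run the following commands, each in its own terminal, because ollama serve stays in the foreground:

ollama serve # Start the Ollama server and keep this terminal open

ollama run qwen3:0.6b # In a second terminal: run the model; the first run pulls it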

Once the model is pulled, you can chat smoothly. The response speed exceeded my expectations. Earlier this year, I ran the DeepSeek-1.5b model on the Raspberry Pi 5, and the speed of running Qwen3 is even faster than that of DeepSeek-1.5b.
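Before moving on, it can help to confirm from code that the server is up and the model has been pulled. The sketch below uses only the Python standard library. It assumes Ollama is listening on its default address, http://localhost:11434, and that GET /api/tags returns the local model list; the function names are my own, for illustration.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default address; adjust if yours differs


def model_names(tags_payload: dict) -> list[str]:
    """Extract model names from the JSON returned by GET /api/tags."""
    return [m["name"] for m in tags_payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 3.0):
    """Return the names of pulled models, or None if the server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return model_names(json.load(resp))
    except (OSError, ValueError):
        # OSError covers refused connections and timeouts; ValueError covers bad JSON
        return None


def model_is_ready(name: str, base_url: str = OLLAMA_URL) -> bool:
    """True when the server answers and the named model is in its list."""
    names = list_local_models(base_url)
    return names is not None and name in names
```

Calling model_is_ready('qwen3:0.6b') on the Pi should return True once ollama serve is running and the model has been pulled.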

Experience the speed of Qwen3:0.6b on the Raspberry Pi

03

Calling Large Models in Code

Simply using Ollama to run large models is not enough. What we need is for applications to integrate large models, rather than manually invoking them.

Fortunately, Ollama provides a Python library, so we can call the large model from our own code.

Installation command:

sudo pip3 install ollama --break-system-packages

If the download is slow, you can point pip at a mirror closer to you (for readers in China, a domestic mirror).

After installation, first execute the command ollama serve to start Ollama. Then execute the following code.

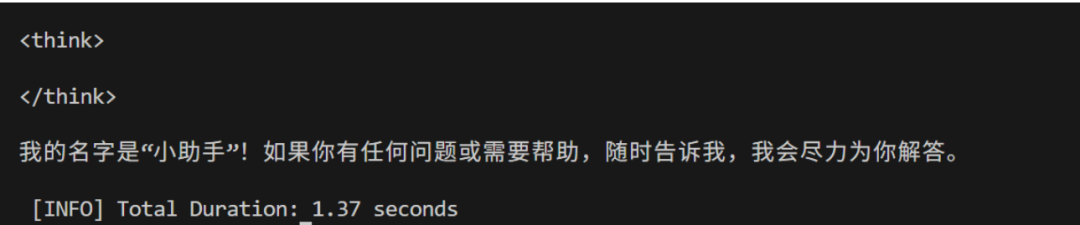

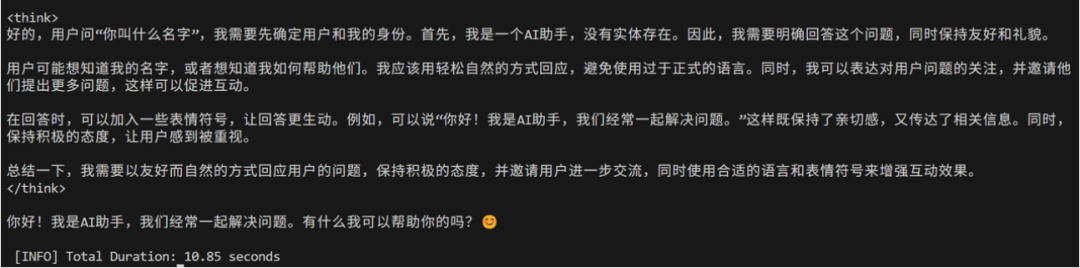

    import ollama

    if __name__ == "__main__":
        MODEL = 'qwen3:0.6b'
        # /no_think asks Qwen3 to skip its thinking phase for this request
        PROMPT = 'What is your name /no_think'

        res = ollama.generate(model=MODEL, prompt=PROMPT)
        # print(res)  # uncomment to inspect the full response

        print(f"\n{res['response']}")
        # total_duration is reported in nanoseconds, hence the division by 1e9
        print(f"\n [INFO] Total Duration: {(res['total_duration']/1e9):.2f} seconds")

Qwen3 introduces “thinking mode” and “non-thinking mode”, allowing the model to perform optimally in different scenarios. In thinking mode, the model engages in multi-step reasoning and deep analysis, similar to how humans ponder complex problems.

In non-thinking mode, the model prioritizes response speed and efficiency, suitable for simple tasks or real-time interactions.

Appending /no_think to the prompt tells the model to skip thinking mode for that request.
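If an application toggles the mode per request, two small helpers keep the prompt handling in one place. This is a sketch under assumptions: it assumes that thinking output, when present, arrives in the response text wrapped in <think>...</think> tags, which may depend on the Ollama and model version you run. The helper names are hypothetical.

```python
import re

# Matches a <think>...</think> block, including an empty one spanning newlines
_THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


def with_no_think(prompt: str) -> str:
    """Append the /no_think switch unless the prompt already ends with it."""
    prompt = prompt.rstrip()
    return prompt if prompt.endswith("/no_think") else f"{prompt} /no_think"


def strip_think(text: str) -> str:
    """Remove any <think> block so only the final answer remains."""
    return _THINK_BLOCK.sub("", text).strip()
```

For example, you could pass with_no_think(PROMPT) to ollama.generate and run strip_think on res['response'] before showing it to the user.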

The code prints the total run time. In my tests, a response in thinking mode could take up to about 10 seconds, while in non-thinking mode it took only about 1 second.
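Total duration also includes model loading and prompt processing, so generation speed is often easier to compare across models. The sketch below derives tokens per second from the eval_count and eval_duration fields that Ollama returns alongside total_duration (durations are in nanoseconds). The function names are my own, and the numbers in the usage note are made up, not measurements.

```python
def tokens_per_second(res) -> float:
    """Generation speed from eval_count (tokens) and eval_duration (nanoseconds)."""
    duration_ns = res["eval_duration"]
    if not duration_ns:
        return 0.0  # avoid dividing by zero when nothing was generated
    return res["eval_count"] / (duration_ns / 1e9)


def summarize(res) -> str:
    """One-line report: total wall time plus generation speed."""
    total_s = res["total_duration"] / 1e9
    return f"total {total_s:.2f} s, {tokens_per_second(res):.1f} tokens/s"
```

Both functions accept the object returned by ollama.generate, or a plain dict such as {'total_duration': 4_000_000_000, 'eval_count': 50, 'eval_duration': 2_000_000_000}, which is an illustrative value only.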

In addition, Ollama also provides a chat mode for dialogue with the model.

    from ollama import chat

    # stream=True yields the reply piece by piece instead of waiting for the full text
    stream = chat(
        model='qwen3:0.6b',
        messages=[{'role': 'user', 'content': 'Why is the sky blue?'}],
        stream=True,
    )

    for chunk in stream:
        print(chunk['message']['content'], end='', flush=True)

For detailed usage, refer to:

https://github.com/ollama/ollama-python
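The chat call is stateless: the model only sees the messages you pass in. For a multi-turn dialogue the application has to keep the history itself. Below is a minimal sketch of that bookkeeping; the Conversation class and its max_messages limit are hypothetical names I chose, and the trimming rule (keep only the most recent messages) is just one simple way to stay within a small model's context.

```python
class Conversation:
    """Keeps the message list a chat call expects, trimmed to recent turns."""

    def __init__(self, system_prompt: str = "", max_messages: int = 8):
        if max_messages < 1:
            raise ValueError("max_messages must be at least 1")
        self.system_prompt = system_prompt
        self.max_messages = max_messages
        self.history: list[dict] = []

    def add(self, role: str, content: str) -> None:
        """Record one message and drop the oldest ones beyond the limit."""
        self.history.append({"role": role, "content": content})
        self.history = self.history[-self.max_messages:]

    def messages(self) -> list[dict]:
        """Return the system prompt (if any) followed by the recent history."""
        head = []
        if self.system_prompt:
            head.append({"role": "system", "content": self.system_prompt})
        return head + self.history
```

In use, you would call conv.add('user', text), pass conv.messages() as the messages argument of chat, and then store the reply with conv.add('assistant', ...).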

In Conclusion

Some may see this and ask, what’s the big deal? It’s just running a large model.

It’s important to note that the Raspberry Pi has no dedicated GPU for accelerating inference; the model runs entirely on its general-purpose CPU. Its configuration is even lower than that of many children’s watches. If the Raspberry Pi can run a large model, many other IoT devices can too, especially as large models keep getting smaller.
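A rough back-of-the-envelope calculation shows why small models fit on such devices: the weights need about (number of parameters × bits per weight ÷ 8) bytes. The sketch below applies that formula. The 4-bit and 16-bit widths are assumptions for illustration, not a statement about how qwen3:0.6b is actually packaged, and real memory use is higher once the context cache and runtime overhead are counted.

```python
def approx_weight_gb(n_params: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights in gigabytes (10^9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# Nominal 0.6 billion parameters at two assumed precisions
for bits in (4, 16):
    print(f"{bits}-bit: about {approx_weight_gb(0.6e9, bits):.1f} GB of weights")
```

Under these assumptions the weights of a 0.6B model come to a fraction of a gigabyte at 4 bits, which is why boards without a dedicated GPU can still hold them in RAM.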

The Raspberry Pi can connect to many peripherals, such as cameras, microphones, and speakers. Suppose I connect a microphone and a speaker, convert the audio from the microphone into text, send that text to the large model, and then play the model’s output through the speaker. Wouldn’t that be a local chatbot that relies on neither the internet nor a large model API?
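The pipeline described above can be sketched as a loop over three pluggable stages. Everything here is hypothetical scaffolding: transcribe, ask_model, and speak are placeholders for whatever speech-to-text engine, model call, and text-to-speech engine you choose, and none of them is a real library function.

```python
from typing import Callable, Iterable, Iterator


def voice_loop(
    audio_chunks: Iterable[bytes],
    transcribe: Callable[[bytes], str],   # speech-to-text stage (placeholder)
    ask_model: Callable[[str], str],      # e.g. a wrapper around the local model
    speak: Callable[[str], None],         # text-to-speech stage (placeholder)
) -> Iterator[tuple[str, str]]:
    """Run microphone audio through text, the model, and back to speech."""
    for chunk in audio_chunks:
        heard = transcribe(chunk).strip()
        if not heard:
            continue  # skip silence or unrecognized audio
        reply = ask_model(heard)
        speak(reply)
        yield heard, reply
```

Passing the stages in as functions keeps the loop independent of any particular audio library, so each piece can be swapped or tested with simple stand-ins.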

Moreover, different peripherals can enable different functions. Let’s not forget that Qwen3 also supports MCP. This time I covered how to run the model; next time I will discuss how to run MCP.

AI’s future is certainly not limited to large models and applications; AI hardware is an ocean of possibilities. In the past, it was often said that software defines hardware; in the AI era, it is intelligent agents that define hardware.

#AI #AI tools #DeepSeek #Qwen3 #large models #Raspberry Pi 5 #IoT #AI hardware

Hello, I am Jiang Feng, with a master’s degree in Electronic Information Engineering, primarily a programmer. In the AI era, I am starting my own media IP journey. Since graduation, I have changed jobs once and worked at two companies. My next job: digital nomad.
