(For algorithm enthusiasts, star this post to hone your programming skills)

Source: Machine Heart

He is the father of the C language, a Turing Award winner in 1983, and a key developer of Unix. However, he missed out on his PhD due to his “rebellious” nature, and his doctoral thesis was lost for half a century. Now, this mysterious doctoral thesis has finally resurfaced.

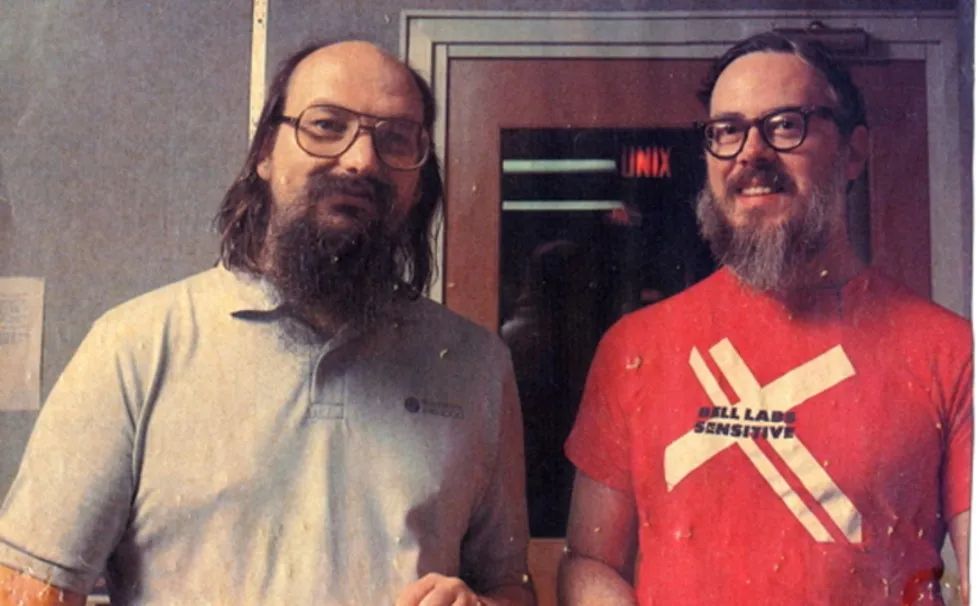

Many people may have heard of Dennis Ritchie. In the late 1960s, he graduated from Harvard University with a degree in Applied Mathematics and “followed in his father’s footsteps” by joining Bell Labs, where he spent his entire career. Shortly after joining Bell Labs, he collaborated with Ken Thompson to develop the Unix operating system and the enduring C language. Thompson led the system’s development, while Ritchie spearheaded the creation of the C language. After the C language was released, Thompson rewrote Unix using it. In 1983, Dennis Ritchie and Ken Thompson jointly received the Turing Award.Half a century later, Unix has become the foundation for most operating systems in the digital world, while C has become one of the most popular programming languages worldwide.

Ken Thompson and Dennis RitchieAlthough Dennis Ritchie passed away in 2011, Bell Labs still maintains his personal homepage. On this page, Ritchie describes his academic journey in computer science in his characteristic dry tone:“I studied undergraduate physics at Harvard and further pursued applied mathematics… My doctoral thesis (1968) was on subrecursive hierarchies. My undergraduate studies made me realize that my intellect was insufficient to become a physicist, and moving towards computers seemed quite promising. My graduate experience further clarified that my intellect was also insufficient to make me an expert in algorithm theory. I personally preferred procedural languages over functional languages.”Regardless of whether these self-assessments are objective, the path Ritchie chose indeed led him to a field where he could shine.Despite Ritchie’s fame in the computer field, few people know that his doctoral thesis has rarely been seen, as it was lost.That’s right, it was lost; it was neither published nor included in any public literature, and even the Harvard University library’s catalog and thesis database could not locate it. When Ritchie passed away in 2011, his sister Lynn meticulously searched through Harvard’s catalog records and other channels but found no copy.However, perseverance paid off, and she eventually found a copy from Ritchie’s advisor’s widow. But due to the lack of a public copy, only a handful of people had read this thesis over the past half-century.Why did this happen?In Ritchie’s self-description, we note that he did not explicitly state that he obtained his PhD based on the thesis he wrote in 1968. The reality is: he did not obtain a PhD.What happened in between? Ritchie’s graduate school classmate, MIT professor Albert Meyer, provided an unexpected answer.Because he didn’t want to pay the binding fee, the doctoral thesis was lost for over half a centuryAlbert Meyer recalled:“The explanation I heard from our advisor Pat Fischer was that Harvard had a rule: to obtain a PhD, one had to submit a bound copy of the thesis to the university library, and only then would the library provide a certificate for the PhD. At that time, Dennis had already submitted the thesis to the thesis review committee and received approval. He even typed up a copy to submit to the library, but the library told him that the thesis needed to be bound before submission. At that time, the binding fee was not a small amount… It wasn’t so expensive that he couldn’t afford it, but it was still quite a sum. According to Pat, Dennis’s attitude was: ‘If Harvard Library wants a bound thesis, they should pay for it themselves; I won’t pay!’ Clearly, he did just that and thus did not obtain his PhD.”So, the reason this big shot did not obtain his doctoral thesis was not due to issues with the thesis itself, but rather because of his “rebellious” nature, refusing to pay the binding fee!After extensive inquiries, Lynn confirmed that Ritchie indeed did not submit a bound thesis and did not obtain a PhD from Harvard, but Ritchie’s brother John believes that his “rebelliousness” was not solely due to the binding fee: Ritchie had already secured a dream job as a researcher at Bell Labs, and he was the kind of person who “didn’t sweat the small stuff”.

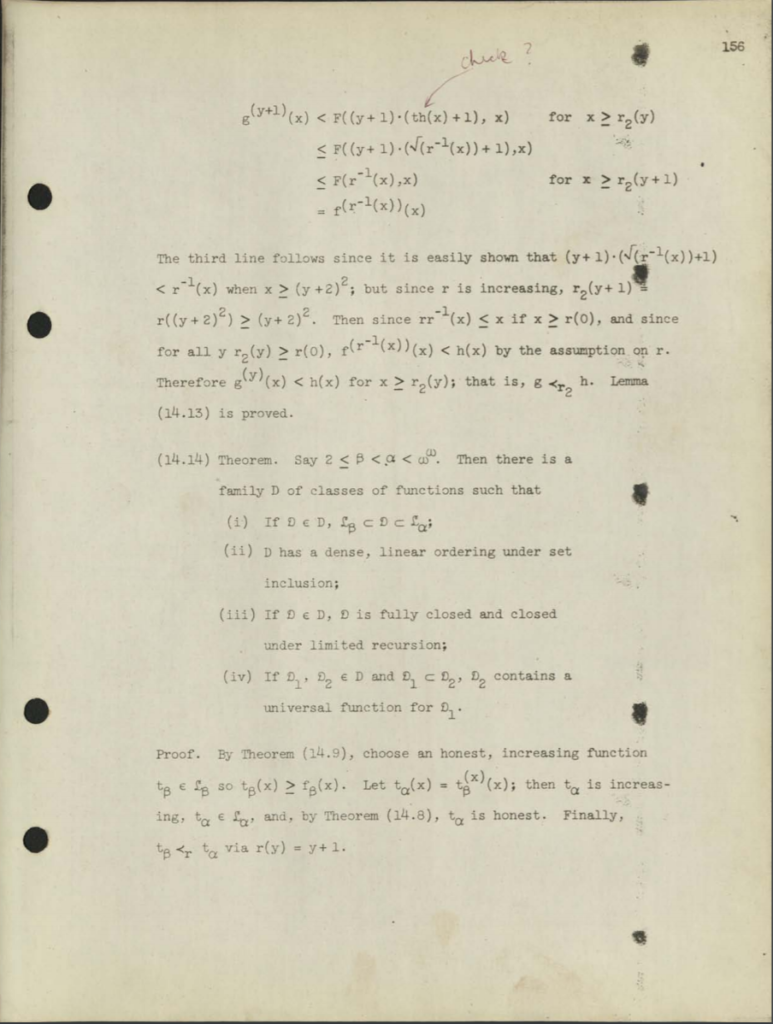

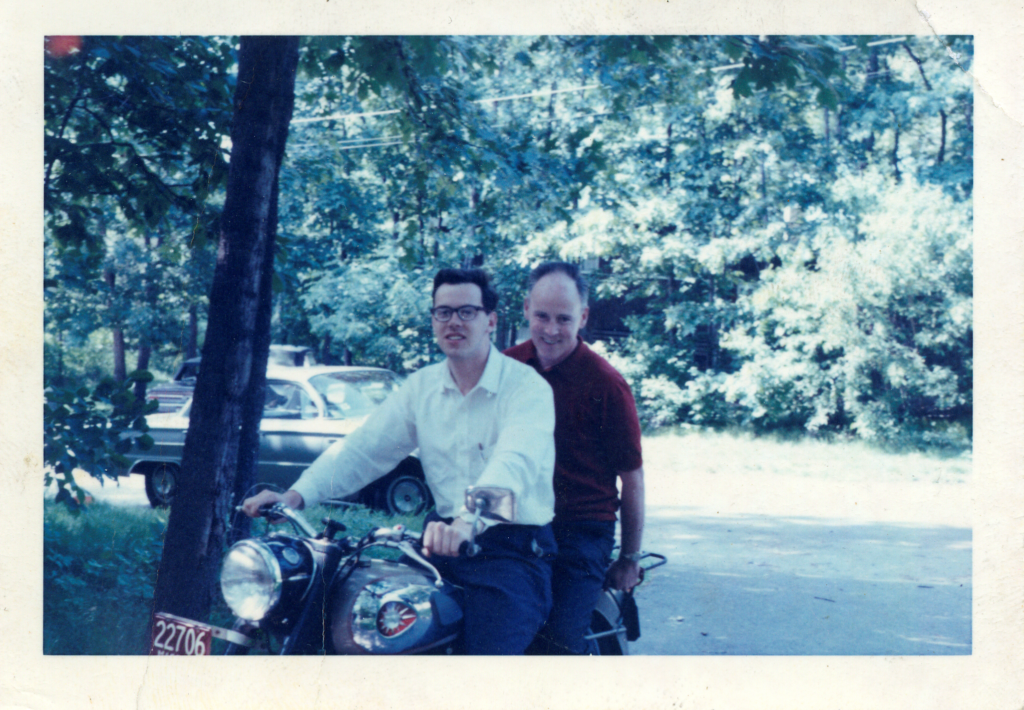

When Dennis Ritchie first entered Bell Labs, he worked with his father Alistair Ritchie (left) and electronic switch pioneer William Keister (middle).Recently, Ritchie’s family donated some of his belongings to the Computer History Museum (CHM), the most important of which is a photocopy of Ritchie’s doctoral thesis, marking the first public appearance of this thesis in half a century. Also donated were early source codes of Unix (1970-71).This thesis, written in 1968, is titled “Program Structure and Computational Complexity”; Ritchie was only 27 at the time. Now, Ritchie has passed away, and the thesis has long since faded and yellowed.

The manuscript of Dennis Ritchie’s lost thesis is publicly available for the first time.Along with the photocopy, an electronic version of the thesis has also been released.Thesis link:https://archive.computerhistory.org/resources/access/text/2020/05/102790971/Ritchie_dissertation.pdfPerhaps this thesis can give us a glimpse into the early development of computer science and the challenges faced by the pioneers of that time. Additionally, it can remind us how far we have come on this path and the changes technology has undergone in a person’s brief lifetime.Decoding Dennis Ritchie’s Doctoral Thesis

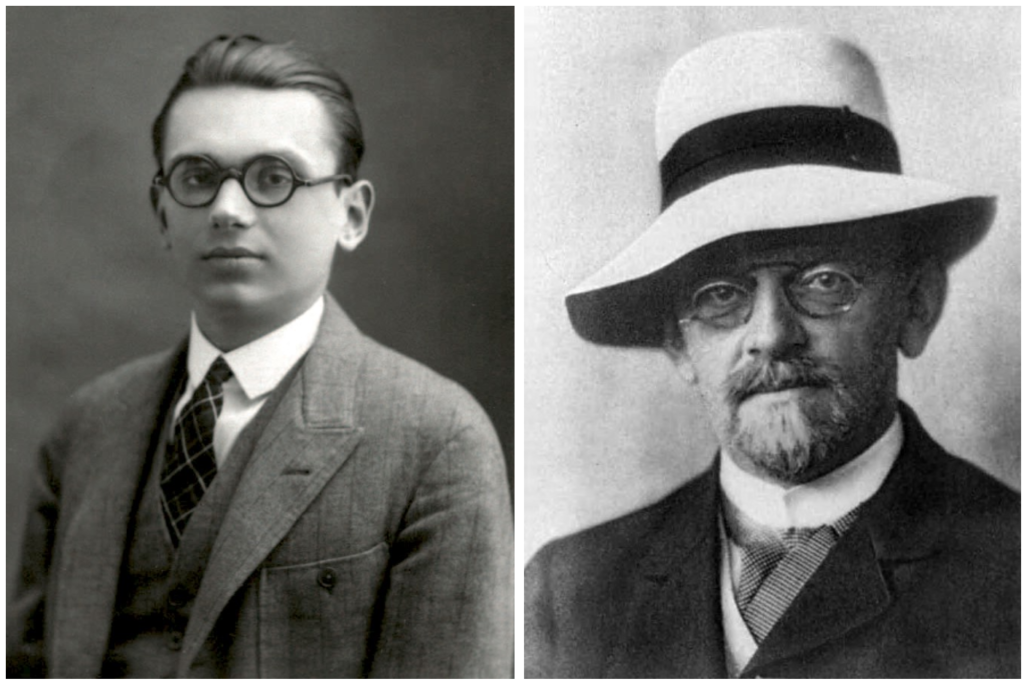

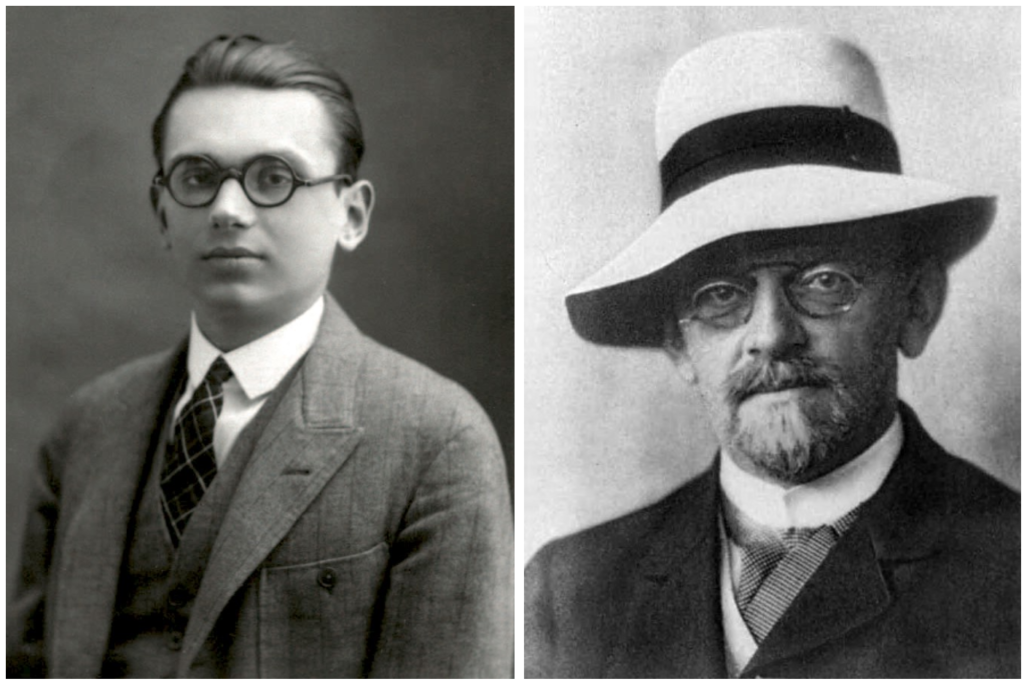

Restoring and making Dennis Ritchie’s thesis manuscript public is one thing; understanding it is another.To understand the content of this thesis, we need to return to the early 20th century, a time when mathematicians, philosophers, and logicians were exploring the ultimate foundations of mathematics.For several centuries prior, the characteristics of mathematical knowledge—exactitude and certitude—placed it in a special, even sacred position. Philosophical reflections on the sources or foundations of these mathematical characteristics can be traced back at least to Pythagoras and Plato, and in the early 20th century, influential mathematicians and philosophers established formal logic (expressing rules and reasoning steps using symbolic systems) as the foundation of mathematics.In the 1920s, German mathematician David Hilbert attempted to defend the view of formal logic as the foundation of mathematics and had a significant impact. Specifically, Hilbert believed that one could construct certain properties of mathematics through specific proofs in formal logic, such as the absence of contradictions in mathematics and that any mathematical statement is either true or false.The proofs advocated by Hilbert were “finitist,” relying on the manipulation of formal logic’s symbolic expressions using simple, almost mechanical rules.In the 1930s, as people sought such symbolic logical manipulation rules, mathematicians and philosophers linked them to computation and established rigorous processes for human “computers” and mechanical calculators to perform mathematical operations.Kurt Gödel provided such proofs advocated by Hilbert but demonstrated the opposite of what Hilbert expected. Gödel’s logic did not show that the logic ensuring everything in mathematics is correct could be proven; rather, it led to the opposite conclusion, namely Gödel’s incompleteness theorem.Gödel’s shocking result relied on arguments about specific mathematical objects known as “primitive recursive functions.” The emphasis of Gödel’s recursive functions is that they are computable and depend on “finite processes,” which are the kind of simple, almost mechanical rules that Hilbert envisioned.

Left: Gödel as a student (1925); Right: David Hilbert (1912).After Gödel, American mathematician Alonzo Church formed logical proofs using similar computability arguments, which not only demonstrated that mathematics is not always decidable but that some mathematical statements cannot even be determined to be true or false. Church’s proof was based on the concept of “effectively calculable functions,” which was based on Gödel’s recursive functions.Almost simultaneously, British mathematician Alan Turing constructed a proof with the same result, but his proof was based on the concept of “computability” defined by the operations of abstract “computing machines.” This abstract Turing machine could perform any computation and later became a fundamental basis for theoretical computer science.In the following decades, before computer science became an established discipline, mathematicians, philosophers, and others began to explore the nature of computation, gradually distancing themselves from the foundations of mathematics.As Albert Meyer recounted in an interview:“In the 1930s and 1940s, ‘what is computable and what is not computable’ received widespread research and understanding. Gödel and Turing placed logical limits on what is computable and non-computable. However, new ideas emerged in the 1960s: ‘Let’s try to understand what can be done with computation,’ and it was then that the concept of computational complexity emerged… You can do everything through computation, but not everything is that easy… What will the effects of computation be?”With the rise of electronic digital computation, the question for these researchers was no longer about the impact of logical arguments on the essence of mathematics, but rather the revelation of the limitations of computability itself.As these limitations were fully understood, researchers’ interests shifted to the essential questions of computability within these limitations.

MIT Professor Albert Meyer.The exploration of the above questions partly took place in the mid-1960s. At that time, Dennis Ritchie and Albert Meyer both entered Harvard University’s Applied Mathematics Department for graduate studies, which was often the place where electronic digital computation practices took root on campus. Meyer recalled:“Applied mathematics is a vast discipline, and this computational theory is just a small, new part of it.”After entering the Applied Mathematics Department at Harvard, Ritchie and Meyer became increasingly interested in computational theory, leading them to find Patrick Fischer as their advisor. Fischer had just obtained his PhD and had not been teaching at Harvard for long, coinciding with Ritchie and Meyer’s graduate studies. Meyer recalled:“Patrick was very interested in understanding the nature of computation. He wanted to know what makes things complex and what makes them simple… What can different types of programs do?”A Summer AssignmentAfter a year of graduate study, Fischer hired Ritchie and Meyer as summer research assistants. Meyer was assigned to research an “open problem” that Fischer had discovered in computational theory and to report back before the end of summer. Fischer was about to leave Harvard at that time.Meyer spent the entire summer working hard on this problem but completed only a small part before the summer ended. Soon after, while attending a seminar by Fischer, Meyer suddenly thought of a solution and excitedly shared this breakthrough with Fischer. To Meyer’s surprise and slight disappointment, Fischer told him that Ritchie had already thought of the solution. It turned out that Fischer had assigned the same problem to both of them without informing them that they were working on the same issue!

Dennis Ritchie and his father Alistair E. Ritchie.The problem Fischer assigned to both was a significant one regarding computational complexity, relating to the relative ease or time of computing one thing compared to another. Recall that Gödel used primitive recursive functions to illustrate the computability of finite processes, which was a key point in his famous paper. In the 1950s, Polish mathematician Andrzej Grzegorczyk defined the hierarchy of these recursive functions based on the rate of growth of functions. Fischer’s summer problem was to have Meyer and Ritchie explore the relationship between this function hierarchy and computational complexity.Fortunately, Meyer’s appreciation for Ritchie’s solution offset his disappointment; he recalled, “… The concept of loop programs proposed by Dennis was simply beautiful and so important; it was a very good explanatory mechanism and a clever way to clarify the subject. I didn’t even care whether he solved the problem.”The loop program proposed by Ritchie during that summer became the core of his 1968 doctoral thesis. In fact, loop programs are very small, limited computer programs, and anyone who has written a loop program in BASIC using the FOR command will be familiar with them.In a loop program, you can set a variable to zero, increment a variable by 1, or move a variable’s value to another variable. That’s it. The only control available in a loop program is a simple loop, where a sequence of instructions is repeated a certain number of times. Importantly, loops can be “nested,” meaning loops within loops.Ritchie demonstrated in his doctoral thesis that these loop functions are precisely what is needed to produce Gödel’s primitive recursive functions, and only these functions; they perfectly reflect the hierarchy proposed by Grzegorczyk.Gödel believed that his recursive functions had strong computability, while Ritchie proved that loop programs were the appropriate tool to accomplish this task.Ritchie’s thesis showed that the degree of nesting of loop programs is a measure of their computational complexity and also a measure of the time required for their computation. Furthermore, he pointed out that evaluating the depth of loops corresponds exactly to Grzegorczyk’s hierarchy. The growth rate of primitive recursive functions is indeed related to their computational complexity; in fact, they are the same.Meyer recalled:“Loop programs were made into a very simple model that any computer scientist could immediately understand. When explaining the original recursive hierarchy, traditional formulas used very complex logical symbols to represent complicated syntax, which was hard for ordinary people to grasp. But now, you suddenly find that a three or four-line explanation can clearly describe loop programs in computer science.”Meyer explained:“Dennis was a very lovely, easy-going, humble person. Clearly, he was very smart, but also somewhat reserved… We discussed our co-authored paper ‘The Complexity of Loop Programs’; he read the paper and provided his feedback and explained loop programs to me.”In 1967, this paper was published by ACM. It marked the beginning of a prolific era in Meyer’s theoretical computer science career and was an important step in his professional journey. However, for him and Ritchie, this collaboration was the end.“It was really disappointing. I wanted to collaborate with him because he seemed smart, friendly, and it was fun to work with him. But, you know, he was already doing other things. He was playing ‘Spacewar’ all night!” Meyer recalled the scene at that time.Let’s return to Ritchie’s personal assessment mentioned at the beginning of the article: “My graduate experience made me realize that my intellect was insufficient to become an expert in algorithm theory.”After understanding this doctoral thesis, we find that he seems to have been misleading. Perhaps, compared to theoretical research, implementation was more enticing for Ritchie, which is why he chose to explore the boundaries, essence, and possibilities of computation by creating new systems and languages.Reference link:https://computerhistory.org/blog/discovering-dennis-ritchies-lost-dissertation/

– EOF –

Recommended Reading Click the title to jump

1. What is the most complex C language program you have seen or written?

2. Linus is at it again! He angrily tells Intel to “go die”

3. The core idea of the Two Sum problem

If you found this article helpful, please share it with more people.

Follow ‘Algorithm Enthusiasts’ and star this post to hone your programming skills.

Great article, I’mreading it❤️