
Abstract

In public cloud environments, users no longer own the hardware resources of the infrastructure; software runs in the cloud, and business data is also stored in the cloud. Therefore, security issues are one of the main concerns for users when deciding whether to migrate to the cloud.

Public cloud security spans several areas, including virtualization security, management security, and data security. Virtualization security is the foundation: only once it is addressed can the security problems specific to cloud environments be resolved step by step, ultimately giving users a secure cloud environment.

A cloud service provider's ability to prevent, respond to, and resolve security issues is therefore particularly important.

1. Vulnerability Overview

Recently, a buffer overflow vulnerability (CVE-2019-14835) was discovered in the vhost kernel module of the QEMU-KVM virtualization platform. It can be exploited during the live migration (hot migration) of a virtual machine. A privileged guest user can pass descriptors with an invalid length to the host and escalate privileges there, carrying out a virtual machine escape: the guest can crash the host kernel or execute malicious code in the host kernel and gain control of the host.

2. Introduction to virtio Networking

Virtio is a standardized open interface developed for virtual machines to access simplified devices, such as block devices and network adapters. Virtio-net is a virtual Ethernet card and is the most complex device supported by virtio to date.

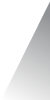

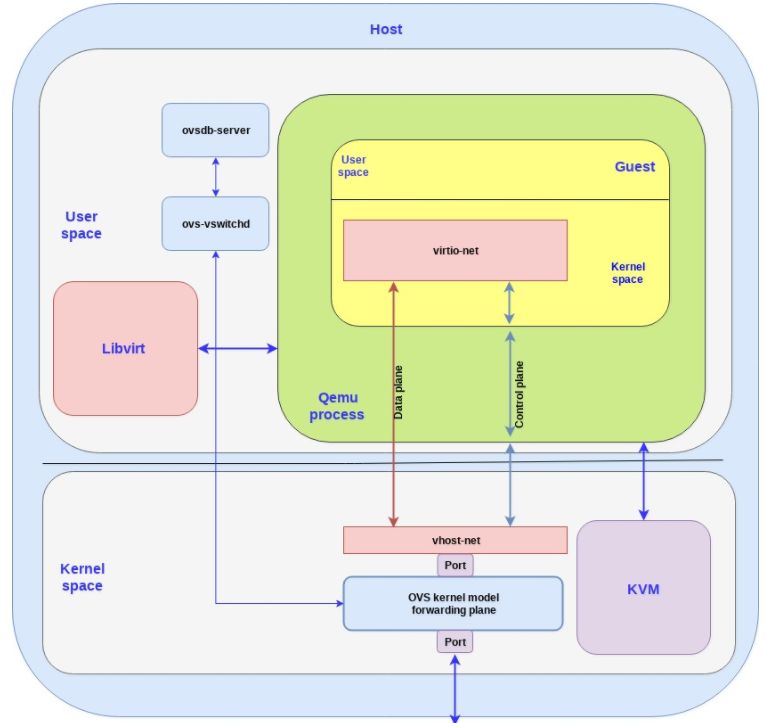

Figure 1: vhost-net / virtio-net Architecture

Virtio networking has two layers (as shown in Figure 1):

1. Control plane: Used for capability exchange negotiation between the host and the guest, both for establishing and for terminating the data plane.

2. Data plane: Used for transmitting the actual data (packets) between the host and the guest.

The control plane is based on the virtio specification, defining how to create control and data planes between the guest and host. For performance reasons, the data plane does not adopt the virtio specification but uses the vhost protocol for data transmission.

As shown in Figure 1, the component located in the guest in virtio is virtio-net, which runs as a frontend in the guest kernel space, while the component located in the host is vhost-net, which runs as a backend in the host kernel space.

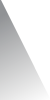

Figure 2: Data Transmission in Virtio Networking

In the actual data plane communication process, sending and receiving are accomplished through dedicated queues (as shown in Figure 2). For each virtual CPU, a send and a receive queue are created.

The above describes how the guest uses virtio networking to pass packets to the host kernel. To forward these packets to other guests running on the same host, or to destinations outside the host (such as the internet), OVS is required.

OVS is a software switch that allows packet forwarding in the kernel. It consists of a user space part and a kernel part (as shown in Figure 3):

User space: Includes the database for managing and controlling the switch (ovsdb-server) and the OVS daemon (ovs-vswitchd).

Kernel space: Includes the ovs kernel module responsible for the data path or forwarding plane.

Figure 3: OVS Connecting Virtio Model

As shown in Figure 3, the OVS controller communicates with ovsdb-server and with the forwarding plane in the kernel. Two ports are used to push packets into and out of OVS: one connects the OVS kernel forwarding plane to the physical NIC, and the other connects it to the vhost-net backend.

3. Attack Analysis

This vulnerability exists in the host's vhost/vhost_net kernel module, which is the virtio network backend. The bug is triggered during live migration, when QEMU needs to know which pages are dirty. vhost/vhost_net records the dirty log in a kernel buffer but does not check the bounds of that buffer.

An attacker can therefore forge the desc table in the guest and then either wait for a migration or take actions (such as increasing the workload) that prompt the cloud provider to migrate the guest. When the guest is migrated, the host kernel's log buffer overflows.

The vulnerability call path is as follows:

handle_rx (drivers/vhost/net.c) -> get_rx_bufs -> vhost_get_vq_desc -> get_indirect (drivers/vhost/vhost.c)

In the VM guest, the attacker can create an indirect desc table in the VM driver so that vhost enters the above call path during live migration of the VM and ultimately reaches the function get_indirect.

The detailed triggering logic of the vulnerability is as follows (taking the BC-Linux7.5 system with kernel 4.14.78-300.el7.bclinux as an example, omitting the information of the calling functions before and after):

    static int get_indirect(struct vhost_virtqueue *vq,
                            struct iovec iov[], unsigned int iov_size,
                            unsigned int *out_num, unsigned int *in_num,
                            struct vhost_log *log, unsigned int *log_num,
                            struct vring_desc *indirect)
    {
        struct vring_desc desc;
        unsigned int i = 0, count, found = 0;
        /* len is controlled by the VM guest */
        u32 len = vhost32_to_cpu(vq, indirect->len);
        struct iov_iter from;
        int ret, access;

        /* …omitted… */

        /* so count is also controlled by the VM guest */
        count = len / sizeof desc;
        /* Buffers are chained via a 16 bit next field, so
         * we can have at most 2^16 of these. */
        /* count can be as large as USHRT_MAX + 1, i.e. 2^16 */
        if (unlikely(count > USHRT_MAX + 1)) {
            vq_err(vq, "Indirect buffer length too big: %d\n",
                   indirect->len);
            return -E2BIG;
        }

        do {
            unsigned iov_count = *in_num + *out_num;
            /* so this loop can execute up to USHRT_MAX + 1 times */
            if (unlikely(++found > count)) {
                vq_err(vq, "Loop detected: last one at %u "
                       "indirect size %u\n",
                       i, count);
                return -EINVAL;
            }
            /* each desc is copied from guest memory, so the guest controls it */
            if (unlikely(!copy_from_iter_full(&desc, sizeof(desc), &from))) {
                vq_err(vq, "Failed indirect descriptor: idx %d, %zx\n",
                       i, (size_t)vhost64_to_cpu(vq, indirect->addr) + i * sizeof desc);
                return -EINVAL;
            }
            /* …omitted… */
            if (desc.flags & cpu_to_vhost16(vq, VRING_DESC_F_WRITE))
                access = VHOST_ACCESS_WO;
            else
                access = VHOST_ACCESS_RO;

            /* if desc.len is 0, translate_desc returns no error and ret == 0 */
            ret = translate_desc(vq, vhost64_to_cpu(vq, desc.addr),
                                 vhost32_to_cpu(vq, desc.len), iov + iov_count,
                                 iov_size - iov_count, access);
            /* …omitted… */
            /* If this is an input descriptor, increment that count. */
            if (access == VHOST_ACCESS_WO) {
                /* because ret == 0, in_num does not change (if in_num grew
                 * beyond iov_size, translate_desc would return an error) */
                *in_num += ret;
                /* during live migration, the log buffer is not NULL */
                if (unlikely(log)) {
                    /* log_num can grow to USHRT_MAX, but the log buffer is
                     * far smaller than that, so the log buffer overflows */
                    log[*log_num].addr = vhost64_to_cpu(vq, desc.addr);
                    log[*log_num].len = vhost32_to_cpu(vq, desc.len);
                    ++*log_num; /* keeps counting */
                }
            } else {
                /* …omitted… */
            }
        } while ((i = next_desc(vq, &desc)) != -1);
        return 0;
    }

The code above shows that in each loop iteration in_num grows by ret while log_num grows by 1. As long as ret is at least 1, the condition log_num < in_num holds whenever a log entry is written. The code, however, overlooks the special case in which in_num does not grow. If log_num keeps increasing while in_num stays the same, log_num eventually indexes past the end of the host's log array, which is an out-of-bounds access.

In other words, if the virtual machine maliciously creates a vring desc with desc.len = 0, in_num stays unchanged while log_num increases, which sets up part of the conditions for this bug. Combined with live migration of the virtual machine (during which the relevant code is called under certain conditions), the guest can ultimately crash the host kernel or execute malicious code in the host kernel and gain control of the host.

It should also be noted that a similar issue exists in another function of the vhost kernel module, vhost_get_vq_desc.

4. Remediation Plan

Based on the above analysis, the fix is to skip logging when desc.len is 0 (that is, when translate_desc returns 0 in ret). In that case log_num no longer increases, so the condition log_num < in_num always holds when a log entry is written.

The detailed patch changes are as follows:

    diff --git a/drivers/vhost/vhost.c b/drivers/vhost/vhost.c
    index 34ea219..acabf20 100644
    --- a/drivers/vhost/vhost.c
    +++ b/drivers/vhost/vhost.c
    @@ -2180,7 +2180,7 @@ static int get_indirect(struct vhost_virtqueue *vq,
             /* If this is an input descriptor, increment that count. */
             if (access == VHOST_ACCESS_WO) {
                 *in_num += ret;
    -            if (unlikely(log)) {
    +            if (unlikely(log && ret)) {
                     log[*log_num].addr = vhost64_to_cpu(vq, desc.addr);
                     log[*log_num].len = vhost32_to_cpu(vq, desc.len);
                     ++*log_num;
    @@ -2321,7 +2321,7 @@ int vhost_get_vq_desc(struct vhost_virtqueue *vq,
                 /* If this is an input descriptor,
                  * increment that count. */
                 *in_num += ret;
    -            if (unlikely(log)) {
    +            if (unlikely(log && ret)) {
                     log[*log_num].addr = vhost64_to_cpu(vq, desc.addr);
                     log[*log_num].len = vhost32_to_cpu(vq, desc.len);
                     ++*log_num;

4.1 Upgrade Content

The operating system team promptly followed up on this vulnerability information and released a security update announcement. The detailed security update content is as follows:

| System Version | Vulnerability Fix Version | Announcement Details |
| --- | --- | --- |
| BC-Linux7.2 | kernel-3.10.0-327.82.1.el7 | BLSA-2019:0050 – [Important] Kernel Security Update Announcement |
| BC-Linux7.3 | kernel-3.10.0-514.69.1.el7 | BLSA-2019:0051 – [Important] Kernel Security Update Announcement |
| BC-Linux7.4 | kernel-3.10.0-693.59.1.el7 | BLSA-2019:0048 – [Important] Kernel Security Update Announcement |
| BC-Linux7.5 | kernel-3.10.0-862.41.2.el7 | BLSA-2019:0047 – [Important] Kernel Security Update Announcement |
| BC-Linux7.6 | kernel-3.10.0-957.35.2.el7 | BLSA-2019:0046 – [Important] Kernel Security Update Announcement |

BC-Linux users should follow the steps in the announcements above: upgrade the system kernel with the yum command, then reboot the system so that the patched kernel takes effect.

4.2 Kernel Hot Patch

Upgrading the kernel requires a reboot, and a reboot means business interruption, so this approach is generally not adopted in online production environments.

For systems running online, we have launched a hot patch remediation plan that can fix this vulnerability without rebooting the system, ensuring that user business remains uninterrupted.

Taking the BC-Linux7.3 system with kernel 3.10.0-514.1.el7 as an example, the usage of the hot patch is as follows:

1. Install kpatch user-space tool and patch package

Download and install the hot patch management tool provided by the BC-Linux team for the BC-Linux7.3 system:

bclinux-kpatch-0.6.0-2.el7_3.bclinux.x86_64.rpm

Download and install the hot patch file provided by the BC-Linux team for the BC-Linux7.3 system:

bclinux-kpatch-patch-7.3-5.el7_3.bclinux.x86_64.rpm

2. Apply the hot patch to the system

First, check the current hot patch information of the system:

    [root@bclinux-vm1 ~]# kpatch list
    Loaded patch modules:

    Installed patch modules:
    vhost_make_sure_log_num_CVE_2019_14835 (3.10.0-514.1.el7.x86_64)

Start the kpatch service to load all installed kpatch hot patch modules, and enable the service so that it starts at boot and the hot patch remains in effect after a system reboot:

    [root@bclinux-vm1 ~]# systemctl start kpatch
    [root@bclinux-vm1 ~]# systemctl enable kpatch

3. Verify that the hot patch is effective

    [root@bclinux-vm1 ~]# kpatch list
    Loaded patch modules:
    vhost_make_sure_log_num_CVE_2019_14835 [enabled]

    Installed patch modules:
    vhost_make_sure_log_num_CVE_2019_14835 (3.10.0-514.1.el7.x86_64)

Through the above hot patch solution, the issue has been resolved, and we have also verified the effectiveness of the hot patch using the POC scheme published by the community.

The CVE-2019-14835 vulnerability discovered this time exists at the kernel level of the virtualization host, breaking the security boundaries between the virtual machine and the host, as well as between virtual machines (previous vulnerabilities we discovered were more at the user space level). The operating system team responded promptly to the related issues and provided a hot patch solution for the online environment. Currently, we are implementing the hot patch solution for online environments such as mobile cloud as planned, ensuring that users experience no disruption and that their business continues to operate stably.


Source: Su Yan Da Yun Ren
