Overview of Computer Development

A computer is an electronic device for high-speed computation that can perform both numerical and logical operations and can store data in memory. It is a modern electronic device that automatically and rapidly processes large amounts of data according to stored programs. A computer with no software installed is called a bare machine, or "bare metal."

Computers are commonly classified into five categories: supercomputers, industrial control computers, network computers, personal computers, and embedded computers. Emerging types include biological computers, photonic computers, and quantum computers.

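John von Neumann is often called the "father of the computer." He did not build the first computer, but his 1945 report on the EDVAC described the stored-program architecture that most computers still follow.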

The computer is one of the most important scientific and technological inventions of the 20th century. It has had a profound impact on human production and social life and has developed rapidly ever since. Its applications, first limited to military and scientific research, now reach every sector of society, and the massive computer industry it created has driven technological progress worldwide and brought about profound social change. Computers are now common in schools and businesses, have entered ordinary homes, and have become an indispensable tool of the information society.

The following is a brief history of computer development:

In 1642, French mathematician and philosopher Blaise Pascal invented one of the earliest mechanical calculators, the Pascaline, which used interlocking gears to perform addition and subtraction.
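Gear-based addition comes down to decimal wheels that carry into one another. The sketch below is a hypothetical software model, not a reconstruction of Pascal's actual mechanism: each list entry stands for one wheel, a wheel rolling past 9 advances its neighbour by one, and subtraction is done by adding a complement, a method commonly associated with the Pascaline. The names `add_on_wheels`, `subtract_on_wheels`, and `read` are illustrative only.

```python
# Hypothetical model of decimal counting wheels with carry propagation.
# wheels[0] is the units wheel, wheels[1] the tens wheel, and so on.

def add_on_wheels(wheels, position, amount):
    """Turn the wheel at `position` forward by `amount` steps.

    When a wheel passes from 9 back to 0 it advances the next wheel,
    like a carry lever nudging the neighbouring gear. A carry out of
    the top wheel is lost, as on any fixed-width machine.
    """
    carry = amount
    i = position
    while carry and i < len(wheels):
        total = wheels[i] + carry
        wheels[i] = total % 10
        carry = total // 10
        i += 1
    return wheels


def subtract_on_wheels(wheels, b):
    """Subtract b by adding its nines' complement, then adding 1.

    This equals adding the ten's complement of b; the overflow carry
    is discarded, leaving a - b when a >= b.
    """
    for i in range(len(wheels)):
        digit = (b // 10**i) % 10
        add_on_wheels(wheels, i, 9 - digit)
    add_on_wheels(wheels, 0, 1)
    return wheels


def read(wheels):
    """Return the number currently shown on the wheels."""
    return sum(d * 10**i for i, d in enumerate(wheels))


wheels = [0, 0, 0, 0]            # four wheels showing 0000
add_on_wheels(wheels, 0, 7)      # 0007
add_on_wheels(wheels, 0, 5)      # units wheel wraps and carries: 0012
print(read(wheels))              # 12
subtract_on_wheels(wheels, 5)
print(read(wheels))              # 7
```

Because a carry out of the top wheel is simply dropped, the model wraps around like a fixed-width register: four wheels at 9999 plus 1 read 0000.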

In 1889, American scientist Herman Hollerith developed an electric tabulating machine that stored data on punched cards and read them electrically.

In 1930, American scientist Vannevar Bush built the differential analyzer, a pioneering analog computer for solving differential equations.

In 1933, American mathematician D.N. Lehmer built an electrical computer to factor all natural numbers between 1 and 10 million into prime factors.

On February 14, 1946, the world’s first general-purpose electronic digital computer, the Electronic Numerical Integrator and Computer (ENIAC), commissioned by the U.S. military, was unveiled at the University of Pennsylvania. ENIAC was built at the university's Moore School for the Army's Ballistic Research Laboratory at the Aberdeen Proving Ground to compute ballistic tables. It used 17,840 vacuum tubes, measured 80 feet by 8 feet, weighed 28 tons, consumed 170 kW of power, performed 5,000 additions per second, and cost approximately $487,000. ENIAC was epoch-making, marking the beginning of the electronic computer era. Over the following 60 years, computer technology advanced at an astonishing pace: no other technology has improved its performance-to-price ratio by six orders of magnitude in just 30 years.
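Taking the six-orders-of-magnitude figure at face value and assuming, as an idealization, steady compound growth, the implied yearly improvement factor r and doubling time T work out as follows:

```latex
\begin{aligned}
r^{30} &= 10^{6} \;\Rightarrow\; r = 10^{6/30} = 10^{0.2} \approx 1.585 \\
T &= \frac{\log 2}{\log r} = \frac{30 \log_{10} 2}{6} = 5 \log_{10} 2 \approx 1.5\ \text{years}
\end{aligned}
```

In other words, price-performance would have improved by roughly 58% a year, doubling about every year and a half.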

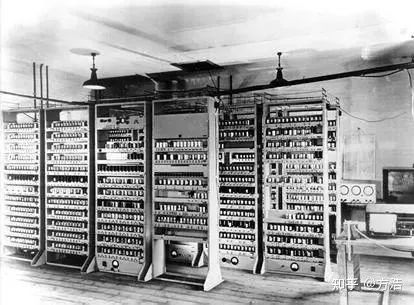

1st Generation: Vacuum Tube Digital Computers (1946–1958)

On the hardware side, logic elements were vacuum tubes; main memory used mercury delay lines, cathode-ray tube electrostatic memory, magnetic drums, and magnetic cores; and external storage used magnetic tape. Software was written in machine language and assembly language. Applications were mainly military and scientific computing.

These machines were large, power-hungry, and unreliable. They were also slow (typically thousands to tens of thousands of operations per second) and expensive, but they laid the foundation for later computers.

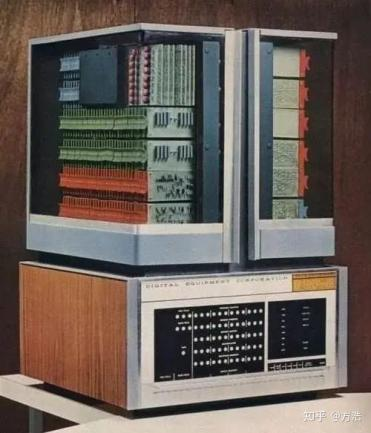

2nd Generation: Transistor Digital Computers (1958–1964)

On the hardware side, transistors replaced vacuum tubes as logic elements. On the software side, operating systems, high-level languages, and their compilers emerged. Applications centered on scientific computing and business data processing, and computers began to enter industrial control.

Compared with the first generation, these machines were smaller, used less power, were more reliable, and were faster (typically hundreds of thousands to millions of operations per second).

3rd Generation: Integrated Circuit Digital Computers (1964–1970)

On the hardware side, logic elements were small- and medium-scale integrated circuits (SSI and MSI), while main memory still used magnetic cores. On the software side, time-sharing operating systems and structured programming methods for large programs appeared. These machines were faster (typically millions to tens of millions of operations per second), far more reliable, and cheaper, and the industry moved toward general-purpose designs, compatible product families, and standardization. Applications expanded to include word processing and graphics processing.

4th Generation: Large-Scale Integrated Circuit Computers (1970–Present)

On the hardware side, logic elements were large-scale and very-large-scale integrated circuits (LSI and VLSI). On the software side, database management systems, network management systems, and object-oriented languages emerged. A landmark of this generation was the world’s first microprocessor, the Intel 4004, introduced in Silicon Valley in 1971, which opened the microcomputer era. Applications gradually spread from scientific computing and business management into the home.

Jiang Hai Large Computer

Editor: Wang Chenchen

Typesetting: Shen Hanyu

Review: Shang Lingling