4 Nginx Reverse Proxy

4.4 Implementing Load Balancing for HTTP Protocol Reverse Proxy

4.4.1 Related Directives and Parameters

Building on Nginx reverse proxying, ngx_http_upstream_module adds advanced features such as backend server grouping, weight allocation, status monitoring, and scheduling algorithms.

Module documentation: https://nginx.org/en/docs/http/ngx_http_upstream_module.html

    upstream name {
        server address [parameters];
    }
    # Defines a backend server group containing one or more servers
    # Once defined, the group is referenced in the proxy_pass directive; context: http

    server address [parameters];
    # Defines one backend server within an upstream block; context: upstream
    # address is the backend server: an IP address, hostname, or UNIX socket, optionally with a port number
    # parameters are optional and can be any of the following:
    #   weight=number       server weight, default 1
    #   max_conns=number    maximum number of simultaneous active connections to this server;
    #                       once the limit is reached, no further requests are sent to it;
    #                       default 0 means no limit
    #   max_fails=number    condition for marking the server unavailable: the number of failed
    #                       attempts to communicate with it within fail_timeout; default 1.
    #                       Checks are passive: they happen when a client request is scheduled
    #                       to the server (at TCP connection time), not through periodic probing
    #   fail_timeout=time   the window in which max_fails failures are counted, and also how long
    #                       the server then stays unavailable; after this time it is tried again
    #                       and, if reachable, rejoins scheduling; default 10 seconds
    #   backup              marks the server as a backup, used only when all other backend
    #                       servers are unavailable
    #   down                marks the server as unavailable; useful for taking a backend server
    #                       offline smoothly: new requests are no longer scheduled to it, and
    #                       existing connections are not affected

    hash key [consistent];
    # Schedules by hashing a user-specified key; the key can contain variables,
    # such as request header fields or the URI
    # If the server entries in the group change, the same key may hash to a different server
    # consistent enables consistent hashing, which keeps remapping to a minimum when the
    # server entries in this upstream change; suited to caching services, where it improves
    # the cache hit rate
    # context: upstream

    ip_hash;
    # Source address hash scheduling: hashes the client's remote_addr (the first 24 bits of
    # an IPv4 address, or the entire IPv6 address) to achieve session persistence
    # context: upstream

    least_conn;
    # Least connections scheduling: sends client requests to the backend server with the
    # fewest active connections, equivalent to the LC algorithm in LVS
    # Combined with weight, it achieves an effect similar to the WLC algorithm in LVS
    # context: upstream

    keepalive connections;
    # Maximum number of idle keep-alive connections to upstream servers kept by each worker
    # process; when this number is exceeded, the least recently used connections are closed
    # Not set by default; context: upstream

    keepalive_time time;
    # Maximum total time during which requests can be processed through one keep-alive
    # connection; after this time the connection is closed once the current request completes
    # Default 1h; context: upstream

4.4.2 Basic Configuration

Basic Usage

| Role | IP |
| --- | --- |
| Client | 10.0.0.208 |
| Proxy Server | 10.0.0.206 |
| Real Server – 1 | 10.0.0.210 |
| Real Server – 2 | 10.0.0.159 |

    # Proxy Server Configuration
    upstream group1 {
        server 10.0.0.210;
        server 10.0.0.159;
    }

    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://group1;
            proxy_set_header host $http_host;
        }
    }

    # Real Server-1 Configuration
    server {
        listen 80;
        root /var/www/html/www.m99-josedu.com;
        server_name www.m99-josedu.com;
    }

    [root@ubuntu ~]# cat /var/www/html/www.m99-josedu.com/index.html
    10.0.0.210 index

    # Real Server-2 Configuration
    server {
        listen 80;
        root /var/www/html/www.m99-josedu.com;
        server_name www.m99-josedu.com;
    }

    [root@ubuntu ~]# cat /var/www/html/www.m99-josedu.com/index.html
    10.0.0.159 index

    # Client Testing - Round Robin Scheduling to Backend Servers
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.210 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.210 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index

One Proxy Server Scheduling Multiple Upstreams

| Role | Upstream | IP |
| --- | --- | --- |
| Client | | 10.0.0.208 |
| Proxy Server | | 10.0.0.206 |
| Real Server – 1 | group1 | 10.0.0.210 |
| Real Server – 2 | group1 | 10.0.0.159 |
| Real Server – 3 | group2 | 10.0.0.110 |
| Real Server – 4 | group2 | 10.0.0.120 |

    # Proxy Server Configuration
    upstream group1 {
        server 10.0.0.210;
        server 10.0.0.159;
    }

    upstream group2 {
        server 10.0.0.110:8080;
        server 10.0.0.120:8080;
    }

    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://group1;
            proxy_set_header host $http_host;
        }
    }

    server {
        listen 80;
        server_name www.m99-josedu.net;
        location / {
            proxy_pass http://group2;
            proxy_set_header host $http_host;
        }
    }

    # Real Server-1 Configuration
    server {
        listen 80;
        root /var/www/html/www.m99-josedu.com;
        server_name www.m99-josedu.com;
    }

    [root@ubuntu ~]# cat /var/www/html/www.m99-josedu.com/index.html
    10.0.0.210 index

    # Real Server-2 Configuration
    server {
        listen 80;
        root /var/www/html/www.m99-josedu.com;
        server_name www.m99-josedu.com;
    }

    [root@ubuntu ~]# cat /var/www/html/www.m99-josedu.com/index.html
    10.0.0.159 index

    # Real Server-3 Configuration
    server {
        listen 8080;
        root /var/www/html/www.m99-josedu.net;
        server_name www.m99-josedu.net;
    }

    [root@ubuntu ~]# cat /var/www/html/www.m99-josedu.net/index.html
    10.0.0.110 index

    # Real Server-4 Configuration
    server {
        listen 8080;
        root /var/www/html/www.m99-josedu.net;
        server_name www.m99-josedu.net;
    }

    [root@ubuntu ~]# cat /var/www/html/www.m99-josedu.net/index.html
    10.0.0.120 index

    # Client Configuration
    [root@ubuntu ~]# cat /etc/hosts
    10.0.0.206 www.m99-josedu.com www.m99-josedu.net

    # Client group1 Testing
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.210 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.210 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index

    # Client group2 Testing
    [root@ubuntu ~]# curl http://www.m99-josedu.net
    10.0.0.110 index
    [root@ubuntu ~]# curl http://www.m99-josedu.net
    10.0.0.120 index
    [root@ubuntu ~]# curl http://www.m99-josedu.net
    10.0.0.110 index
    [root@ubuntu ~]# curl http://www.m99-josedu.net
    10.0.0.120 index

Setting Weights
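Before the weighted configuration, here is a minimal Python sketch of how a 3:1 weighting can interleave picks between two servers. It models the smooth weighted round-robin idea only; the function name `smooth_wrr` is illustrative, and a real scheduler also accounts for failures and connection limits, so the exact order of picks should be read as an assumption rather than a guarantee.

```python
def smooth_wrr(weights, picks):
    """Return `picks` server names chosen by smooth weighted round-robin.

    weights: dict mapping server name -> positive integer weight.
    """
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    order = []
    for _ in range(picks):
        # Every server gains its own weight on each round...
        for name, weight in weights.items():
            current[name] += weight
        # ...the current leader is chosen (the first maximum wins ties)...
        best = max(current, key=current.get)
        # ...and the chosen server pays back the total weight.
        current[best] -= total
        order.append(best)
    return order


group1 = {"10.0.0.210": 3, "10.0.0.159": 1}
for server in smooth_wrr(group1, 8):
    print(server)
```

Over any four consecutive picks the heavier server is chosen three times, which is the ratio the weight parameter asks for.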

    # The default weight of each server is 1; this configuration schedules the two servers in a ratio of 3:1
    upstream group1 {
        server 10.0.0.210 weight=3;
        server 10.0.0.159;
    }

    # Client Testing
    [root@ubuntu ~]# curl http://www.m99-josedu.com
    10.0.0.210 index
    [root@ubuntu ~]# curl http://www.m99-josedu.com
    10.0.0.210 index
    [root@ubuntu ~]# curl http://www.m99-josedu.com
    10.0.0.210 index
    [root@ubuntu ~]# curl http://www.m99-josedu.com
    10.0.0.159 index

Limiting Maximum Active Connections

    # 10.0.0.210 can only maintain two active connections simultaneously
    upstream group1 {
        server 10.0.0.210 max_conns=2;
        server 10.0.0.159;
    }

    # Backend Server Configuration
    server {
        listen 80;
        root /var/www/html/www.m99-josedu.com;
        server_name www.m99-josedu.com;
        limit_rate 10k;
    }

    # Client Testing, open 6 windows to download files
    [root@ubuntu ~]# wget http://www.m99-josedu.com/test.img

    # Check connections on 10.0.0.210, 2 active connections
    [root@ubuntu ~]# ss -tnpe | grep 80

    # Check connections on 10.0.0.159, 4 active connections
    [root@ubuntu ~]# ss -tnep | grep 80

    # Client continues testing, new requests will not be scheduled to 10.0.0.210
    [root@ubuntu ~]# curl http://www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl http://www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl http://www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl http://www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl http://www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl http://www.m99-josedu.com
    10.0.0.159 index

Backend Server Health Check

The upstream module in Nginx performs passive health checks on backend servers; there is no periodic probing. When a client request is scheduled to a server, Nginx learns whether that server is available while establishing the connection (the TCP three-way handshake). If the server is unavailable, the request is passed to another server. Once the number of failures reaches the threshold (default 1, set by the max_fails parameter of the server directive), no requests are scheduled to that server for a set period (default 10 seconds, set by the fail_timeout parameter). After that period, requests are scheduled to the server again. If it is still unavailable, it sits out another period; if it is available, it returns to the scheduling list.
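The bookkeeping described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not Nginx's implementation: the `Peer` class, its `available` and `report` methods, and the `pick` helper are hypothetical names, and time is passed in explicitly so the behaviour is easy to follow.

```python
class Peer:
    """Simplified model of max_fails / fail_timeout bookkeeping for one server."""

    def __init__(self, name, max_fails=1, fail_timeout=10.0):
        self.name = name
        self.max_fails = max_fails
        self.fail_timeout = fail_timeout
        self.fails = 0
        self.last_fail = None  # timestamp of the most recent failure

    def available(self, now):
        # max_fails=0 disables failure accounting entirely.
        if self.max_fails == 0 or self.fails < self.max_fails:
            return True
        # Marked unavailable: eligible again once fail_timeout has elapsed.
        return now - self.last_fail >= self.fail_timeout

    def report(self, ok, now):
        """Record the outcome of a request proxied to this peer at time `now`."""
        if ok:
            self.fails = 0
            return
        # Failures older than fail_timeout no longer count.
        if self.last_fail is not None and now - self.last_fail >= self.fail_timeout:
            self.fails = 0
        self.fails += 1
        self.last_fail = now


def pick(peers, now):
    """Return the first peer that is currently eligible, or None."""
    for peer in peers:
        if peer.available(now):
            return peer
    return None


peers = [Peer("10.0.0.210"), Peer("10.0.0.159")]
peers[0].report(ok=False, now=0.0)   # 10.0.0.210 refuses a connection at t=0
print(pick(peers, now=5.0).name)     # still inside the 10-second window
print(pick(peers, now=10.0).name)    # window elapsed, 10.0.0.210 is tried again
```

Note that nothing happens between requests: a server is only found to be down, or found to be back, when a client request is actually sent to it.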

    upstream group1 {
        server 10.0.0.210;
        server 10.0.0.159;
    }

    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://group1;
            proxy_set_header host $http_host;
        }
    }

    # Stop Nginx service on 10.0.0.210
    [root@ubuntu ~]# systemctl stop nginx.service

    # Client Testing: 10.0.0.210 is detected as unavailable, so all requests are scheduled to 10.0.0.159
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index

    # Start Nginx service on 10.0.0.210 again; requests are scheduled to it only after
    # 10 seconds have passed since it was last detected as unavailable
    [root@ubuntu ~]# systemctl start nginx.service

    # If a resource does not exist on a backend server, scheduling is not affected;
    # the corresponding status code and status page are returned
    [root@ubuntu ~]# mv /var/www/html/www.m99-josedu.com/index.html{,bak}

    # Client Testing
    [root@ubuntu ~]# curl www.m99-josedu.com/index.html
    10.0.0.159 index
    [root@ubuntu ~]# curl www.m99-josedu.com/index.html
    <html>
    <head><title>404 Not Found</title></head>
    <body>
    <center><h1>404 Not Found</h1></center>
    <hr><center>nginx/1.18.0 (Ubuntu)</center>
    </body>
    </html>

Setting Backup Servers

    # Set backup: when both 10.0.0.210 and 10.0.0.159 are unavailable, requests are scheduled to 10.0.0.213
    upstream group1 {
        server 10.0.0.210;
        server 10.0.0.159;
        server 10.0.0.213 backup;
    }

    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://group1;
            proxy_set_header host $http_host;
        }
    }

    # Currently 159 and 210 are available, Client Testing
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.210 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.210 index

    # Stop Nginx services on 159 and 210 and test again
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.213 -- The website is under maintenance, please visit later

Setting Smooth Offline for Backend Servers

    # Proxy Server Configuration
    upstream group1 {
        server 10.0.0.210;
        server 10.0.0.159;
    }

    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://group1;
            proxy_set_header host $http_host;
        }
    }

    # Backend Server Configuration
    server {
        listen 80;
        root /var/www/html/www.m99-josedu.com;
        server_name www.m99-josedu.com;
        limit_rate 10k;
    }

    # Client Testing - Open two windows to download files
    [root@ubuntu ~]# wget http://www.m99-josedu.com/test.img

    # Check on 10.0.0.210, there is one connection
    [root@ubuntu ~]# ss -tnep | grep 80

    # Check on 10.0.0.159, there is also one connection
    [root@ubuntu ~]# ss -tnep | grep 80

    # Modify Proxy Server Configuration to take 10.0.0.210 offline
    upstream group1 {
        server 10.0.0.210 down;
        server 10.0.0.159;
    }

    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://group1;
            proxy_set_header host $http_host;
        }
    }

    # Reload to take effect
    [root@ubuntu ~]# nginx -s reload

    # The download connections already in progress are not interrupted,
    # but new requests are no longer scheduled to 10.0.0.210
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index

4.4.3 Load Balancing Scheduling Algorithms

Source IP Address Hash

For IPv4 clients, the ip_hash algorithm uses only the first 24 bits (the first three octets) of the address in the hash calculation, so clients whose addresses share those 24 bits are scheduled to the same backend server.
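A small Python sketch makes the consequence visible: every client in the same /24 network produces the same hash input. The helper names `ip_hash_key` and `pick` are hypothetical, and `zlib.crc32` is only a stand-in hash chosen for illustration, not the function Nginx uses.

```python
import ipaddress
import zlib


def ip_hash_key(addr):
    """Return the bytes that take part in the hash for a client address."""
    ip = ipaddress.ip_address(addr)
    # IPv4: only the first three octets; IPv6: the whole address.
    return ip.packed[:3] if ip.version == 4 else ip.packed


def pick(addr, servers):
    # crc32 is a stand-in hash, not the function Nginx uses.
    return servers[zlib.crc32(ip_hash_key(addr)) % len(servers)]


servers = ["10.0.0.210", "10.0.0.159"]
for client in ("10.0.0.208", "10.0.0.213", "10.0.0.1"):
    print(client, "->", pick(client, servers))
```

All three clients share the prefix 10.0.0, so they land on one backend, which is why tests run from a single subnet cannot show ip_hash spreading load.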

    # Proxy Server Configuration
    upstream group1 {
        ip_hash;
        server 10.0.0.210;
        server 10.0.0.159;
    }

    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://group1;
            proxy_set_header host $http_host;
        }
    }

    # 10.0.0.208 - Client Testing, scheduled to 10.0.0.159
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index

    # 10.0.0.213 - Client Testing, scheduled to 10.0.0.159
    [root@rocky ~]# curl www.m99-josedu.com
    10.0.0.159 index
    [root@rocky ~]# curl www.m99-josedu.com
    10.0.0.159 index
    [root@rocky ~]# curl www.m99-josedu.com
    10.0.0.159 index

    # 10.0.0.1 - Client Testing, scheduled to 10.0.0.159
    C:\Users\44301>curl www.m99-josedu.com
    10.0.0.159 index
    C:\Users\44301>curl www.m99-josedu.com
    10.0.0.159 index
    C:\Users\44301>curl www.m99-josedu.com
    10.0.0.159 index

Using a Self-Specified Key for Hash Scheduling

    # Proxy Server Configuration
    # Three servers with equal weight, scheduling algorithm hash($remote_addr)%3;
    # the values 0, 1, 2 schedule to different servers
    upstream group1 {
        hash $remote_addr;
        server 10.0.0.210;
        server 10.0.0.159;
        server 10.0.0.213;
    }

    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://group1;
            proxy_set_header host $http_host;
        }
    }

    # 10.0.0.208 - Client Testing, scheduled to 10.0.0.159
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index
    [root@ubuntu ~]# curl www.m99-josedu.com
    10.0.0.159 index

    # 10.0.0.207 - Client Testing, scheduled to 10.0.0.213
    [root@rocky ~]# curl www.m99-josedu.com
    10.0.0.213 index
    [root@rocky ~]# curl www.m99-josedu.com
    10.0.0.213 index
    [root@rocky ~]# curl www.m99-josedu.com
    10.0.0.213 index

    # Three servers with different weights, scheduling algorithm hash($remote_addr)%(1+2+3),
    # possible values 0, 1, 2, 3, 4, 5
    # 0 schedules to 210
    # 1, 2 schedule to 159
    # 3, 4, 5 schedule to 213
    upstream group1 {
        hash $remote_addr;
        server 10.0.0.210 weight=1;
        server 10.0.0.159 weight=2;
        server 10.0.0.213 weight=3;
    }

    # Proxy Server Configuration
    # Scheduling based on request_uri: different clients accessing the same resource
    # are scheduled to the same backend server
    upstream group1 {
        hash $request_uri;
        server 10.0.0.210;
        server 10.0.0.159;
        server 10.0.0.213;
    }

    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://group1;
            proxy_set_header host $http_host;
        }
    }

    # 10.0.0.208 - Client Testing
    [root@ubuntu ~]# curl www.m99-josedu.com/index.html
    10.0.0.213 index
    [root@ubuntu ~]# curl www.m99-josedu.com/index.html
    10.0.0.213 index
    [root@ubuntu ~]# curl www.m99-josedu.com/index.html
    10.0.0.213 index
    [root@ubuntu ~]# curl www.m99-josedu.com/a.html
    10.0.0.210 aaa
    [root@ubuntu ~]# curl www.m99-josedu.com/a.html
    10.0.0.210 aaa
    [root@ubuntu ~]# curl www.m99-josedu.com/a.html
    10.0.0.210 aaa

    # 10.0.0.207 - Client Testing
    [root@rocky ~]# curl www.m99-josedu.com
    10.0.0.213 index
    [root@rocky ~]# curl www.m99-josedu.com
    10.0.0.213 index
    [root@rocky ~]# curl www.m99-josedu.com
    10.0.0.213 index
    [root@rocky ~]# curl www.m99-josedu.com/a.html
    10.0.0.210 aaa
    [root@rocky ~]# curl www.m99-josedu.com/a.html
    10.0.0.210 aaa
    [root@rocky ~]# curl www.m99-josedu.com/a.html
    10.0.0.210 aaa

Least Connections Scheduling Algorithm
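As a rough illustration of the selection rule, the Python sketch below picks the server with the fewest active connections relative to its weight. The function name `pick_least_conn` and the dictionary layout are assumptions made for this example; a real scheduler also handles ties, failures, and connection limits.

```python
def pick_least_conn(peers):
    """peers: list of dicts with 'name', 'conns' (active connections) and 'weight'."""
    # Compare connections relative to weight; min() keeps the first peer on ties.
    return min(peers, key=lambda p: p["conns"] / p["weight"])


peers = [
    {"name": "10.0.0.210", "conns": 1, "weight": 1},  # busy with a slow download
    {"name": "10.0.0.159", "conns": 0, "weight": 1},
]
print(pick_least_conn(peers)["name"])
```

With equal weights the idle server always wins, which is the behaviour the test in this section demonstrates.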

    # Proxy Server Configuration, least connections scheduling algorithm
    upstream group1 {
        least_conn;
        server 10.0.0.210;
        server 10.0.0.159;
    }

    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://group1;
            proxy_set_header host $http_host;
        }
    }

    # Client opens a rate-limited download so that the connection stays open
    [root@ubuntu ~]# wget www.m99-josedu.com/test.img

    # The download request is scheduled to 10.0.0.210
    [root@ubuntu ~]# ss -tnep | grep 80
    ESTAB 0 0 10.0.0.210:80 10.0.0.206:38880 users:(("nginx",pid=905,fd=5)) uid:33 ino:57728 sk:1003 cgroup:/system.slice/nginx.service <->

    # New client testing, requests will not be scheduled to 10.0.0.210
    [root@rocky ~]# curl www.m99-josedu.com
    10.0.0.159 index
    [root@rocky ~]# curl www.m99-josedu.com
    10.0.0.159 index

4.4.4 Consistent Hashing

    # Proxy Server Configuration
    # Three servers with equal weight, scheduling algorithm hash($remote_addr)%3;
    # the values 0, 1, 2 schedule to different servers
    upstream group1 {
        hash $remote_addr;
        server 10.0.0.210;
        server 10.0.0.159;
        server 10.0.0.213;
    }

    server {
        listen 80;
        server_name www.m99-josedu.com;
        location / {
            proxy_pass http://group1;
            proxy_set_header host $http_host;
        }
    }

In the above configuration, all three backend servers have a weight of 1, so the total weight is 3. The scheduler then takes the hash value of the client IP modulo the total weight.

Assume the current scheduling situation is as follows:

| hash($remote_addr) | hash($remote_addr)%3 | server |
| --- | --- | --- |
| 3,6,9 | 0,0,0 | 10.0.0.210 |
| 1,4,7 | 1,1,1 | 10.0.0.159 |
| 2,5,8 | 2,2,2 | 10.0.0.213 |

If a new backend server is now added, the total weight changes to 4. The same hash value then produces a different scheduling result, so data created on the original backend server does not exist on the newly selected one.

Removing backend servers or modifying their weights also leads to rescheduling, which invalidates the data held on the original servers (e.g., login status, shopping cart, cached content).
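The effect can be checked with the hash values 1 to 9 from the table above. The following Python sketch compares the server chosen with three backends against the server chosen with four; the fourth address, 10.0.0.214, is hypothetical and used only for illustration.

```python
def pick(hash_value, servers):
    # Equal weights: the total weight equals the number of servers.
    return servers[hash_value % len(servers)]


before = ["10.0.0.210", "10.0.0.159", "10.0.0.213"]
after = before + ["10.0.0.214"]  # hypothetical fourth server

moved = [h for h in range(1, 10) if pick(h, before) != pick(h, after)]
print(f"{len(moved)} of 9 hash values change server: {moved}")
```

Only the hash values 1 and 2 keep their server; the other seven are rescheduled, which is the problem consistent hashing is meant to reduce.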

Consistent Hashing

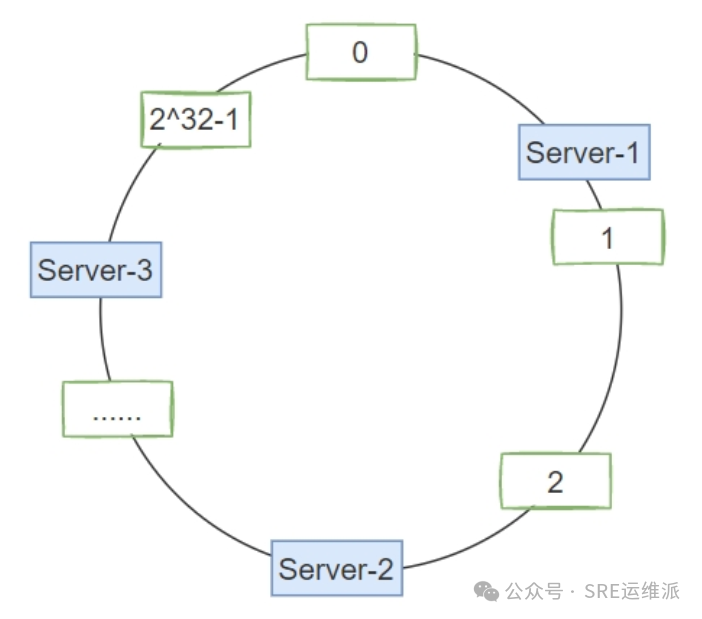

Consistent hashing is an algorithm used for data partitioning and load balancing in distributed systems, where the “hash ring” is one of the core concepts of this algorithm.

In consistent hashing, all data nodes or servers are mapped onto a virtual ring. The ring spans a fixed hash space, such as 0 to 2^32-1, and each data node or server is mapped to a point on the ring by hashing it. The hash values of the data also fall within the range 0 to 2^32-1.

On this ring, each piece of data is assigned to the nearest node in the clockwise direction. When new data needs to be stored, it is hashed first, and the first node found clockwise from that hash value stores the data. Queries work the same way: the hash value is calculated, and the first node clockwise from it is queried.

Since the hash ring is a circular structure, adding and removing nodes has relatively little impact on the overall system. When nodes are added or removed, only adjacent nodes are affected, while other nodes remain unchanged. This allows the consistent hashing algorithm to provide good load balancing performance in distributed systems while minimizing data migration overhead.

In summary, the hash ring in consistent hashing maps data nodes to the ring through hash calculations to achieve data partitioning and load balancing in distributed algorithms.
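The ring lookup can be sketched in Python as follows. This is an illustration under stated assumptions, not Nginx's implementation: the `HashRing` class and `ring_hash` helper are hypothetical names, md5 is an arbitrary hash choice, each server gets a single point on the ring, and the fourth server 10.0.0.214 is invented for the demonstration.

```python
import bisect
import hashlib


def ring_hash(value):
    """Map a string to a position in 0 .. 2**32 - 1 (md5 is an arbitrary choice)."""
    return int.from_bytes(hashlib.md5(value.encode()).digest()[:4], "big")


class HashRing:
    def __init__(self, servers):
        # Sorted (position, server) pairs; one point per server in this sketch.
        self.points = sorted((ring_hash(s), s) for s in servers)

    def add(self, server):
        bisect.insort(self.points, (ring_hash(server), server))

    def lookup(self, key):
        # First point at or after the key's position, wrapping past the end.
        idx = bisect.bisect_left(self.points, (ring_hash(key), ""))
        return self.points[idx % len(self.points)][1]


ring = HashRing(["10.0.0.210", "10.0.0.159", "10.0.0.213"])
clients = [f"10.0.{i}.{j}" for i in range(20) for j in range(1, 51)]
before = {c: ring.lookup(c) for c in clients}

ring.add("10.0.0.214")  # hypothetical fourth server
moved = [c for c in clients if ring.lookup(c) != before[c]]
print(f"{len(moved)} of {len(clients)} keys moved, all to the new server")
```

Keys that do move can only move to the newly added server, because the new point takes over part of one existing arc and leaves every other arc untouched.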

    upstream group1 {
        hash $remote_addr consistent;    # Add the consistent hash parameter
        server 10.0.0.210;
        server 10.0.0.159;
        server 10.0.0.213;
    }

| hash($remote_addr) | hash($remote_addr) % 2^32 | Ring position | Server |
| --- | --- | --- | --- |
| 1 | 1 | 1 | First clockwise |
| 2 | 2 | 2 | First clockwise |
| 3 | 3 | 3 | First clockwise |
| 4 | 4 | 4 | First clockwise |
| 5 | 5 | 5 | First clockwise |

Hash Ring

Ring Skew

A potential issue in consistent hashing is ring skew: the nodes cluster in one area of the hash ring while other areas are relatively empty, which can lead to load imbalance. To address this, improved consistent hashing algorithms introduce the concept of virtual nodes.

Virtual nodes are an extension of physical nodes. Multiple virtual nodes are created for each physical node and spread across the hash ring, so each physical node has many positions on the ring instead of just one. Even if the physical nodes themselves would hash close together, the load can then be balanced by adjusting the number of virtual nodes.
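The effect of virtual nodes can be observed with a short Python experiment. It is a sketch under stated assumptions: the helper names (`build_ring`, `lookup`, `load`), the md5 hash, the `server#i` naming of virtual nodes, the figure of 200 virtual nodes, and the synthetic client keys are all choices made for this illustration.

```python
import bisect
import hashlib
from collections import Counter


def ring_hash(value):
    """Map a string to a position in 0 .. 2**32 - 1 (md5 is an arbitrary choice)."""
    return int.from_bytes(hashlib.md5(value.encode()).digest()[:4], "big")


def build_ring(servers, vnodes):
    """Place `vnodes` points per server on the ring; returns sorted (position, server)."""
    return sorted((ring_hash(f"{s}#{i}"), s) for s in servers for i in range(vnodes))


def lookup(ring, key):
    # First point at or after the key's position, wrapping past the end.
    idx = bisect.bisect_left(ring, (ring_hash(key), ""))
    return ring[idx % len(ring)][1]


def load(servers, vnodes, keys):
    """Count how many keys each server receives for a given number of virtual nodes."""
    ring = build_ring(servers, vnodes)
    return Counter(lookup(ring, k) for k in keys)


servers = ["10.0.0.210", "10.0.0.159", "10.0.0.213"]
keys = [f"client-{n}" for n in range(30000)]
for vnodes in (1, 200):
    print(vnodes, dict(load(servers, vnodes, keys)))
```

With a single point per server the split depends entirely on where three hashes happen to fall; with many virtual nodes each server's share tends toward one third.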

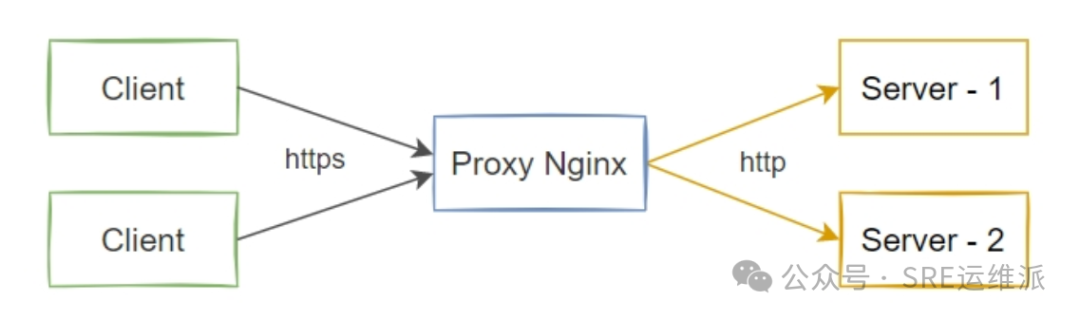

4.5 Comprehensive Case

This case implements automatic redirection from HTTP to HTTPS and reverse-proxies client HTTPS requests to multiple backend HTTP servers with load balancing.

    # upstream configuration
    upstream group1 {
        server 10.0.0.210;
        server 10.0.0.159;
    }

    # HTTP redirect to HTTPS
    server {
        listen 80;
        server_name www.m99-josedu.com;
        return 302 https://$server_name$request_uri;
    }

    # HTTPS configuration
    server {
        listen 443 ssl http2;
        server_name www.m99-josedu.com;
        ssl_certificate /usr/share/easy-rsa/pki/www.m99-josedu.com.pem;
        ssl_certificate_key /usr/share/easy-rsa/pki/private/www.m99-josedu.com.key;
        ssl_session_cache shared:sslcache:20m;
        ssl_session_timeout 10m;
        location / {
            proxy_pass http://group1;
            proxy_set_header host $http_host;
        }
    }

    # Client Testing
    [root@ubuntu ~]# curl -Lk www.m99-josedu.com
    10.0.0.159 index

    [root@ubuntu ~]# curl -LkI www.m99-josedu.com
    HTTP/1.1 302 Moved Temporarily
    Server: nginx
    Date: Sat, 17 Feb 2025 13:55:01 GMT
    Content-Type: text/html
    Content-Length: 138
    Connection: keep-alive
    Location: https://www.m99-josedu.com/

    HTTP/2 200
    server: nginx
    date: Sat, 17 Feb 2025 13:55:01 GMT
    content-type: text/html; charset=utf8
    content-length: 17
    last-modified: Wed, 14 Feb 2025 02:45:15 GMT
    etag: "65cc293b-11"
    accept-ranges: bytes
