This article introduces the basic concepts of memory and the memory management algorithms used by operating systems.

Contiguous Memory Management

Non-contiguous Memory Management

Fixed Partitioning

Memory is pre-divided into a fixed number of partitions whose sizes are set in advance; the partitions may be equal or unequal in size. Fixed partitioning is easy to implement, but it causes internal fragmentation: a process that is smaller than its partition leaves the rest of that partition unused. The number of partitions is also fixed, which limits how many processes can be in memory, and therefore executing concurrently, at the same time.
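To make the two drawbacks of fixed partitioning concrete, here is a minimal Python sketch. The `Partition` class, the `allocate` and `internal_fragmentation` helpers, and the partition sizes are all hypothetical names and values chosen for illustration; a real kernel would track partitions quite differently.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Partition:
    size: int                    # fixed capacity of this partition, in KB
    owner: Optional[str] = None  # name of the resident process, None if free
    used: int = 0                # memory the owner actually requested, in KB


def allocate(partitions: List[Partition], name: str, request: int) -> Optional[int]:
    """Place `name` in the first free partition that can hold `request` KB.

    Returns the partition index, or None when no free partition fits.
    """
    for i, p in enumerate(partitions):
        if p.owner is None and p.size >= request:
            p.owner, p.used = name, request
            return i
    return None


def internal_fragmentation(partitions: List[Partition]) -> int:
    """Total unused space (KB) inside partitions that are occupied."""
    return sum(p.size - p.used for p in partitions if p.owner is not None)


# Three unequal fixed partitions (sizes are assumptions for this example).
memory = [Partition(100), Partition(200), Partition(400)]

print(allocate(memory, "A", 90))       # 0
print(allocate(memory, "B", 150))      # 1
print(allocate(memory, "C", 50))       # 2: a 50 KB process occupies 400 KB
print(allocate(memory, "D", 10))       # None: all partitions are taken
print(internal_fragmentation(memory))  # 410 = 10 + 50 + 350
```

Process D needs only 10 KB and 410 KB is idle inside the occupied partitions, yet D cannot be admitted because the number of partitions, not the amount of free memory, is the limiting factor.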

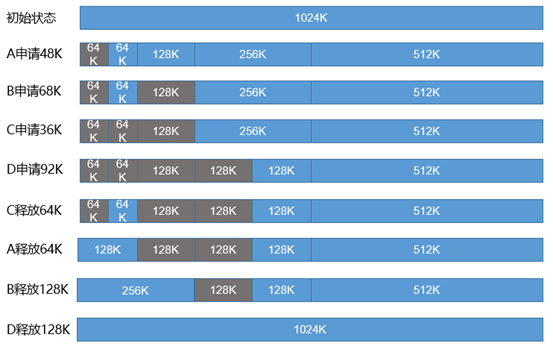

Dynamic Partitioning

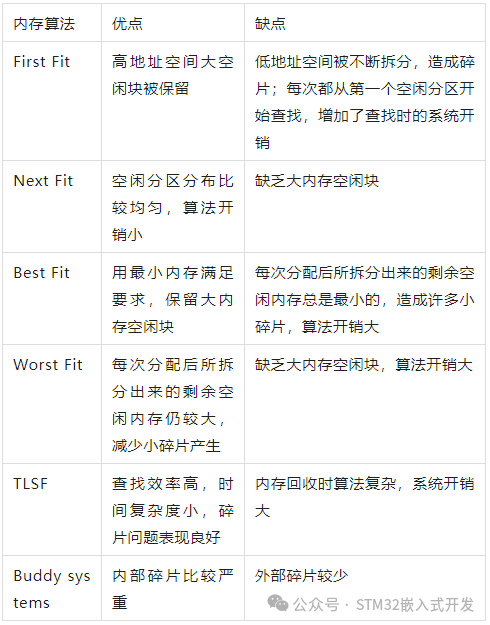

First Fit Algorithm

Next Fit Algorithm

Best Fit Algorithm

Worst Fit Algorithm

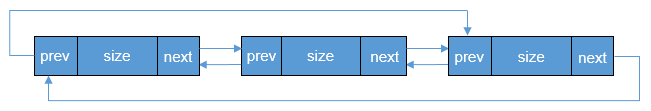

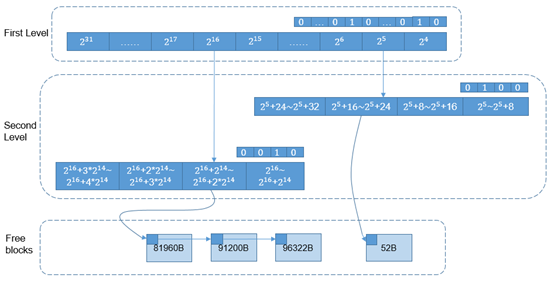

Two Level Segregated Fit (TLSF)

Buddy Systems