1. Concept of Virtualization

What is Virtualization

Virtualization is the process of creating multiple virtual machines from a single physical machine using a hypervisor. The behavior and operation of virtual machines are similar to that of physical machines, but they utilize the computing resources of the physical machine, such as CPU, memory, and storage. The hypervisor allocates these computing resources to each virtual machine as needed.

What are the Advantages of Virtualization

(1) Improved Hardware Resource Utilization

A single server can run multiple virtual machines for different applications, breaking the limitation of one application per server.

(2) Avoid Direct Software Conflicts Between Applications and Services

Many applications and services cannot be installed on the same system; running each in its own virtual machine avoids such conflicts.

(3) Increased Stability

Load balancing, live migration, and automatic fault isolation reduce downtime. With shared storage, virtual machines can be migrated live without being shut down.

(4) Easier Management and Reduced Management Costs

Application isolation, with each application using an independent virtual machine, reduces mutual interference.

(5) Faster Redeployment and Simpler Backups

Features such as templates, cloning, and snapshots can be utilized.

(6) Enhanced IT Flexibility to Adapt to Business Changes Through Dynamic Resource Allocation

When business priorities change, computing and storage resources can be allocated more flexibly and quickly.

The principles of virtualization can be understood through three areas: CPU virtualization, memory virtualization, and I/O virtualization.

2. CPU Virtualization

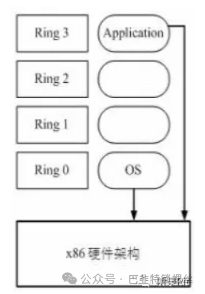

An x86 operating system is designed to run directly on physical hardware and therefore assumes full control of the hardware resources. The x86 architecture provides four privilege levels through which operating systems and applications access hardware. These levels are called rings: Ring 0 is the most privileged, and privilege decreases from Ring 1 to Ring 3.

Applications run at Ring 3. If an application needs to access the disk, for example to write a file, it must make a system call. During the system call, the CPU's privilege level switches from Ring 3 to Ring 0 and execution jumps to the corresponding kernel code. The kernel performs the device access on the application's behalf and then switches back from Ring 0 to Ring 3. This process is called switching between user mode and kernel mode.
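To make the user mode to kernel mode switch concrete, here is a minimal Python sketch. It assumes a POSIX-like system; the function name `write_file_with_syscalls` is made up for illustration. Each `os.*` call below is a thin wrapper around a system call, so every one of them crosses from Ring 3 into Ring 0 and back.

```python
import os
import tempfile


def write_file_with_syscalls(data: bytes) -> int:
    """Write data to a temporary file and return the number of bytes written.

    The function name is illustrative. Each os.* call below enters the
    kernel through a system call and returns to user mode afterwards.
    """
    fd, path = tempfile.mkstemp()        # open a new file (system call)
    try:
        written = os.write(fd, data)     # write(2): the kernel performs the device access
    finally:
        os.close(fd)                     # close(2)
        os.remove(path)                  # unlink(2)
    return written


if __name__ == "__main__":
    print(write_file_with_syscalls(b"hello"))
```

Running the script under a tool such as `strace` would show the individual system calls, although the exact list depends on the Python version and the C library.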

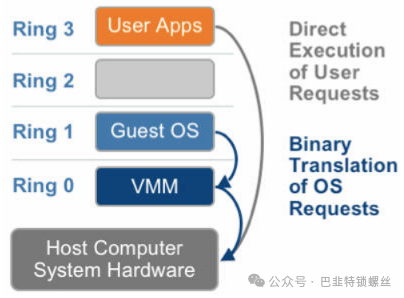

This raises a problem: the host operating system already runs in Ring 0, so the guest operating system (GuestOS) cannot run in Ring 0 as well. The guest operating system is unaware of this and still issues privileged instructions as if it were in Ring 0, so a virtual machine monitor (VMM) is needed to intercept them. The virtual machine accesses hardware through the VMM, and there are three implementation techniques, distinguished by how they work:

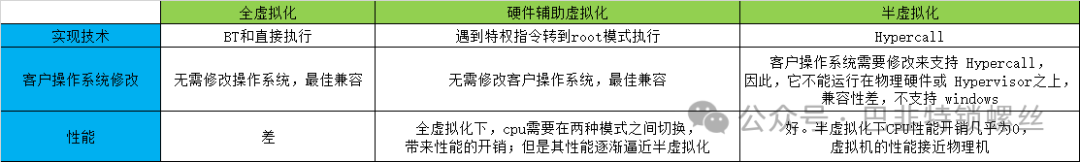

(1) Full Virtualization (2) Paravirtualization (3) Hardware-Assisted Virtualization

2.1 Full Virtualization Based on Binary Translation

The guest operating system runs in Ring 1. When it executes a privileged instruction, the CPU raises an exception, because privileged instructions are not permitted outside Ring 0. The VMM captures this exception, translates and emulates the instruction, and then returns to the guest operating system, which believes its privileged instruction executed normally and continues to run. Sensitive x86 instructions that do not trap are handled by the VMM rewriting the guest's kernel code before it runs, which is the binary translation the name refers to. The performance overhead is significant, however: a simple instruction that previously executed directly must now go through a complex exception-handling path.
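The capture-and-emulate cycle can be sketched with a toy model in Python. Nothing here touches real hardware: the instruction names, the `PRIVILEGED` set, and the `virtual_if` flag are all assumptions chosen for illustration, and a Python exception stands in for the CPU fault.

```python
class PrivilegeFault(Exception):
    """Raised by the toy CPU when a privileged instruction runs outside Ring 0."""


# Hypothetical set of privileged instructions for this toy model.
PRIVILEGED = {"cli", "sti", "hlt"}


def cpu_execute(instr, ring, state):
    """Execute one instruction on the toy CPU, faulting on privilege violations."""
    if instr in PRIVILEGED and ring != 0:
        raise PrivilegeFault(instr)
    state["executed"].append(instr)


def vmm_run(guest_code):
    """Run guest code in Ring 1, emulating every instruction that faults."""
    state = {"executed": [], "emulated": [], "virtual_if": True}
    for instr in guest_code:
        try:
            cpu_execute(instr, ring=1, state=state)
        except PrivilegeFault:
            # The VMM applies the instruction's effect to the guest's
            # virtual state instead of the real hardware state.
            if instr == "cli":
                state["virtual_if"] = False
            elif instr == "sti":
                state["virtual_if"] = True
            state["emulated"].append(instr)
    return state


if __name__ == "__main__":
    print(vmm_run(["mov", "cli", "add"]))
```

Every privileged instruction takes the slow path through the exception handler, which is the overhead described above.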

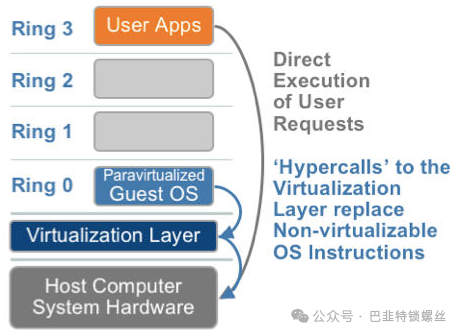

2.2 Paravirtualization

The idea of paravirtualization is to modify the operating system kernel to replace non-virtualizable instructions, allowing direct communication with the underlying virtualization layer (hypervisor) through hypercalls. The hypervisor also provides hypercall interfaces to satisfy other critical kernel operations, such as memory management, interrupts, and timekeeping.

This approach eliminates the capture and simulation used in full virtualization, which greatly improves efficiency. Technologies that use paravirtualization, such as Xen, therefore require a specially customized guest kernel; the customized kernel is in effect a port to a new platform, comparable to a port to x86, MIPS, or ARM. Because no exception capture, translation, or simulation is needed, the performance loss is very low. This is the advantage of the Xen paravirtualization architecture. It is also why Xen's paravirtualized mode can run modified kernels such as Linux but cannot run Windows: the Windows kernel is closed source, and Microsoft has not modified it for this purpose.

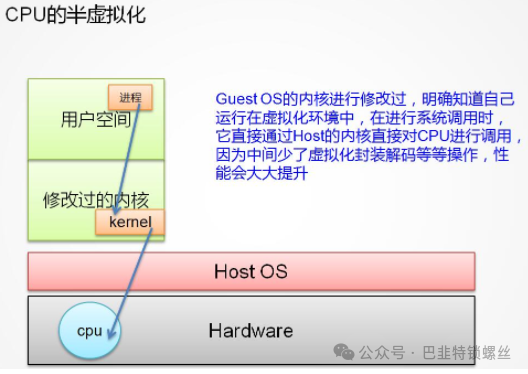

The guest OS kernel has been modified so that it explicitly knows it is running in a virtualized environment. For privileged operations it calls the hypervisor directly through hypercalls instead of executing instructions that would have to be trapped. Since the capture and translation steps are omitted, performance is greatly improved. A representative example is Xen.
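The idea can be sketched as a toy model in Python. The hypercall names (`update_page_table`, `set_timer`) and both classes are hypothetical and are not Xen's actual interface; the point is only that the modified kernel calls the hypervisor explicitly, with no fault to catch.

```python
class ToyHypervisor:
    """Toy hypervisor exposing a small table of hypercalls (names are made up)."""

    def __init__(self):
        self.page_tables = {}
        self.timer_deadline = None
        self._hypercalls = {
            "update_page_table": self._update_page_table,
            "set_timer": self._set_timer,
        }

    def hypercall(self, name, *args):
        """Dispatch a hypercall by name, like a system call into the hypervisor."""
        handler = self._hypercalls.get(name)
        if handler is None:
            raise ValueError(f"unknown hypercall: {name}")
        return handler(*args)

    def _update_page_table(self, guest_page, machine_page):
        self.page_tables[guest_page] = machine_page
        return 0

    def _set_timer(self, deadline_ns):
        self.timer_deadline = deadline_ns
        return 0


class ParavirtualizedKernel:
    """Toy guest kernel whose privileged operations were replaced by hypercalls."""

    def __init__(self, hypervisor):
        self.hypervisor = hypervisor

    def map_page(self, guest_page, machine_page):
        # No privileged instruction and no trap: the kernel asks the hypervisor.
        return self.hypervisor.hypercall("update_page_table", guest_page, machine_page)

    def arm_timer(self, deadline_ns):
        return self.hypervisor.hypercall("set_timer", deadline_ns)


if __name__ == "__main__":
    hv = ToyHypervisor()
    kernel = ParavirtualizedKernel(hv)
    kernel.map_page(0x1000, 0x9000)
    print(hv.page_tables)
```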

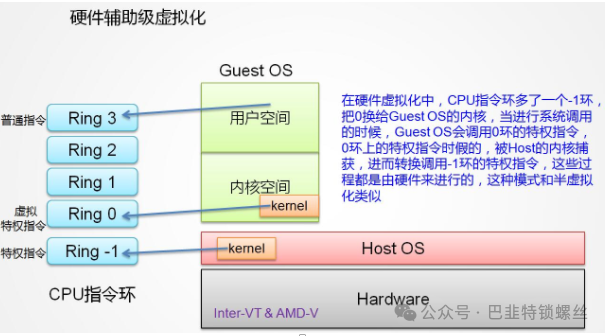

2.3 Hardware-Assisted Virtualization

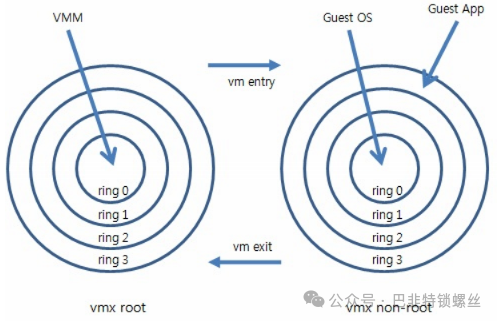

After 2005, the CPU manufacturers Intel and AMD began to support virtualization in hardware. Intel introduced Intel VT (Virtualization Technology). A CPU with this feature has two modes, VMX root operation and VMX non-root operation, both of which support all four privilege levels from Ring 0 to Ring 3. Thus, the VMM can run in VMX root operation mode while the guest OS runs in VMX non-root operation mode.

Moreover, these two operating modes can be interchanged. The VMM running in VMX root operation mode can explicitly call the VMLAUNCH or VMRESUME instructions to switch to VMX non-root operation mode, and the hardware automatically loads the context of the guest OS, allowing it to run. This transition is called VM entry. During the guest OS execution, if it encounters events that require VMM processing, such as external interrupts or page faults, or if it actively calls the VMCALL instruction to invoke VMM services (similar to system calls), the hardware automatically suspends the guest OS and switches to VMX root operation mode, resuming VMM execution. This transition is called VM exit. The behavior of software in VMX root operation mode is essentially consistent with that on processors without VT-x technology; however, VMX non-root operation mode is significantly different, primarily because certain instructions or events cause VM exit.
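The two transitions can be modelled as a small state machine. The Python sketch below is a toy, not an interface to real VMX hardware; the class name `ToyVmxCpu` and its methods are assumptions made for illustration. It reflects one detail of the real instructions: the first entry into a guest uses VMLAUNCH and later entries use VMRESUME.

```python
class ToyVmxCpu:
    """Toy model of VMX root and non-root operation and the transitions between them."""

    def __init__(self):
        self.mode = "root"       # the VMM starts in VMX root operation
        self.launched = False    # becomes True after the first VM entry
        self.transitions = []

    def vm_entry(self):
        """Switch to non-root mode: VMLAUNCH the first time, VMRESUME afterwards."""
        if self.mode != "root":
            raise RuntimeError("VM entry is only possible from VMX root operation")
        instruction = "VMRESUME" if self.launched else "VMLAUNCH"
        self.launched = True
        self.mode = "non-root"
        self.transitions.append(f"VM entry ({instruction})")

    def vm_exit(self, reason):
        """Switch back to root mode because of an event the VMM must handle."""
        if self.mode != "non-root":
            raise RuntimeError("VM exit is only possible from VMX non-root operation")
        self.mode = "root"
        self.transitions.append(f"VM exit ({reason})")


if __name__ == "__main__":
    cpu = ToyVmxCpu()
    cpu.vm_entry()
    cpu.vm_exit("external interrupt")
    cpu.vm_entry()
    cpu.vm_exit("VMCALL")
    print(cpu.transitions)
```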

In other words, the hardware layer distinguishes between these modes, so in full virtualization, implementations relying on “exception capture – translation – simulation” are no longer necessary. Furthermore, CPU manufacturers are increasingly supporting virtualization, and the performance of hardware-assisted full virtualization is gradually approaching that of paravirtualization. Additionally, hardware-assisted virtualization does not require modifications to the guest operating system, making it the future trend of development.

The main characteristics distinguishing the three are:

1. Full virtualization: deceives the GuestOS into believing it is running on a physical machine.

2. Hardware-assisted virtualization: requires CPU hardware support.

3. Paravirtualization: requires modifying the GuestOS kernel, which is not possible for Windows.

3. KVM CPU Virtualization

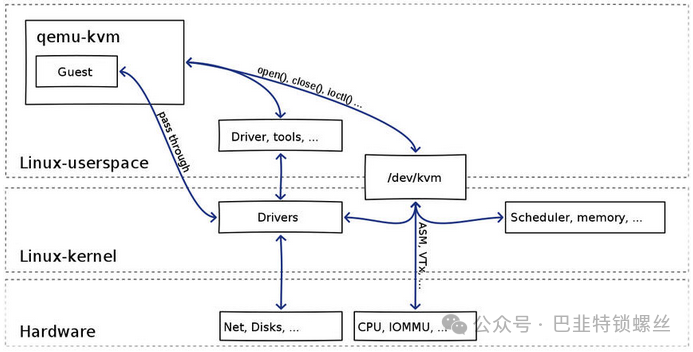

KVM is a hardware-assisted full virtualization solution that requires support for CPU virtualization features.

3.1 KVM Virtual Machine Creation Process

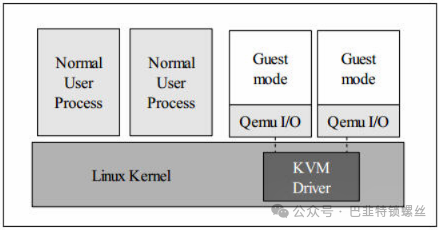

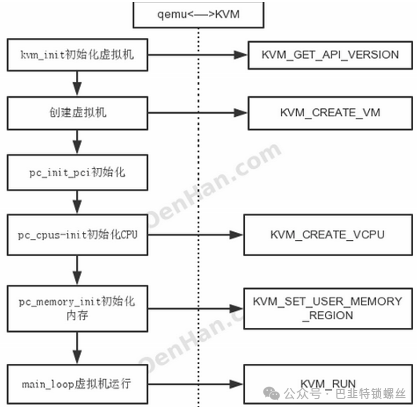

(1) qemu-kvm controls the virtual machine through a series of ioctl calls on /dev/kvm;

(2) A KVM virtual machine is a Linux qemu-kvm process, scheduled by the Linux process scheduler like any other Linux process;

(3) A KVM virtual machine includes virtual memory, virtual CPU, and virtual I/O devices, where memory and CPU virtualization are handled by the KVM kernel module, while I/O device virtualization is managed by QEMU;

(4) The memory of the KVM virtual machine is part of the address space of the qemu-kvm process;

(5) The vCPU of the KVM virtual machine runs as a thread in the context of the qemu-kvm process.
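As a small illustration of point (1), the Python sketch below issues the simplest KVM ioctl, `KVM_GET_API_VERSION`, on /dev/kvm. The request numbers are computed the way the `_IO(KVMIO, nr)` macro does on x86 Linux, which is an assumption about the platform, and the function name `kvm_api_version` is made up. The function returns `None` when /dev/kvm is missing or inaccessible, so it is safe to run on a machine without KVM.

```python
import fcntl
import os

# ioctl request numbers from <linux/kvm.h>, assuming the x86 encoding
# of _IO(KVMIO, nr), where KVMIO is 0xAE.
KVMIO = 0xAE
KVM_GET_API_VERSION = (KVMIO << 8) | 0x00
KVM_CREATE_VM = (KVMIO << 8) | 0x01      # listed for reference only
KVM_CREATE_VCPU = (KVMIO << 8) | 0x41    # listed for reference only
KVM_RUN = (KVMIO << 8) | 0x80            # listed for reference only


def kvm_api_version(path="/dev/kvm"):
    """Return the KVM API version, or None if KVM cannot be queried."""
    try:
        fd = os.open(path, os.O_RDWR)
    except OSError:
        return None                      # no KVM module or no permission
    try:
        return fcntl.ioctl(fd, KVM_GET_API_VERSION)
    except OSError:
        return None
    finally:
        os.close(fd)


if __name__ == "__main__":
    print("KVM API version:", kvm_api_version())
```

A real VMM such as qemu-kvm continues from here with `KVM_CREATE_VM`, `KVM_CREATE_VCPU`, and repeated `KVM_RUN` calls; those steps need memory and register setup and are omitted from this sketch.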

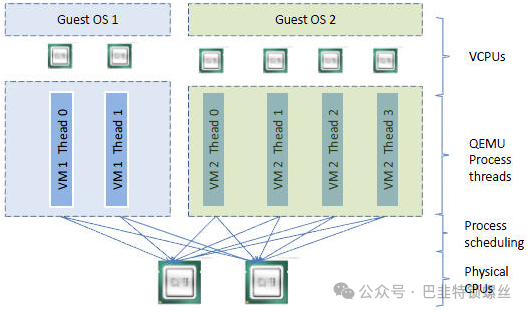

The figure above shows the logical relationship between the vCPU, the QEMU process, Linux process scheduling, and the physical CPU.

3.2 Virtualization Features in CPUs

CPU support for virtualization does not create a virtual CPU; KVM guest code runs on the physical CPU. What CPUs that support virtualization add is a set of new features. For example, Intel VT technology adds two operating modes: VMX root mode and VMX non-root mode. Generally, the host operating system and VMM run in VMX root mode, while the guest operating system and its applications run in VMX non-root mode.
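On Linux, whether the CPU offers these features can be read from the `flags` lines of /proc/cpuinfo: `vmx` indicates Intel VT-x and `svm` indicates AMD-V. The helper below is a minimal sketch; the function name `virtualization_flags` is made up, and it takes the file contents as a string so it can be tried on any text.

```python
def virtualization_flags(cpuinfo_text):
    """Return the hardware virtualization flags ('vmx', 'svm') found in cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            # The flags line is a space-separated list of feature names.
            found |= {"vmx", "svm"} & set(value.split())
    return found


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print(virtualization_flags(f.read()) or "no hardware virtualization flags")
    except OSError:
        print("/proc/cpuinfo is not available on this system")
```

An empty result can also mean that the feature is disabled in the firmware, or that the code is running inside a guest without nested virtualization.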

Since both modes support all rings, the guest can run in the ring it requires (OS runs in ring 0, applications run in ring 3), and the VMM also runs in its required ring (for KVM, QEMU runs in ring 3, KVM runs in ring 0). The switch between the two modes is called VMX switching. Transitioning from root mode to non-root mode is called VM entry; transitioning from non-root mode to root mode is called VM exit. It is evident that the CPU switches between the two modes under control, alternately executing VMM code and Guest OS code.

For a KVM virtual machine, when the VMM running in VMX root mode needs to execute Guest OS instructions, it executes the VMLAUNCH instruction (or VMRESUME for a guest that has already been launched) to switch the CPU to VMX non-root mode. This is the VM entry process.

When the Guest OS needs to leave this mode, the CPU automatically switches back to VMX root mode. This is the VM exit process.

It can be seen that the KVM guest code runs directly on the physical CPU under the control of the VMM. QEMU merely controls the code of the virtual machine through KVM, but it does not execute its code. In other words, the CPU is not truly virtualized into a virtual CPU for the guest to use.

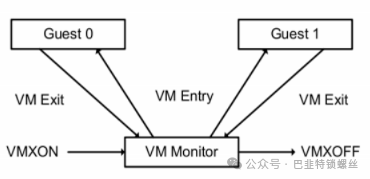

After the VMM has initialized the vCPU and memory, it calls the KVM interface through ioctl to create the virtual machine and creates a thread to run it. During the initial setup, the VM's registers are set so that KVM can locate the entry point of the code to be loaded (comparable to a main function). Once the thread calls into KVM to run the vCPU, control of the physical CPU is handed over to the VM. The VM runs in VMX non-root mode, a special CPU execution mode provided by Intel VT-x (AMD-V provides an equivalent mode). When the VM executes a special instruction, the CPU saves the current VM context into the VMCS (a memory region that the CPU locates through a pointer to it), and control switches to the VMM. The VMM reads the reason for the exit and handles it. If it is an I/O request, the VMM can read the VM's memory directly, emulate the I/O operation, and then execute VMRESUME so that the VM continues. From the VM's perspective, the I/O instruction has simply been executed by the CPU.
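The run loop described above can be sketched with a Python generator standing in for the guest: resuming the generator plays the role of VM entry, and each `yield` plays the role of a VM exit carrying an exit reason. This is a toy model under stated assumptions; the exit reason names, the port number 0x3F8, and both function names are chosen for illustration and are not KVM's actual structures.

```python
def guest_program():
    """Toy guest: computes running sums and 'writes' each one to an I/O port."""
    total = 0
    for value in (1, 2, 3):
        total += value                   # ordinary instructions: no exit needed
        yield ("io_out", 0x3F8, total)   # toy 'out' instruction: forces a VM exit
    yield ("hlt",)                       # the guest is done


def run_vm(guest):
    """Toy VMM loop: enter the guest, handle each exit, resume."""
    device_log = []
    exits = 0
    for exit_info in guest:              # each iteration: VM entry, then the next VM exit
        exits += 1
        reason = exit_info[0]
        if reason == "io_out":
            _, port, value = exit_info
            device_log.append((port, value))   # emulate the device in the VMM
        elif reason == "hlt":
            break                        # stop running the guest
        else:
            raise ValueError(f"unhandled exit reason: {reason}")
    return exits, device_log


if __name__ == "__main__":
    print(run_vm(guest_program()))
```

The guest never sees the VMM: from its point of view each "out" simply completed, which matches the last sentence of the paragraph above.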

Intel VT-x adds the VMX modes alongside Ring 0 to Ring 3. VMX root mode is designated for the VMM (the virtual machine monitor mentioned earlier); in a KVM system this is the mode in which the qemu-kvm process runs. VMX non-root mode is for the guest, which also has Rings 0 to 3 but is unaware that it is running in VMX non-root mode.

Intel’s virtual architecture is fundamentally divided into two parts:

The Virtual Machine Monitor

The Guest (GuestOS)

Virtual Machine Monitor (VMM)

The virtual machine monitor appears on the host machine as an entity that provides virtual machine CPU, memory, and a series of virtual hardware. In the KVM system, this entity is a process, such as qemu-kvm. The VMM is responsible for managing the resources of the virtual machine and has control over all virtual machine resources, including switching the CPU context of the virtual machine.

Guest

The guest can be an operating system (OS) or just a binary program. Either way, to the VMM it is only a sequence of instructions, and the VMM needs to know only the entry point (the value of the rip register) to load it. The guest requires a virtual CPU. Guest code runs in VMX non-root mode. In this mode it uses the same instructions and registers as it normally would, but when it executes a special instruction (such as an out instruction, which writes to an I/O port), control of the CPU passes to the VMM, which handles the instruction and completes the hardware operation.

The Switching Between VMM and Guest

KVM's CPU virtualization relies on Intel VT-x, running the Guest in VMX non-root mode. When special operations are executed, control is returned to the VMM. After the VMM processes the special operations, it returns the results to the Guest.

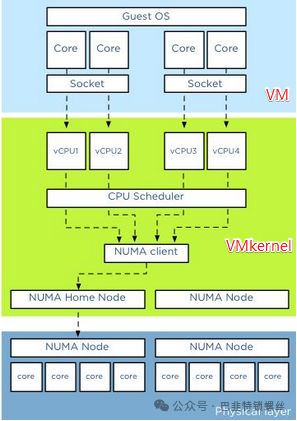

Several concepts: socket (a physical CPU package), core (a physical core within a CPU), and thread (a hardware thread; normally a CPU core provides only one thread and therefore appears as a single CPU, but with hyper-threading one core is presented as multiple logical CPUs that can run multiple threads simultaneously).

The diagram above is divided into three layers: the VM layer, the VMKernel layer, and the physical layer. On a physical server, all CPU resources belong to a single operating system and the applications running on it. Applications send requests to the operating system, which then schedules the physical CPU resources. In a virtualization platform such as KVM, a VMKernel (hypervisor) layer is added between the VM layer and the physical layer, allowing all VMs to share the resources of the physical layer. Applications in a VM send requests to the operating system in that VM, which schedules its virtual CPUs (the guest operating system treats virtual CPUs as equivalent to physical CPUs), and the VMKernel layer schedules the virtual CPUs across the physical CPU cores. In a virtualization platform, the OS CPU scheduler and the hypervisor CPU scheduler each perform scheduling within their own domain.

In KVM, the number of sockets, cores, and threads can be specified. For example, -smp 5,sockets=5,cores=1,threads=1 gives a total of 5 vCPUs. The CPU cores the guest sees are KVM vCPUs, and Linux schedules each vCPU onto the physical CPU cores as an ordinary thread (lightweight process). As for choosing between more sockets and more cores, the conclusion reported for VMware ESXi is that there is no significant performance difference. However, some guest operating systems limit the number of physical CPUs (sockets) they support; in such cases, fewer sockets with more cores can be used.
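The arithmetic behind the -smp example is sockets × cores × threads. The Python sketch below parses an option string of that shape and checks it. It is a simplification and not QEMU's own parser: it handles only the bare count (or `cpus=`) and the three topology keys, ignores everything else, and the function name `smp_vcpu_count` is made up.

```python
def smp_vcpu_count(option):
    """Return the vCPU count described by a simplified -smp option string."""
    count = None
    topology = {}
    for part in option.split(","):
        key, sep, value = part.strip().partition("=")
        if not sep:
            count = int(key)             # bare number, e.g. the "5" in "5,sockets=5"
        elif key == "cpus":
            count = int(value)
        elif key in ("sockets", "cores", "threads"):
            topology[key] = int(value)
    if len(topology) == 3:
        product = topology["sockets"] * topology["cores"] * topology["threads"]
        if count is not None and count != product:
            raise ValueError(f"count {count} does not match topology product {product}")
        return product
    if count is None:
        raise ValueError("no vCPU count and no complete topology given")
    return count


if __name__ == "__main__":
    print(smp_vcpu_count("5,sockets=5,cores=1,threads=1"))
```

For a guest operating system that limits the number of sockets, the same 4 vCPUs could be expressed as `sockets=1,cores=4,threads=1` instead of `sockets=4,cores=1,threads=1`.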

3.3 How Guest System Code is Executed

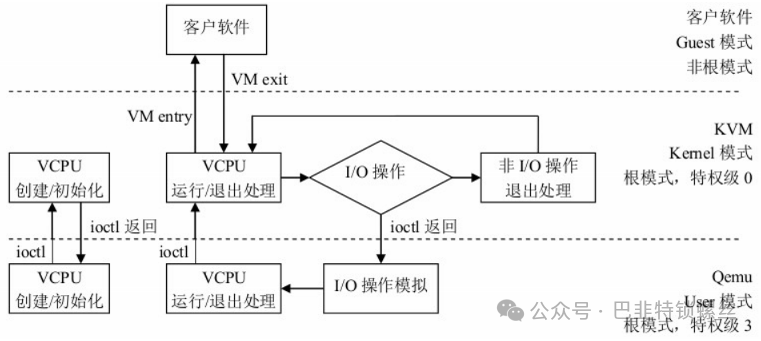

A standard Linux kernel has two execution modes: kernel mode (Kernel) and user mode (User). To support CPUs with virtualization capabilities, KVM adds a third mode to the Linux kernel, namely guest mode (Guest), which corresponds to the CPU’s VMX non-root mode.

The KVM kernel module serves as a bridge between User mode and Guest mode:

QEMU-KVM, in User mode, runs the virtual machine by issuing ioctl calls.

Upon receiving this request, the KVM kernel module performs some preparatory work, such as loading the vCPU context into the VMCS (virtual machine control structure), and then drives the CPU into VMX non-root mode to begin executing guest code.

The roles of the three modes are:

Guest mode: executes non-I/O code of the guest system and drives the CPU to exit this mode when necessary;

Kernel mode: responsible for switching the CPU to Guest mode to execute Guest OS code and returning to Kernel mode when the CPU exits Guest mode;

User mode: represents the guest system in executing I/O operations.

Changes in QEMU-KVM Compared to Native QEMU:

Native QEMU achieves full virtualization through instruction translation, while the modified QEMU-KVM calls the KVM module through ioctl calls;

Native QEMU is single-threaded, while QEMU-KVM is multi-threaded.

The host Linux views a virtual machine as a QEMU process, which includes several types of threads:

I/O threads for managing simulated devices;

vCPU threads for running Guest code;

Other threads, such as those handling event loops, offloaded tasks, etc.

KVM is a kernel module that exposes a character device, /dev/kvm, for interaction with user space. QEMU drives KVM through a series of ioctl calls on this device, and control passes back and forth between QEMU and KVM.

Process of qemu-kvm:

1. Start a child thread to create and initialize vCPU; the main thread waits;

2. The child thread completes the initialization of vCPU and waits, notifying the main thread to run;

3. The main thread continues to initialize virtualization work, completes initialization, and notifies the child thread to continue running;

4. The child thread continues to start the virtual machine kvm_run, while the main thread executes select for interaction processing.
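The four-step handshake can be sketched with Python threads and events. This is not QEMU's code: the event names, the log messages, and the function `start_vm` are assumptions made for illustration, and the real `kvm_run` and `select` loops are replaced by log entries.

```python
import threading


def start_vm():
    """Toy version of the startup handshake between the main thread and a vCPU thread."""
    log = []
    lock = threading.Lock()
    vcpu_ready = threading.Event()       # set by the vCPU thread after step 2
    machine_ready = threading.Event()    # set by the main thread after step 3

    def record(message):
        with lock:
            log.append(message)

    def vcpu_thread():
        record("vcpu: created and initialized")   # steps 1 and 2
        vcpu_ready.set()                          # notify the main thread
        machine_ready.wait()                      # wait for the rest of the setup
        record("vcpu: enter kvm_run loop")        # step 4

    worker = threading.Thread(target=vcpu_thread)
    worker.start()                                # step 1: start the child thread
    vcpu_ready.wait()                             # main thread waits
    record("main: finish machine initialization") # step 3
    machine_ready.set()                           # let the vCPU thread continue
    record("main: enter select loop")             # step 4
    worker.join()
    return log


if __name__ == "__main__":
    for entry in start_vm():
        print(entry)
```

The first two log entries always appear in the same order; the last two run concurrently, so their relative order can vary between runs.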

Analysis of the kvm process:

Thread-1: main thread, this thread loops, performing select operations to check for read/write file descriptors, and performs read/write operations if any;

Thread-2: child thread, asynchronously performing I/O operations, mainly disk image operations (block driver);

Thread-3: child thread, vCPU thread, starting and running the virtual machine with kvm_run.

Example: Starting a 2-Core Virtual Machine with qemu-kvm

```shell
# Create a 2-core virtual machine with qemu-kvm
[root@localhost ~]# qemu-kvm -cpu host -smp 2 -m 512m -drive file=/root/cirros-0.3.5-i386-disk.img -daemonize
VNC server running on `::1:5900'

# Check the main qemu-kvm process
[root@localhost ~]# ps -ef | egrep qemu
root 24066 1 56 13:59 ? 00:00:10 qemu-kvm -cpu host -smp 2 -m 512m -drive file=/root/cirros-0.3.5-i386-disk.img -daemonize
root 24077 24041 0 13:59 pts/0 00:00:00 grep -E --color=auto qemu

# Check the qemu-kvm child threads
[root@localhost ~]# ps -Tp 24066
  PID  SPID TTY          TIME CMD
24066 24066 ?        00:00:00 qemu-kvm
24066 24067 ?        00:00:00 qemu-kvm
24066 24070 ?        00:00:07 qemu-kvm
24066 24071 ?        00:00:02 qemu-kvm
24066 24073 ?        00:00:00 qemu-kvm

# Use gdb to check the role of child threads
(gdb) thread 1
[Switching to thread 1 (Thread 0x7fb830cb2ac0 (LWP 24066))]
#0 0x00007fb829eaebcd in poll () from /lib64/libc.so.6
(gdb) thread 2
[Switching to thread 2 (Thread 0x7fb7fddff700 (LWP 24073))]
#0 0x00007fb82de0e6d5 in pthread_cond_wait@@GLIBC_2.3.2 () from /lib64/libpthread.so.0
(gdb) thread 3
[Switching to thread 3 (Thread 0x7fb81fe6e700 (LWP 24071))]
#0 0x00007fb829eb02a7 in ioctl () from /lib64/libc.so.6
(gdb) thread 4
[Switching to thread 4 (Thread 0x7fb82066f700 (LWP 24070))]
#0 0x00007fb829eb02a7 in ioctl () from /lib64/libc.so.6
```

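From the above data, we conclude:

The qemu-kvm process of this 2-core virtual machine has one main thread and four child threads (five SPIDs in the ps output). The four threads inspected in gdb are:

(1) thread-1: the main thread loop, blocked in poll() here, checking for readable or writable file descriptors and performing the read/write operations if there are any;

(2) thread-2: child thread, asynchronously performing I/O operations, mainly disk image operations (block driver); in the gdb output it is waiting on a condition variable;

(3) thread-3: child thread, a vCPU thread that starts and runs the virtual machine; it is inside an ioctl call;

(4) thread-4: the second vCPU thread, in the same state as thread-3.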
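The thread count can be checked mechanically from the `ps -Tp` output. The Python helper below is a minimal sketch with a made-up name, `thread_ids`; it assumes the column layout shown in the example, where the first column is the PID and the second is the SPID (thread ID).

```python
def thread_ids(ps_output):
    """Return (pid, child_thread_ids) parsed from `ps -Tp <pid>` output."""
    pid = None
    children = []
    for line in ps_output.splitlines():
        fields = line.split()
        if len(fields) < 2 or not fields[0].isdigit():
            continue                     # skip the header and blank lines
        pid, spid = int(fields[0]), int(fields[1])
        if spid != pid:                  # the main thread has SPID equal to PID
            children.append(spid)
    return pid, children


if __name__ == "__main__":
    sample = """  PID  SPID TTY          TIME CMD
24066 24066 ?        00:00:00 qemu-kvm
24066 24067 ?        00:00:00 qemu-kvm
24066 24070 ?        00:00:07 qemu-kvm
24066 24071 ?        00:00:02 qemu-kvm
24066 24073 ?        00:00:00 qemu-kvm
"""
    print(thread_ids(sample))
```

In the sample, the two threads with accumulated CPU time (24070 and 24071) are the ones gdb shows inside ioctl, which is consistent with them being the two vCPU threads.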

3.4 Two Scheduling Steps from Guest Threads to Physical CPU

Scheduling a guest thread onto a physical CPU takes two steps:

(1) The guest thread is scheduled to the guest physical CPU, i.e., KVM vCPU, which is managed by the guest operating system. Each guest operating system has different implementations. In KVM, vCPUs appear to the guest system as physical CPUs, so the scheduling method is not significantly different;

(2) The vCPU thread is scheduled to the physical CPU, i.e., the host physical CPU, which is managed by the Hypervisor, i.e., Linux.

KVM uses the standard Linux scheduler to schedule vCPU threads. In Linux, the distinction between processes and threads is that each process has its own address space, while threads are units of code execution and the basic unit of scheduling. Linux implements threads as lightweight processes that share some resources, so they are scheduled in the same way as processes.

3.5 Methods for Allocating Guest vCPU Numbers

1. More vCPUs do not necessarily mean better performance; thread switching consumes a lot of time. The minimum number of vCPUs should be allocated based on load requirements;

2. The total number of vCPUs for guests on the host should not exceed the total number of physical CPU cores. If it does not exceed, there is no CPU contention, and each vCPU thread is executed on a physical CPU core; if it exceeds, some threads will wait for CPU and there will be overhead due to thread switching on a single CPU core;

3. Divide the load into compute load and I/O load. For compute loads, allocate more vCPUs, and consider CPU affinity by assigning specific physical CPU cores to these guests.
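Guideline 2 is a simple comparison, sketched below in Python. The function name `vcpu_overcommit` and the example figures are made up; the physical core count must be supplied by the caller.

```python
def vcpu_overcommit(vcpus_per_guest, physical_cores):
    """Return (total vCPUs, vCPU-to-core ratio, whether the host is overcommitted)."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    total = sum(vcpus_per_guest)
    return total, total / physical_cores, total > physical_cores


if __name__ == "__main__":
    # Three hypothetical guests on a host with 8 physical cores.
    print(vcpu_overcommit([2, 2, 4], physical_cores=8))
    print(vcpu_overcommit([4, 4, 4], physical_cores=8))
```

Note that `os.cpu_count()` in Python reports logical CPUs, which include hyper-threads, so it may be larger than the number of physical cores this guideline refers to.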

Steps to determine the number of vCPUs. If we want to create a VM, the following steps can help determine the appropriate number of vCPUs:

1. Understand the application and set initial values.

Is the application critical? Does it have a Service Level Agreement? It is essential to understand whether the applications running on the virtual machine support multithreading. Consult the application provider to see if it supports multithreading and SMP (Symmetric Multi-Processing). Refer to the number of CPUs required when the application runs on a physical server. If there is no reference information, set 1 vCPU as the initial value and closely monitor resource usage.

2. Monitor resource usage.

Determine a time period over which to observe the resource usage of the virtual machine. The period depends on the characteristics and requirements of the application and can range from several days to several weeks. Monitor not only the CPU usage of the VM but also the CPU usage of the application within the guest operating system. It is especially important to distinguish between average CPU usage and peak CPU usage. Suppose 4 vCPUs are allocated and the CPU usage of the application on the VM is observed:

(1) If peak usage equals 25%, the application can use at most 25% of the total CPU resources, which indicates that it is single-threaded and can use only one vCPU;

(2) If average usage is less than 38% and peak usage is less than 45%, consider reducing the number of vCPUs;

(3) If average usage is greater than 75% and peak usage is greater than 90%, consider increasing the number of vCPUs.
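The three rules can be written down as a small decision function. The Python sketch below uses the thresholds from the text; generalizing rule (1) from "25% with 4 vCPUs" to "100% divided by the number of vCPUs", the tolerance parameter, and the function name `recommend_vcpu_change` are assumptions added for illustration.

```python
def recommend_vcpu_change(vcpus, avg_usage, peak_usage, tolerance=1.0):
    """Suggest a vCPU adjustment from average and peak CPU usage (in percent)."""
    if vcpus < 1:
        raise ValueError("vcpus must be at least 1")
    # Rule 1: peak usage capped at one vCPU's share suggests a single-threaded app.
    one_vcpu_share = 100.0 / vcpus
    if vcpus > 1 and abs(peak_usage - one_vcpu_share) <= tolerance:
        return "single-threaded: only one vCPU is being used"
    # Rule 2: low average and low peak usage.
    if avg_usage < 38 and peak_usage < 45:
        return "consider reducing vCPUs"
    # Rule 3: high average and high peak usage.
    if avg_usage > 75 and peak_usage > 90:
        return "consider increasing vCPUs"
    return "keep the current vCPU count"


if __name__ == "__main__":
    print(recommend_vcpu_change(4, avg_usage=20, peak_usage=25))
    print(recommend_vcpu_change(4, avg_usage=30, peak_usage=40))
    print(recommend_vcpu_change(4, avg_usage=80, peak_usage=95))
```

Rule 1 is checked first because a single-threaded application on 4 vCPUs also satisfies rule 2, and the more specific diagnosis is the more useful one.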

3. Change the number of vCPUs and observe the results.

Keep each change as small as possible; if 4 vCPUs may be needed, start with 2 vCPUs and observe whether performance is acceptable.

Copyright Notice: The content of this article comes from Blog Garden (cnblogs), author hukey, following the CC 4.0 BY-SA copyright agreement. This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 2.5 China Mainland License. Original link: https://www.cnblogs.com/hukey/p/11138768.html