Table of Contents

- Introduction
- 1. Understanding Linux Memory
- 2. Linux Memory Address Space
- 3. Linux Memory Allocation Algorithms
- 4. Memory Usage Scenarios
- 5. Pitfalls of Memory Usage

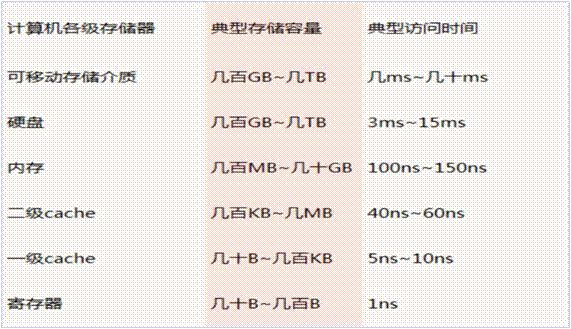

1. Understanding Linux Memory

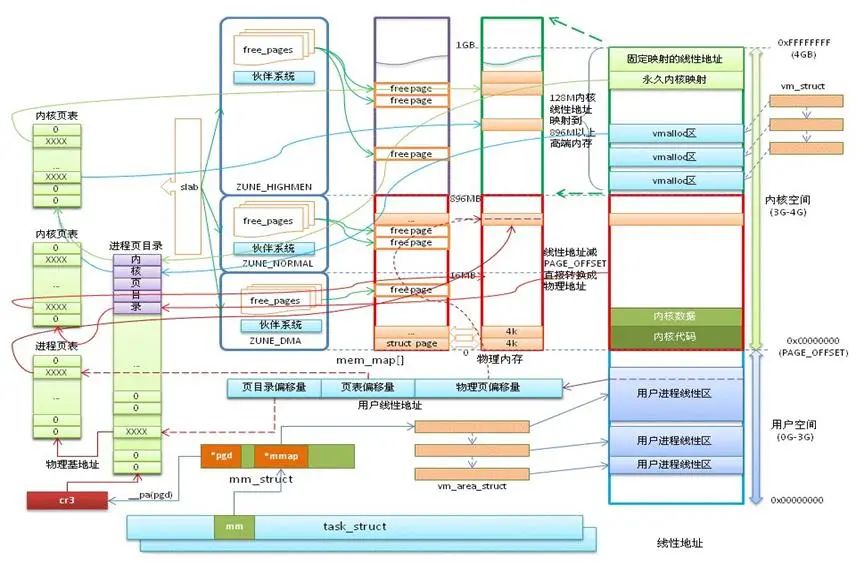

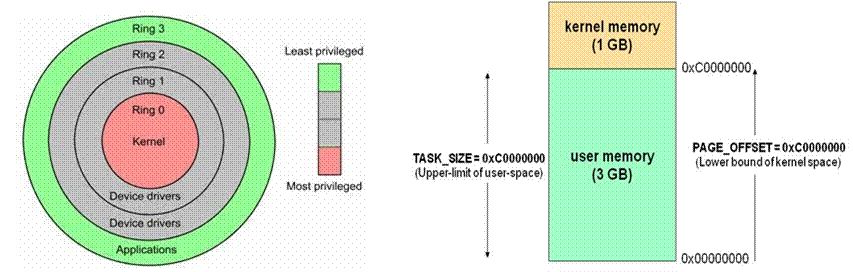

2. Linux Memory Address Space

- User mode: code running in Ring 3 is subject to many restrictions imposed by the processor
- Kernel mode: Ring 0 is the most privileged level in the processor's memory protection scheme
- There are three ways to switch from user mode to kernel mode: system calls, exceptions, and external device interrupts
- Difference: each process has its own independent memory space that other processes cannot disturb; user-mode programs cannot arbitrarily manipulate the kernel address space, which provides a degree of security; kernel-mode threads share the kernel address space

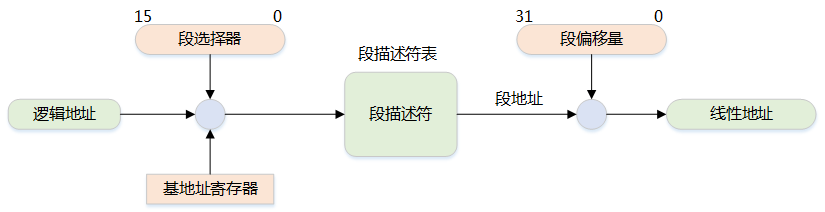

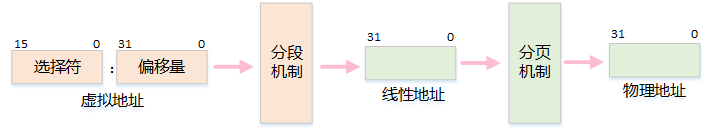

- The MMU is a hardware circuit with two components: a segmentation unit and a paging unit
- The segmentation mechanism converts a logical address into a linear address
- The paging mechanism converts a linear address into a physical address
- To speed up access to segment selectors, the processor provides six segment registers that hold them: cs, ss, ds, es, fs, and gs
- Segment base address: the starting address of the segment in the linear address space
- Segment limit: the maximum offset that can be used within the segment in the virtual address space
- The segment selector held in a segment register identifies a segment descriptor, which supplies the segment base and limit; adding the offset of the logical address to the segment base yields the linear address

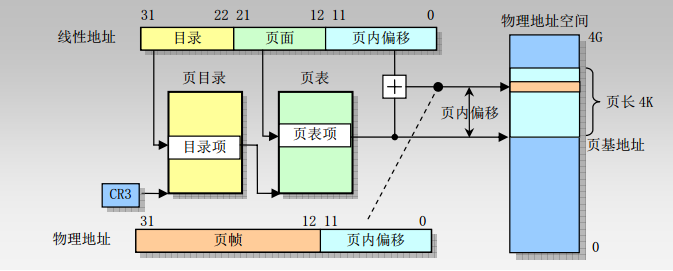

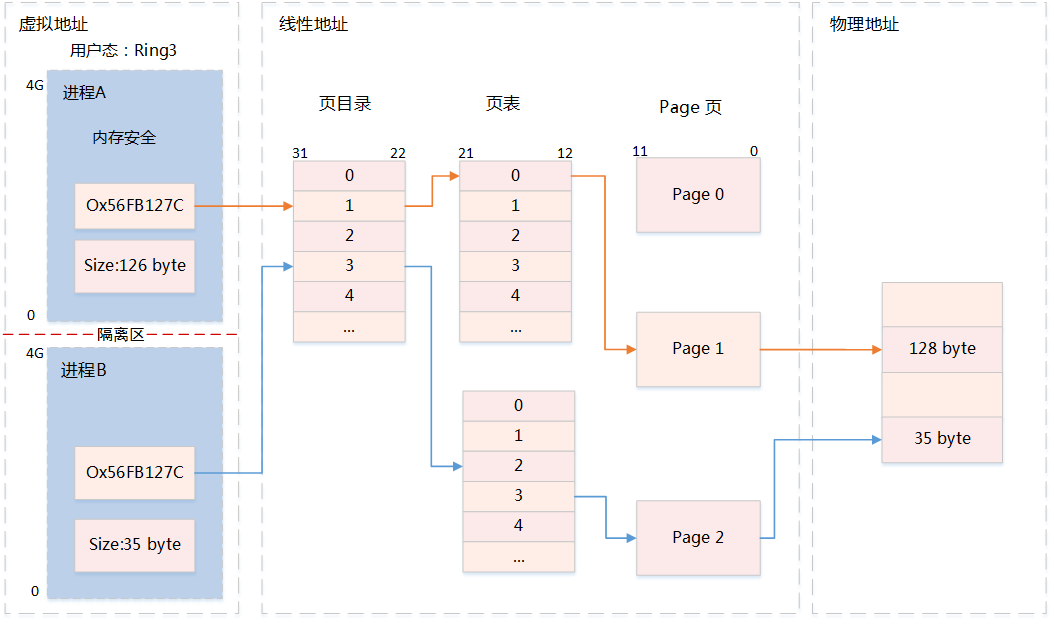

5. Memory Address – Paging Mechanism (32-bit)

- The paging mechanism runs after the segmentation mechanism and converts the linear address into a physical address
- A 32-bit linear address is split into a 10-bit page directory index, a 10-bit page table index, and a 12-bit page offset
- The size of a single page is 4KB
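The 10/10/12 split described above can be sketched in a few lines. This is only an illustration of the bit arithmetic, not how the hardware or kernel is implemented; the names `LinearAddressParts`, `split_linear_address`, and `physical_address` are hypothetical, and the page-table lookup that produces the frame base is assumed to have happened already.

```cpp
#include <cassert>
#include <cstdint>

// Fields of a 32-bit linear address under 10/10/12 two-level paging.
struct LinearAddressParts {
    std::uint32_t directory_index;  // bits 31..22: index into the page directory
    std::uint32_t table_index;      // bits 21..12: index into the page table
    std::uint32_t page_offset;      // bits 11..0 : offset inside the 4KB page
};

constexpr std::uint32_t kPageShift = 12;
constexpr std::uint32_t kPageSize = 1u << kPageShift;  // 4096 bytes
constexpr std::uint32_t kIndexMask = 0x3FFu;           // 10 bits

// Split a linear address into its three fields.
constexpr LinearAddressParts split_linear_address(std::uint32_t linear) {
    return LinearAddressParts{(linear >> 22) & kIndexMask,
                              (linear >> kPageShift) & kIndexMask,
                              linear & (kPageSize - 1)};
}

// Combine a page-frame base address (assumed to come from the page table
// entry) with the page offset of the linear address.
constexpr std::uint32_t physical_address(std::uint32_t frame_base,
                                         std::uint32_t linear) {
    return (frame_base & ~(kPageSize - 1)) | (linear & (kPageSize - 1));
}
```

With 10 bits per index, each page directory and page table holds 1024 entries, and 1024 × 1024 × 4KB covers the full 4GB address space.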

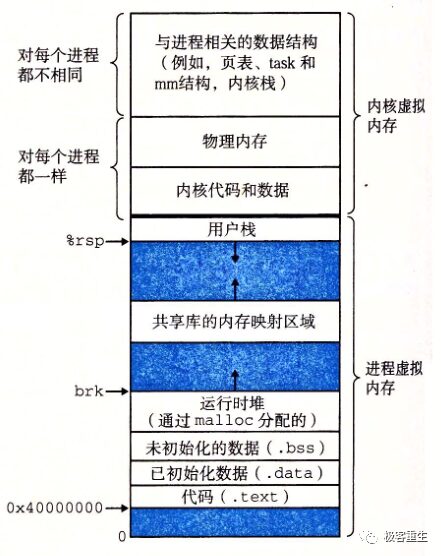

- text: the code segment; holds executable code, string literals, and read-only variables
- data: the data segment; holds initialized global variables in the program
- bss: holds uninitialized global variables in the program
- heap: the runtime heap; the memory area allocated with malloc during program execution
- mmap: the mapping area for shared libraries and anonymous files
- stack: the user process stack

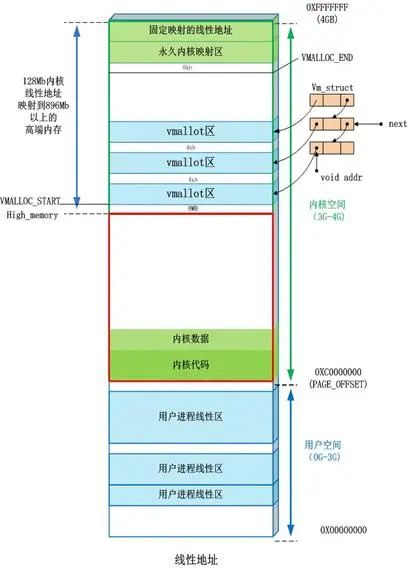

- Direct mapping area: starting at 3G, a region of at most 896M is mapped directly to physical memory
- Dynamic memory mapping area: this area is allocated by the kernel function vmalloc
- Permanent memory mapping area: this area can access high memory
- Fixed mapping area: separated from the top of 4G by only a 4K guard band; each address entry serves a specific purpose, such as ACPI_BASE
- User processes can typically access only user-space virtual addresses and cannot access kernel-space virtual addresses
- Kernel space is mapped by the kernel and does not change with the process; kernel-space addresses have their own page tables, while each user process has a different page table

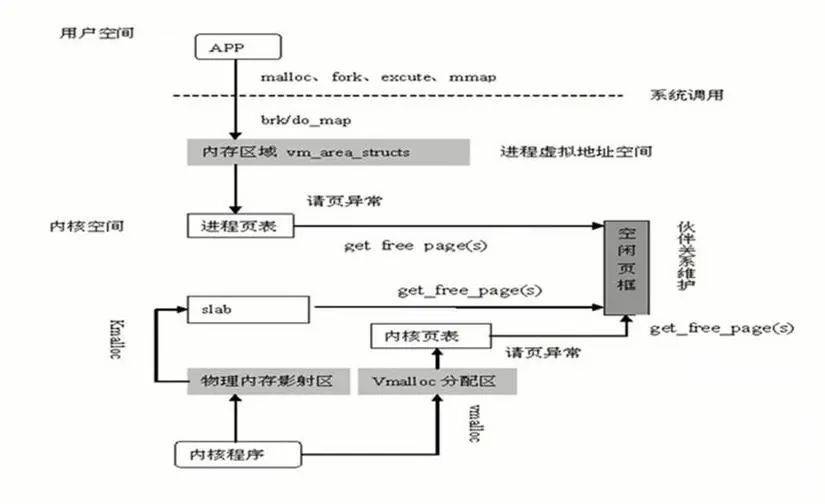

3. Linux Memory Allocation Algorithms

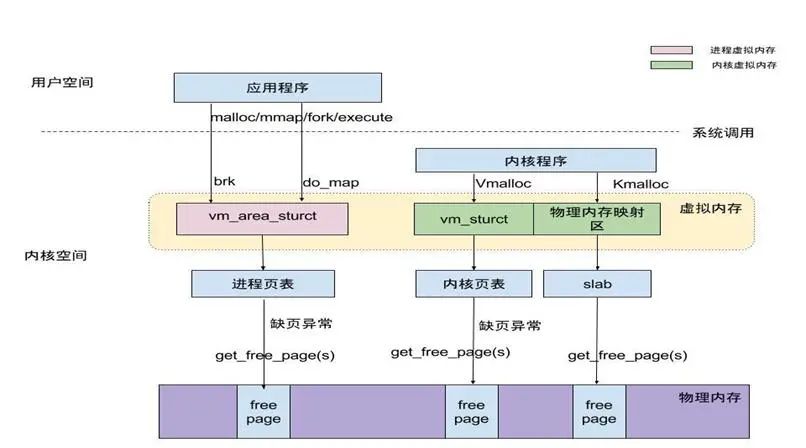

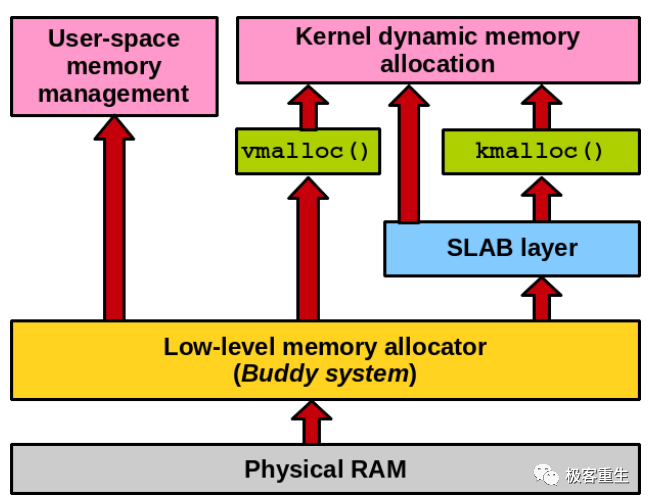

Linux Memory Management Framework

- Causes: allocations are small and long-lived, so repeated requests leave memory fragmented
- Advantages: increases allocation speed, facilitates memory management, and prevents memory leaks
- Disadvantages: heavy fragmentation slows the system down, lowers memory utilization, and wastes a significant amount of memory

- Avoid dynamic memory allocation functions where possible (prefer stack space)
- Allocate and free memory in the same function whenever possible
- Allocate one larger block instead of repeatedly allocating small ones
- Request large blocks in sizes that are powers of 2
- Avoid external fragmentation: buddy system algorithm
- Avoid internal fragmentation: slab algorithm
- Manage memory manually by designing a memory pool

- The buddy system gives the kernel an efficient strategy for allocating groups of contiguous pages and effectively solves the external fragmentation problem
- Memory is allocated in units of page frames
- External fragmentation refers to memory areas that are unallocated (not owned by any process) but too small to satisfy new requests for memory

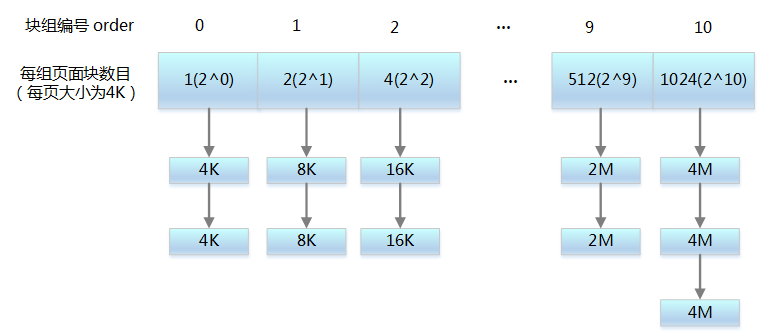

3) Organizational Structure

- All free pages are grouped into 11 linked lists of blocks; the lists hold blocks of 1, 2, 4, 8, 16, 32, 64, 128, 256, 512, and 1024 contiguous page frames respectively. At most 1024 contiguous pages can be requested, which corresponds to 4MB of contiguous memory
- To request a block of 2^i pages, first check the 2^i list; if it has a free block, allocate that block to the requester
- If it has none, check the 2^(i+1) list; if that list has a free block, split it into two 2^i blocks, allocate one to the requester, and insert the other into the 2^i list
- If the 2^(i+1) list has no free block either, repeat step 2 with the next larger list until a list with a free block is found
- If no list has a free block, return a memory allocation failure
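The allocation steps above can be sketched as a small user-space simulation. This is a minimal model under stated assumptions, not the kernel's implementation: blocks are identified by their first page-frame number, each order keeps its free blocks in a `std::set`, and the class name `BuddyFreeLists` and its methods are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <set>

constexpr int kMaxOrder = 11;  // orders 0..10, i.e. blocks of 1..1024 pages

// Toy model of the per-order free lists, keyed by first page-frame number.
class BuddyFreeLists {
public:
    // Start with `top_blocks` free blocks of the largest order (1024 pages).
    explicit BuddyFreeLists(std::size_t top_blocks) {
        for (std::size_t i = 0; i < top_blocks; ++i)
            free_[kMaxOrder - 1].insert(i << (kMaxOrder - 1));
    }

    // Allocate a block of 2^order pages. Returns the first page-frame
    // number of the block, or -1 when no list can satisfy the request.
    long alloc(int order) {
        if (order < 0 || order >= kMaxOrder) return -1;
        int found = order;
        // Walk up to the first list that has a free block.
        while (found < kMaxOrder && free_[found].empty()) ++found;
        if (found == kMaxOrder) return -1;
        std::size_t pfn = *free_[found].begin();
        free_[found].erase(free_[found].begin());
        // Split back down: keep the lower half, free the upper half.
        while (found > order) {
            --found;
            free_[found].insert(pfn + (std::size_t{1} << found));
        }
        return static_cast<long>(pfn);
    }

    std::size_t free_count(int order) const { return free_[order].size(); }

private:
    std::array<std::set<std::size_t>, kMaxOrder> free_;
};
```

Requesting a single page from one free 1024-page block splits it ten times, leaving one free block in each of the lists for orders 0 through 9.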

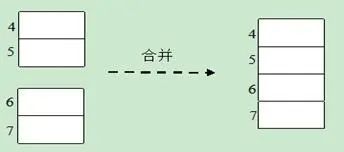

- To release a block of 2^i pages, check the 2^i list for a free block that is physically contiguous with it (its buddy); if there is none, no merge is needed
- If there is one, merge the two into a 2^(i+1) block and continue checking the next larger list until no more merges are possible

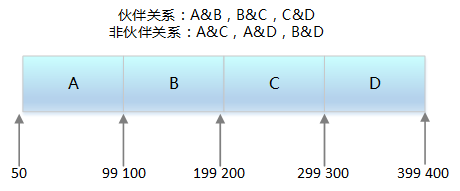

- The two blocks must have the same size
- They must have contiguous physical addresses
- They must come from splitting the same larger block, that is, the start of the lower block is aligned to the size of the merged block
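One common way to express these conditions is with page-frame numbers: if every block of order i starts at a multiple of 2^i, the buddy of a block is found by flipping bit i of its first page-frame number. The sketch below assumes that alignment; the helper names (`buddy_of`, `are_buddies`, `free_and_merge`) are hypothetical and the free lists are a user-space stand-in, not kernel data structures.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <vector>

// First page-frame number of the buddy of the block starting at `pfn`.
// Assumes `pfn` is aligned to 2^order.
constexpr std::size_t buddy_of(std::size_t pfn, int order) {
    return pfn ^ (std::size_t{1} << order);
}

// Two equally sized blocks are buddies only if they differ in bit `order`
// alone: contiguous AND split from the same 2^(order+1) block.
constexpr bool are_buddies(std::size_t a, std::size_t b, int order) {
    return (a ^ b) == (std::size_t{1} << order);
}

// Free a block and keep merging while its buddy is also free.
// `lists[k]` holds the first page-frame numbers of free 2^k blocks.
// Returns the order of the block that was finally inserted.
int free_and_merge(std::vector<std::set<std::size_t>>& lists,
                   std::size_t pfn, int order) {
    const int top = static_cast<int>(lists.size()) - 1;
    while (order < top) {
        auto it = lists[order].find(buddy_of(pfn, order));
        if (it == lists[order].end()) break;   // buddy busy: stop merging
        lists[order].erase(it);
        pfn &= ~(std::size_t{1} << order);     // start of the merged block
        ++order;
    }
    lists[order].insert(pfn);
    return order;
}
```

Blocks starting at pages 4 and 8 with 4 pages each are contiguous and equal in size, yet `are_buddies(4, 8, 2)` is false because they were not split from the same 8-page block.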

- The larger the requested block, the higher the likelihood of failure
- Few scenarios actually need large blocks of memory
- Modify MAX_ORDER and recompile the kernel
- Pass the "mem=" parameter in the kernel boot options, such as "mem=80M", to reserve part of the memory; then use request_mem_region and ioremap_nocache to map the reserved memory into the module. This requires changing the kernel boot parameters but not recompiling the kernel. However, this method does not support the x86 architecture; it supports only ARM, PowerPC, and other non-x86 architectures
- Call alloc_bootmem before the mem_init function in start_kernel to preallocate large memory blocks, which requires recompiling the kernel
- Use the vmalloc function, which kernel code uses to allocate memory that is contiguous in virtual memory but not necessarily contiguous in physical memory

- Unmovable pages have fixed positions in memory and cannot be moved or reclaimed
- Examples: the kernel code segment, the data segment, memory from kernel kmalloc(), memory occupied by kernel threads, etc.
- Reclaimable pages cannot be moved but can be deleted. The kernel reclaims them when they occupy too much memory or when memory is scarce

3) Movable Pages

These pages can be moved freely, and pages used by user-space applications belong to this category. They are mapped through page tables, so when they move to a new location, the page table entries are updated accordingly

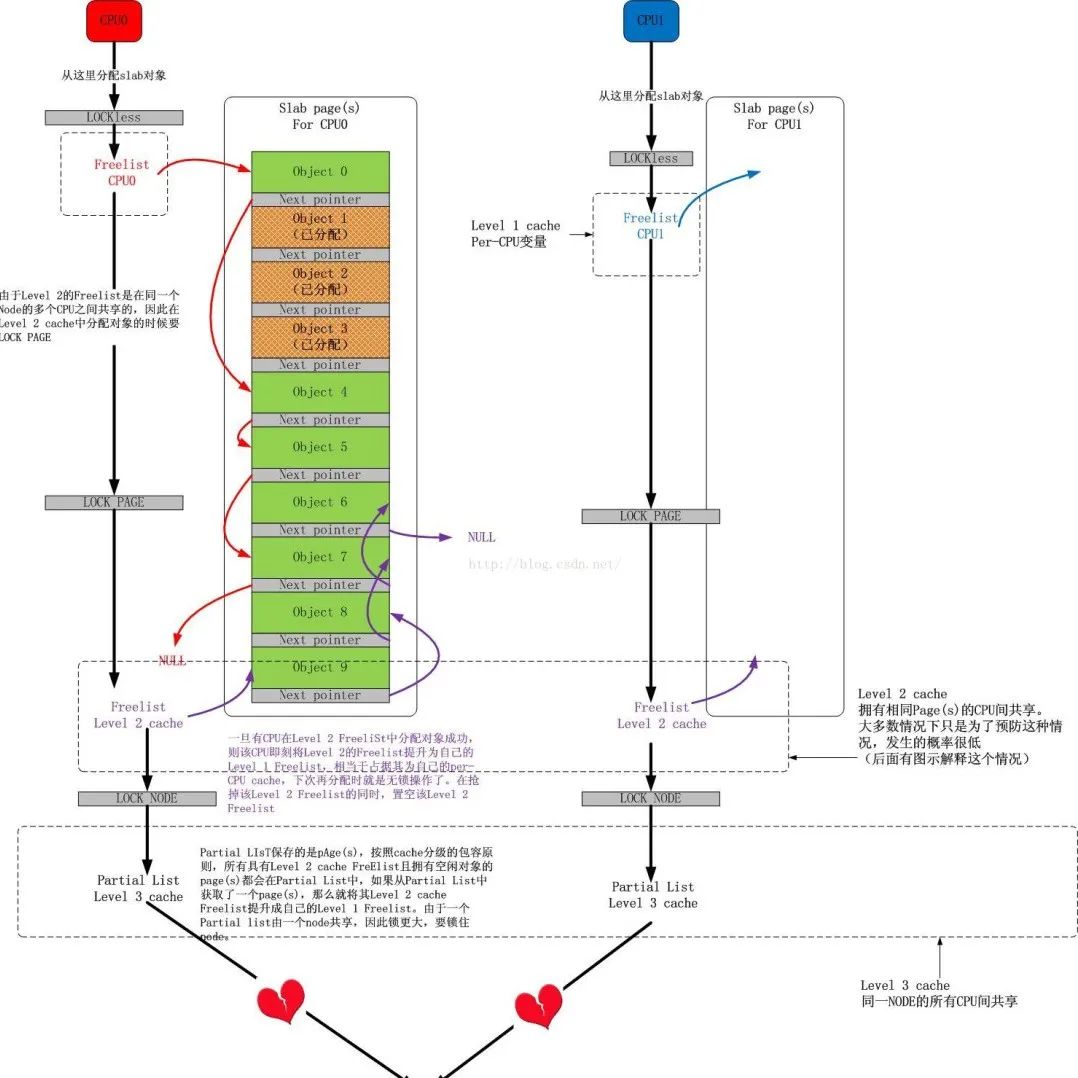

- The slab allocator used by Linux is based on an algorithm first introduced by Jeff Bonwick for the SunOS operating system
- Its basic idea is to keep frequently used kernel objects in a cache, held in an initialized, ready-to-use state. For example, process descriptors are frequently requested and released in the kernel
- Internal fragmentation: the allocated memory space is larger than the requested memory space

3) Basic Goals

- Reduce the internal fragmentation caused by the buddy algorithm when allocating small blocks of contiguous memory
- Cache frequently used objects to reduce the time overhead of allocating, initializing, and releasing objects
- Use coloring techniques to adjust object placement for better use of the hardware cache

- As objects are allocated from and released to slabs, a single slab can move between the slab lists
- Slabs in the slabs_empty list are the main candidates for reclamation (reaping)
- The slab allocator also supports initialization of generic objects, which avoids initializing an object repeatedly for the same purpose

Classic | Illustrated Core Ideas of Linux Memory Performance Optimization

- The slab allocator provides small blocks of contiguous memory through general caches
- The objects provided by the general caches have geometrically distributed sizes, ranging from 32 to 131072 bytes
- The kernel provides two interfaces, kmalloc() and kfree(), for memory allocation and release respectively
- The kernel provides a complete set of interfaces for allocating and releasing dedicated caches, which create slab caches for specific objects based on the parameters passed
- kmem_cache_create() creates a cache for a specified object. It allocates a cache descriptor for the new dedicated cache from the cache_cache general cache and inserts this descriptor into the cache_chain list of cache descriptors
- kmem_cache_alloc() allocates an object from the cache specified by its parameters. Conversely, kmem_cache_free() releases an object back to the specified cache
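To make the general-cache idea concrete, here is a small sketch of how a request could be rounded up to a size class and how much internal fragmentation that causes. It assumes the classes are plain powers of two between 32 and 131072 bytes, which is a simplification of "geometrically distributed"; the function names are hypothetical and none of this is kernel code.

```cpp
#include <cassert>
#include <cstddef>

constexpr std::size_t kMinClass = 32;       // smallest general cache
constexpr std::size_t kMaxClass = 131072;   // largest general cache

// Smallest size class that can hold `request` bytes.
// Returns 0 for a zero-byte request or one larger than the largest class.
inline std::size_t size_class_for(std::size_t request) {
    if (request == 0 || request > kMaxClass) return 0;
    std::size_t cls = kMinClass;
    while (cls < request) cls <<= 1;  // assumed doubling between classes
    return cls;
}

// Bytes allocated but not requested (internal fragmentation).
inline std::size_t internal_waste(std::size_t request) {
    const std::size_t cls = size_class_for(request);
    return cls == 0 ? 0 : cls - request;
}
```

Under these assumptions a 100-byte request lands in the 128-byte class and wastes 28 bytes, which is the motivation for dedicated caches sized exactly to a frequently used object.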

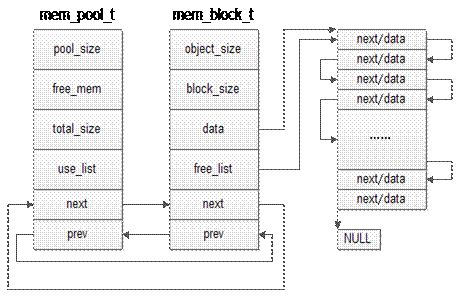

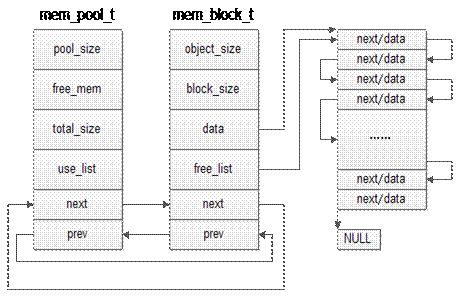

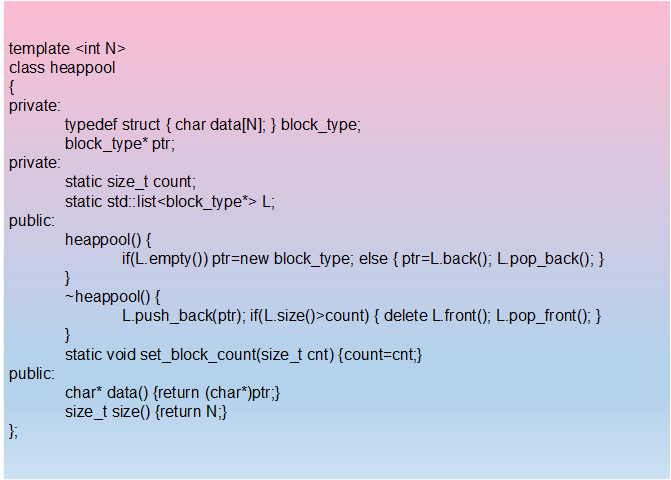

- First, allocate a certain number of memory blocks, generally of equal size, as a reserve
- When new memory is needed, hand out blocks from the pool; if the pool runs out of blocks, allocate new memory
- A significant advantage of this approach is that it minimizes memory fragmentation and improves allocation efficiency
- mempool_create creates a memory pool object
- mempool_alloc is the allocation function; it obtains an object from the pool
- mempool_free releases an object
- mempool_destroy destroys the memory pool
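The pool strategy described above (reserve first, hand out from the reserve, grow when exhausted) can be sketched in user space as follows. This is an analogy only and does not use or mirror the kernel's mempool_* interfaces; the class `FixedBlockPool` and its methods are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// A pool of equally sized raw blocks with a simple free list.
class FixedBlockPool {
public:
    FixedBlockPool(std::size_t block_size, std::size_t reserve_count)
        : block_size_(block_size) {
        grow(reserve_count);  // pre-allocate the reserve up front
    }

    // Hand out one block; allocate a new one if the pool is exhausted.
    void* alloc() {
        if (free_.empty()) grow(1);
        void* block = free_.back();
        free_.pop_back();
        return block;
    }

    // Return a block obtained from alloc() to the pool for reuse.
    void release(void* block) { free_.push_back(block); }

    std::size_t free_blocks() const { return free_.size(); }
    std::size_t total_blocks() const { return storage_.size(); }

private:
    void grow(std::size_t count) {
        for (std::size_t i = 0; i < count; ++i) {
            storage_.push_back(std::make_unique<unsigned char[]>(block_size_));
            free_.push_back(storage_.back().get());
        }
    }

    std::size_t block_size_;
    std::vector<std::unique_ptr<unsigned char[]>> storage_;  // owns memory
    std::vector<void*> free_;                                // reusable blocks
};
```

Because released blocks go back on the free list instead of to the system allocator, repeated allocate/release cycles reuse the same memory and do not fragment the heap further.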

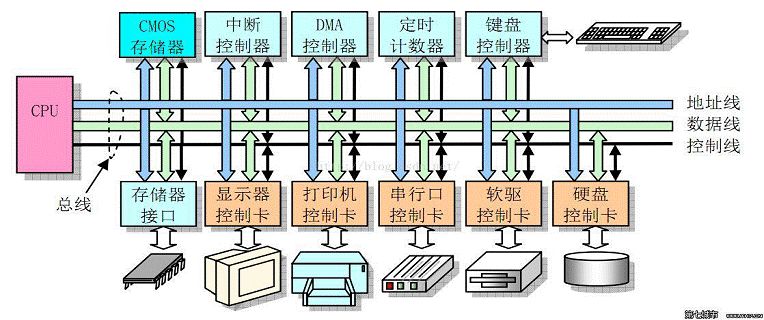

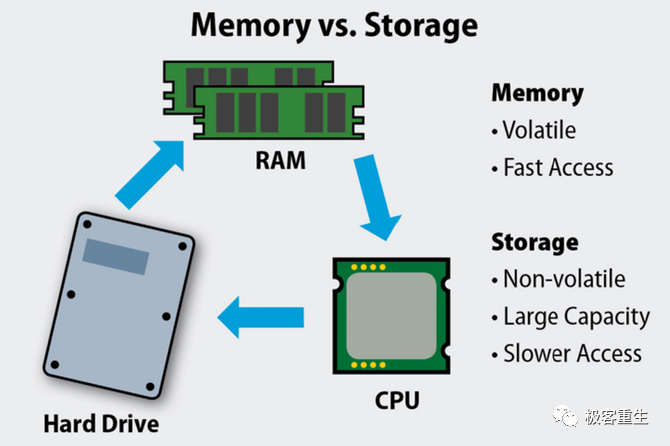

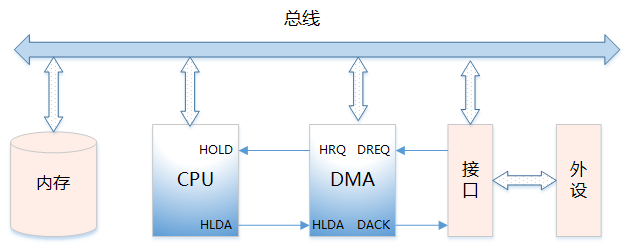

Direct Memory Access (DMA) is a hardware mechanism that lets peripheral devices and main memory transfer I/O data directly, without involving the system processor

2) Functions of the DMA Controller

- Can issue a hold (HOLD) signal to the CPU to request control of the bus
- When the CPU grants the request, the DMA controller takes control of the bus and enters DMA mode
- Can address memory and update address pointers in order to read and write memory
- Can determine the number of bytes to transfer in the current DMA transfer and detect when the transfer has ended
- Issues a DMA end signal so that the CPU can resume normal operation

- DREQ: DMA request signal. Sent by the peripheral device to the DMA controller to request a DMA operation
- DACK: DMA acknowledge signal. Sent by the DMA controller to the peripheral device that requested DMA, indicating that the request has been received and is being processed
- HRQ: signal from the DMA controller to the CPU requesting control of the bus
- HLDA: acknowledge signal from the CPU to the DMA controller granting control of the bus

4. Memory Usage Scenarios

- Page management
- Slab (kmalloc, memory pool)
- User mode memory usage (malloc, realloc, file mapping, shared memory)
- Memory map of the program (stack, heap, code, data)
- Data transfer between kernel and user mode (copy_from_user, copy_to_user)
- Memory mapping (hardware registers, reserved memory)
- DMA memory

- alloca allocates memory on the stack, so there is no need to free it
- malloc allocates uninitialized memory; a program that reads it before writing may run correctly at first and then fail later, once memory is reused and no longer happens to contain zeros
- calloc initializes every byte of the allocated memory to zero
- realloc resizes an existing allocation
- mmap maps a file or other object into memory, where multiple processes can access it
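The differences between these functions can be checked with a short experiment. The sketch below uses only standard library calls; the helper names `calloc_is_zeroed` and `realloc_keeps_prefix` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <cstring>

// calloc guarantees zero-filled memory; verify every byte.
bool calloc_is_zeroed(std::size_t bytes) {
    unsigned char* p = static_cast<unsigned char*>(std::calloc(bytes, 1));
    if (p == nullptr) return false;
    bool all_zero = true;
    for (std::size_t i = 0; i < bytes; ++i) {
        if (p[i] != 0) all_zero = false;
    }
    std::free(p);
    return all_zero;
}

// malloc memory is uninitialized, so write before reading.
// realloc keeps the old contents up to the smaller of the two sizes.
bool realloc_keeps_prefix() {
    char* buf = static_cast<char*>(std::malloc(8));
    if (buf == nullptr) return false;
    std::memcpy(buf, "linux", 6);  // 5 characters plus the terminator
    char* bigger = static_cast<char*>(std::realloc(buf, 4096));
    if (bigger == nullptr) {       // on failure the old block is still valid
        std::free(buf);
        return false;
    }
    const bool kept = std::strcmp(bigger, "linux") == 0;
    std::free(bigger);
    return kept;
}
```

Assigning the result of realloc to a separate pointer, as above, avoids leaking the original block when realloc fails and returns a null pointer.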

3. Kernel Mode Memory Allocation Functions

- __get_free_pages operates directly on page frames and suits allocating large amounts of physically contiguous memory
- kmem_cache_alloc allocates memory through the slab mechanism and suits frequent allocation and release of blocks of the same size; kmalloc is built on kmem_cache_alloc and is the most common way to allocate less than a page frame
- vmalloc maps non-contiguous physical memory to contiguous virtual addresses and suits cases where a large amount of memory is needed but physical contiguity is not required
- dma_alloc_coherent is implemented on top of __alloc_pages and suits DMA operations
- ioremap maps a known physical address to a virtual address and suits cases where the physical address is known, such as device drivers
- alloc_bootmem reserves a block of memory during kernel startup that the kernel's normal memory management does not see; the block must be smaller than physical memory, and using it places high demands on the caller's own memory management

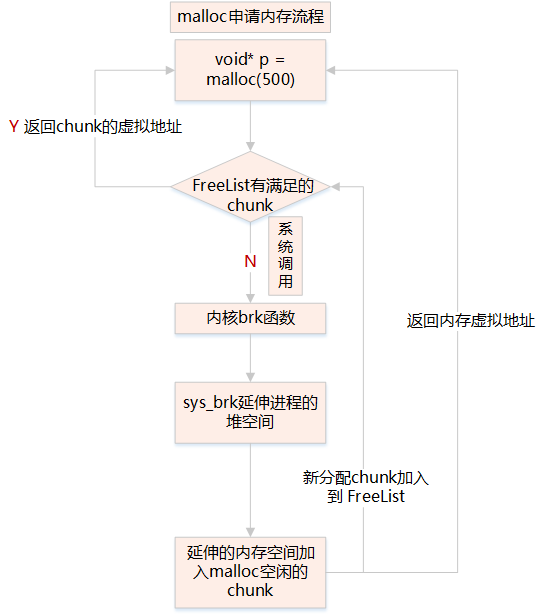

4. Memory Allocation with malloc

- When malloc is called, it searches the free_chunk_list linked list for a memory block large enough to satisfy the request
- The main job of free_chunk_list is to maintain a linked list of free heap buffers
- If no suitable node is found in the list, the process's heap must be extended through the system call sys_brk
- The kernel requests one or more physical pages through __get_free_pages, locates the pte for addr through the process's pgd, and sets that pte to the start address of the physical page
- System calls: brk is used for requests of 128KB or less, do_mmap for requests larger than 128KB
- Each user-mode process has its own virtual address space, so two processes can use the same virtual address
- When a user-mode virtual address that has no physical mapping is accessed, a page fault exception is raised
- The page fault handler enters the kernel, allocates physical memory, and maps it to the user-mode virtual address
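The search-then-extend behavior described above can be modeled with a toy heap. This is a deliberately simplified sketch and not glibc's allocator: it tracks offsets instead of real memory, uses first fit, never coalesces neighbors, and the counter `grow_calls` merely stands in for calls to brk. All names here are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <list>

struct Chunk {
    std::size_t offset;  // start of the free chunk inside the simulated heap
    std::size_t size;    // bytes available in the chunk
};

class ToyHeap {
public:
    // Returns the offset of a block of `size` bytes.
    std::size_t alloc(std::size_t size) {
        // 1) First fit: take the first free chunk that is large enough.
        for (auto it = free_chunks_.begin(); it != free_chunks_.end(); ++it) {
            if (it->size >= size) {
                const std::size_t offset = it->offset;
                it->offset += size;  // keep the remainder on the list
                it->size -= size;
                if (it->size == 0) free_chunks_.erase(it);
                return offset;
            }
        }
        // 2) Nothing fits: extend the heap end (what brk does for a process).
        const std::size_t offset = heap_end_;
        heap_end_ += size;
        ++grow_calls_;
        return offset;
    }

    // Put a block back on the free list (no coalescing in this sketch).
    void release(std::size_t offset, std::size_t size) {
        free_chunks_.push_back(Chunk{offset, size});
    }

    std::size_t heap_end() const { return heap_end_; }
    int grow_calls() const { return grow_calls_; }

private:
    std::list<Chunk> free_chunks_;
    std::size_t heap_end_ = 0;
    int grow_calls_ = 0;
};
```

After a 100-byte block is released, a 60-byte request is served from it without growing the heap, while a later 80-byte request no longer fits in the 40-byte remainder and extends the heap instead.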

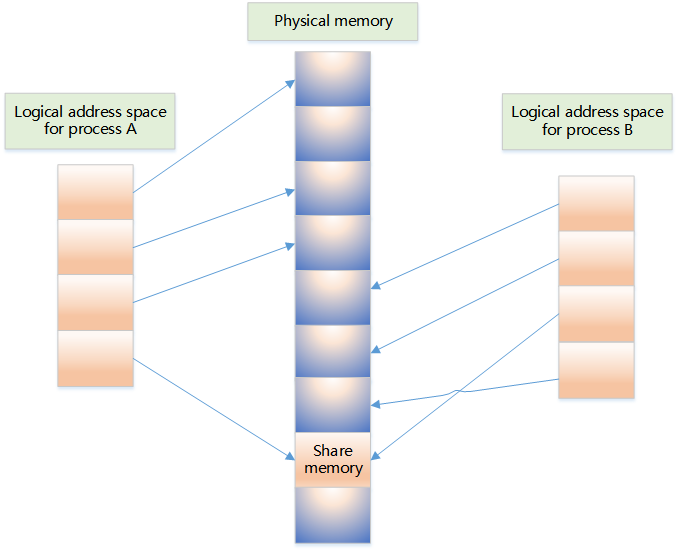

- Shared memory allows multiple unrelated processes to access the same region of logical memory
- For transferring data between two running processes, shared memory is an extremely efficient form of inter-process communication because it reduces the number of data copies
- shmget creates shared memory
- shmat enables access to the shared memory by attaching it to the current process's address space
- shmdt detaches the shared memory from the current process
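A minimal sketch of the call sequence on Linux follows. For brevity it attaches, writes, and reads within a single process; a second process would call shmat with the same segment id. The segment size, the permissions, and the helper name `shared_memory_round_trip` are assumptions for illustration, and `shmctl` with `IPC_RMID` (not mentioned above) is used to remove the segment afterwards.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <string>
#include <sys/ipc.h>
#include <sys/shm.h>

// Writes `text` into a new shared memory segment and reads it back.
// Returns an empty string if any step fails.
std::string shared_memory_round_trip(const std::string& text) {
    const std::size_t segment_size = 4096;
    if (text.size() + 1 > segment_size) return {};

    // shmget: create a private segment readable/writable by the owner.
    const int id = shmget(IPC_PRIVATE, segment_size, IPC_CREAT | 0600);
    if (id == -1) return {};

    // shmat: attach the segment to this process's address space.
    void* addr = shmat(id, nullptr, 0);
    if (addr == reinterpret_cast<void*>(-1)) {
        shmctl(id, IPC_RMID, nullptr);
        return {};
    }

    std::memcpy(addr, text.c_str(), text.size() + 1);
    std::string read_back(static_cast<const char*>(addr));

    shmdt(addr);                    // detach from this process
    shmctl(id, IPC_RMID, nullptr);  // mark the segment for removal
    return read_back;
}
```

Real multi-process use also needs synchronization (for example a semaphore) so that readers do not observe partially written data.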

5. Pitfalls of Memory Usage

- new and delete calls not matched in class constructors and destructors
- Nested object pointers not cleaned up correctly
- Base class destructor not declared virtual
- If a base class pointer points to a subclass object and the base class destructor is not virtual, the subclass destructor is not called and the subclass's resources are not released correctly, which causes memory leaks
- Missing copy constructor: passing by value invokes the copy constructor, while passing by reference does not
- An array of pointers to objects is not the same as an array of objects: the array stores pointers, so the space for each object must be released as well as the space for the pointers
- A missing overloaded assignment operator also leaks memory when the class's size is variable, because objects are then copied member by member
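The virtual destructor pitfall is easiest to see with a subclass that owns a resource. The sketch below shows the correct form only, since deleting through a base pointer without a virtual destructor is undefined behavior; the `Shape` and `Triangle` types and the counter are hypothetical illustration names.

```cpp
#include <cassert>

struct Shape {
    virtual ~Shape() = default;  // virtual: delete via Shape* runs ~Triangle
    virtual int sides() const = 0;
};

struct Triangle : Shape {
    explicit Triangle(int* destroyed)
        : destroyed_(destroyed), buffer_(new int[3]{}) {}
    ~Triangle() override {
        delete[] buffer_;  // the subclass resource is released here
        ++*destroyed_;     // record that the subclass destructor ran
    }
    // Copying would double-delete buffer_, so forbid it in this sketch.
    Triangle(const Triangle&) = delete;
    Triangle& operator=(const Triangle&) = delete;

    int sides() const override { return 3; }

private:
    int* destroyed_;
    int* buffer_;
};

// Destroys a Triangle through a base-class pointer and reports how many
// times the subclass destructor ran.
int destroy_through_base() {
    int destroyed = 0;
    Shape* shape = new Triangle(&destroyed);
    delete shape;
    return destroyed;
}
```

Deleting the copy constructor and assignment operator here also sidesteps the member-by-member copy problem from the list above; a class that must be copyable would define both to duplicate `buffer_`.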

- Pointer variables not initialized

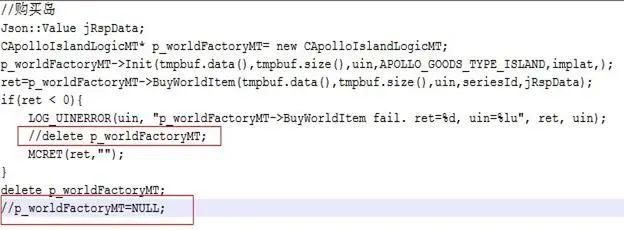

- A pointer is freed or deleted but not set to NULL
- A pointer outlives the variable it points to; for example, returning a pointer to stack memory produces a dangling pointer
- Dereferencing a null pointer (a null check is required)
- sizeof cannot retrieve the size of an array once the array has decayed to a pointer, for example when it is passed as a function parameter
- Attempting to modify a constant, e.g., char *p = "1234"; *p = '1';

- Shared variables in multi-threaded code not qualified with volatile
- Multi-threaded access to global variables without locking
- Global variables are only valid within a single process
- Multiple processes writing to shared memory without synchronization
- mmap memory mappings are not safe across multiple processes

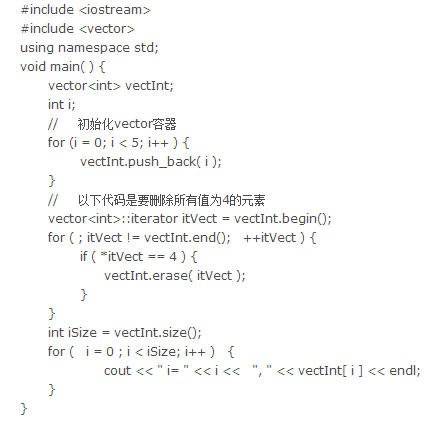

- Iterators invalidated after deletion
- Adding elements (insert, push_back, etc.) or deleting elements invalidates iterators of sequence containers
- Incorrect example: erasing the element at the current iterator invalidates that iterator
- Correct example: when erasing through an iterator, save the next iterator first
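For sequence containers, the "save the next iterator" rule is usually written with the return value of `erase`, which is the iterator following the removed element. A minimal sketch, with the hypothetical helper `remove_even`:

```cpp
#include <cassert>
#include <vector>

// Removes all even numbers while iterating, without using an
// invalidated iterator.
std::vector<int> remove_even(std::vector<int> values) {
    for (auto it = values.begin(); it != values.end(); /* no ++ here */) {
        if (*it % 2 == 0) {
            it = values.erase(it);  // erase returns the next valid iterator
        } else {
            ++it;                   // advance only when nothing was erased
        }
    }
    return values;
}
```

Writing `values.erase(it); ++it;` instead would increment an iterator that erase has already invalidated.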

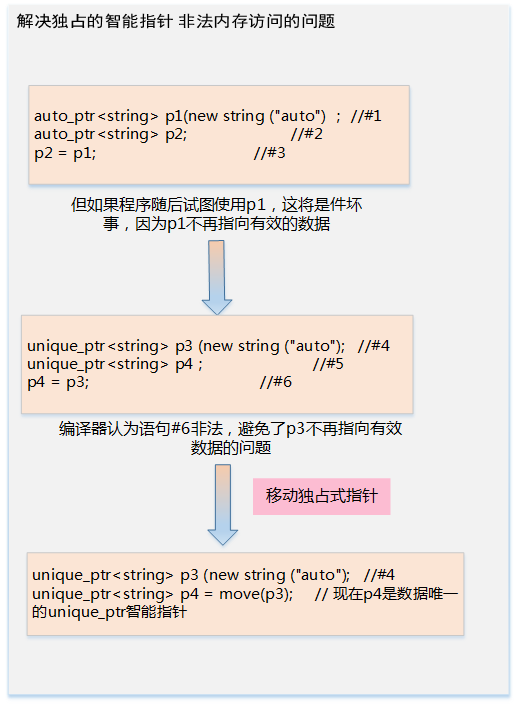

Replace auto_ptr with unique_ptr


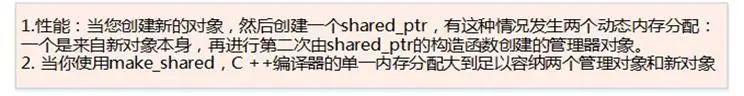

Use make_shared to initialize a shared_ptr


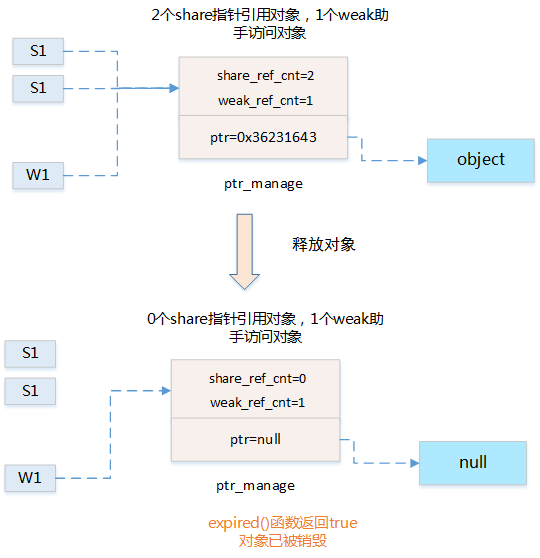

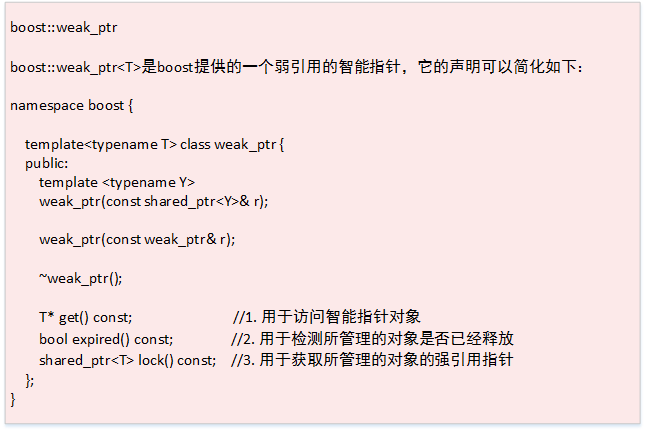

weak_ptr as a smart pointer assistant

(1) Principle Analysis:

(2) Data Structure:

(3) Usage:

- lock() obtains a strong reference (a shared_ptr) to the managed object
- expired() checks whether the managed object has been released
- get() on the shared_ptr returned by lock() accesses the managed object (weak_ptr itself has no get())
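The smart pointer recommendations above can be exercised together in a short sketch. Only standard library facilities are used; the `Session` type and the two helper functions are hypothetical.

```cpp
#include <cassert>
#include <memory>
#include <utility>

struct Session {
    explicit Session(int session_id) : id(session_id) {}
    int id;
};

// unique_ptr (the replacement for auto_ptr) transfers ownership only
// through an explicit std::move.
bool unique_ptr_transfers_ownership() {
    std::unique_ptr<Session> first(new Session(1));
    std::unique_ptr<Session> second = std::move(first);
    return first == nullptr && second->id == 1;
}

// weak_ptr observes an object owned by shared_ptr without keeping it alive.
// Returns the id seen through lock(), or -1 if the checks fail.
int weak_ptr_demo() {
    std::weak_ptr<Session> observer;
    int seen = 0;
    {
        // make_shared allocates the object and its control block together.
        std::shared_ptr<Session> owner = std::make_shared<Session>(7);
        observer = owner;
        if (std::shared_ptr<Session> locked = observer.lock()) {
            seen = locked->id;  // safe: `locked` keeps the object alive
        }
    }  // owner is destroyed here, so the Session is released
    if (!observer.expired()) return -1;
    if (observer.lock() != nullptr) return -1;
    return seen;
}
```

Checking the result of `lock()` rather than calling `expired()` first avoids a race in multi-threaded code, because the returned shared_ptr holds the object for as long as it is in scope.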

6. C++11 Smaller, Faster, Safer

- std::atomic: atomic data types for multi-thread safety
- std::array: a fixed-length array with about the same overhead as a built-in array; unlike std::vector, its length is fixed and cannot grow dynamically
- std::vector: shrink_to_fit() slims a vector down by requesting that its capacity be reduced to size()
- std::forward_list: a singly linked list (std::list is a doubly linked list); where only forward traversal is needed, forward_list saves memory and has better insertion and deletion performance than list
- std::unordered_map and std::unordered_set: hash-based unordered containers with average O(1) insertion, deletion, and search; they perform better when the order of elements does not matter
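As a quick illustration of vector slimming, the sketch below reserves far more capacity than it needs and then calls `shrink_to_fit()`. The standard describes the call as a non-binding request, so the code reports the capacities rather than assuming an exact result; `CapacityReport` and `shrink_demo` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct CapacityReport {
    std::size_t before;  // capacity after reserve and push_back
    std::size_t after;   // capacity after shrink_to_fit
    std::size_t size;    // number of stored elements
};

CapacityReport shrink_demo() {
    std::vector<int> values;
    values.reserve(1000);  // capacity is now at least 1000
    for (int i = 0; i < 10; ++i) values.push_back(i);

    CapacityReport report{values.capacity(), 0, values.size()};
    values.shrink_to_fit();  // request: reduce capacity toward size()
    report.after = values.capacity();
    return report;
}
```

On common standard library implementations the capacity drops to 10 here, but portable code should only rely on `after >= size`.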

6. How to View Memory

Memory usage in the system: /proc/meminfo

    $ cat /proc/meminfo
    MemTotal:        8052444 kB   # Total size of all memory (RAM), minus some reserved space and the kernel size
    MemFree:         2754588 kB   # Completely unused physical memory, LowFree+HighFree
    MemAvailable:    3934252 kB   # Maximum memory available for starting a new application without using swap space, calculated as: MemFree+Active(file)+Inactive(file)-(watermark+min(watermark,Active(file)+Inactive(file)/2))
    Buffers:          137128 kB   # Cache pages occupied by block devices, including direct reads/writes of block devices and file system metadata such as superblock cache pages
    Cached:          1948128 kB   # Cache pages occupied by normal file data
    SwapCached:            0 kB   # The swap cache holds anonymous pages that have been selected for swapping but not yet written to the physical swap area. Anonymous pages, such as memory allocated by user processes, are not associated with any file; if swapping occurs, they are written to the swap area
    Active:          3650920 kB   # Active includes Active(anon) and Active(file)
    Inactive:        1343420 kB   # Inactive includes Inactive(anon) and Inactive(file)
    Active(anon):    2913304 kB   # Anonymous pages. User process memory pages are of two types: pages associated with files (such as program files and data files), called file pages or mapped pages, and pages unrelated to files (such as the process stack and memory allocated with malloc), called anonymous pages
    Inactive(anon):   727808 kB   # See above
    Active(file):     737616 kB   # See above
    Inactive(file):   615612 kB   # See above
    SwapTotal:       8265724 kB   # Total size of available swap space (a swap partition sets aside part of the disk for running programs when physical memory is insufficient)
    SwapFree:        8265724 kB   # Remaining swap space
    Dirty:               104 kB   # Memory waiting to be written to disk
    Writeback:             0 kB   # Memory currently being written back
    AnonPages:       2909332 kB   # Size of memory for unmapped pages
    Mapped:           815524 kB   # Size of mapped devices and files
    Shmem:            732032 kB   # Size of shared memory
    Slab:             153096 kB   # Size of kernel data structure slab
    SReclaimable:      99684 kB   # Size of reclaimable slab
    SUnreclaim:        53412 kB   # Size of unreclaimable slab
    KernelStack:       14288 kB
    PageTables:        62192 kB
    NFS_Unstable:          0 kB
    Bounce:                0 kB
    WritebackTmp:          0 kB
    CommitLimit:    12291944 kB
    Committed_AS:   11398920 kB
    VmallocTotal:   34359738367 kB
    VmallocUsed:           0 kB
    VmallocChunk:          0 kB
    HardwareCorrupted:     0 kB
    AnonHugePages:   1380352 kB
    CmaTotal:              0 kB
    CmaFree:               0 kB
    HugePages_Total:       0
    HugePages_Free:        0
    HugePages_Rsvd:        0
    HugePages_Surp:        0
    Hugepagesize:       2048 kB
    DirectMap4k:      201472 kB
    DirectMap2M:     5967872 kB
    DirectMap1G:     3145728 kB

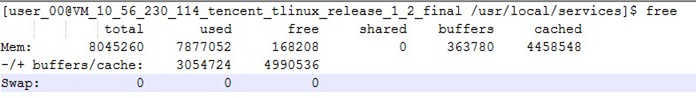

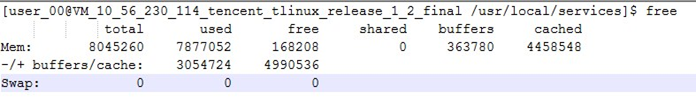

Query total memory usage: free

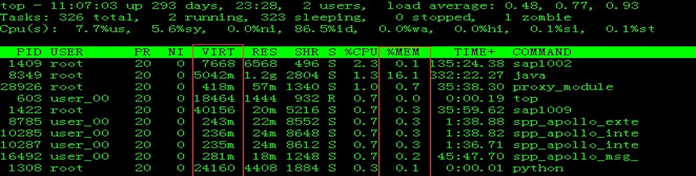

Query process CPU and memory usage ratio: top

Virtual memory statistics: vmstat

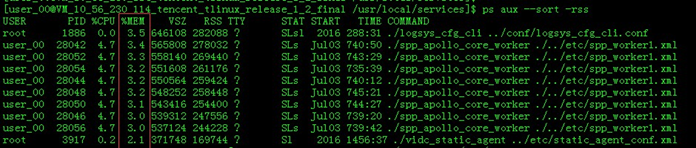

Process memory consumption ratio and sorting: ps aux --sort -rss

View buddy system information

The current state of the buddy system can be viewed with the cat /proc/buddyinfo command

    $ cat /proc/buddyinfo
    Node 0, zone      DMA     23     15      4      5      2      3      3      2      3      1      0
    Node 0, zone   Normal    149    100     52     33     23      5     32      8     12      2     59
    Node 0, zone  HighMem     11     21     23     49     29     15      8     16     12      2    142

View slab information

You can view it with the cat /proc/slabinfo command

    $ cat /proc/slabinfo
    slabinfo - version: 2.1
    # name <active_objs> <num_objs> <objsize> <objperslab> <pagesperslab> : tunables <limit> <batchcount> <sharedfactor> : slabdata <active_slabs> <num_slabs> <sharedavail>
    bridge_fdb_cache 0 0 64 59 1 : tunables 120 60 0 : slabdata 0 0 0
    nf_conntrack_expect 0 0 240 16 1 : tunables 120 60 0 : slabdata 0 0 0
    nf_conntrack_ffffffff81f6f600 0 0 304 13 1 : tunables 54 27 0 : slabdata 0 0 0
    iser_descriptors 0 0 128 30 1 : tunables 120 60 0 : slabdata 0 0 0
    ib_mad 0 0 448 8 1 : tunables 54 27 0 : slabdata 0 0 0
    fib6_nodes 22 59 64 59 1 : tunables 120 60 0 : slabdata 1 1 0
    ip6_dst_cache 13 24 320 12 1 : tunables 54 27 0 : slabdata 2 2 0
    ndisc_cache 1 10 384 10 1 : tunables 54 27 0 : slabdata 1 1 0
    ip6_mrt_cache 0 0 128 30 1 : tunables 120 60 0 : slabdata 0 0 0

Release System Memory Cache

You can release it through /proc/sys/vm/drop_caches

    # To free pagecache, use
    echo 1 > /proc/sys/vm/drop_caches
    # To free dentries and inodes, use
    echo 2 > /proc/sys/vm/drop_caches
    # To free pagecache, dentries and inodes, use
    echo 3 > /proc/sys/vm/drop_caches

Copyright Statement

Source: Geek Rebirth, Edited by: nhyilin

For academic sharing only, copyright belongs to the original author.
