Hello everyone, today I would like to share a paper published in 2023 in Science Robotics, titled “Human motor augmentation with an extra robotic arm without functional interference”. The paper evaluates a multimodal human-machine interface (HMI) based on gaze detection and diaphragmatic breathing, using a purpose-built modular neuro-robotics platform that integrates virtual reality and bilateral upper limb exoskeletons. The results indicate that the proposed HMI does not interfere with speech or visual exploration, and that it can be used to control an extra virtual arm either independently of or in coordination with the biological arms. Participants' performance improved significantly with daily training and they retained what they had learned, while providing artificial tactile feedback brought no further improvement. As a proof of concept, both naïve and experienced participants used a simplified version of the HMI to control a wearable extra robotic arm (XRA), showing that the proposed HMI can also be used effectively to control a physical device. Experienced users achieved a success rate 22.2% higher than naïve users, and naïve users still reached an average success rate of 74% on their first use of the system, which supports the feasibility of VR-based testing and training. The first authors of this paper are Giulia Dominijanni, Daniel Leal Pinheiro, and Leonardo Pollina, and the corresponding authors are Solaiman Shokur and Silvestro Micera.

Humans have always developed and used tools to enhance their sensory and motor skills. The proficient use of tools is one of the key characteristics of humanity and has played a fundamental role in our evolution. A fascinating possibility is to provide additional limbs for users to wear and control, further enhancing our capabilities. Recently, extra robotic limbs have transitioned from purely fictional realms to exciting tools that provide additional degrees of freedom to both healthy and clinical populations.

Various prototypes with different mechanical designs, actuation systems, and features have been proposed, most of them consisting of one or two extra arms or fingers. Extra robotic fingers have been tested in augmentation and rehabilitation applications, with HMIs based on simple switch buttons, biological arm kinematics, toe-driven force sensors, foot motion tracking, and muscle activity recordings.

Extra robotic arms (XRAs) have focused primarily on functional augmentation. Their HMIs are often based on foot movements, a strategy that limits the user's ability to walk. Moreover, only a few have gone beyond an initial proof of concept with limited functionality, and several studies have restricted their evaluations to virtual extra arms without translating them into physical devices. As this paper notes, neural resource allocation is a major challenge in this field: identifying and validating sensory-motor control strategies that allow the extra limb to be controlled independently of, and in coordination with, the natural limbs, without interfering with other user functions, is a primary requirement for the real-world use of XRAs. Some pioneering studies have begun to address this critical issue systematically, although they initially limited their assessments to the control of two-dimensional (2D) or three-dimensional (3D) cursors.

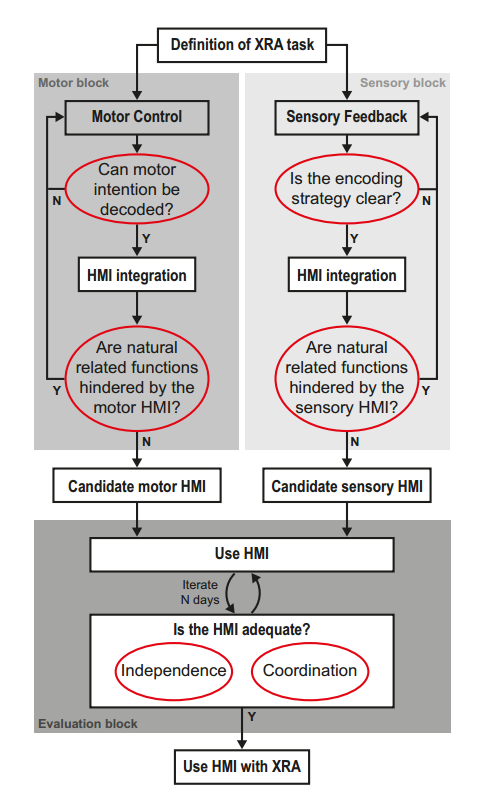

This paper proposes a general pipeline for developing motor, sensory, or sensory-motor HMIs for XRAs given a defined task. The pipeline consists of three blocks: one for developing candidate motor HMIs (motor block), one for developing candidate sensory HMIs (sensory block), and one containing the evaluation steps for the candidate HMIs or their combinations (evaluation block). The components and steps are illustrated in Figure 1.

Figure 1 illustrates the development pipeline for XRA HMIs.

HMI design

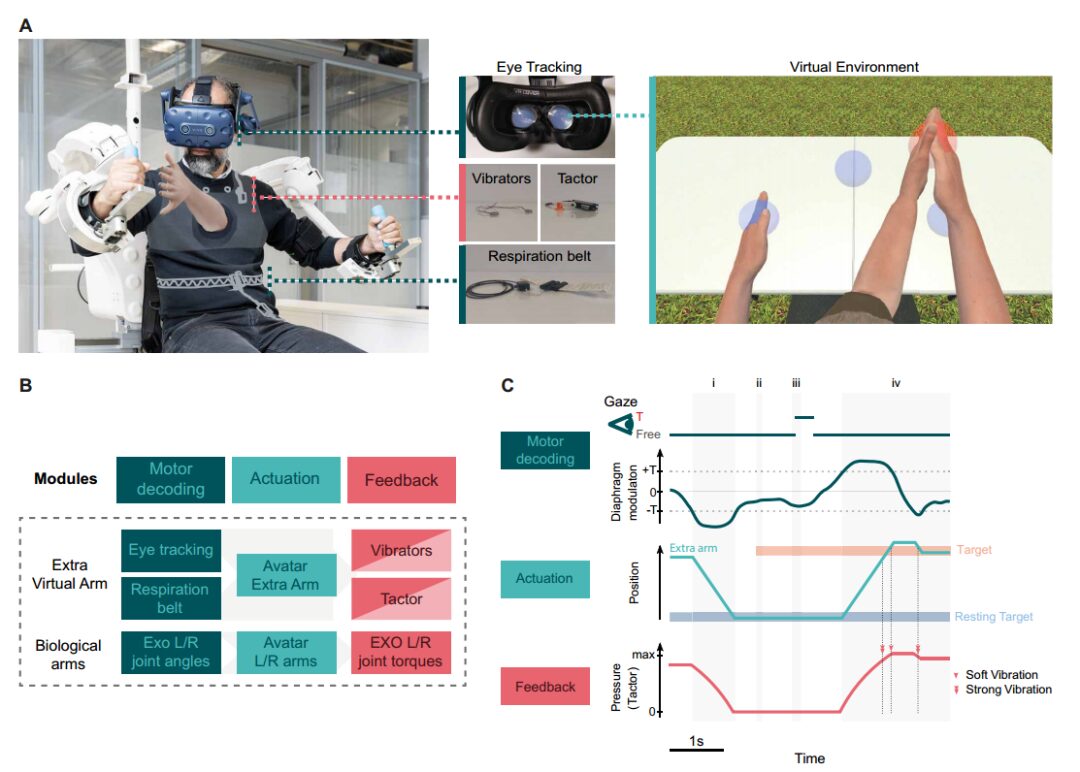

During reaching movements, vision and movement are tightly coordinated: a saccade (a rapid eye movement that brings the area of interest onto the fovea) occurs before the arm movement starts. Gaze is therefore a valuable source of information about the intended target; it has previously been used for XRAs and incorporated into hybrid brain-computer interfaces (BCIs) to control upper limb exoskeletons. Here, eye movements are tracked to determine whether the user is looking at a reachable target, which reduces control of the extra arm's 3D reaching movement to a single degree of freedom: motion along the direction between the end effector and the selected target. Along this direction, the user controls the extra limb by modulating diaphragmatic expansion (Figure 2C). An adaptive two-level threshold determines which of the three possible control states is active. To move the end effector forward, the user inhales to expand the diaphragm, while diaphragm contraction is interpreted as the intention to move backward. With a relaxed diaphragm, the user can breathe normally and the arm holds its current position. The upper threshold is set 50% above the average maximal expansion, and the lower threshold 50% below the average minimal contraction; both thresholds are updated every 100 milliseconds. The diaphragm modulation signals are recorded with a BrainVision breathing belt connected to an ANT neural recording system sampling at 512 Hz, and filtered with a second-order high-pass Butterworth filter with a cutoff frequency of 25.6 Hz.
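The three-state breathing decoder described above can be sketched as follows. This is a minimal Python illustration, not the authors' code: it assumes a zero-centred belt signal, reads the thresholds as sitting 50% beyond the average breathing extremes (the `margin` parameter), and assumes the peak and trough values of recent breathing cycles are already available. The function names are hypothetical and the Butterworth filtering step is omitted.

```python
from statistics import mean

FS_HZ = 512            # sampling rate stated in the text
UPDATE_PERIOD_S = 0.1  # thresholds refreshed every 100 ms

FORWARD, BACKWARD, HOLD = "forward", "backward", "hold"


def samples_per_update(fs_hz=FS_HZ, period_s=UPDATE_PERIOD_S):
    """Number of new samples between two threshold updates (51 at 512 Hz)."""
    return int(fs_hz * period_s)


def adaptive_thresholds(recent_peaks, recent_troughs, margin=0.5):
    """Place each threshold `margin` (here 50%) beyond the average breathing extreme.

    Assumes a zero-centred signal: peaks are positive (expansion) and troughs
    are negative (contraction), so scaling both by (1 + margin) moves them outward.
    """
    upper = (1 + margin) * mean(recent_peaks)
    lower = (1 + margin) * mean(recent_troughs)
    return upper, lower


def decode(sample, upper, lower):
    """Map one belt sample to one of the three control states."""
    if sample > upper:
        return FORWARD   # deliberate diaphragm expansion: move toward the target
    if sample < lower:
        return BACKWARD  # deliberate contraction: move away from the target
    return HOLD          # normal breathing between thresholds: keep position
```

For example, `adaptive_thresholds([2.0, 4.0], [-2.0, -4.0])` gives thresholds of 4.5 and -4.5, so a sample of 5.0 decodes as forward while an ordinary excursion of 1.0 decodes as hold. Recomputing the thresholds every `samples_per_update()` samples mirrors the 100 ms refresh.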

Figure 2 illustrates the neuro-robotics platform and HMI testing for extra arm control.

Tactile feedback display

The authors developed a tactile display that provides the user with tactile and proprioceptive feedback from the extra limb. The display consists of two modules: a 3D-printed lever system driven by a servo motor, and a pair of coin vibration motors (Figure 2A). Inspired by the successful use of vibrators and pressure actuators in upper limb prosthetics, and to prevent user discomfort by avoiding continuous vibration, a hybrid encoding strategy was chosen for contact and proprioceptive information. The first module is placed over the left pectoralis major and delivers pressure stimuli to the skin that encode the distance of the extra limb's end effector from a resting position, thus providing proprioceptive information. When the extra hand rests close to the body, the lever head touches the skin without indenting it; as the hand moves away from the body, the pressure increases with distance from the resting position. The two coin vibration motors of the second module are placed on the chest, one on the left and one on the right, and encode tactile information: contact with the target or the biological arm is signaled by a high-intensity vibration, and loss of contact by a low-intensity vibration. Each vibration lasts 250 milliseconds. Both modules are driven by an Arduino Mega 2560 (ATmega2560) through a custom MATLAB script that maps the normalized distance onto the servo rotation range (10° to 80°) and sets the vibration intensity of the coin motors via pulse-width modulation.
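The distance-to-pressure and contact-to-vibration encoding can be sketched as below. The 10° to 80° servo range, the 250 ms pulse and the onset/offset logic come from the text; which end of the servo range corresponds to the resting position, the maximum distance used for normalization, and the 8-bit PWM duty values are assumptions, and the original system used a MATLAB script rather than Python.

```python
SERVO_MIN_DEG = 10.0   # assumed to correspond to the resting position
SERVO_MAX_DEG = 80.0   # assumed to correspond to maximum extension
PULSE_MS = 250         # duration of each vibration

# Hypothetical 8-bit PWM duty cycles for the coin motors (not given in the text).
DUTY_ONSET = 255       # high-intensity pulse: contact starts
DUTY_OFFSET = 100      # low-intensity pulse: contact ends


def servo_angle(distance, max_distance):
    """Linearly map end-effector distance from rest onto the servo range."""
    d = min(max(distance / max_distance, 0.0), 1.0)  # normalise and clip to [0, 1]
    return SERVO_MIN_DEG + d * (SERVO_MAX_DEG - SERVO_MIN_DEG)


def vibration_command(was_in_contact, is_in_contact):
    """Return (duty, duration_ms) when the contact state changes, otherwise None."""
    if is_in_contact and not was_in_contact:
        return DUTY_ONSET, PULSE_MS
    if was_in_contact and not is_in_contact:
        return DUTY_OFFSET, PULSE_MS
    return None  # no change: no vibration, avoiding continuous stimulation
```

Emitting a pulse only on state changes reflects the stated goal of avoiding continuous vibration, while the servo provides the continuous proprioceptive channel.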

XVA and modular neuro-robotics platform

To test the proposed HMI in a flexible environment, the authors developed an immersive virtual reality in which a user-controlled humanoid avatar is equipped with an extra upper limb, the extra virtual arm (XVA). To prevent the XVA hand from being identified as either a left or a right hand, it has four fingers and two thumbs (Figure 2A). In the experiments described here, the XVA is always anchored at the center of the chest to preserve body symmetry, although the anchoring point can be chosen freely. The XVA has a customized kinematic chain inspired by the human arm and is controlled through inverse kinematics, taking the position and orientation of the end effector (the XVA hand) as input. The virtual environment (VE) is developed in Unity and presented from a first-person perspective to participants wearing a Vive Pro Eye head-mounted display (HMD). The VE and its components are the core of the proposed modular testing platform, which includes a control loop and a sensory loop, with different implementations for the XVA and the natural arms (Figure 2B).
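The XVA's customized 3D kinematic chain is not described in enough detail to reproduce, so the sketch below only illustrates the inverse-kinematics idea on a generic two-link planar arm: given a desired hand position, solve for the joint angles. The link lengths and the `elbow` branch parameter are illustrative, and handling hand orientation, as the XVA does, would require additional joints.

```python
import math


def forward_kinematics(q1, q2, l1, l2):
    """Hand position of a planar two-link arm for joint angles q1, q2 (radians)."""
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return x, y


def inverse_kinematics(x, y, l1, l2, elbow=1):
    """Closed-form joint angles that place the hand at (x, y).

    `elbow` (+1 or -1) selects one of the two mirror-image solutions.
    """
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)  # law of cosines
    if abs(c2) > 1.0:
        raise ValueError("target is outside the reachable workspace")
    s2 = elbow * math.sqrt(1.0 - c2 * c2)
    q2 = math.atan2(s2, c2)
    q1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return q1, q2
```

Feeding the returned angles back into `forward_kinematics` recovers the requested hand position, which is a convenient sanity check for any IK solver.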

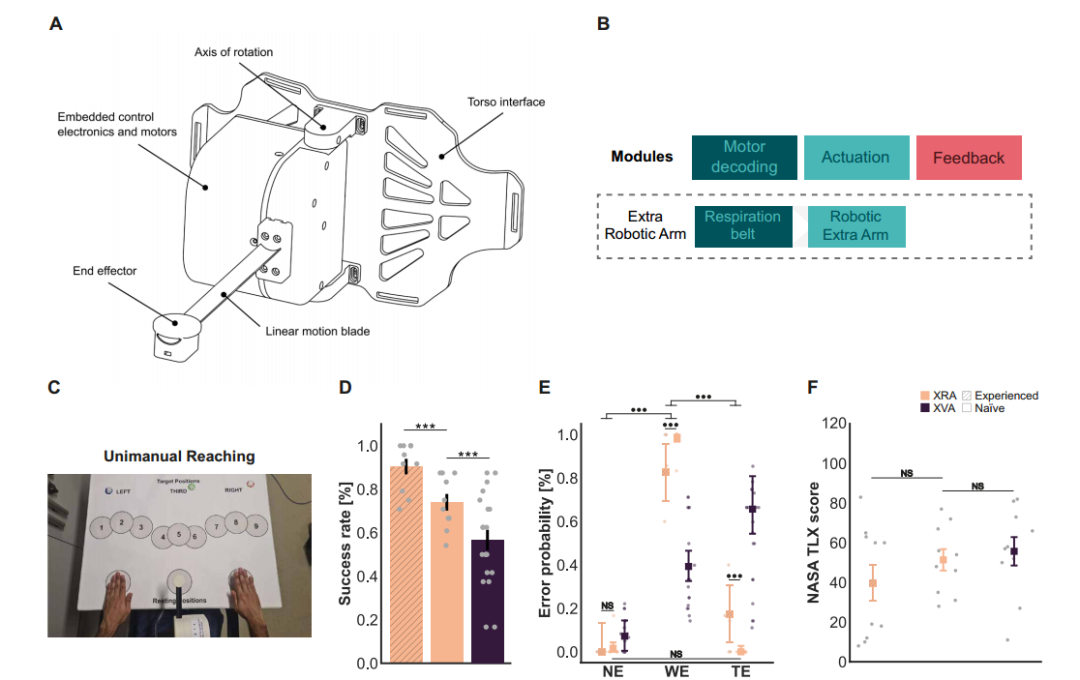

The planar XRA prototype study

A physical XRA prototype was developed to test the HMI. The XRA is a wearable robot attached to the user's chest with adjustable straps; it integrates a base that allows planar rotation and a linear motion blade for end effector translation (Figure 7A). The robot weighs less than 2 kg, and its weight is concentrated near the chest, which makes it comfortable to wear. Because the metal strap is very light, its extension only slightly affects the weight distribution. The workspace covers a rotational range of more than 36° and a translation range of more than 40 cm, with a speed of 8.5 cm/s and an accuracy of 0.4 cm/s. The XRA controller runs on an Arduino Due and receives rotation and translation commands via serial communication. Currently, the target selection that sets the direction of the XRA is automated rather than based on eye tracking as in the XVA HMI, while end effector translation is driven by the same diaphragmatic-breathing decoder output as in the XVA HMI.
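Because the prototype combines a rotating base with a linear translation stage, its end effector effectively moves in polar coordinates. The sketch below illustrates the simplified control described above: automated aiming at the target, plus a slide that advances or retracts according to the breathing decoder. The 36° range, 40 cm stroke and 8.5 cm/s speed come from the text; centring the rotation range on zero, the coordinate frame, and the `"forward"`/`"backward"`/`"hold"` command labels are assumptions, and the serial message format of the real Arduino Due controller is not reproduced.

```python
import math

ROTATION_RANGE_DEG = 36.0   # text: rotational range exceeds 36 deg (assumed centred on 0)
MAX_EXTENSION_CM = 40.0     # text: translation range exceeds 40 cm
SPEED_CM_S = 8.5            # text: translation speed


def aim_at(target_x_cm, target_y_cm):
    """Automated orientation: base angle (degrees) pointing at a target in the plane.

    x points straight ahead of the wearer and y to the side (assumed frame).
    """
    angle = math.degrees(math.atan2(target_y_cm, target_x_cm))
    half_range = ROTATION_RANGE_DEG / 2
    return max(-half_range, min(half_range, angle))  # clip to the mechanical range


def step_extension(extension_cm, command, dt_s):
    """Advance or retract the slide for one control tick of length dt_s seconds."""
    delta = SPEED_CM_S * dt_s
    if command == "forward":
        extension_cm += delta
    elif command == "backward":
        extension_cm -= delta
    # "hold" leaves the extension unchanged
    return max(0.0, min(MAX_EXTENSION_CM, extension_cm))
```

Separating aiming from extension matches the prototype's division of labour: rotation is set once per target, and breathing only moves the end effector along that line.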

HMI enhancement assessment

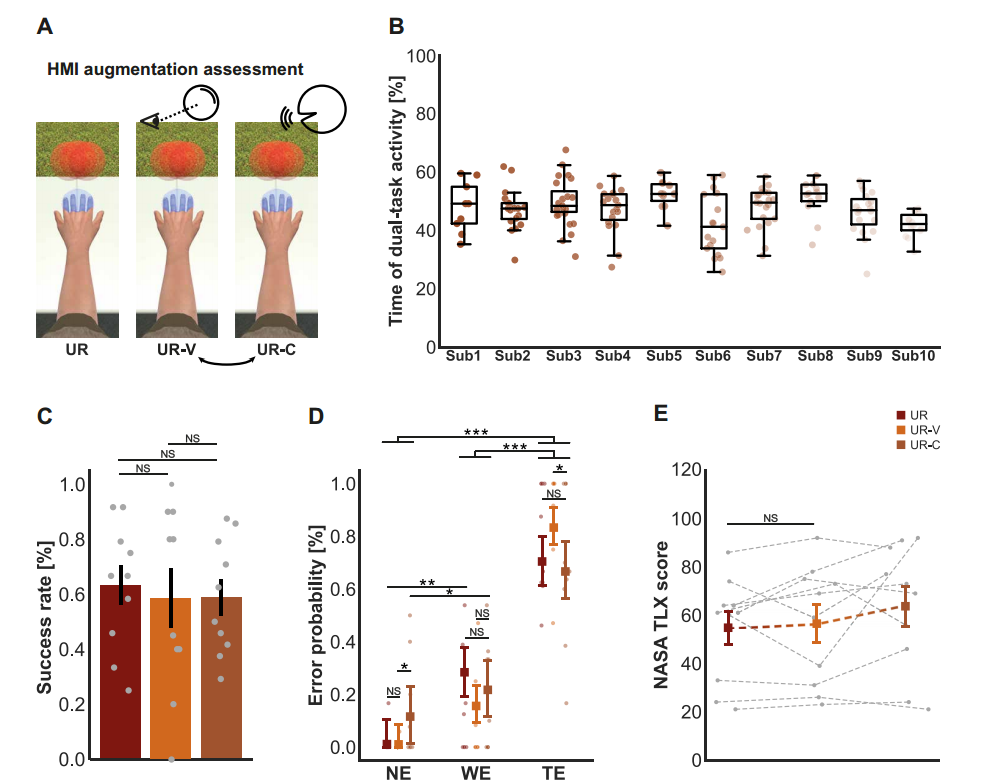

To evaluate the level of enhancement actually provided to users, two dual tasks were designed to investigate the extent to which the gaze-diaphragm HMI interferes with users' ability to perform everyday functions. Specifically, the impact of performing single-handed reaching (UR) tasks in virtual reality was tested separately on gaze and on speech. A UR trial was considered successful if the participant reached the target and stayed within it for 500 milliseconds before the 5-second trial timeout. To assess the impact on the ability to visually explore the surrounding environment, participants used the XVA alone to perform UR tasks while soap bubbles appeared in their peripheral view (UR-v task); to burst a bubble, participants had to gaze at it for at least 500 milliseconds.
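The UR success criterion (reach the target, stay inside it for 500 ms, within a 5 s timeout) and the 500 ms gaze dwell needed to burst a bubble share the same dwell-time logic. A minimal sketch, assuming the task logs time-stamped samples of whether the hand (or gaze) is inside the target; requiring the dwell to be completed before the timeout is an interpretation of the text, and the function name is hypothetical.

```python
DWELL_S = 0.5     # required continuous time inside the target (or on the bubble)
TIMEOUT_S = 5.0   # trial timeout


def dwell_reached(times_s, inside, dwell_s=DWELL_S, timeout_s=TIMEOUT_S):
    """True if `inside` stays continuously true for dwell_s before timeout_s.

    times_s: increasing sample timestamps in seconds, from trial start.
    inside:  booleans, True when the end effector (or gaze) is on the target.
    """
    entered_at = None
    for t, is_in in zip(times_s, inside):
        if t > timeout_s:
            break                      # trial timed out
        if not is_in:
            entered_at = None          # leaving the target resets the dwell timer
            continue
        if entered_at is None:
            entered_at = t
        if t - entered_at >= dwell_s:
            return True
    return False
```

For the UR-v bubbles, the same function applies with `inside` meaning "gaze is on the bubble".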

The neuro-robotics platform and sensory-motor human-machine interface

To apply the proposed pipeline to develop HMIs for controlling the reaching movements of XRAs, this paper developed a neuro-robotics platform for testing and validating HMIs in the context of human augmentation through extra arms (Figure 2A). We utilized immersive virtual reality, where the humanoid avatar is equipped with a fixed XVA at a neutral position (chest) characterized by four fingers and two thumbs to avoid association with the left or right hand.

Visual feedback from the VE is presented from the avatar's first-person perspective through the Vive Pro Eye head-mounted display (HMD). The VE is the core of the neuro-robotics platform, which also integrates a bilateral upper limb exoskeleton (ALEx) that tracks joint angles and provides force feedback to the biological limbs. With this platform, and following the modules of the proposed pipeline, the suitability of motor and sensory-motor HMIs can be tested before moving to the XRA, while the exoskeleton renders realistic force feedback on the biological arms during interactions with the VE, making the simulation more engaging.

A module for artificial tactile feedback was also developed, providing tactile and proprioceptive information through vibration motors and a tactor. The module proved effective: users could relate the feedback they received to the displacement of the end effector, so this HMI component could be included in the neuro-robotics platform. The platform's motor decoding, actuation, and feedback modules are illustrated in Figure 2B, and Figure 2C shows an example of a single-target reaching movement controlled with the proposed HMI. To control the extra arm, the user gazes at a target appearing in the VE to select it, then moves toward (or away from) the target by expanding (or contracting) the diaphragm beyond a given threshold. For some users, tactile feedback was also provided: the tactor linearly encodes the distance from the resting position (the farther the XVA is, the greater the pressure on the user's chest), while the two coin vibrators encode the onset and offset of contact with the target or the biological arm.
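The control scheme above, in which gaze picks the target and breathing drives a single degree of freedom along the end-effector-to-target line, can be sketched as follows. The selection radius, speed, and function names are hypothetical; the sketch assumes 3D positions in a common unit and takes the three-state breathing command (`"forward"`, `"backward"`, `"hold"`) as input.

```python
import math


def select_target(gaze_point, targets, radius):
    """Return the name of the target closest to the gaze point, if within radius."""
    best_name, best_dist = None, radius
    for name, position in targets.items():
        d = math.dist(gaze_point, position)
        if d <= best_dist:
            best_name, best_dist = name, d
    return best_name


def step_along_line(effector, target, command, speed, dt):
    """Move the end effector along the effector-to-target line (one degree of freedom)."""
    direction = [t - e for e, t in zip(effector, target)]
    dist = math.sqrt(sum(c * c for c in direction))
    if command == "hold" or dist == 0.0:
        return list(effector)
    unit = [c / dist for c in direction]
    if command == "forward":
        step = min(speed * dt, dist)   # stop at the target instead of overshooting
    elif command == "backward":
        step = -speed * dt             # move away from the target
    else:
        raise ValueError(f"unknown command: {command}")
    return [e + step * u for e, u in zip(effector, unit)]
```

Collapsing 3D reaching onto one line is what lets a single binary-like breathing signal suffice: the gaze supplies the direction, and breathing only supplies the sign and timing of motion.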

The motion HMI does not interfere with visual exploration and verbal interaction

First, the paper assesses the HMI (gaze and breathing) within the framework of human augmentation, evaluating whether it hinders two functions that could be affected: the ability to visually explore the VE and the ability to speak. To this end, 10 naïve volunteers (5 females and 5 males, age = 25.7 ± 2.0) performed a baseline block of single-handed reaching (UR) tasks with the XVA. This baseline was compared with a block in which they performed the same UR task while searching for visual cues appearing randomly in the peripheral view of the VE (UR-v), and with a third block in which they counted aloud continuously while performing the UR task (UR-c) (Figure 3A). The order of the UR-v and UR-c blocks was counterbalanced. To check that participants did not simply alternate between controlling the XVA and counting, the percentage of time during which they performed both tasks simultaneously in the UR-c block was measured; a low percentage would indicate that participants separated the two tasks in time. All participants had periods in which they controlled the XVA and counted at the same time, and every participant's median score was above 47% (Figure 3B).
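The simultaneity measure can be illustrated with a short sketch. It assumes per-sample boolean traces marking when the XVA was actively driven (decoder output other than hold) and when the participant was speaking; using the active-control time as the denominator is an assumption, since the text does not define it, and the function name is hypothetical.

```python
def simultaneity_percent(xva_active, speaking):
    """Percentage of active XVA-control samples during which the participant was also counting aloud."""
    if len(xva_active) != len(speaking):
        raise ValueError("traces must have the same length")
    active = sum(xva_active)
    if active == 0:
        return 0.0
    both = sum(1 for a, s in zip(xva_active, speaking) if a and s)
    return 100.0 * both / active
```

A value near 0% would indicate strict alternation between the two tasks, whereas the medians above 47% reported here indicate substantial genuine overlap.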

Figure 3 illustrates the human-machine interface enhancement assessment.

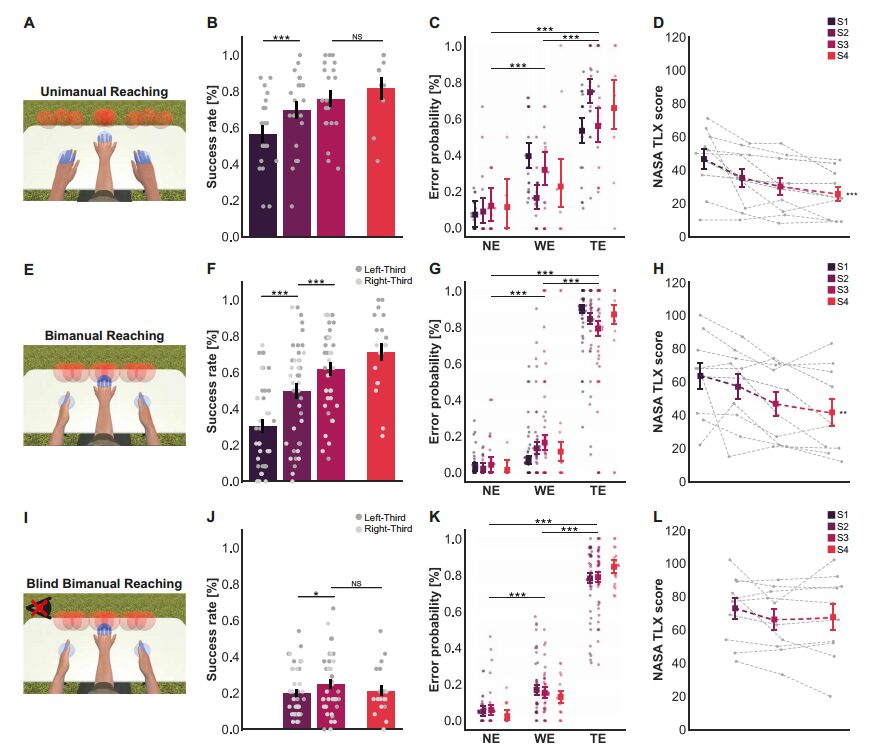

Performance of independent XVA motion control improves with training

This study recruited 20 naïve volunteers (10 females and 10 males, age = 24.5 ± 4.4), randomly assigned to the motion enhancement group. Their motor control performance with the XVA (in terms of success rate and execution time) was evaluated across three consecutive sessions of different reaching tasks. In each of the three sessions, participants completed UR blocks in the VE (Figure 4A), designed to assess their ability to control the XVA while keeping the biological arms at rest. Participants' success rates improved across the three training sessions [χ2(2) = 45.68, P < 0.001], reaching 75.8 ± 4.5% in the third session (Figure 4B).

Figure 4 illustrates independent and coordinated XVA control.

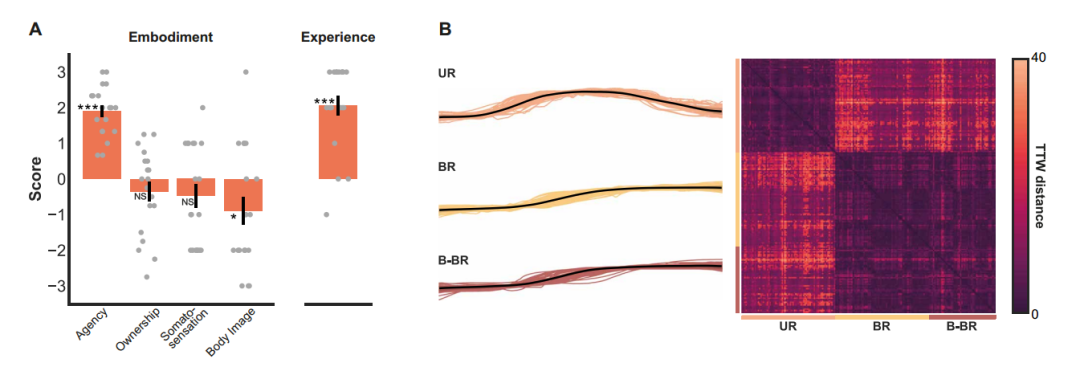

The task-dependent diaphragmatic breathing profile of XVA motion control

The paper analyzes the diaphragmatic breathing profiles of successful trials to understand the control strategies participants used in the UR and BR task types. The profiles from successful UR trials differed fundamentally from those of BR and B-BR trials, and the two could be divided into separate clusters (Figure 5). UR profiles showed a typical bell shape, reflecting the requirement to reach the target and stop within it. In contrast, BR and B-BR profiles were S-shaped, consistent with these trials being completed successfully when the XVA came into contact with the biological hand during the movement toward the target. As seen in the matrix in Figure 5B, the breathing profiles exhibited unexpectedly small variations across tasks.
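One simple way to compare breathing profiles across trials, in the spirit of the matrix in Figure 5B, is to resample each profile to a common length and compute pairwise Pearson correlations. This is an illustrative sketch rather than the paper's clustering method; the resampling length, function names, and toy profiles are hypothetical.

```python
import math


def resample(profile, n=100):
    """Linearly interpolate a profile onto n evenly spaced points."""
    m = len(profile)
    if m == 1:
        return [float(profile[0])] * n
    out = []
    for i in range(n):
        pos = i * (m - 1) / (n - 1)
        lo = int(pos)
        hi = min(lo + 1, m - 1)
        frac = pos - lo
        out.append(profile[lo] * (1 - frac) + profile[hi] * frac)
    return out


def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        raise ValueError("correlation is undefined for a constant profile")
    return cov / math.sqrt(va * vb)


def similarity_matrix(profiles, n=100):
    """Pairwise correlation matrix of time-normalised breathing profiles."""
    resampled = [resample(p, n) for p in profiles]
    return [[pearson(p, q) for q in resampled] for p in resampled]
```

With toy inputs, two bell-shaped profiles of different amplitude correlate perfectly, while a bell shape and a symmetric S shape are uncorrelated, which is the kind of contrast that separates the UR cluster from the BR and B-BR cluster.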

Figure 5 illustrates the motion enhancement group XVA embodiment and breathing analysis.

The integration of artificial tactile feedback did not improve the coordination of XVA control

Comparing the success rates of the sensory-motor enhancement group and the motion enhancement group in the BR task revealed an interaction between group and session [χ2(2) = 12.80, P = 0.002]. However, despite the lower success rate of the sensory-motor enhancement group, the analysis showed no significant differences between the two groups in the second session (P = 0.691, BF = 0.234) or the third session (P = 0.933, BF = 0.239). Similar to the findings in the UR task, there was not sufficient evidence to conclude that the two groups differed (P = 0.095, BF = 0.952), even in the first session, where the difference in success rates between the groups was largest (Figure 6B).

When the success rates of the sensory-motor enhancement group and the motion enhancement group in the UR task were analyzed, no substantial evidence was found for an interaction between group and session [χ2(1) = 0.15, P = 0.701, BF = 0.227]. As shown in Figure 6C, contrary to expectations, the two groups achieved similar success rates in the second session (P = 0.927, BF = 0.296) and the third session (P = 0.868, BF = 0.161).
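The reported Bayes factors can be read with the widely used evidence categories adapted from Jeffreys. The sketch assumes that the BF values above are BF10 (evidence for a group difference relative to no difference), which the text does not state explicitly; under that reading, BF = 0.234 means the data are about 4.3 times more likely under the null hypothesis. The function name is hypothetical.

```python
def interpret_bf10(bf10):
    """Label a Bayes factor BF10 with conventional evidence categories."""
    if bf10 <= 0:
        raise ValueError("a Bayes factor must be positive")
    if bf10 == 1:
        return "no evidence"
    favoured = "H1" if bf10 > 1 else "H0"
    ratio = bf10 if bf10 > 1 else 1 / bf10   # strength of evidence for the favoured hypothesis
    for cutoff, label in ((100, "extreme"), (30, "very strong"), (10, "strong"), (3, "moderate")):
        if ratio > cutoff:
            return f"{label} evidence for {favoured}"
    return f"anecdotal evidence for {favoured}"
```

Applied to the values above, the second- and third-session comparisons in both tasks fall in the moderate-evidence-for-H0 range, while the first-session BR comparison (BF = 0.952) is only anecdotal.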

Figure 6 illustrates the independent and coordinated sensory-motor XVA control.

Planar XRA prototype study

To verify the usability of the HMI in the physical world, a physical prototype of the XRA was developed. The XRA described in this paper is a wearable robot weighing less than 2 kg, connected to the user’s chest via adjustable straps, integrating a base that allows planar rotation and a linear motion blade for end effector translation (Figure 7A).

Despite some minor oscillations along the vertical axis, the arm exhibited smooth and consistent trajectories, demonstrating that it can effectively execute tasks designed in a 2D plane. Users control the XRA with a simplified version of the motor HMI, in which the eye-tracking module is replaced by automated orientation of the XRA toward the experimental target. The XRA did not integrate sensory HMIs, because the evaluation block showed no improvement over using the motor HMI alone (Figure 7B).

In a fourth session, volunteers from the motion enhancement group, who by then had three consecutive days of training with the HMI (experienced participants), performed an additional UR block with the XRA (Figure 7C). Ten naïve volunteers with no prior experience with the HMI were also recruited (control group, 5 females and 5 males, age = 24.6 ± 2.5) and performed the same task. The success rates of naïve XRA users and naïve XVA users (the motion enhancement group in its first session) in the UR task were compared to understand whether using a virtual or a physical extra arm leads to different performance (Figure 7D). Analyzing the sources of errors when using the XRA (Figure 7E), we found that experienced users made no net errors, while naïve users made no net errors. Given this complete separation, the subsequent analysis relied primarily on Bayesian hypothesis testing, which is more robust in this situation. After completing the UR task with the XRA, experienced and naïve participants filled in the NASA-TLX questionnaire to assess their perceived workload (Figure 7F).

This paper developed, characterized, and validated a non-invasive HMI specifically designed to control XRAs using biological signals that naturally participate in motor actions. While gaze has previously been proposed as a trigger for automatic XRA reaching actions (29), combining it with diaphragm modulation decoding allows more flexible control, letting users freely and voluntarily set the position of the XRA along the selected direction of motion. The HMI was designed to address the neural resource allocation problem, providing effective augmentation for healthy individuals without compromising the natural limbs or other biological functions.

Innovative control method: The study proposes a novel human-machine interface (HMI) that uses gaze detection and diaphragmatic breathing to control an extra virtual arm (XVA) and, in simplified form, a physical extra robotic arm (XRA), allowing users to operate the extra arm effectively without interfering with natural movements. This approach opens new possibilities for human-machine interaction, with broad application prospects.

Systematic experimental design: The study systematically analyzes participants' motor control performance with the XVA across multiple training sessions and assessments involving 20 volunteers. Using statistical methods such as generalized linear mixed models, it provides reliable evidence that training significantly improves motor control.

Multidimensional experience assessment: The article evaluates participants' experiences with the XVA through questionnaires covering body ownership, agency, embodiment, and body image. This comprehensive assessment helps build a deeper understanding of users' subjective experience when using robotic arms and provides an important reference for future research and applications.

Original Link

https://www.science.org/doi/10.1126/scirobotics.adh1438

For submissions, recommendations, and collaborations, please contact us!

|| WeChat Official Account: Micro-Nano Sensing

|| WeChat ID: successplus999

Micro-Nano Sensor Industry Research

Academic Frontier Research Reports

Author: LiL

Editor: Teacher Li

Distributor: LiL

Disclaimer ————

This article is for research sharing only and is not for profit use. If there is any infringement, please contact the backend personnel for deletion.

Due to limited knowledge, there may be omissions and errors. Criticism and correction are welcomed.