Follow+Star Public Account, don’t miss wonderful content

Source | Mculover666

1. Why Task Synchronization is Needed

In a real-time operating system, each task is an independent bare-metal program, but these tasks share the same CPU, memory space, and peripherals. How does the operating system solve this problem?

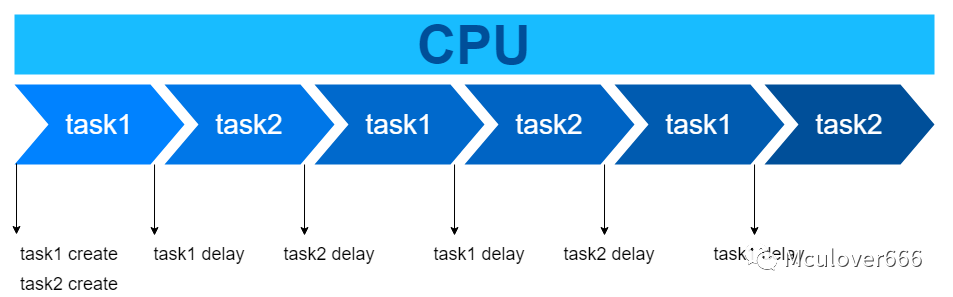

1.1. How to Share the Same CPU

Whether a task is using the CPU is reflected in whether that task is in a running state.

The RTOS kernel adopts the idea of “time-sharing CPU” and schedules tasks in the system to run at different times based on preemptive scheduling rules, time-slice round-robin scheduling rules, and interrupt scheduling rules, which is also the focus of the previous four articles.

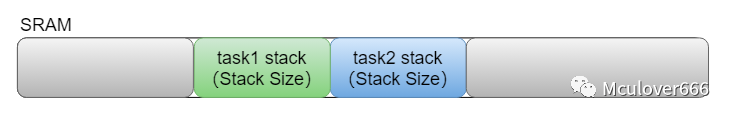

1.2. How to Share the Same Memory Space

Each task’s task stack is independent, so each task can have fun in its own stack space when running.

If we consider the entire memory space as an apartment, each task would be assigned its own room, and it can play freely inside. As for what you are doing, others in different rooms have no way of knowing, nor do they need to know.

In some cases, there may be some special memory spaces, such as a global variable or an array of global variables, that each task can access and operate on. The heap space is similar; each task can use malloc and free to allocate and release memory.

These memory spaces are collectively referred to as shared memory, which has only one characteristic, both an advantage and a disadvantage: “All tasks in the system can freely access and modify it”.

In daily life, this is similar to shared rooms like kitchens, bathrooms, and storage rooms. If someone forces their way in while you’re using it, won’t it be a mess?

If rules and restrictions are applied, the advantages of shared memory can be utilized:

If rules and restrictions are applied, the advantages of shared memory can be utilized:

-

Advantages: Because tasks can freely access and modify, they can be used as some “flags or counters”, which leads to the concept of semaphores. -

Disadvantages: Tasks can freely access and modify, so when used as a shared data buffer, it is necessary to control which tasks can access and modify it to protect the data from being tampered with, leading to the concept of “mutex locks”.

As for heap space, it can be entirely managed by the system, with “the system providing a unified malloc and free interface” to solve the problem easily, which will be discussed in detail in subsequent articles.

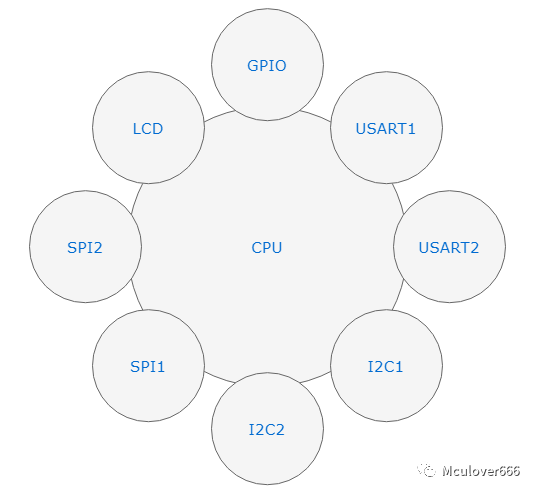

1.3. How to Share the Same Peripheral

Due to the special nature of embedded systems, there are many peripherals, such as GPIO (including situations where GPIO simulates various software protocols), serial ports, I2C interfaces, SPI interfaces, etc.

All tasks can freely use these peripherals. When task1 uses the I2C interface to operate on the OLED, if a high-priority task task2 is suddenly awakened for some reason and preempts task1, if task2 also operates on the OLED via the I2C interface, then when task1 resumes execution, it will certainly not display content correctly (all previous communication data with the OLED will be lost).

At the operating system level, it is necessary to impose restrictions on the use of peripherals: “When one task is using a peripheral, other tasks cannot use it and must wait until that task has finished using it before they can use it normally”.

Clearly, managing all peripheral resources by the system is unrealistic; the system only needs to provide a “mutex lock mechanism”, leaving the permission operations for peripherals to the developer, which is simple and convenient.

2. Pend-Post Mechanism

The characteristics of shared memory (e.g., global variables) are both advantages and disadvantages: all tasks in the system can freely access and modify it.

“Based on global variables, the system adds some additional mechanisms and data members, encapsulated into structures, which is everything used for inter-task communication in the RTOS kernel”, including: semaphores, mutex locks, events, completion counts, counting locks, and barriers. These provide different functionalities for upper-layer applications, each with its own characteristics for applications to choose from in different situations.

There are many types, but they all have one unchanging mechanism: the pend-post mechanism. The implementation in TencentOS-tiny is in pend.h and pend.c.

2.1. Wait List Object

Let’s not worry about what it does; just know what it looks like: it’s a doubly linked list node:

typedef struct pend_object_st {

k_list_t list;

} pend_obj_t;

2.2. Implementation of Pend Wait Mechanism

The English definition of pend is “to wait for a decision”, which I think is very apt. The implementation mechanism is also very simple: when a task needs to wait for this quantity, it is mounted onto “the wait list object of this quantity”. The source code is as follows:

__KNL__ void pend_task_block(k_task_t *task, pend_obj_t *object, k_tick_t timeout)

{

readyqueue_remove(task);

task->pend_state = PEND_STATE_NONE;

pend_list_add(task, object);

if (timeout != TOS_TIME_FOREVER) {

tick_list_add(task, timeout);

}

}

❝

If it is not a blocking wait, then simultaneously mount the task onto the delay list, automatically waking up when the time is up. The delay list has been detailed in the previous article.

❞

① “How does a task mount onto the wait list?”

In the task control block, there is a data member:

/**

* task control block

*/

struct k_task_st {

//… omitted unrelated parts

k_list_t pend_list; /**< when we are ready, our pend_list is in readyqueue; when pend, in a certain pend object's list. */

pend_obj_t *pending_obj; /**< if we are pending, which pend object's list we are in? */

pend_state_t pend_state; /**< why we wakeup from a pend */

};

Among them, the doubly linked list node pend_list is mounted to the ready list when the task is ready, and is mounted to the wait list when the task is waiting for a certain task synchronization quantity.

② “Do tasks on the wait list have priorities?”

Suppose there are 5 tasks waiting for the same mutex lock. Once the mutex lock is released, according to the rules of preemptive scheduling based on priority, it is clear that the task with the highest priority among the 5 tasks will be awakened to execute.

In TencentOS-tiny, algorithm optimization has been performed: when inserting tasks into the wait list, “they are arranged in descending order of priority”, so that when waking up tasks, we can always pull up the task at the head of the list. The implementation source code is as follows:

__STATIC__ void pend_list_add(k_task_t *task, pend_obj_t *pend_obj)

{

k_task_t *iter;

/* keep priority in descending order, the boss(task with highest priority,

numerically smallest) always comes first

*/

TOS_LIST_FOR_EACH_ENTRY(iter, k_task_t, pend_list, &pend_obj->list) {

if (task->prio < iter->prio) {

break;

}

}

tos_list_add_tail(&task->pend_list, &iter->pend_list);

// remember me, you may use me someday

task->pending_obj = pend_obj;

task_state_set_pend(task);

}

❝

Just knowing that tasks are arranged in descending order of priority is sufficient; not understanding the source code implementation does not affect anything.

❞

2.3. Implementation of Post Wake Mechanism

When the task synchronization quantity is posted, the highest priority task (the first task on the wait list) waiting for that task synchronization quantity is awakened.

The wake mechanism is also very simple; it removes the task control block from the wait list, removes it from the delay list, and adds it to the ready list. The source code is as follows:

__KNL__ void pend_task_wakeup(k_task_t *task, pend_state_t state)

{

if (task_state_is_pending(task)) {

// mark why we wakeup

task->pend_state = state;

pend_list_remove(task);

}

if (task_state_is_sleeping(task)) {

tick_list_remove(task);

}

if (task_state_is_suspended(task)) {

return;

}

readyqueue_add(task);

}

It should be noted that when calling this API, “the state parameter is used to indicate the reason the task was awakened”, for use by upper-layer application programs, stored in the pend_state member of the task control block, with the following task state values:

/ **

* The reason why we wakeup from a pend.

* when we wakeup, we need to know why.

*/

typedef enum pend_state_en {

PEND_STATE_NONE, /**< nothing. */

PEND_STATE_POST, /**< someone has post, we get what we want. */

PEND_STATE_TIMEOUT, /**< a post has never came until time is out. */

PEND_STATE_DESTROY, /**< someone has destroyed what we are pending for. */

PEND_STATE_OWNER_DIE, /**< the pend object owner task is destroyed. */

} pend_state_t;

3. Rich Task Synchronization Quantities

TencentOS-tiny provides a rich set of task synchronization quantities suitable for various situations. This article will not discuss the specific APIs and usage demos. If you need to read them, please refer to the documents in the doc directory of the TencentOS-tiny repository: 04-TencentOS tiny Development Guide.

This article only discusses what functionalities are encapsulated based on these task synchronization quantities and the global variables + pend-post mechanism.

3.1. Semaphore

The semaphore control block is as follows:

typedef struct k_sem_st {

#if TOS_CFG_OBJECT_VERIFY_EN > 0u

knl_obj_t knl_obj;

#endif

pend_obj_t pend_obj;

k_sem_cnt_t count;

k_sem_cnt_t count_max;

} k_sem_t;

We can see that it has added the count member and count_max member. What are they used for?

-

① Provides binary semaphore functionality

Setting count_max to 1 means the semaphore’s count value can only be 0 or 1, thus it is called a binary semaphore, usually used as a flag.

-

② Provides resource counting functionality

Setting count_max to the maximum count value means count represents the current count value, which can be used to count resources. When a pend is successful, count will decrease by 1, and after a post is successful, count will increase by 1, for example, in the typical producer-consumer case.

3.2. Mutex Lock

The source code of the mutex lock control block is as follows:

typedef struct k_mutex_st {

#if TOS_CFG_OBJECT_VERIFY_EN > 0u

knl_obj_t knl_obj;

#endif

pend_obj_t pend_obj;

k_nesting_t pend_nesting;

k_task_t *owner;

k_prio_t owner_orig_prio;

k_list_t owner_anchor;

} k_mutex_t;

Among them, the pend_nesting member indicates whether the mutex lock is in a locked or unlocked state and also indicates the nesting value of the mutex lock, “to prevent mutex lock nesting” (having already successfully pended the mutex lock and needing to pend again) is an integer:

typedef uint8_t k_nesting_t;

The owner member is used to record the task that has obtained the mutex lock, so that only the task that has obtained the mutex lock can release it; other tasks cannot.

The owner_anchor member is a doubly linked list node, as one can use multiple locks simultaneously, so this member is used to “mount to” the mutex_own_list list of the task control block.

The owner_orig_prio member records the priority of the task that owns the mutex lock, used to prevent priority inversion.

Priority inversion can be summarized in one sentence (though a bit long):

Task priority A > B > C (lowest), when A is suspended waiting for C to release the task synchronization quantity, if C is preempted by B for some reason, causing C to be unable to release the task synchronization quantity, which in turn causes A to be unable to obtain the task synchronization quantity, resulting in “a low-priority task B running while a high-priority task A is waiting”, which can lead to very serious consequences in a real-time system; this is B’s fault~

Therefore, “when a task obtains the mutex lock, its priority will be temporarily raised to the highest to prevent being interrupted by other tasks, and after releasing the lock, it will restore its original priority”. Users should also use the mutex lock as soon as possible and release it.

3.3. Event

The source code of the event control block is as follows:

typedef struct k_event_st {

#if TOS_CFG_OBJECT_VERIFY_EN > 0u

knl_obj_t knl_obj;

#endif

pend_obj_t pend_obj;

k_event_flag_t flag;

} k_event_t;

In comparison, it adds a data member flag to record the event flag, indicating whether the event has occurred:

typedef uint32_t k_event_flag_t;

An event contains a flag, where each bit represents an “event”; the flag can represent up to 32 events.

At the same time, a task can wait for one or more “events” to occur. Other tasks can wake up the waiting task by writing specific “events” under certain business conditions, achieving a programming paradigm similar to signals.

3.4. Completion Count

The source code of the completion count control block is as follows:

typedef struct k_completion_st {

#if TOS_CFG_OBJECT_VERIFY_EN > 0u

knl_obj_t knl_obj;

#endif

pend_obj_t pend_obj;

completion_done_t done;

} k_completion_t;

It adds a done member to indicate whether it is completed:

typedef uint16_t completion_done_t;

The completion count is a simple inter-task communication mechanism used to synchronize whether a certain event has been “completed” between tasks.

3.5. Countdown Latch

The source code of the countdown latch control block is as follows:

typedef struct k_countdownlatch_st {

#if TOS_CFG_OBJECT_VERIFY_EN > 0u

knl_obj_t knl_obj;

#endif

pend_obj_t pend_obj;

k_countdownlatch_cnt_t count;

} k_countdownlatch_t;

It adds a count member to store the count value.

The countdown latch provides a concept of “counting information” synchronization. When creating the countdown latch, a count value is specified. Each time a task executes tos_countdownlatch_post, the count value of the latch decreases until the count value of the latch reaches zero, at which point the tasks waiting for this latch will be awakened.

3.6. Barrier

The source code of the barrier control block is as follows:

typedef struct k_barrier_st {

#if TOS_CFG_OBJECT_VERIFY_EN > 0u

knl_obj_t knl_obj;

#endif

pend_obj_t pend_obj;

k_barrier_cnt_t count;

} k_barrier_t;

It adds a data member count to indicate the count value.

The barrier provides a mechanism for setting task blocking barriers. When creating the barrier, a count value is specified. Each time a task executes tos_barrier_pend, the count value of the latch decreases until the count value reaches zero, at which point all tasks blocked at the tos_barrier_pend point can continue running.

4. Summary

As usual, let’s review the content of this article.

This article mainly discusses the various implementations of task synchronization quantities in the RTOS kernel, which are diverse yet fundamentally the same. Two main points were discussed in this article.

-

① Global variables have the characteristic of being accessible and modifiable by all tasks. -

② Each task synchronization quantity has “its own wait list” for mounting all tasks currently waiting for that task synchronization quantity, which is also the core of the pend-post mechanism. It is important to note that when waking up only one task, “the task with the highest priority in the wait list is awakened”.

Based on these two points, and with different data members, various rich task synchronization quantities have been implemented. Among them, semaphores, mutex locks, and events are relatively common, with the following comparisons:

-

Semaphore: Any task can acquire and release it, can be used as a “binary” signal flag, or can be used for counting; -

Mutex Lock: Any task can acquire it, but “can only be released by the owner”, and upon acquiring the lock, the owner’s priority is raised to the highest to prevent priority inversion, used for protecting peripherals and shared memory; -

Event: Any task can acquire and release it, used as a flag, “supports a maximum of 32 event flags”;

As for the others, it depends on your choice!

Lastly, one more thing: when creating these task synchronization quantity control blocks, they are all “global variables”. These various task synchronization quantities are just extensions of functionality based on ordinary global variables. If your scenario can be solved directly with ordinary global variables, why not? Use whatever is convenient!

This article ends here. If you ever encounter someone who insists on debating why to use these task synchronization quantities when you have global variables, please send them this article!

———— END ————

● Column “Embedded Tools”

● Column “Embedded Development”

● Column “Keil Tutorial”

● Selected Tutorials on Embedded

Follow the public accountReply “Join Group” to join the technical exchange group according to the rules, reply “1024” to see more content.