Having covered net/rpc in the previous article (From net/rpc in Go applications to gRPC), it is time to dig into HTTP/2, the foundation of the gRPC protocol.

A Guide to the Principles of HTTP/2 and Practical Implementation in Go

This article is mostly theory, so the content is fairly dense. It covers the core concepts of HTTP/2 first and then briefly shows how to enable it in Go. Brew a cup of coffee, sit down, and take your time as we break the topic down step by step.

Advantages of HTTP/2

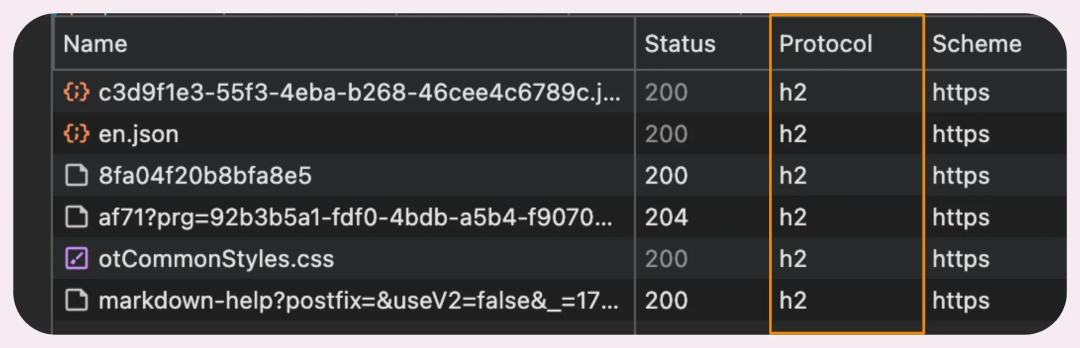

HTTP/2 is a significant upgrade over HTTP/1.1 and is now widely supported by browsers and servers. If you have ever inspected network requests in Chrome’s developer tools, you have probably seen HTTP/2 connections in action.

Inspecting HTTP/2 connections with Chrome

So why is HTTP/2 so important? What issues does HTTP/1.1 have?

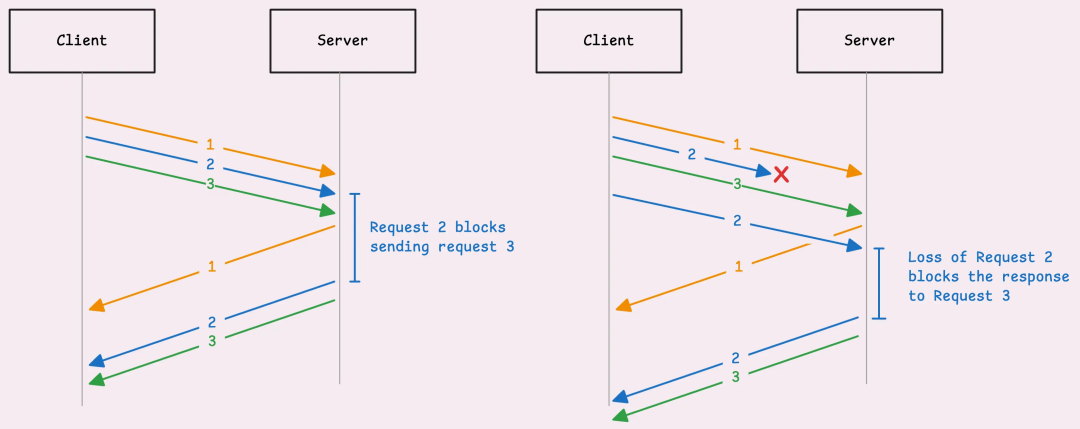

HTTP/1.1 did introduce a pipelining mechanism, which theoretically seems perfect. The principle is simple: multiple requests can share the same connection, allowing the next request to be initiated without waiting for the previous one to complete.

Pipelining mechanism in HTTP/1.1: Sequential processing of requests

The problem is that requests must be sent in order, and responses must also be returned in the same order. If a response is delayed—such as when the server needs extra time to process—then all other requests in the queue must wait.

The same thing happens when a network hiccup delays a request: the entire response pipeline stalls until the delayed request has been processed.

Head-of-Line blocking in HTTP/1.1

This issue is known as Head-of-Line blocking.

To bypass this limitation, HTTP/1.1 clients (like your browser) began establishing multiple TCP connections to the same server, allowing requests to flow more freely and concurrently.

While this method is feasible, it is not efficient:

- More connections mean that both the client and server consume more resources.

- Each connection must go through the TCP handshake process, adding extra latency.

“So, did HTTP/2 solve this problem?”

It solved… most of it.

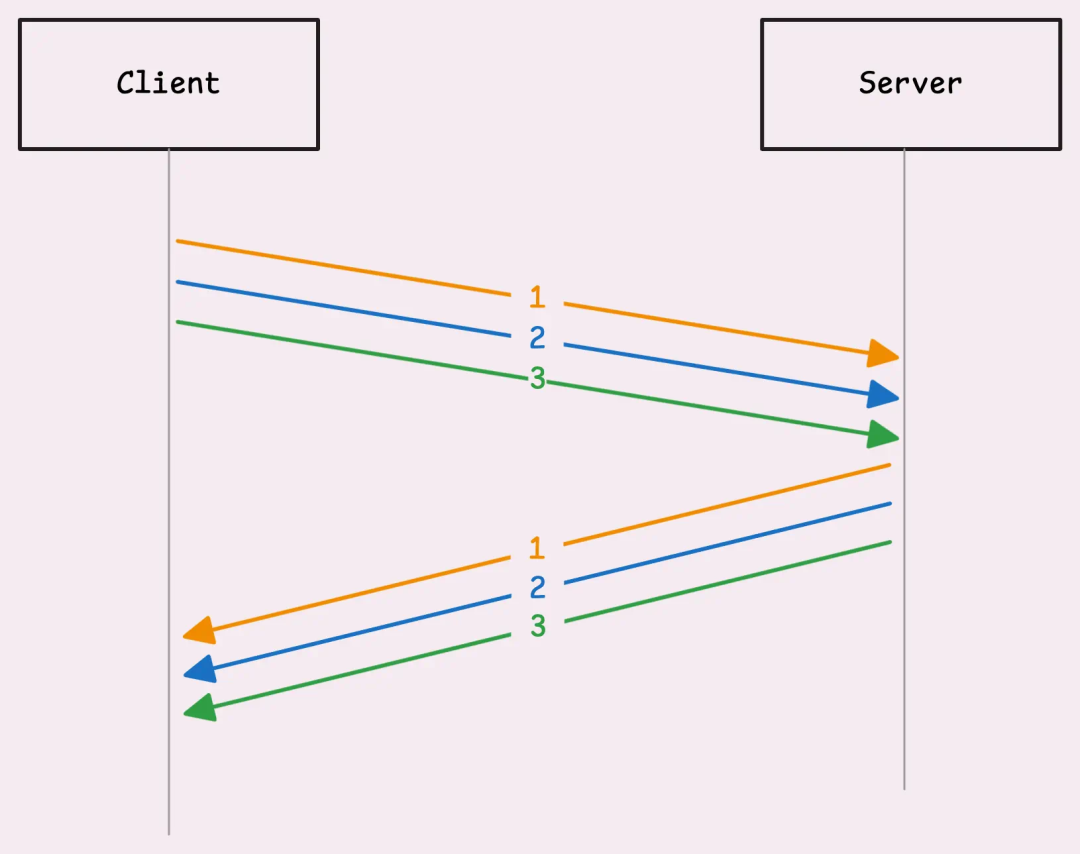

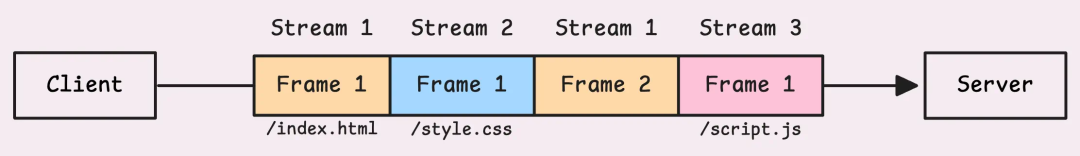

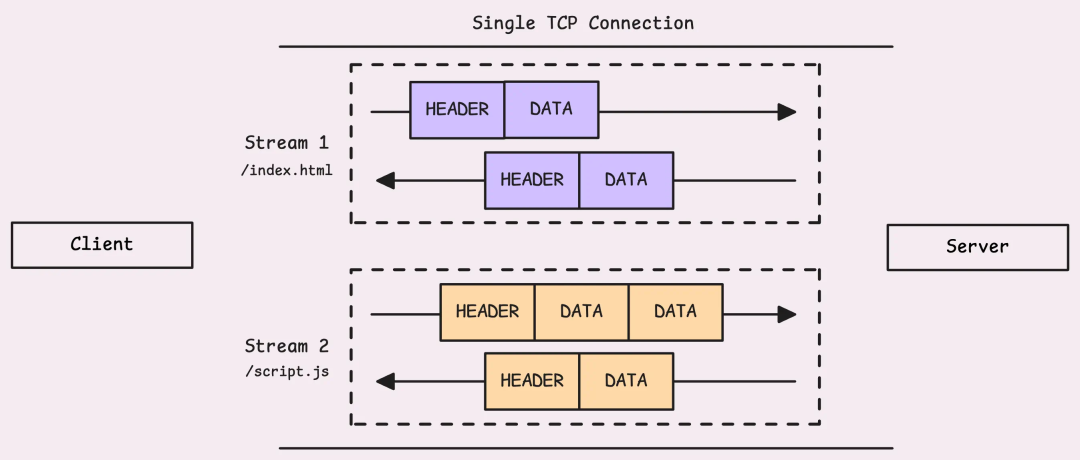

HTTP/2 splits a single connection into multiple independent data streams. Each data stream has its unique identifier, known as stream ID, and these data streams can operate in parallel. This design addresses the Head-of-Line blocking issue at the application layer (the layer where HTTP resides). Even if a data stream experiences a delay, it does not affect the transmission of other data streams.

Multiple stream data frames on a single connection

However, HTTP/2 still operates over TCP, so it has not completely eliminated the Head-of-Line blocking issue.

At the transport layer, TCP insists on delivering packets to the application layer in order. If a packet is lost or delayed, TCP will make all subsequent packets wait until the missing part is resolved. Once the delayed packet arrives, TCP will deliver the queued packets to the HTTP/2 layer (or application layer) in the correct order.

Even if data for other streams has already arrived and is sitting in the buffer, the receiver still has to wait for the missing packet before it can process any of it.

To completely break through the limitations of TCP, one must consider protocols like QUIC, which is based on UDP (User Datagram Protocol), and this is also the core motivation behind HTTP/3.

Of course, HTTP/2 not only addresses the pain points of HTTP/1.1 but also opens up new possibilities. Let’s dive deeper into how it works.

How HTTP/2 Works

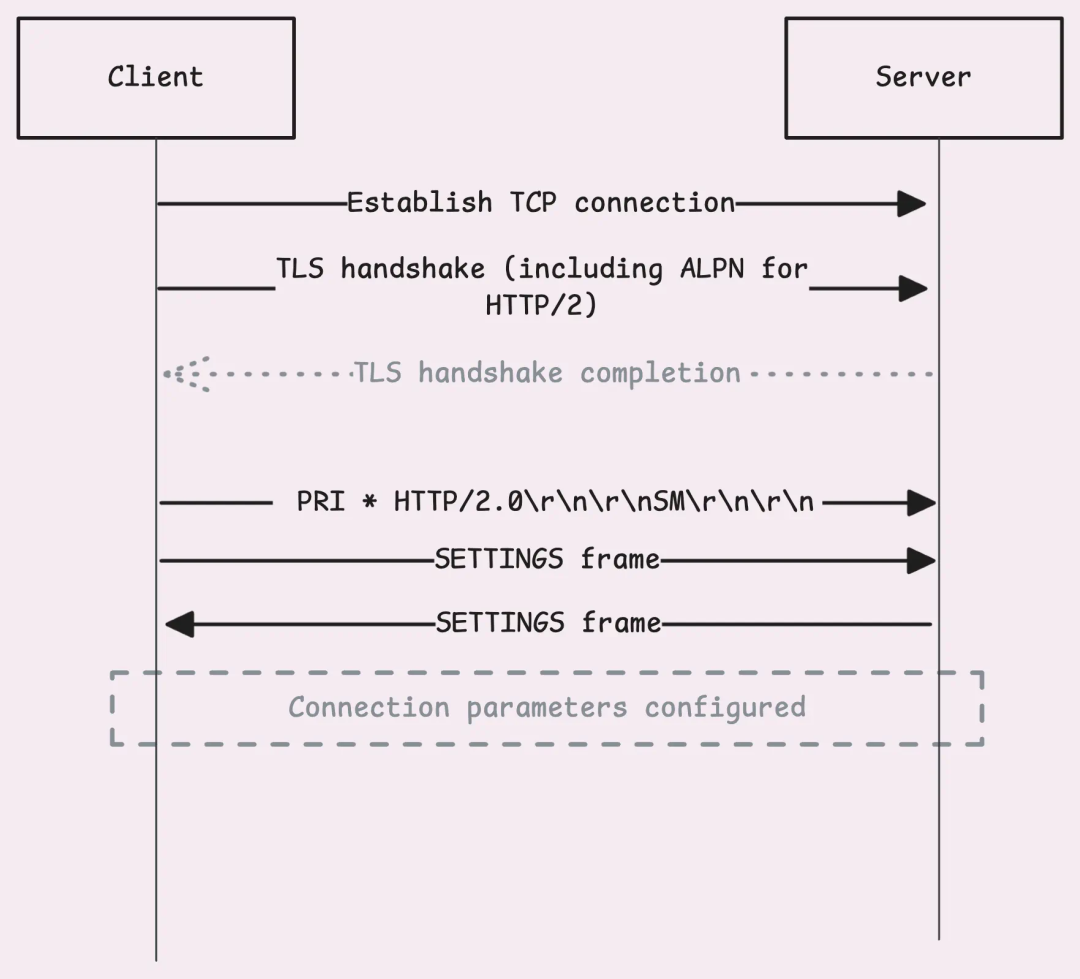

When a client establishes a TLS connection, it first sends a `ClientHello` message. This message includes the ALPN (Application-Layer Protocol Negotiation) extension, which lists the protocols the client supports. Typically this includes “h2” for HTTP/2 and “http/1.1” as a fallback, possibly alongside other protocols.

The server’s TLS stack matches this list against the protocols it supports. If both sides support “h2”, the server confirms this choice in its `ServerHello` response.

After that, the TLS handshake will continue as usual, including setting up encryption keys, validating certificates, and other steps.
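The matching step can be pictured as a small selection function. This is only an illustration: `selectALPN` is a hypothetical helper, not an API of `crypto/tls` (which does the matching internally), and the sketch assumes the server's preference order wins.

```go
package main

import "fmt"

// selectALPN is a hypothetical helper that mimics ALPN selection:
// it returns the first protocol in the server's preference list
// that the client also offered, or "" if there is no overlap.
func selectALPN(clientProtos, serverProtos []string) string {
	offered := make(map[string]bool, len(clientProtos))
	for _, p := range clientProtos {
		offered[p] = true
	}
	for _, p := range serverProtos {
		if offered[p] {
			return p
		}
	}
	return ""
}

func main() {
	client := []string{"h2", "http/1.1"} // list sent in the ClientHello
	server := []string{"h2", "http/1.1"} // protocols the server supports
	fmt.Println(selectALPN(client, server)) // h2
}
```

If the server only supported "http/1.1", the same function would return the fallback instead.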

Connection Preface

Once the handshake is complete, the client sends a connection preface. It starts with a specific 24-byte sequence: `PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n`. This sequence confirms that the HTTP/2 protocol is being used. At this point, compression and framing have not yet begun.
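To make the preface concrete, here is a small sketch that spells out the byte sequence and checks whether a buffer starts with it. The names `clientPreface` and `hasPreface` are made up for this example; they are not part of the standard library.

```go
package main

import (
	"bytes"
	"fmt"
)

// clientPreface is the fixed byte sequence a client sends first
// on every HTTP/2 connection.
const clientPreface = "PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n"

// hasPreface reports whether buf starts with the client preface.
func hasPreface(buf []byte) bool {
	return bytes.HasPrefix(buf, []byte(clientPreface))
}

func main() {
	fmt.Println(len(clientPreface)) // 24

	// The preface followed by the 9-byte header of an empty SETTINGS frame.
	h2 := []byte(clientPreface + "\x00\x00\x00\x04\x00\x00\x00\x00\x00")
	fmt.Println(hasPreface(h2)) // true

	fmt.Println(hasPreface([]byte("GET / HTTP/1.1\r\n"))) // false
}
```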

Immediately following the connection preface, the client sends a SETTINGS frame. This is a connection-level control frame that is not related to any stream, essentially indicating to the server: “This is my preference.” It includes flow control options, maximum frame size, and other configurations.

Server and client exchanging SETTINGS frames

Once the server has received the client’s preface, it sends its own connection preface in response, which consists of a `SETTINGS` frame. After this exchange, the connection is ready to carry requests.

When the client is ready to send a request, it will create a new data stream with a unique identifier, known as stream ID. The data stream IDs initiated by the client are always odd—1, 3, 5, etc.

You may wonder why stream IDs use odd numbers instead of sequential numbering like 1, 2, 3. There is a clever rule:

- Odd stream IDs are reserved for client-initiated requests.

- Even stream IDs are reserved for server use, typically for server push and other server-initiated functions.

- Stream ID 0 is special and is only used for connection-level (not stream-level) control frames, which apply to the entire connection.
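These numbering rules fit in a few lines of code. The helpers below, `streamOwner` and `nextClientStreamID`, are hypothetical names used only for this sketch.

```go
package main

import "fmt"

// streamOwner is a hypothetical helper that classifies a stream ID
// according to the rules above.
func streamOwner(id uint32) string {
	switch {
	case id == 0:
		return "connection"
	case id%2 == 1:
		return "client"
	default:
		return "server"
	}
}

// nextClientStreamID returns the next ID a client would use after last,
// where last is 0 (nothing opened yet) or the previous odd stream ID.
func nextClientStreamID(last uint32) uint32 {
	if last == 0 {
		return 1
	}
	return last + 2
}

func main() {
	for _, id := range []uint32{0, 1, 2, 3} {
		fmt.Printf("stream %d: %s\n", id, streamOwner(id))
	}
	fmt.Println(nextClientStreamID(0), nextClientStreamID(1)) // 1 3
}
```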

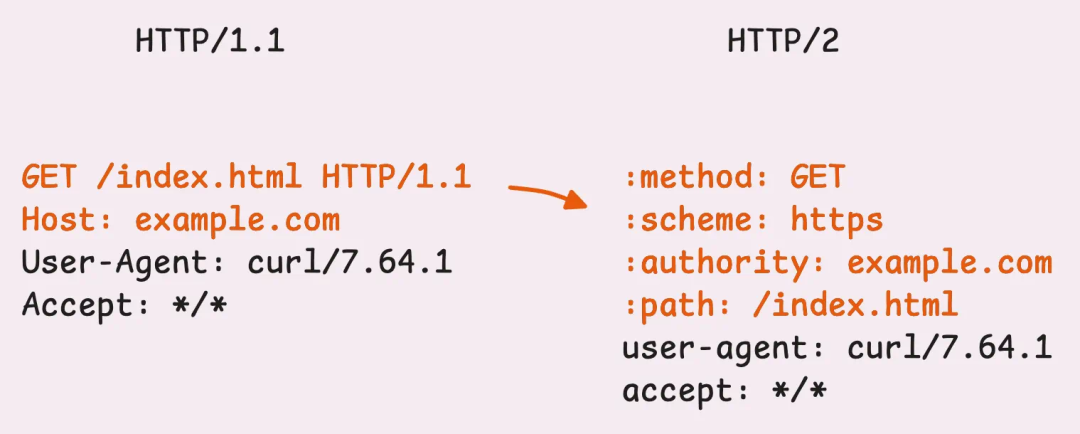

When the data stream is ready, the client sends a `HEADERS` frame. This frame carries everything you would expect: the equivalent of the HTTP/1.1 request line (such as `GET / HTTP/1.1`) and the header fields that follow it. However, the structure and transmission of these headers differ.

- Structure: HTTP/2 introduces pseudo-header fields to define information such as method, path, and status. They are followed by the familiar header fields, such as `User-Agent`, `Content-Type`, etc.

- Transmission: Header information is compressed using the HPACK algorithm and transmitted in binary format.

“Pseudo-headers? HPACK compression? What is going on here?”

Let’s first understand the pseudo-header fields.

If you frequently use Chrome’s developer tools or other inspection tools, these may already look familiar. In HTTP/2, pseudo-header fields separate special headers from regular ones. The special headers (`:method`, `:path`, `:scheme`, and `:status`) always come first. They are followed by regular headers such as `Accept`, `Host`, and `Content-Type`, in the usual format.

Comparison of HTTP/1.1 and HTTP/2 header formats

In HTTP/1.1, this type of information is scattered across the request line and header fields. This design is not elegant and often relies on conventions or context to fill in missing information. For example:

- Protocol type (HTTP or HTTPS) is usually implied by the connection method. If it is a TLS connection using port 443, it is naturally HTTPS.

- The `Host` request header, added in HTTP/1.1 for virtual hosting, is just one of many request headers and not a core part of the request structure.

With the introduction of pseudo-header fields (starting with a colon, like :method or :path) in HTTP/2, these ambiguities are cleared up.

“So what is HPACK compression?”

Unlike HTTP/1.1, which sends headers as plain text separated by line breaks (`\r\n`), HTTP/2 encodes headers in binary format. This is where HPACK, a compression algorithm designed specifically for HTTP/2, comes into play. HPACK not only saves space but also avoids retransmitting the same header data.

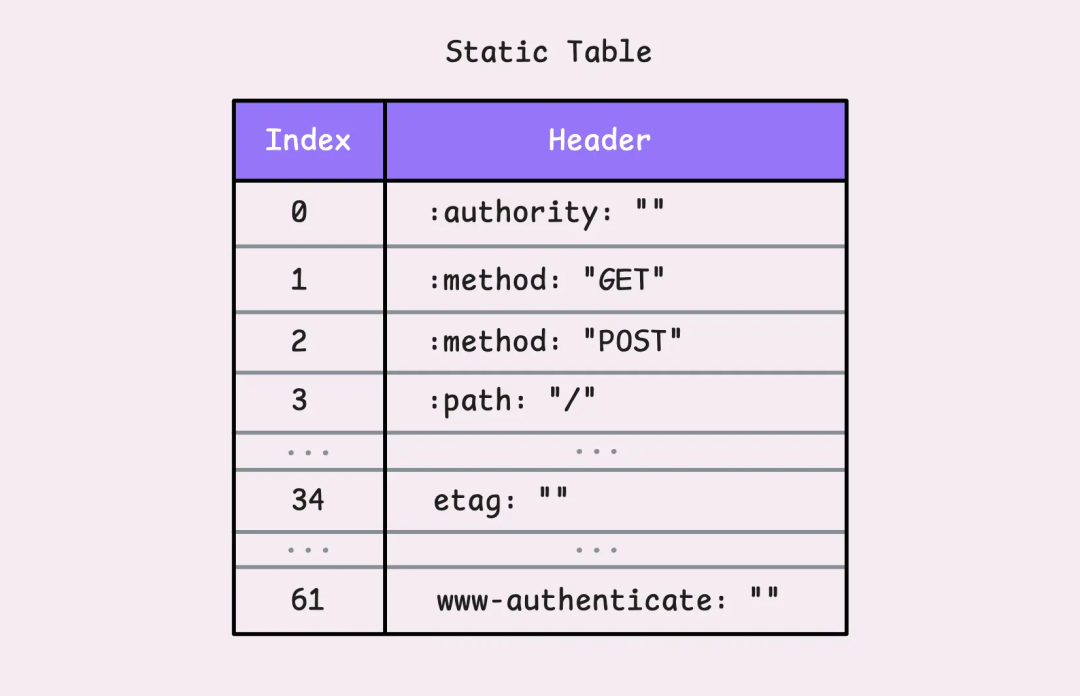

HPACK uses two tables to manage headers: a static table and a dynamic table. The static table acts like a dictionary shared between the client and server. It contains 61 predefined entries for the most commonly used HTTP headers. For the details, see the static_table.go file in the `golang.org/x/net/http2/hpack` package.

Static table of commonly used HTTP headers

Suppose you send a GET request with a `:method: GET` header.

HPACK does not need to transmit the entire header; it only needs to send the index 2, which both sides know refers to the key-value pair `:method: GET`. If the key matches but the value differs, as in `etag: some-random-value`, HPACK can still reuse the index of the key (34 in this case) and transmit only the new value. This way, the full header name never has to be retransmitted.

“What about `some-random-value`?”

It is encoded with Huffman coding, giving something like `34: huffman("some-random-value")` (pseudocode). Interestingly, the entire header `etag: some-random-value` is then added to the dynamic table.

The dynamic table starts off empty and grows as new headers (ones not in the static table) are sent. This makes HPACK a stateful protocol: both the client and server have to maintain their dynamic tables for the lifetime of the connection. Dynamic table entries are addressed by index starting at 62 (since 1-61 are occupied by the static table); the most recently added entry takes index 62, and older entries shift to higher indices. Once a header is in the table, its index can be sent instead of the header itself. This design has the following characteristics:

- Connection-level: The dynamic table is shared among all data streams on the same connection. Both the server and client maintain a copy.

- Capacity limit: The default maximum capacity of the dynamic table is 4 KB (4,096 bytes), which can be adjusted through the `SETTINGS_HEADER_TABLE_SIZE` parameter in the `SETTINGS` frame. When the table is full, the oldest headers are evicted to make room for new ones.

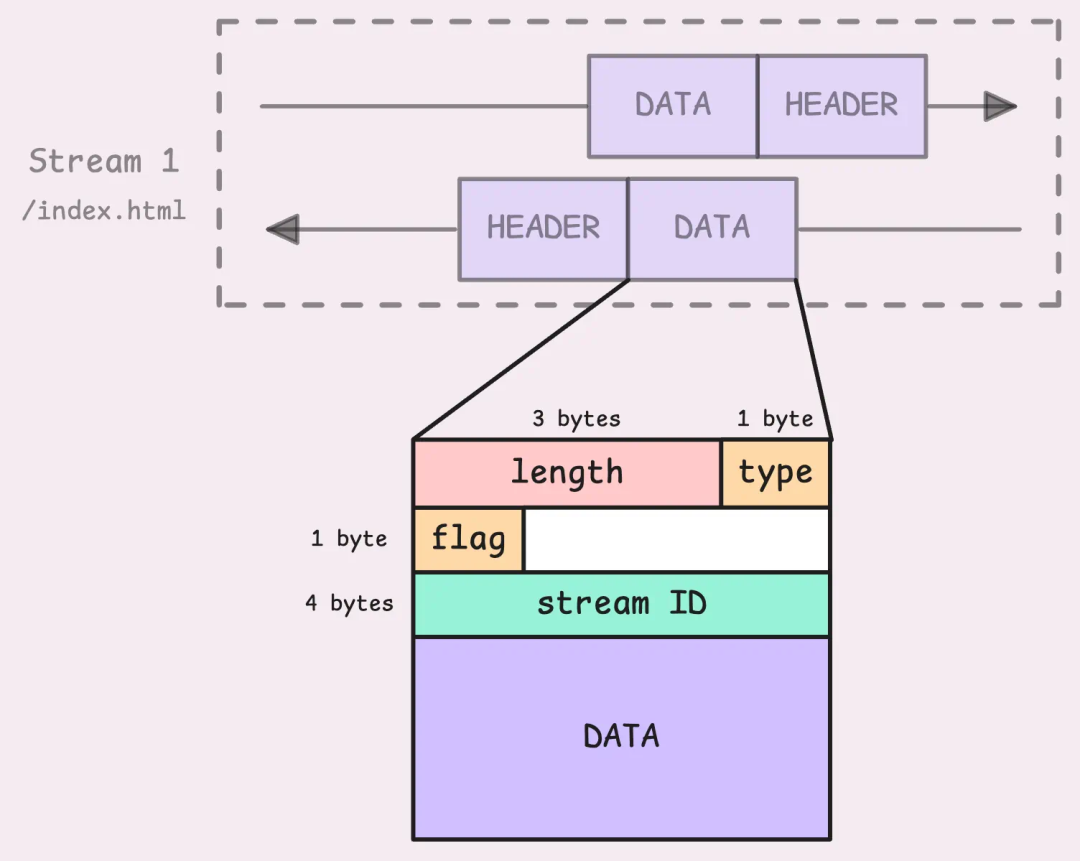

Detailed Explanation of Data Frames

If there is a request body, it is sent in `DATA` frames. When the request body exceeds the maximum frame size (16 KB, or 16,384 bytes, by default), it is split into multiple `DATA` frames that share the same stream ID.
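The splitting itself is simple chunking. The sketch below uses a hypothetical helper, `splitBody`, to show how a 40,000-byte body would be cut into payloads of at most 16,384 bytes; a real implementation also has to respect flow-control windows, which are ignored here.

```go
package main

import "fmt"

// defaultMaxFrameSize is the default maximum payload size of a frame.
const defaultMaxFrameSize = 16384

// splitBody is a hypothetical helper that cuts body into DATA frame
// payloads of at most maxFrameSize bytes. maxFrameSize must be positive.
// An empty body yields a single empty payload.
func splitBody(body []byte, maxFrameSize int) [][]byte {
	var chunks [][]byte
	for len(body) > maxFrameSize {
		chunks = append(chunks, body[:maxFrameSize])
		body = body[maxFrameSize:]
	}
	return append(chunks, body)
}

func main() {
	body := make([]byte, 40000)
	for i, chunk := range splitBody(body, defaultMaxFrameSize) {
		fmt.Printf("DATA frame %d: %d bytes\n", i+1, len(chunk))
	}
	// DATA frame 1: 16384 bytes
	// DATA frame 2: 16384 bytes
	// DATA frame 3: 7232 bytes
}
```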

A single TCP connection carrying multiple data streams

“So, where is the stream ID in the frame?”

Good question. We haven’t discussed the structure of frames yet.

In HTTP/2, frames are not just containers for data or headers. Each frame begins with a 9-byte frame header, which is not to be confused with the HTTP headers we discussed earlier.

Analysis of HTTP/2 frame header

Let’s look at the structure. First comes the length (24 bits), which gives the size of the frame payload, excluding the frame header itself. Next is the type (8 bits), which identifies the kind of frame (such as DATA, HEADERS, PRIORITY, etc.). Then come the flags (8 bits), which carry additional information about the frame. For example, the `END_STREAM` flag (0x1) indicates that there will be no more frames on this stream.

Finally, there is the stream identifier, which identifies the stream the frame belongs to. It occupies the last 32 bits of the header: a reserved most significant bit, which must be set to 0, followed by the 31-bit stream ID.
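Putting the four fields together, a 9-byte frame header can be decoded as follows. This is a minimal sketch: the `frameHeader` type and `parseFrameHeader` function are names invented for this example, and it decodes only the header, not the payload.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

// frameHeader mirrors the 9-byte header at the start of every frame.
type frameHeader struct {
	Length   uint32 // 24-bit payload length
	Type     uint8  // frame type, e.g. 0x0 DATA, 0x1 HEADERS
	Flags    uint8  // type-specific flags
	StreamID uint32 // 31-bit stream identifier
}

// flagEndStream is the END_STREAM flag bit.
const flagEndStream = 0x1

// parseFrameHeader decodes the fixed 9-byte frame header.
func parseFrameHeader(b [9]byte) frameHeader {
	return frameHeader{
		Length: uint32(b[0])<<16 | uint32(b[1])<<8 | uint32(b[2]),
		Type:   b[3],
		Flags:  b[4],
		// Mask off the reserved most significant bit.
		StreamID: binary.BigEndian.Uint32(b[5:9]) & 0x7fffffff,
	}
}

func main() {
	// A DATA frame with a 5-byte payload on stream 1, END_STREAM set.
	raw := [9]byte{0x00, 0x00, 0x05, 0x00, 0x01, 0x00, 0x00, 0x00, 0x01}
	h := parseFrameHeader(raw)
	fmt.Printf("length=%d type=%d endStream=%t stream=%d\n",
		h.Length, h.Type, h.Flags&flagEndStream != 0, h.StreamID)
	// length=5 type=0 endStream=true stream=1
}
```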

“What about the order of frames within a stream? What if frames arrive out of order?”

Indeed, while the stream identifier tells us which stream a frame belongs to, it does not specify the order of frames. The answer lies at the TCP layer. Since HTTP/2 runs over TCP, the transport guarantees in-order delivery. Even if packets take different paths through the network or arrive out of order, TCP hands the bytes to the receiver in exactly the order in which they were sent.

This is closely related to the Head-of-Line blocking issue we discussed earlier.

When the server receives a `HEADERS` frame, it creates a new data stream with the same stream ID as the request.

The server first sends its own `HEADERS` frame, which contains the response status and header information (compressed with HPACK). The response body is then sent in `DATA` frames. Thanks to multiplexing, the server can interleave frames from multiple streams, sending chunks of different responses over the same connection.

On the client side, response frames are grouped by stream ID. The client decompresses the `HEADERS` frame and processes the `DATA` frames of each stream in order.

Even with multiple active streams, every frame ends up with the response it belongs to.

Flow Control

When a frame arrives with its `END_STREAM` flag set (the lowest bit of the flags field, 0x1), it is a signal. It tells the receiver: “That’s it; there will be no more frames on this stream.” At this point, the server can send back the requested data and set `END_STREAM` on its own final frame to end the stream.

However, ending a stream does not close the entire connection. The connection remains open, and other streams can continue to operate normally.

If the server needs to close the connection, it sends a `GOAWAY` frame. This is a connection-level control frame used to shut the connection down gracefully. The `GOAWAY` frame includes the ID of the last stream the server will process. The message essentially says: “I am wrapping up; streams with higher IDs will not be processed, but ongoing streams can complete normally.” This is why it is called a graceful shutdown.

After sending a `GOAWAY` frame, the sender usually waits briefly to give the receiver time to process the message and stop opening new streams. This brief pause helps avoid an abrupt TCP reset (RST), which would immediately terminate all streams and cause chaos.
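From the client's point of view, the last stream ID in a `GOAWAY` frame draws a line between requests that may still complete and requests that have to go elsewhere. A tiny sketch, using a hypothetical helper named `willBeProcessed`:

```go
package main

import "fmt"

// willBeProcessed is a hypothetical helper: given the last stream ID
// announced in a GOAWAY frame, it reports whether a client-initiated
// stream may still be processed on this connection.
func willBeProcessed(streamID, lastStreamID uint32) bool {
	return streamID <= lastStreamID
}

func main() {
	const lastStreamID = 5 // value carried in the GOAWAY frame
	for _, id := range []uint32{1, 3, 5, 7, 9} {
		fmt.Printf("stream %d: processed=%t\n", id, willBeProcessed(id, lastStreamID))
	}
}
```

In this example streams 7 and 9 fall above the line, so a client would typically retry those requests on a new connection.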

HTTP/2 also includes some other useful features. During the connection, both sides can send `WINDOW_UPDATE` frames to control data flow, `PING` frames to check that the connection is alive, and `PRIORITY` frames to adjust stream priority. If something goes wrong, a `RST_STREAM` frame can close a single stream without affecting the rest of the connection.

That covers the main content of HTTP/2. Next, let’s see how to implement these features in Go.

HTTP/2 Implementation in Go

You may not have noticed, but Go’s `net/http` package has built-in support for HTTP/2.

“Wait, does that mean HTTP/2 is enabled by default?”

This question cannot be simply answered with a yes or no.

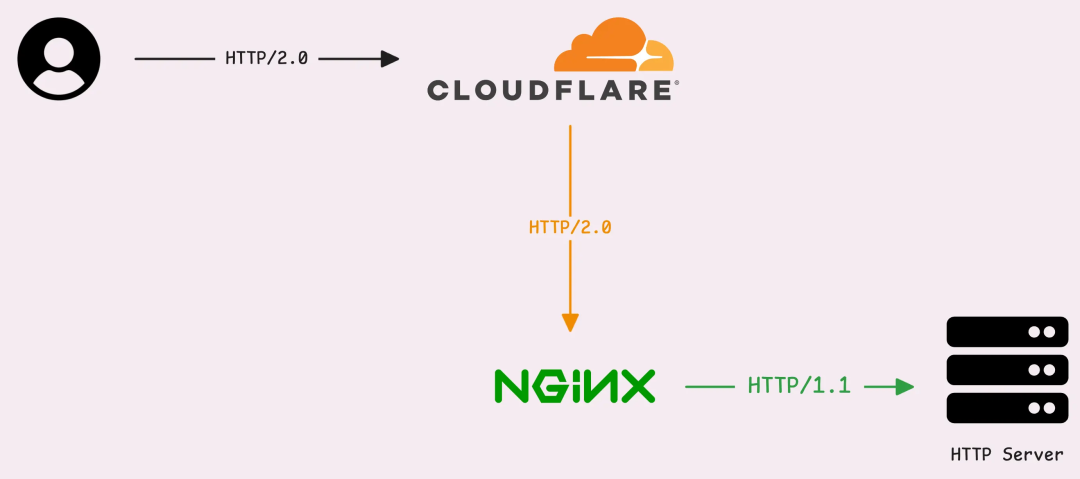

If your service runs on HTTPS, HTTP/2 is likely enabled automatically. However, if it is just regular HTTP, that may not be the case. The following common scenarios may prevent HTTP/2 from being effective:

- Your service uses regular HTTP and simply calls `ListenAndServe`.

- You are using a Cloudflare proxy. In this case, requests from users to Cloudflare may use HTTP/2, but the connection from Cloudflare to your server (origin) usually still uses HTTP/1.1.

- You are behind an Nginx that has HTTP/2 enabled. Nginx acts as a TLS termination point, responsible for decrypting requests and encrypting responses, while still communicating with your service using HTTP/1.1.

Mixed Protocols: HTTP/2 and HTTP/1.1

If you want your service to use HTTP/2 directly, you need to configure SSL/TLS.

From a technical perspective, HTTP/2 can run without TLS, but this is not the standard practice for external traffic. However, it is feasible in internal environments such as microservices or private networks. If you are curious, feel free to give it a try.

Without TLS, however, clients may still default to HTTP/1.1, so the approaches below do not guarantee that external clients will talk to your HTTP server over HTTP/2.

Let’s look at a simple example. First, we set up a basic HTTP server on port 8080:

Then we write a basic HTTP client to test:

To highlight the core concepts, error handling is omitted here.

From the output, we can see that both the request and response are using HTTP/1.1, which is entirely expected. Without HTTPS or specific configurations, HTTP/2 will indeed not be enabled.

By default, the Go HTTP client uses `DefaultTransport`, which supports both HTTP/1.1 and HTTP/2. It also has a useful field, `ForceAttemptHTTP2`, which is enabled by default:
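Rather than reading the standard library source, you can check the field at runtime. The helper name `defaultForcesHTTP2` is made up for this sketch.

```go
package main

import (
	"fmt"
	"net/http"
)

// defaultForcesHTTP2 reports whether http.DefaultTransport is an
// *http.Transport with ForceAttemptHTTP2 enabled.
func defaultForcesHTTP2() bool {
	t, ok := http.DefaultTransport.(*http.Transport)
	return ok && t.ForceAttemptHTTP2
}

func main() {
	// Prints true unless the program has replaced http.DefaultTransport.
	fmt.Println(defaultForcesHTTP2())
}
```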

“Since both our client and server support HTTP/2, why aren’t they using HTTP/2?”

Indeed, both parties do have HTTP/2 capabilities—but only in HTTPS scenarios. In regular HTTP, there is a missing key element: support for unencrypted HTTP/2. However, it can be easily enabled:
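Here is a sketch of what that can look like, assuming Go 1.24 or newer, where `http.Protocols` and the `Protocols` fields on `http.Server` and `http.Transport` are available. To keep it runnable in one process, the sketch starts the server on an ephemeral local port instead of 8080; `unencryptedHTTP2` is a helper name invented for the example.

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net"
	"net/http"
)

// unencryptedHTTP2 returns a protocol set in which only
// unencrypted HTTP/2 is enabled.
func unencryptedHTTP2() *http.Protocols {
	p := new(http.Protocols)
	p.SetUnencryptedHTTP2(true)
	return p
}

func main() {
	protocols := unencryptedHTTP2()

	server := &http.Server{
		Handler: http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
			fmt.Fprintf(w, "Request protocol: %s\n", r.Proto)
		}),
		Protocols: protocols,
	}

	// Ephemeral local port so the sketch does not collide with other services.
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		log.Fatal(err)
	}
	go server.Serve(ln)
	defer server.Close()

	client := &http.Client{
		Transport: &http.Transport{Protocols: protocols},
	}
	resp, err := client.Get("http://" + ln.Addr().String())
	if err != nil {
		log.Fatal(err)
	}
	defer resp.Body.Close()

	body, _ := io.ReadAll(resp.Body)
	fmt.Printf("Response protocol: %s\n%s", resp.Proto, body)
}
```

Because HTTP/1.1 is not in the client's protocol set, the transport talks HTTP/2 over the plain TCP connection from the first byte, without an upgrade step.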

Calling `protocols.SetUnencryptedHTTP2(true)` enables unencrypted HTTP/2, so the client and server can talk HTTP/2 directly without HTTPS. This small change makes everything fall into place. Go also supports HTTP/2 through the `golang.org/x/net/http2` package, which gives you more control. Here’s a configuration example:

This shows that HTTP/2 does not strictly depend on TLS; it can also run directly over a plain TCP connection. In most cases, though, if your server has TLS enabled, Go’s default HTTP client will automatically use HTTP/2 and fall back to HTTP/1.1 when necessary, with no additional configuration.
