An effective way to resolve the tension between scarce real-robot data and scenario generalization.

Written by | Huang Nan
Edited by | Yuan Silai
Source | HardK (ID: south_36kr)
Cover Source | IC photo

HardK has learned that Beijing BeingBeyond Technology Co., Ltd. (hereinafter “BeingBeyond”) recently closed a financing round worth tens of millions of yuan. Lenovo Star led the round, with Xinglian Capital (Z Fund), Yanyuan Venture Capital, and Binfu Capital participating and Momentum Capital serving as the exclusive financial advisor. The funds will go toward core technology research and development, faster iteration and industrial validation of the company’s existing models, and stronger technical barriers and product competitiveness.

Founded in January 2025, “BeingBeyond” focuses on researching and applying general-purpose models for humanoid robots. Founder Lu Zongqing is a tenured associate professor at Peking University’s School of Computer Science. He previously headed the multimodal interaction research center at the Zhiyuan Research Institute and was responsible for the National Natural Science Foundation of China’s first original exploration project on general intelligent agents. Many core members also come from the Zhiyuan Research Institute and bring extensive research and application experience in reinforcement learning, computer vision, robot control, and multimodal learning.

Currently, the tension between data scale and generalization ability is the core constraint on improving the performance of embodied brains. On one hand, for embodied robots to act and make decisions in a highly human-like way, they must be trained on massive, diverse data. That data spans everything from trivial daily operations to complex environmental interactions, and its scale grows exponentially. Yet data collection still faces multiple technical and resource barriers: it is difficult and labor-intensive, and storage costs climb quickly as data volume grows.

On the other hand, even with massive data, robots still need strong generalization ability to respond flexibly to new tasks, new objects, and new disturbances in unknown environments. Existing models perform poorly in scenarios that differ significantly from what they were trained on and struggle to transfer learned knowledge to new contexts, so they adapt poorly in practical applications.

Therefore, how to enhance generalization ability with limited data scale has become a key challenge for embodied brains to break through performance bottlenecks and move towards practical applications.

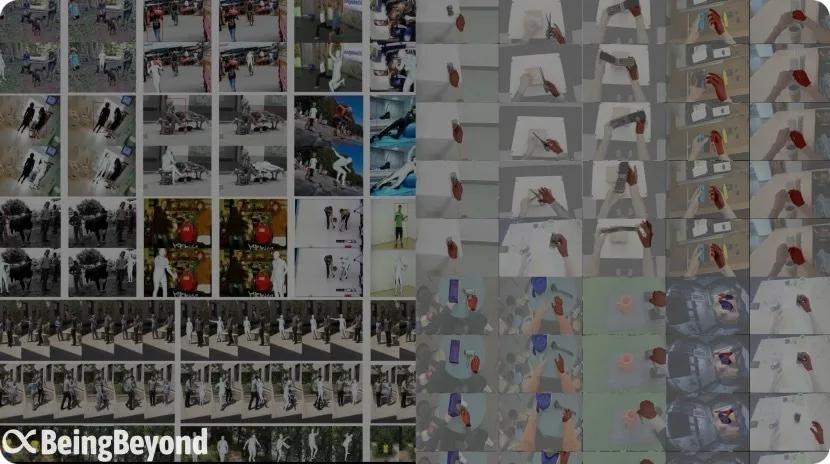

“The pre-training data used by BeingBeyond” (Image source/Company)

Focusing on the two core capabilities of humanoid robots, manipulation and movement, “BeingBeyond” divides its general model system into three layers: an embodied multimodal large language model, a multimodal posture model, and a motion model. It has also built a self-learning framework for embodied intelligent agents.

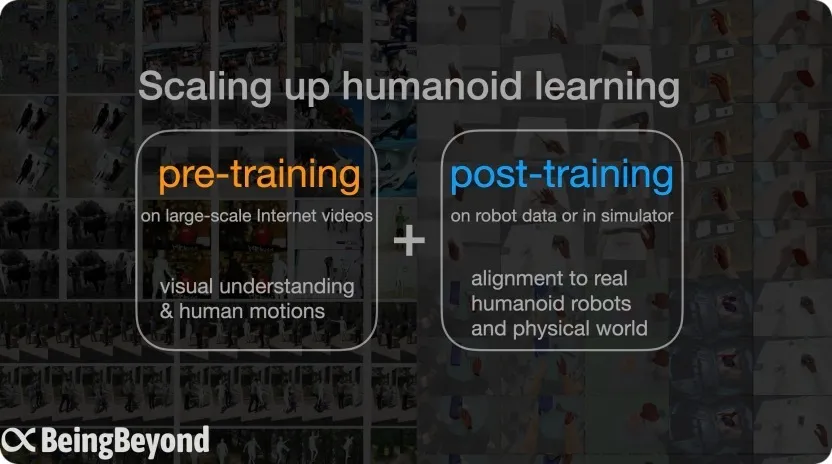

Lu Zongqing told HardK that, unlike other models, “BeingBeyond” draws its pre-training data from internet videos of human movement and hand operations. By analyzing the action sequences in these natural scenes, the company builds a pre-training foundation for robot movement and manipulation capabilities. This technical route, driven by public video data, breaks robots’ traditional reliance on real-robot data and enables cross-modal transfer from “human behavior demonstration” to “robot action generation.”

Specifically, “BeingBeyond” proposes a multimodal posture model that draws on the rich video resources of the internet, including full-body movements such as walking and dancing as well as first-person footage of fine hand operations such as grasping objects and using tools. These give the model rich and diverse action samples. From this video-action data, the model learns how various actions play out in different environments and can perform end-to-end, generalizable movement and manipulation based on real-time environmental information and task requirements.

For the embodied multimodal large language model, “BeingBeyond” has independently developed its Video Tokenizer technology, which emphasizes understanding and reasoning in spatiotemporal environments, especially for first-person video content. By decomposing a continuous video stream into visual tokens that carry both temporal order and spatial semantics, the model can accurately capture the temporal logic of an action, such as reaching out and then raising an arm to grasp an object. It can also understand the physical world and human behavior from spatial features such as object position and relative limb positions.
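The company has not published how its Video Tokenizer works, so the following is only a generic illustration of the idea of cutting a clip into tokens that keep both a time index and a spatial position. It uses plain non-overlapping "tubelets" (a few frames by a small pixel patch), a common approach in video models generally. The names `VideoToken` and `tokenize_video`, the grayscale frame format, and the toy clip are all assumptions made for this sketch.

```python
from typing import List, NamedTuple

Frame = List[List[float]]  # one grayscale frame: rows of pixel values (assumed format)


class VideoToken(NamedTuple):
    t: int               # index of the temporal block the token came from
    row: int             # patch row within the frame grid
    col: int             # patch column within the frame grid
    values: List[float]  # flattened pixels of the tubelet


def tokenize_video(frames: List[Frame], t_size: int, p_size: int) -> List[VideoToken]:
    """Cut a clip into non-overlapping tubelets of t_size frames x p_size x p_size pixels.

    Hypothetical helper for illustration; a real tokenizer would go on to
    project or quantize each tubelet into a learned embedding.
    """
    height, width = len(frames[0]), len(frames[0][0])
    tokens = []
    for t0 in range(0, len(frames) - t_size + 1, t_size):
        for y0 in range(0, height - p_size + 1, p_size):
            for x0 in range(0, width - p_size + 1, p_size):
                values = [
                    frames[t][y][x]
                    for t in range(t0, t0 + t_size)
                    for y in range(y0, y0 + p_size)
                    for x in range(x0, x0 + p_size)
                ]
                tokens.append(
                    VideoToken(t0 // t_size, y0 // p_size, x0 // p_size, values)
                )
    return tokens


# Toy clip: 4 frames of 4x4 pixels, where every pixel stores its frame index.
clip = [[[float(t)] * 4 for _ in range(4)] for t in range(4)]
tokens = tokenize_video(clip, t_size=2, p_size=2)
print(len(tokens))  # 2 temporal blocks x 2 x 2 patches = 8
print(tokens[0])
```

Because each token records where and when it came from, a downstream model can in principle reason about the order of events (reach, then lift) as well as about spatial layout, which is the property the article attributes to the company's tokenizer.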

Currently, a simple combination of a multimodal large language model and a motion-operation policy is already ready for commercial deployment, but robots’ generalization ability struggles to keep up with the dynamic environmental changes of real scenarios. Giving humanoid robots the ability to learn autonomously has therefore become the key breakthrough for their commercialization.

To this end, “BeingBeyond” proposes the Retriever-Actor-Critic framework, which combines RAG (Retrieval-Augmented Generation) over real interaction data with reinforcement learning to improve the model’s response accuracy and the user experience. This creates a closed loop of “data collection – model optimization – effect feedback,” letting robots adapt dynamically to changing scenarios and offering a feasible technical path to large-scale deployment.
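The article does not describe the internals of the Retriever-Actor-Critic framework. As a rough illustration of how retrieval over past interactions and reinforcement learning can form such a closed loop, here is a toy sketch on a made-up two-action task. Everything in it is an assumption for illustration: the environment (`toy_reward`), the nearest-neighbour retriever, the single actor parameter `hint_weight`, and a critic that is only a running-average reward baseline.

```python
import math
import random
from typing import List, Tuple

Experience = Tuple[float, int, float]  # (context, action, reward)


def retrieve(memory: List[Experience], context: float, k: int) -> List[Experience]:
    """Retriever: the k stored experiences whose context is closest to the query."""
    return sorted(memory, key=lambda e: abs(e[0] - context))[:k]


def retrieved_hint(neighbours: List[Experience], n_actions: int) -> List[float]:
    """Average reward per action among retrieved experiences (0.0 if unseen)."""
    totals, counts = [0.0] * n_actions, [0] * n_actions
    for _, action, reward in neighbours:
        totals[action] += reward
        counts[action] += 1
    return [totals[a] / counts[a] if counts[a] else 0.0 for a in range(n_actions)]


def softmax(logits: List[float]) -> List[float]:
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_reward(context: float, action: int) -> float:
    """Hypothetical environment: action 1 is right when context > 0.5, else action 0."""
    return 1.0 if action == int(context > 0.5) else 0.0


def run(steps: int = 500, k: int = 5, lr: float = 0.1, seed: int = 0) -> List[float]:
    rng = random.Random(seed)
    memory: List[Experience] = []
    hint_weight = 0.0  # actor parameter: how much to trust retrieved evidence
    baseline = 0.0     # critic: running estimate of the expected reward
    rewards = []
    for _ in range(steps):
        context = rng.random()
        hint = retrieved_hint(retrieve(memory, context, k), 2)
        probs = softmax([hint_weight * h for h in hint])
        action = rng.choices([0, 1], weights=probs)[0]
        reward = toy_reward(context, action)
        # Policy-gradient step: d log pi(action) / d hint_weight, scaled by advantage.
        advantage = reward - baseline
        grad = hint[action] - sum(p * h for p, h in zip(probs, hint))
        hint_weight += lr * advantage * grad
        baseline += lr * (reward - baseline)        # critic update
        memory.append((context, action, reward))    # data collection closes the loop
        rewards.append(reward)
    return rewards


rewards = run()
print("early success rate:", sum(rewards[:100]) / 100)
print("late success rate: ", sum(rewards[-100:]) / 100)
```

In this toy setting, each new interaction is stored, later retrieved for similar situations, and used to shape the policy, while the reward feedback adjusts how much the policy relies on what it retrieves. That mirrors the "data collection – model optimization – effect feedback" loop only in spirit; a real system would retrieve over far richer interaction records and train much larger models.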

Pre-training + post-training architecture (Image source/Company)

Lu Zongqing pointed out that “BeingBeyond” first pre-trains a general action model on internet video and then uses post-training adaptation to transfer it to different robot bodies and scenarios. This technical path avoids the data waste caused by hardware iteration and effectively resolves the tension between scarce real-robot data and scenario generalization. The company is currently advancing scenario-validation partnerships with leading robot manufacturers to accelerate the application of embodied intelligence in more fields.

Investor Insights:

Gao Tianya, partner at Lenovo Star, said that the technical routes for embodied large models have not yet converged and that the field lacks a unified architectural paradigm. The BeingBeyond team’s route addresses the limited sources of training data while building a complete technical framework through a modular approach that connects the “large brain” and the “small brain.” Compared with overseas teams on similar technical routes, it has full-stack technical capabilities and relies on self-developed large models, including multimodal large models. That makes it strongly competitive in solving task and environment generalization for embodied large models and in gradually achieving “zero-shot” generalization. He said he looks forward to the team’s products being deployed in high-potential application scenarios and achieving a commercial closed loop.

Wang Pu, partner at Xinglian Capital (Z Fund), said that, as an angel investor in BeingBeyond, he is immensely proud to witness the milestone breakthroughs that Professor Lu Zongqing and his team have achieved in general humanoid robots. From building the industry’s first million-scale MotionLib dataset to developing the end-to-end Being-M0 action generation model, the team has validated the scale effect of “big data + big models” in embodied intelligence and achieved a technical closed loop for cross-platform action transfer. This innovation, which turns text instructions into precise robot actions, breaks through the limitations of traditional methods and paves the way for robots to enter ordinary households. He firmly believes that BeingBeyond will continue to lead the iteration of embodied intelligence, from dexterous operations to full-body motion control, driving robots out of the laboratory and into daily life, and said Xinglian Capital will work hand in hand with BeingBeyond to welcome a new era empowered by general robots.