【Editor’s Note】”Is C++ 80% Dead?” The author of this article has been using C++ for 18 years. After experiencing dozens of programming languages, he points out that although C++ has been one of the most commonly used programming languages for decades, it has some issues such as being unsafe, inefficient, and wasting programmers’ energy. Therefore, the article explores some languages and technologies that may replace C++, including Spiral, Numba, and ForwardCom, and introduces them in detail.

Original link: https://wordsandbuttons.online/the_real_cpp_killers.html

Reproduction without permission is prohibited!

The following is the translation:

I am a fan of C++, having written code in C++ for 18 years, and during these 18 years, I have been trying to get rid of C++.

It all started with a 3D space simulation engine at the end of 2005. The engine had all the features of C++ at that time, with triple pointers, eight layers of dependencies, and ubiquitous C-style macros. There were also some assembly code snippets, Stepanov-style iterators, and Alexandrescu-style meta-programming. In short, it had everything. So, why?

Because this engine took 8 years to develop and went through 5 different teams. Each team brought their favorite trendy technologies to the project, wrapping old code in trendy packaging, but adding very little real value to the engine itself.

At first, I seriously tried to understand every little detail, but after hitting a wall, I gave up. I just completed the tasks and fixed bugs. I can’t say my work efficiency was high; I can only say it was barely enough to avoid being fired. But later, my boss asked me, “Do you want to change some assembly code to GLSL?” Although I didn’t know what GLSL was, I thought it couldn’t be worse than C++, so I agreed. It turned out it wasn’t worse than C++.

Later, I was still writing code in C++ most of the time, but whenever someone asked me, “Do you want to try some non-C++ work?” I would say, “Of course!” and then I would do it. I have written in C89, MASM32, C#, PHP, Delphi, ActionScript, JavaScript, Erlang, Python, Haskell, D, Rust, and the dreaded scripting language InstallShield. I even wrote in VisualBasic, bash, and several proprietary languages that cannot be publicly discussed. I even created my own language; I wrote a simple Lisp-style interpreter to help game designers automatically load resources, and then went on vacation. When I returned, I found they had used this interpreter to write the entire game scene, so for a while, we had to support this interpreter.

For the past 17 years, I have been trying to get rid of C++, but every time I tried a new technology, I always ended up back at C++. Nevertheless, I still believe that programming in C++ is a bad habit. This language is not safe, does not meet efficiency expectations, and programmers waste a lot of energy on tasks unrelated to software production. Do you know that in MSVC, uint16_t(50000) + uint16_t(50000) == -1794967296? Do you know why? Your opinion coincides with mine.

As a long-time C++ programmer, I feel responsible to advise the younger generation of programmers not to take C++ as their specialization, just as those with bad habits should warn others not to repeat their mistakes.

So, why can’t I give up C++? Where lies the problem? The problem is that all programming languages, especially those so-called “C++ killers,” fail to bring advantages that surpass C++. Most of these new languages tend to constrain programmers to some extent. This is not a problem in itself; after all, transistor density doubles every 18 months, while the number of programmers only doubles every 5 years, and bad programmers not being able to write good code is not a big issue.

Today, we live in the 21st century. The number of experienced programmers exceeds that of any previous time, and we need efficient software more than ever.

Last century, writing software was simple. You had an idea, then packaged it into a UI and sold it as a desktop system software product. Running too slow? No one cared! Within 18 months, the speed of desktops would double. The important thing was to get to market, open sales, and have no bugs. Of course, it would be even better if the compiler could prevent programmers from making mistakes, because bugs do not generate revenue, and you have to pay programmers to fix bugs.

But today, things are very different. You have an idea, then package it into a Docker container and run it in the cloud. Nowadays, to get revenue, your software must solve problems for users. Even if a product only does one thing, as long as it does it correctly, it can get paid. You don’t have to keep adding features to sell new versions of the product. Instead, if your code doesn’t really work, you are the one who pays the price. Cloud bills can accurately reflect whether your program really works.

Therefore, in this new environment, you need fewer features, but all features need to perform better.

Under this premise, you will find that all the “C++ killers,” even Rust, Julia, and D, which I genuinely like and respect, do not solve the problems of the 21st century. They are still stuck in the last century. While these languages can help you write more features and have fewer bugs, they are not very useful when you need to squeeze the last bit of FLOPS out of rented hardware.

Thus, these languages are merely more competitive than C++ or can compete with each other. But most programming languages, such as Rust, Julia, and Cland, even share the same backend. All racers are sitting in the same car; how can we talk about who can win the race?

So, which technologies have advantages over C++ or traditional pre-compilers?

C++’s Number One Killer: Spiral

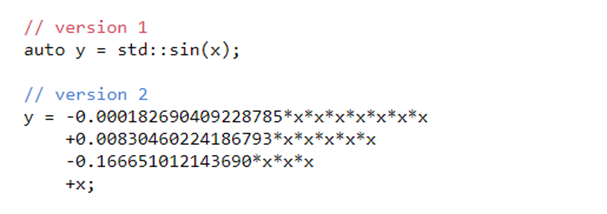

Before discussing Spiral, let me quiz you. Which version of the following code runs faster? Version 1: Standard C++ sine function; Version 2: Sine function composed of 4 polynomial models?

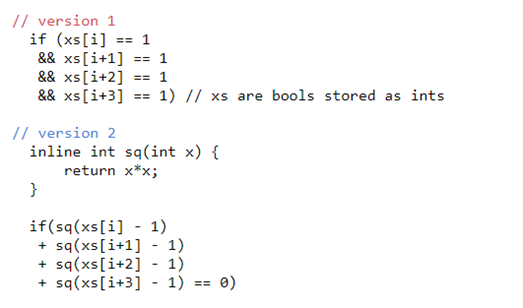

The next question. Which version of the following code runs faster? Version 1: Using short-circuit logical operations; Version 2: Converting logical expressions to arithmetic expressions?

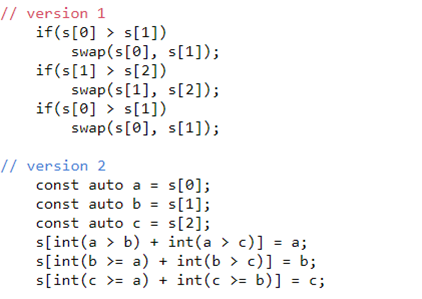

Third question, which version of tuple sorting is faster? Version 1: Branching exchange sort; Version 2: Non-branching index sort?

If you answered all of the above questions decisively, without thinking or searching online, then I can only say you were deceived by your own intuition. Did you not notice the traps? Without context, these questions have no definite answers.

-

If built using clang 11 and -O2 -march=native, running on an Intel Core i7-9700F, the polynomial model is 3 times faster than the standard sine. But if built using NVCC and –use-fast-math, running on GeForce GTX 1050 Ti Mobile, the standard sine is 10 times faster than the polynomial model.

-

On i7, if short-circuit logic is replaced with vectorized arithmetic, the code’s run speed can be doubled. But on ARMv7, using clang and -O2, standard logic is 25% faster than micro-optimized.

For index sort vs. exchange sort, on Intel, index sort is 3 times faster than exchange sort; while on GeForce, exchange sort is 3 times faster than index sort.

Therefore, our beloved micro-optimizations can potentially improve code performance by 3 times or lead to a 90% drop in speed. It all depends on the context. Wouldn’t it be nice if the compiler could choose the best alternative for us, for example, when we switch build targets, index sort magically becomes exchange sort? But unfortunately, compilers cannot do that.

-

Even if we allow the compiler to replace the sine function with a polynomial model, sacrificing precision for speed, it still does not know our target precision. In C++, we cannot express: “This function allows for errors.” We only have compiler flags like –use-fast-math, and only within the scope of translation units.

-

In the second example, the compiler does not know our values are limited to 0 or 1, and it cannot propose actionable optimizations. While we can hint this through boolean types, that is another issue.

-

In the third example, the two pieces of code are completely different, and the compiler cannot treat them as equivalent code. The code describes too many details. If there were only std::sort, it could give the compiler more freedom to choose the algorithm. But it will not choose index sort or exchange sort, as both algorithms are inefficient for large arrays, while std::sort is suitable for generic iterable containers.

Here comes Spiral. This language is a joint project of Carnegie Mellon University and ETH Zurich. In short, signal processing experts were tired of having to manually rewrite their favorite algorithms every time new hardware appeared, so they wrote a program that could automate this work. This program takes a high-level description of the algorithm and detailed descriptions of the hardware architecture and optimizes the code until the most efficient algorithm is achieved on the specified hardware.

Unlike languages like Fortran, Spiral truly addresses the optimization problem in a mathematical sense. It defines runtime as an objective function and searches for the globally optimal implementation within the variable space constrained by hardware architecture. Compilers can never truly achieve this optimization.

Compilers do not seek true optimal solutions. They merely optimize code based on heuristic rules taught by programmers. Essentially, a compiler is not a machine for finding optimal solutions; it is more like an assembler. A good compiler is like a good assembler, and nothing more.

Spiral is a research project with limited scope and budget. But the results shown are astonishing. In fast Fourier transforms, their solution significantly outperformed implementations of MKL and FFTW, with their code being about twice as fast, even on Intel.

To highlight such a grand achievement, it should be noted that MKL is Intel’s own Math Kernel Library, so they know very well how to leverage their hardware. And WWTF (Fastest Fourier Transform in the West) is a highly specialized library written by the people who know the algorithm best. Both are champions in their respective fields, and Spiral’s speed being able to reach double that of both is truly unbelievable.

When the optimization techniques used by Spiral mature and are commercialized, not only C++, but also Rust, Julia, and even Fortran will face unprecedented competitive pressure. Since one can write 2x faster code using high-level algorithm description languages, who would still use C++?

C++ Killer Number Two: Numba

You’re probably familiar with this excellent programming language. For decades, the language most familiar to most programmers has been C. In the TIOBE index, C has consistently ranked first, with other C-like languages occupying the top ten. However, two years ago, something unprecedented happened; C lost its top position.

Replacing it was Python. In the 90s, no one thought much of Python as it was just one of many scripting languages.

Some might say, “Python is slow,” but this statement is absurd, just like saying an accordion or a frying pan is slow; the speed of a language is not inherent to the language itself. Just like the speed of an accordion depends on the player, the speed of a language depends on the speed of the compiler.

Some might also say, “Python is not a compiled language,” which is also inaccurate. There are many Python compilers, and one of the most promising compilers can also be considered a Python script. Let me explain.

I once had a project, a 3D printing simulation, initially written in Python, which was later “rewritten in C++ for performance” and then ported to GPU, of course, all of this happened before I joined the project. Later, I spent months migrating the build to Linux and optimizing the GPU code for the Tesla M60, as it was the cheapest GPU at the time in AWS. After that, I verified all changes in the C++/CU code to combine it with the original Python code. Aside from designing geometric algorithms, all the work was done by me.

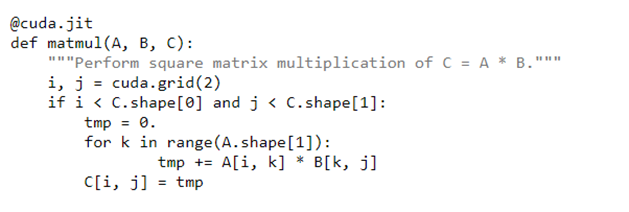

Once everything was running smoothly, a part-time student from Bremen called me asking, “I heard you are good at using multiple technologies; can you help me run an algorithm on the GPU?” “Of course!” I explained CUDA, CMake, Linux builds, testing, and optimization, etc., which took about an hour. He politely listened to my introduction and finally said, “Very interesting, but I want to ask a very specific question. I have a function, and I added @cuda.jit before the function definition, but Python cannot compile the kernel and prompts some array-related errors. Do you know what the problem is?”

I didn’t know. Later, he spent a day figuring it out himself. The reason was that Numba cannot handle native Python lists; it only accepts data from NumPy arrays. He found the problem and ran the algorithm on the GPU using Python. He didn’t encounter any of the “problems” I had spent months solving. Want to run code on Linux? No problem, just run it on Linux. Want to optimize code for the target platform? Not a problem either. Numba will optimize the code running on the platform for you because it does not pre-compile the code but compiles on demand at deployment.

Impressive, right? However, it wasn’t for me. I spent months solving problems that wouldn’t occur in Numba using C++, while that part-time student from Bremen completed the same work in just a few days. If it weren’t for it being his first time using Numba, it might have only taken him a few hours. So, what exactly is Numba? What kind of magic is it?

There is no magic. Python decorators convert each piece of code into an abstract syntax tree, allowing you to manipulate it freely. Numba is a Python library that compiles the abstract syntax tree for any supported platform using any backend. If you want to compile Python code to run in a highly parallel manner on CPU cores, just tell Numba to compile. If you want to run code on the GPU, just request it.

Numba is a Python compiler that can eliminate C++. However, theoretically, Numba does not surpass C++, since both use the same backend. Numba’s GPU programming utilizes CUDA, while CPU programming uses LLVM. In practice, because it does not need to be rebuilt in advance for each new architecture, it can adapt better to each new hardware and its potential optimizations.

Of course, it would be better if Numba had significant performance advantages like Spiral. But Spiral is more of a research project that could eventually eliminate C++, but only if it is lucky enough. The combination of Numba and Python could immediately pronounce C++ dead. If you can program in Python and achieve C++ performance, who would still write C++ code?

C++ Killer Number Three: ForwardCom

Next, let’s play another game. I’ll give you three pieces of code, and you guess which one (or possibly more) is written in assembly language.

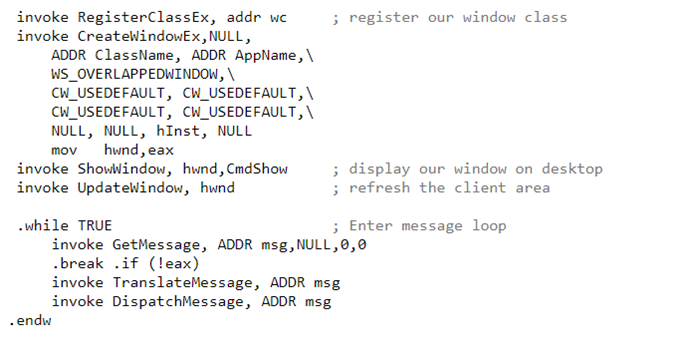

First piece of code:

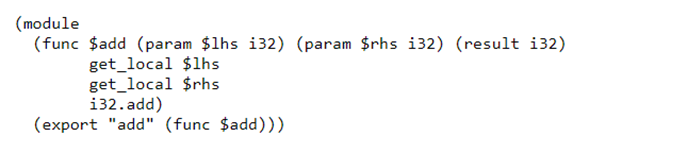

Second piece of code:

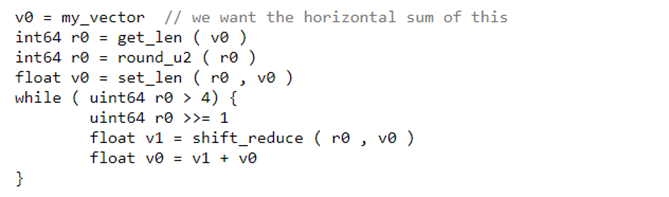

Third piece of code:

If you guessed that all three examples are assembly, congratulations!

The first example is written in MASM32. This is a macro assembler with “if” and “while” for writing native Windows applications. Note that this is not how it was written in the past, but it is still used today. Microsoft has been actively maintaining backward compatibility with Windows and the Win32 API, so all MASM32 programs written in the past can run normally on modern PCs.

Ironically, the invention of the C language was to simplify the difficulty of converting UNIX from PDP-7 to PDP-11. The design intention of the C language was to be a portable assembly language that could survive the Cambrian explosion of hardware architectures in the 70s. But in the 21st century, the evolution of hardware architecture is so slow that programs I wrote 20 years ago in MASM32 still run perfectly today, while I can’t be sure whether a C++ application built with CMake 3.21 last year can still be built with CMake 3.25 today.

The second piece of code is WebAssembly, which is not even a macro assembler; it has no “if” and “while” and is more like human-readable browser machine code. Conceptually, it can be any browser.

WebAssembly code does not depend on hardware architecture at all. It provides an abstract, virtual, and universal machine, whatever you want to call it. If you can read this text, it means your physical machine already has a hardware architecture that can run WebAssembly.

The most interesting is the third piece of code. This is ForwardCom: an assembler proposed by Agner Fog, a well-known author of C++ and assembly optimization manuals. Like WebAssembly, this is not just an assembler; it aims to achieve both backward and forward compatibility in a universal instruction set. Hence the name. ForwardCom stands for an open forward-compatible instruction set architecture. In other words, it is not just a proposal for an assembler, but also a proposal for a peace treaty.

We know that the most common series of computer architectures, x64, ARM, and RISC-V, have different instruction sets. But no one knows why this should be the case. All modern processors, except for the simplest ones, do not run the code you provide; they convert your input into microcode. Therefore, not only does the M1 chip provide an Intel backward compatibility layer, but every processor essentially provides a backward compatibility layer for its earlier versions.

So why have architecture designers failed to reach a consensus on a similar forward compatibility layer? It is nothing but the competitive ambitions between companies. But if processor manufacturers finally decide to establish a common instruction set instead of implementing a new compatibility layer for each competitor, ForwardCom could bring assembly back to the mainstream. This forward compatibility layer could heal the greatest psychological trauma of every assembly programmer: “Am I writing one-time code for this specific architecture today, only for it to be obsolete in less than a year?”

With a forward compatibility layer, this code will never become obsolete. That is the key.

Furthermore, assembly programming is also limited by another misconception: that assembly code is too difficult to write, making it impractical. Fog’s proposal also addresses this issue. If people think writing assembly code is too difficult, while writing C is not, then let’s make assembly look like C. This is not a problem. Modern assembly languages do not need to retain the appearance of their ancestors from the 1950s.

The three assembly examples you see above do not resemble “traditional” assembly, nor should they remain the same.

ForwardCom is an assembly language that can be used to write the best code that will never become obsolete without having to learn “traditional” assembly. From a realistic perspective, ForwardCom is the future of C. Not C++.

When Will C++ Finally Die?

We live in a postmodern world. What passes away is not technology, but people. Just as Latin never truly disappeared, COBOL, Algol 68, and Ada have not either, C++ is destined to remain in a state of half-life forever. C++ will never truly disappear; it will only be replaced by newer, more powerful technologies.

Strictly speaking, it is not that “it will be replaced in the future,” but rather that “it is being replaced.” My career started with C++, and now I write code in Python. I write equations, SymPy helps me solve them, and then I convert the solution into C++. Then I paste this code into the C++ library without even needing to adjust the format because clang-tidy takes care of that automatically. Static analyzers check for namespace confusion, dynamic analyzers check for memory leaks, and CI/CD is responsible for cross-platform compilation. Performance analyzers let me know how the code actually runs, and disassemblers can explain why.

If I replace C++ with technology outside of C++, then 80% of the work would not change. For most of my work, C++ is completely irrelevant. Does this mean that for me, C++ is already 80% dead?

▶ A Barometer in the Field of Large Models: 2024 Global Machine Learning Technology Conference Reveals 50+ Topics!

▶ WeChat Pay Chaos Engineering Practice

▶ OpenAI Announced No-Login Use of ChatGPT, Many Report ChatGPT Server Crashed!