Source: Electric Science Xiaoxian WeChat Official Account

Edge systems are computing systems that operate at the edge of the network, close to users and data. They are deployed off-premises and rely on existing networks to connect to other systems, such as cloud-based systems or other edge systems. Because commercial infrastructure is ubiquitous, reliable networks are often taken for granted in industrial and commercial edge systems. In some edge environments, however, such as tactical and humanitarian ones, reliable network access cannot be guaranteed. This article discusses the network challenges that arise mainly from the high uncertainty of these environments and proposes solutions for overcoming them.

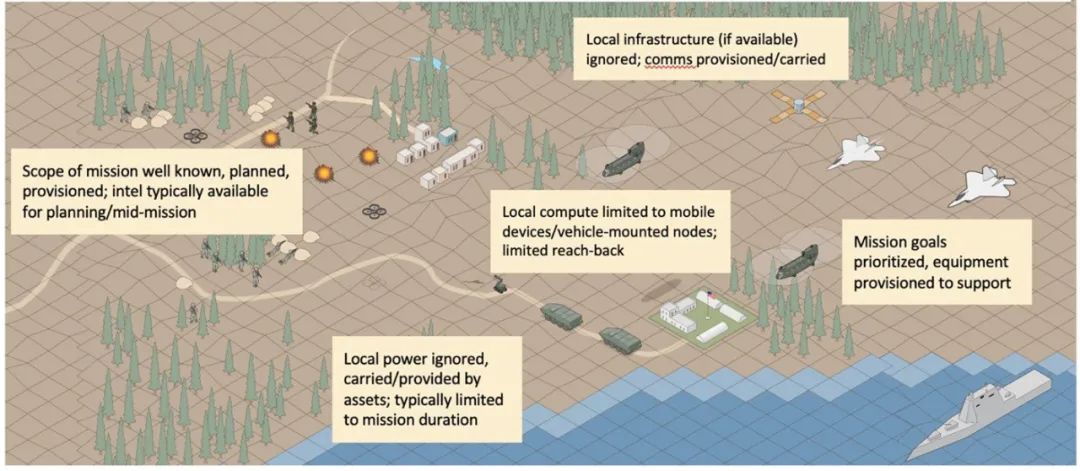

Tactical and humanitarian edge environments are characterized by limited resources, including limited network access and bandwidth, which makes access to cloud resources unreliable or impossible. Many tasks in these environments are collaborative, such as search and rescue or maintaining a common operational picture, and they require network access so that all team members can share data and stay in communication. Keeping all participants connected, however unreliable the network, is key to mission success. When cloud resources are reachable, they can further help the mission succeed.

Uncertainty is a defining characteristic of edge environments. It concerns not only whether the network is available but also whether the operating environment itself is, and disruptions in the environment can in turn interrupt the network. Tactical edge systems also operate where adversaries may attempt to disrupt or sabotage the mission. Whatever the form and timing of network interruptions, these edge systems must keep functioning when the environment or the infrastructure fails unexpectedly.

Tactical edge systems differ from systems in other edge environments. In urban and commercial edges, for instance, the unreliability of any single access point is usually offset by the backup access points that extensive infrastructure provides. In space edges, where communication delays (and the cost of deploying assets) are high, systems are built to be self-contained and fully capable while disconnected, and they communicate during regularly scheduled sessions. In tactical and humanitarian edge environments, uncertainty leads to the following key challenges.

(1) Defining the Challenge of Unreliability

The degree of assurance that data will be transmitted successfully, known as reliability, is a primary requirement for edge systems. A common way to define the reliability of modern software systems is “uptime,” the time during which the system's services are available to users. When measuring the reliability of edge systems, the availability of both the system and the network must be considered together. Edge networks are often intermittent, sporadic, and low-bandwidth, conditions collectively referred to as DIL (disconnected, intermittent, and limited), which makes uptime hard to sustain for tactical and humanitarian edge systems. Because a failure anywhere in the system or the network can prevent successful data transmission, edge system developers must take a broad view of unreliability.
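
A worked example makes the point concrete. Assuming, for illustration only, that system failures and network failures are independent, end-to-end availability is the product of the two, so even a highly available system inherits the weakness of its link. The figures below are hypothetical:

```latex
A = \frac{\text{uptime}}{\text{uptime} + \text{downtime}}, \qquad
A_{\text{end-to-end}} = A_{\text{system}} \times A_{\text{network}}
\\
A_{\text{system}} = 0.99,\; A_{\text{network}} = 0.95
\;\Rightarrow\; A_{\text{end-to-end}} = 0.99 \times 0.95 = 0.9405
```

In this hypothetical case the link, not the software, dominates: the combined service is unavailable about 6% of the time.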

(2) Design Challenges for Systems Operating on Intermittent Networks

Intermittent networks are often the easiest type of DIL network to manage. They are characterized by long periods of disconnection, with planned events triggering short or periodic reconnections. Common scenarios in which intermittent networks prevail include:

· Disaster recovery operations where all local infrastructure is completely non-operational;

· Tactical edge missions where RF communication is always subject to interference;

· Environments with planned disconnections, such as satellite operations, where communication is possible only during scheduled intervals when the relay station is pointed in the right direction.

Edge systems in such environments must be designed to make the most of bandwidth when a connection becomes available, which mainly means having data prepared and ready before the trigger that enables the connection.
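
A minimal sketch of that preparation step, assuming a hypothetical `Message` record with a priority and a size, a known window length, and a known link rate: the send plan for the next contact window is computed in advance so that no connected time is wasted deciding what to send. The names, the priority convention (lower number means more important), and the greedy fit are illustrative assumptions, not a prescribed algorithm.

```python
from dataclasses import dataclass


@dataclass
class Message:
    msg_id: str
    priority: int     # hypothetical convention: lower number = more important
    size_bytes: int


def plan_burst(queue: list[Message], window_s: float, bytes_per_s: float) -> list[str]:
    """Choose which queued messages to send during one scheduled contact window.

    Messages are taken in priority order; any message that no longer fits in
    the remaining byte budget is deferred to a later window.
    """
    budget = int(window_s * bytes_per_s)   # total bytes the window can carry
    plan = []
    for msg in sorted(queue, key=lambda m: m.priority):
        if msg.size_bytes <= budget:
            plan.append(msg.msg_id)
            budget -= msg.size_bytes
    return plan
```

For example, with a 60-second window at 4,000 bytes/s, a queue holding a casualty report, a status message, a map tile, and a 400 KB video clip yields a plan with the first three, and the clip waits for the next pass.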

(3) Design Challenges for Systems Operating on Sporadic Networks

Whereas in intermittent networks connectivity can eventually be expected, sporadic networks suffer unpredictable disconnections of varying length. These failures can occur at any time, so edge systems must be designed to tolerate them. Common scenarios for edge systems on sporadic networks include:

· Disaster recovery operations where local infrastructure is limited or partially damaged, or where unexpected physical effects occur, such as power surges or RF interference from equipment damaged by the disaster;

· Environmental effects during humanitarian and tactical edge operations, such as moving behind walls, through tunnels, or into forests, which can change RF coverage.

Handling sporadic networks mainly calls for different data distribution approaches from those used for intermittent networks; these approaches are discussed later in this article.

(4) Design Challenges for Systems Operating on Low-Bandwidth Networks

Finally, even when a connection is available, applications running at the edge often have to cope with insufficient bandwidth. This challenge calls for data encoding strategies that make the most of the available bandwidth. Common scenarios for edge systems on low-bandwidth networks include:

· Environments where many devices compete for the available bandwidth, such as disaster response teams all sharing a single satellite network connection;

· Military networks that use heavily encrypted links, whose overhead reduces the bandwidth available on each connection.

(5) The Challenge of Reliability Layering: Extending Networks

Edge networks are often more complex than simple point-to-point connections. Multiple networks using different technologies may connect devices at different physical locations, and there are usually several devices physically present at the edge. These devices may have good short-range connectivity with one another, through common protocols such as Bluetooth or WiFi mobile ad hoc networks (MANETs), or through short-range means such as tactical network radios. Such short-range networks may be far more reliable than connections to supporting networks or the wider internet, which may depend on line-of-sight or beyond-line-of-sight links (such as satellite networks) or on intermediate relay points.

Network connections to cloud or data center resources (that is, backhaul connections) may be much less reliable, yet they are valuable to edge operations because they can provide command and control (C2) updates, access to experts with knowledge not available locally, and access to large computational resources. This combination of short-range and long-range networks, together with intermediate nodes that may provide resources or connectivity, creates a multi-layered connectivity picture. Some links may be reliable but low-bandwidth, some may be reliable but available only at set times, some may have unpredictable availability, and some may combine all of these characteristics. It is this complex networking environment that drives the design of network mitigations for advanced edge capabilities.

Solutions to these challenges typically address two aspects: network reliability (i.e., whether data can be expected to get from one system to another) and network performance (i.e., what bandwidth can actually be achieved at whatever level of reliability is observed). The following common architectural strategies and design decisions influence quality attribute responses (such as the network's mean time between failures) and help improve reliability and performance, thereby mitigating the uncertainty of edge networks. Four major areas are discussed: data distribution configuration, connection configuration, protocol configuration, and data configuration.

(1) Data Distribution Configuration

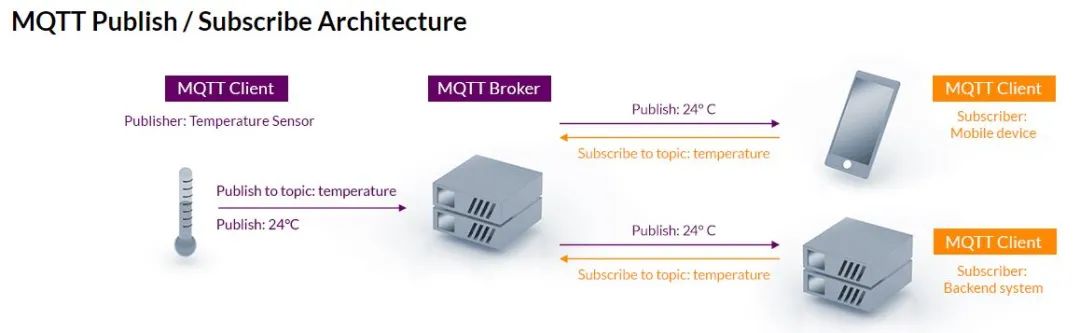

A key issue in any edge network environment is how data is distributed. A common architectural pattern is publish-subscribe (pub-sub), in which some nodes share data (publish) and other nodes register to receive updates (subscribe). This approach is popular because it eases low-bandwidth problems by sending data only to the nodes that actually need it. It also simplifies and modularizes how the systems on the network handle different types of data. It can also make data transmission more reliable by centralizing the transmission process. Finally, the pattern suits distributed containerized microservices, which are now a mainstream approach to edge system development.

· Standard Publish-Subscribe Distribution

The publish-subscribe architecture works asynchronously: some network elements publish events and others subscribe to them, which governs message exchange and event updates. Most data distribution middleware, such as ZeroMQ or the many implementations of the Data Distribution Service (DDS) standard, provides topic-based subscription, letting a system specify the type of data it wants by a content descriptor (such as location data). This truly decouples the communicating systems: any publisher can provide data to any subscriber without either needing explicit knowledge of the other. System architects can therefore build different deployments more flexibly, drawing data from different sources, whether backups, redundant sources, or entirely new ones. Publish-subscribe also simplifies recovery when a service loses its connection or fails, because a new service can start up and replace the original without any coordination or reorganization of the publish-subscribe scheme.
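
The decoupling described above can be sketched in a few lines. This is a hypothetical in-process `TopicBus`, not the ZeroMQ or DDS API; real middleware adds networking, serialization, and quality-of-service settings, but the contract is the same: publishers and subscribers share only a topic name.

```python
from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[Any], None]


class TopicBus:
    """Hypothetical in-process sketch of topic-based publish-subscribe."""

    def __init__(self) -> None:
        # topic name -> callbacks of the subscribers interested in it
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: Any) -> int:
        """Deliver only to this topic's subscribers; return how many received it.

        The publisher never learns who the subscribers are, so a replacement
        publisher can take over a topic without any reconfiguration.
        """
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)
```

A topic with no subscribers costs nothing to publish on, which is the bandwidth-limiting property mentioned earlier.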

A less widely supported enhancement to the topic-based model is multi-topic subscription, in which a system subscribes to a customized set of metadata tags so that each subscriber receives an appropriately filtered view of similar data streams. For example, imagine a robotic platform with multiple redundant location sources that needs a fusion algorithm to combine raw location data and its metadata (such as accuracy and precision, timeliness, or incrementality) into a single best available location, which all location-sensitive consumers should use. Implementing such an algorithm would produce a service subscribed to all data tagged both location and raw, consumers subscribed to data tagged location and best available, and perhaps specialized services interested only in particular sources, such as the Global Navigation Satellite System (GLONASS) or dead reckoning from an initial position and position/motion sensors. A logging service might also subscribe to all location data, regardless of source, for later review.

Situations like this, where similar data comes from multiple sources with different contextual elements, benefit greatly from data distribution middleware that supports multi-topic subscription. The approach is becoming more popular as more IoT devices are deployed, and given the volume of data that large IoT deployments generate, the bandwidth saved by this filtering can be substantial. Although few middleware vendors offer multi-topic subscription, it can provide greater flexibility for complex deployments.
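
A minimal sketch of multi-topic (tag-set) subscription applied to the location example, assuming a hypothetical `TagBus` class and made-up tag names: a subscriber receives every message whose tags include all of the tags it asked for.

```python
from dataclasses import dataclass, field


@dataclass
class TagBus:
    """Hypothetical sketch of multi-topic subscription by tag set."""
    # (tags the subscriber requires, that subscriber's inbox)
    subs: list[tuple[frozenset[str], list]] = field(default_factory=list)

    def subscribe(self, *tags: str) -> list:
        inbox: list = []
        self.subs.append((frozenset(tags), inbox))
        return inbox

    def publish(self, tags: set[str], payload) -> None:
        for wanted, inbox in self.subs:
            if wanted <= tags:      # subset test: every requested tag is present
                inbox.append(payload)


bus = TagBus()
fusion_in = bus.subscribe("location", "raw")             # input to the fusion service
consumers = bus.subscribe("location", "best-available")  # location-sensitive consumers
glonass_only = bus.subscribe("location", "raw", "glonass")
logger = bus.subscribe("location")                       # every location message

bus.publish({"location", "raw", "glonass"}, "fix-A")
bus.publish({"location", "raw", "dead-reckoning"}, "fix-B")
bus.publish({"location", "best-available"}, "fused")     # fusion service's output
```

Each subscriber sees only its slice of the same stream: the fusion input gets both raw fixes, consumers get only the fused result, and the logger gets all three.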

· Centralized Distribution

Similar to the centralized connection management offered by some distributed middleware services, a common approach to data transmission is to centralize it in a single entity. This is typically implemented with a broker that performs all data transmission for the distributed network: each application sends all of its data (publish-subscribe and otherwise) to the broker, and the broker forwards it to the appropriate recipients. MQTT (originally MQ Telemetry Transport) is a common middleware solution that implements this approach.

This centralized approach has significant value for edge networks. First, it concentrates all connection decisions in the broker, so each system can share data without knowing where, when, or how it will be delivered. Second, it allows DIL network mitigations to be implemented in a single place, so protocol and data configuration mitigations can be confined to the network links that need them.

However, routing all data through a broker has a bandwidth cost, because each message crosses the network to the broker before being forwarded to its recipients. The broker may also disconnect or become unavailable, which makes it a single point of failure. Developers of each distributed network should weigh the risk of losing the broker and carry out an appropriate cost/benefit analysis.
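
The sketch below illustrates the trade-off under stated assumptions: a hypothetical in-process `Broker` that knows every subscription and queues messages for subscribers that are currently unreachable, delivering them when they reconnect. This is loosely analogous to an MQTT broker holding messages for a client with a persistent session, but it is not MQTT's API or semantics. Every message passes through the broker, which is exactly the extra hop and single point of failure noted above.

```python
from collections import defaultdict, deque


class Broker:
    """Hypothetical sketch of centralized, broker-based distribution."""

    def __init__(self) -> None:
        self.subscriptions = defaultdict(set)  # topic -> subscribed client ids
        self.online = set()                    # clients currently reachable
        self.pending = defaultdict(deque)      # client id -> messages held for it
        self.delivered = defaultdict(list)     # client id -> messages it received

    def subscribe(self, client: str, topic: str) -> None:
        self.subscriptions[topic].add(client)

    def set_online(self, client: str, online: bool) -> None:
        if online:
            self.online.add(client)
            # Flush everything queued while the client was unreachable.
            while self.pending[client]:
                self.delivered[client].append(self.pending[client].popleft())
        else:
            self.online.discard(client)

    def publish(self, topic: str, payload) -> None:
        # Publishers only talk to the broker; the broker decides who gets what.
        for client in self.subscriptions.get(topic, ()):
            if client in self.online:
                self.delivered[client].append(payload)
            else:
                self.pending[client].append(payload)
```

Because queuing lives only in the broker, individual clients need no DIL-specific logic, but if the broker itself is lost, so is everything it was holding.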

(2) Connection Configuration

Network unreliability makes it hard for systems in an edge network to discover one another and, once discovered, to establish stable connections. Actively managing this process to minimize uncertainty improves the overall reliability of any devices collaborating on an edge network. There are two main approaches to establishing connections under unstable network conditions: individual and unified.

· Individual Connection Management

In the individual approach, each member of the distributed system is responsible for discovering and connecting to the other systems it communicates with. The DDS Simple Discovery Protocol is a standard example, and most data distribution middleware supports some version of it. However, the inherent challenges of DIL environments make this approach hard to carry out when the network is disrupted or intermittent, and it scales poorly.
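
As a rough illustration of the scaling problem, assume every node must discover every other node directly. With n nodes, individual discovery involves n(n-1) directed discovery relationships, while registering each node once with a central service (the unified approach described next) needs only n. These are counts of relationships to be established and maintained, not exact message counts for DDS or any other protocol.

```latex
D_{\text{individual}} = n(n-1), \qquad D_{\text{unified}} = n
\\
n = 50:\quad D_{\text{individual}} = 50 \times 49 = 2450, \qquad D_{\text{unified}} = 50
```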

· Unified Connection Management

A preferred approach for edge networks is to delegate node discovery to a broker or other enabling service. Many modern distributed architectures provide this through a common registration service for preferred connection types. Each system tells the common service where it is, what types of connections it offers, and what types it is interested in, so that the service can route data distribution connections (such as publish-subscribe topics, heartbeats, and other common data streams) in a unified way.

One example of a broker-style service that coordinates data distribution is the Fast DDS Discovery Server used by ROS 2. Such a service is typically the most effective option in DIL environments because it lets services and devices with good local connectivity find each other efficiently on the local network. It also handles coordination with remote devices and systems, applying mitigations for the particular challenges of the local DIL environment without requiring every node to implement them.
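
A minimal sketch of unified connection management, assuming a hypothetical `DiscoveryServer` class (this is not the Fast DDS or ROS 2 API): each node registers once with what it publishes and what it wants, and the server computes which publisher/subscriber pairs should be connected, so no node has to search the network itself.

```python
from dataclasses import dataclass, field


@dataclass
class DiscoveryServer:
    """Hypothetical registry-based discovery sketch."""
    offers: dict[str, set[str]] = field(default_factory=dict)     # node -> topics it publishes
    interests: dict[str, set[str]] = field(default_factory=dict)  # node -> topics it wants

    def register(self, node: str, publishes: set[str], wants: set[str]) -> None:
        self.offers[node] = set(publishes)
        self.interests[node] = set(wants)

    def unregister(self, node: str) -> None:
        # e.g. when a node's heartbeat lapses (heartbeat tracking not shown);
        # every route involving the node disappears with it.
        self.offers.pop(node, None)
        self.interests.pop(node, None)

    def routes(self) -> set[tuple[str, str, str]]:
        """Return the (publisher, subscriber, topic) links the server would set up."""
        return {
            (pub, sub, topic)
            for pub, topics in self.offers.items()
            for sub, wanted in self.interests.items()
            for topic in topics & wanted
            if pub != sub
        }
```

Adding or removing a node changes only the server's tables; the other nodes simply receive updated routes.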

(3) Protocol Configuration

Edge system developers must also consider the protocol options for data distribution carefully. Most modern data distribution middleware supports multiple protocols, including reliable TCP, UDP for “fire and forget” transmission, and multicast, which is typically used for general publish-subscribe. Many middleware solutions also support custom protocols; RTI DDS, for example, supports reliable UDP. Developers should weigh the reliability each type of data requires and, in some cases, use different protocols for data types with different reliability requirements.
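
One way to make that decision explicit is a per-data-type policy table. The sketch below is hypothetical: the `Transport` names, the two-question rule, and the data types are illustrative, and real middleware expresses this through its own configuration or QoS settings.

```python
from enum import Enum


class Transport(Enum):
    TCP = "tcp"                    # reliable, ordered byte stream
    UDP = "udp"                    # fire and forget
    RELIABLE_UDP = "reliable-udp"  # UDP plus middleware-level acks and retransmission


def choose_transport(must_arrive: bool, link_stable: bool) -> Transport:
    """Hypothetical rule matching a data type's reliability need to a transport."""
    if not must_arrive:
        # e.g. periodic position updates: a lost sample is soon superseded.
        return Transport.UDP
    if link_stable:
        return Transport.TCP
    # Assumed rationale: on a link that drops often, retries handled by the
    # middleware over UDP avoid repeatedly re-establishing TCP connections.
    return Transport.RELIABLE_UDP


# Hypothetical per-data-type policy table.
POLICY = {
    "position-update": choose_transport(must_arrive=False, link_stable=False),
    "casualty-report": choose_transport(must_arrive=True, link_stable=True),
    "tasking-order": choose_transport(must_arrive=True, link_stable=False),
}
```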

· Multicast

Multicast is a common consideration when evaluating protocols, especially alongside a publish-subscribe architecture. Basic multicast may be viable for some data distribution scenarios, but system designers must weigh several issues. First, multicast is UDP-based, so all data sent is “fire and forget” unless a reliability mechanism is built on top of the underlying protocol. Second, commercial networks often support multicast poorly because of the risk of multicast flooding, and tactical networks support it poorly too, because it can conflict with vendors' proprietary protocol implementations. Finally, multicast addressing imposes inherent limits that can hinder large or complex topic schemes: a multicast address cannot directly identify a data type, so topics must be mapped onto addresses, and such mappings can be fragile if the topic scheme changes often. Multicast may therefore be an option in some cases, but it needs careful evaluation to make sure its limitations do not cause problems.
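
To make "a reliability mechanism built on top" concrete, the following simulation sketches stop-and-wait automatic repeat request (ARQ) over a lossy, fire-and-forget channel with a single receiver. It is an illustration, not a multicast protocol: reliable multicast schemes must also avoid a flood of acknowledgments from many receivers and commonly rely on negative acknowledgments instead. The loss model (independent random loss in each direction) is an assumption.

```python
import random


def send_reliably(packets: list[bytes], loss_rate: float, max_tries: int = 10,
                  seed: int = 0) -> tuple[list[bytes], int]:
    """Simulate stop-and-wait ARQ over a channel that silently drops packets.

    Each packet carries a sequence number; the sender retransmits until an
    acknowledgment gets back. Data and acks can each be lost. The receiver
    discards duplicates by sequence number. Returns the packets the receiver
    delivered, in order, and the total number of transmissions.
    """
    rng = random.Random(seed)
    delivered: list[bytes] = []
    expected_seq = 0
    transmissions = 0
    for seq, payload in enumerate(packets):
        for _ in range(max_tries):
            transmissions += 1
            if rng.random() < loss_rate:
                continue                  # data packet lost: retransmit
            if seq == expected_seq:       # first copy to arrive: deliver it
                delivered.append(payload)
                expected_seq += 1
            if rng.random() < loss_rate:
                continue                  # ack lost: sender will send a duplicate
            break                         # ack received: move on to the next packet
        else:
            raise TimeoutError(f"packet {seq} not acknowledged after {max_tries} tries")
    return delivered, transmissions
```

The receiver ends up with every packet exactly once; the cost of reliability shows up as extra transmissions, which is bandwidth a DIL link may not have.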

· Use of DTN Standards

Notably, Delay Tolerant Networking (DTN) is an existing RFC standard that provides extensive structure for addressing DIL network challenges. It has several implementations, which have been tested by, among others, a team at the Software Engineering Institute (SEI), and one implementation has been used by NASA for satellite communications. DTN's store-carry-forward concept is best suited to scheduled communication environments such as satellite communications, but the standard and its implementations also offer guidance for developing mitigations for unreliable intermittent and sporadic networks.
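
The store-carry-forward idea can be sketched as follows, assuming a hypothetical `DtnNode` class. This is not the Bundle Protocol or any DTN implementation's API; it only shows the core behavior, in which a node holds data until a contact with the next hop exists instead of dropping it for lack of an end-to-end path. A real node would persist bundles, honor bundle lifetimes, and route among multiple next hops.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class DtnNode:
    """Hypothetical store-carry-forward node (in-memory storage only)."""
    name: str
    stored: deque = field(default_factory=deque)

    def accept(self, bundle: str) -> None:
        # Store the bundle and carry it (the node may be moving) until a contact.
        self.stored.append(bundle)

    def contact(self, next_hop: "DtnNode") -> int:
        """Forward every stored bundle during a contact; return how many moved."""
        moved = 0
        while self.stored:
            next_hop.accept(self.stored.popleft())
            moved += 1
        return moved


team = DtnNode("field-team")
vehicle = DtnNode("vehicle")
gateway = DtnNode("satcom-gateway")
team.accept("sitrep-1")
team.accept("photo-7")
team.contact(vehicle)      # short-range contact with a passing vehicle
vehicle.contact(gateway)   # later, during a scheduled satellite pass
```

No end-to-end path between the field team and the gateway ever exists at one moment, yet both bundles arrive in order.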

(4) Data Configuration

Key decisions for dealing with low bandwidth in DIL environments involve carefully designing what data is transmitted, how and when it is transmitted, and how it is formatted. Standard techniques such as caching, prioritization, filtering, and encoding are among the key strategies to consider. Each of them can improve performance by reducing the total amount of data to be sent, and by ensuring that only the most important data is sent, they can also improve reliability.

· Caching, Prioritization, and Filtering

Caching is the first strategy to consider in an intermittent or disrupted environment. Having data ready to transmit whenever a connection becomes available lets applications ensure that data is not lost while the network is unavailable. A caching strategy has other aspects as well. Prioritizing data lets edge systems send the most important data first, getting the most value out of the available bandwidth. Cached data should also be filtered, using criteria that may include stale-data timeouts, detection of duplicate or unchanged data, and relevance to the current mission (which may change over time).
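
A minimal sketch combining these ideas, assuming a hypothetical `OutboundCache` class: messages are held while the link is down, drained in priority order when it comes up, dropped if they have gone stale, and skipped if they repeat the last value queued for the same key. Relevance filtering is mission-specific and left out. An injectable clock keeps the sketch testable.

```python
import heapq
import itertools
import time


class OutboundCache:
    """Hypothetical outbound cache for a DIL link: prioritize, dedupe, expire."""

    def __init__(self, max_age_s: float, clock=time.monotonic) -> None:
        self.max_age_s = max_age_s
        self.clock = clock                  # injectable time source
        self._heap: list = []
        self._seq = itertools.count()       # tie-breaker: FIFO within a priority
        self._last_value: dict = {}         # key -> last payload queued for it

    def put(self, key: str, payload, priority: int) -> bool:
        """Queue a message; lower priority number = more important."""
        if key in self._last_value and self._last_value[key] == payload:
            return False                    # unchanged data: don't queue it again
        self._last_value[key] = payload
        heapq.heappush(self._heap, (priority, next(self._seq), self.clock(), key, payload))
        return True

    def drain(self, budget: int):
        """When the link is up, yield up to `budget` fresh (key, payload) pairs."""
        sent = 0
        now = self.clock()
        while self._heap and sent < budget:
            _prio, _seq, created, key, payload = heapq.heappop(self._heap)
            if now - created > self.max_age_s:
                continue                    # stale: drop rather than spend bandwidth
            yield key, payload
            sent += 1
```

One limitation of this simple version: if a key's value changes while an older value is still queued, both are sent; a production cache might replace the queued entry instead.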

· Preprocessing

One way to reduce data size is to preprocess data at the edge, using algorithms designed to run on mobile devices to turn raw sensor data into composite items that summarize its important aspects. For example, a simple facial recognition algorithm running on a local video feed could send only matches for known individuals. Each match could include metadata such as the time, date, location, and a snapshot of the best match, which together can be several orders of magnitude smaller than the original video stream.
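
Running face recognition is beyond a short example, so the sketch below swaps in a simpler preprocessing technique with the same intent: a hypothetical `summarize` function reduces a window of raw numeric sensor samples to a few aggregates plus the samples that cross an alert threshold, and only that summary leaves the device.

```python
import json
import statistics


def summarize(readings: list[tuple[float, float]], alert_above: float) -> dict:
    """Reduce raw (timestamp, value) samples to a compact summary.

    Only aggregates and threshold-crossing samples are kept, standing in for
    richer edge preprocessing such as sending face matches instead of video.
    """
    values = [v for _, v in readings]
    return {
        "n": len(values),
        "min": min(values),
        "max": max(values),
        "mean": round(statistics.fmean(values), 2),
        "alerts": [[t, v] for t, v in readings if v > alert_above],
    }


# Hypothetical ten minutes of 1 Hz readings with one spike.
readings = [(float(t), 20.0 + t % 5) for t in range(600)]
readings[300] = (300.0, 95.0)
summary = summarize(readings, alert_above=50.0)
raw_size = len(json.dumps(readings))
summary_size = len(json.dumps(summary))
```

The summary keeps what a remote consumer most likely needs (the spike and the overall range) at a small fraction of the raw size.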

· Encoding

The choice of data encoding strongly affects how effectively data can be sent over bandwidth-limited networks, and encoding methods have changed considerably over the past few decades. Fixed-format binary (FFB), or bit/byte-level message encoding, is a key part of tactical systems in the defense domain. FFB can achieve near-optimal bandwidth efficiency, but it is brittle: changes to the technical standards that define the encoding change the messages themselves, which makes FFB hard to implement and complicates communication between heterogeneous systems.
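
A small illustration of fixed-format binary, using Python's `struct` module with a hypothetical position-report layout: both ends must agree in advance on the exact field order, sizes, and scaling, which is where FFB's compactness and its brittleness both come from.

```python
import json
import struct

# Hypothetical fixed-format position report agreed in advance by both ends:
# unit id (uint16), latitude and longitude in units of 1e-7 degree (int32 each),
# altitude in metres (int16). "!" = network byte order, no padding: 12 bytes.
POSITION_FORMAT = struct.Struct("!Hiih")


def encode_ffb(unit: int, lat: float, lon: float, alt_m: int) -> bytes:
    return POSITION_FORMAT.pack(unit, round(lat * 1e7), round(lon * 1e7), alt_m)


def decode_ffb(data: bytes) -> tuple[int, float, float, int]:
    unit, lat_e7, lon_e7, alt_m = POSITION_FORMAT.unpack(data)
    return unit, lat_e7 / 1e7, lon_e7 / 1e7, alt_m


def encode_json(unit: int, lat: float, lon: float, alt_m: int) -> bytes:
    # The same content as a self-describing text message, for size comparison.
    return json.dumps({"unit": unit, "lat": lat, "lon": lon, "alt": alt_m}).encode()
```

The packed report is 12 bytes, while the JSON form of the same fields takes several times as many. If the layout changes (say, altitude widened to 32 bits), every receiver must be updated at once, which illustrates the brittleness described above.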

Over the years, text-based formats such as XML and, more recently, JSON have been used to make different systems interoperable. Text-based messages carry a high bandwidth cost, however, which led to more modern variable-format binary (VFB) encodings such as Google Protocol Buffers and Efficient XML Interchange (EXI). These encodings retain much of the size advantage of fixed-format binary while allowing payloads to vary within a common specification. Although they are not yet as universal as text-based encodings such as XML and JSON, they are gaining support in both commercial and tactical applications.
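
To show how a variable-format binary encoding stays compact without a fixed layout, here is a sketch of the Protocol Buffers wire format for non-negative integer fields only: each field is written as a tag (the field number shifted left by three bits, combined with a wire type) followed by a base-128 varint, so small values take fewer bytes and absent fields take none. This is a hand-written illustration, not the `protobuf` library; the classic example from the Protocol Buffers documentation, field 1 set to 150, encodes to the bytes `08 96 01`.

```python
def encode_varint(n: int) -> bytes:
    """Base-128 varint: 7 bits per byte, low bits first, high bit = more bytes follow."""
    if n < 0:
        raise ValueError("this sketch handles non-negative integers only")
    out = bytearray()
    while True:
        byte = n & 0x7F
        n >>= 7
        if n:
            out.append(byte | 0x80)   # continuation bit set
        else:
            out.append(byte)
            return bytes(out)


def encode_fields(fields: dict[int, int]) -> bytes:
    """Encode {field_number: value} as (tag, varint) pairs using wire type 0 (VARINT).

    Because each field carries its own number, the payload can include any
    subset of the schema's fields, in contrast to a fixed-format layout.
    """
    out = bytearray()
    for number, value in sorted(fields.items()):
        out += encode_varint((number << 3) | 0)   # tag = field number + wire type
        out += encode_varint(value)
    return bytes(out)
```

Two small fields cost four bytes in total, and a field that is not set costs nothing, whereas a fixed layout always pays for every field.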

Future research areas for edge networks include:

· Electronic countermeasures and electronic counter-countermeasures technologies, as well as technologies to address current and future near-peer conflict environments;

· Protocols optimized for different network profiles, to support increasingly heterogeneous network environments in which devices have different platform capabilities and come from different agencies and organizations;

· Lightweight orchestration tools for data distribution that reduce its computational and bandwidth overhead in DIL network environments, leaving more bandwidth available for operations.
