Life goes on, and so does innovation. Many engineers have attempted to integrate MCUs with OpenAI’s ChatGPT to create chatbots, voice assistants, and natural language interfaces.

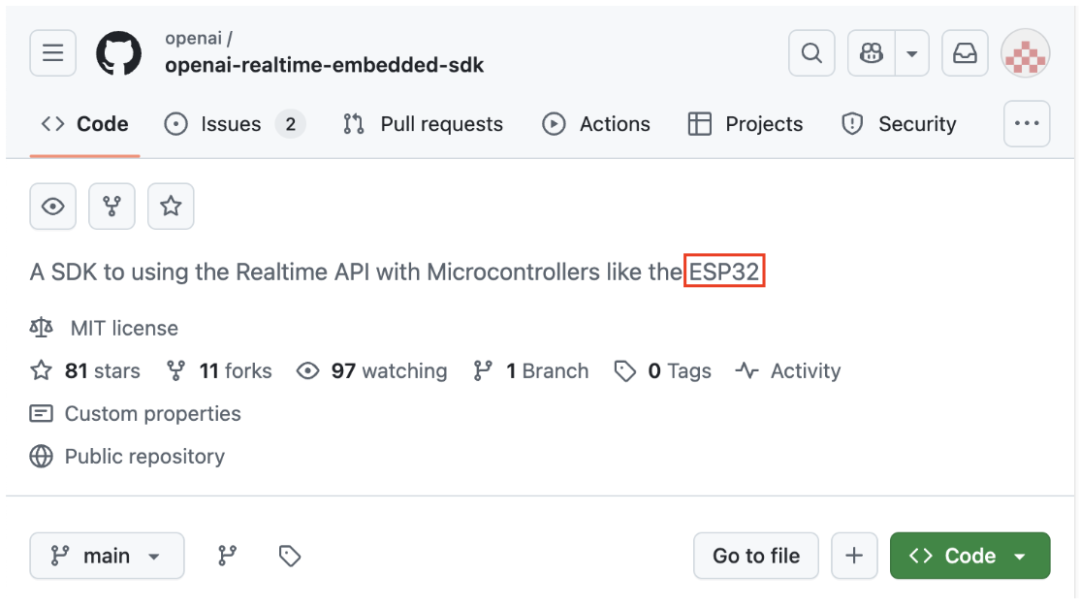

A few days ago, as part of its December series of product announcements, OpenAI also released a Realtime API SDK that runs on Linux and 32-bit MCUs, sparking heated discussion among engineers.

OpenAI Created an SDK for 32-bit MCUs

Recently, OpenAI published an SDK on its official GitHub account for using the OpenAI Realtime API on microcontrollers such as the ESP32. The project has been developed and tested on the ESP32-S3 and Linux, and developers can use it directly by following the instructions in the repository.

This SDK is primarily designed for embedded hardware and has so far been validated only on Espressif's ESP32-S3. It is built on the WebRTC interface that OpenAI recently added to the Realtime API, which enables very low-latency voice conversations.

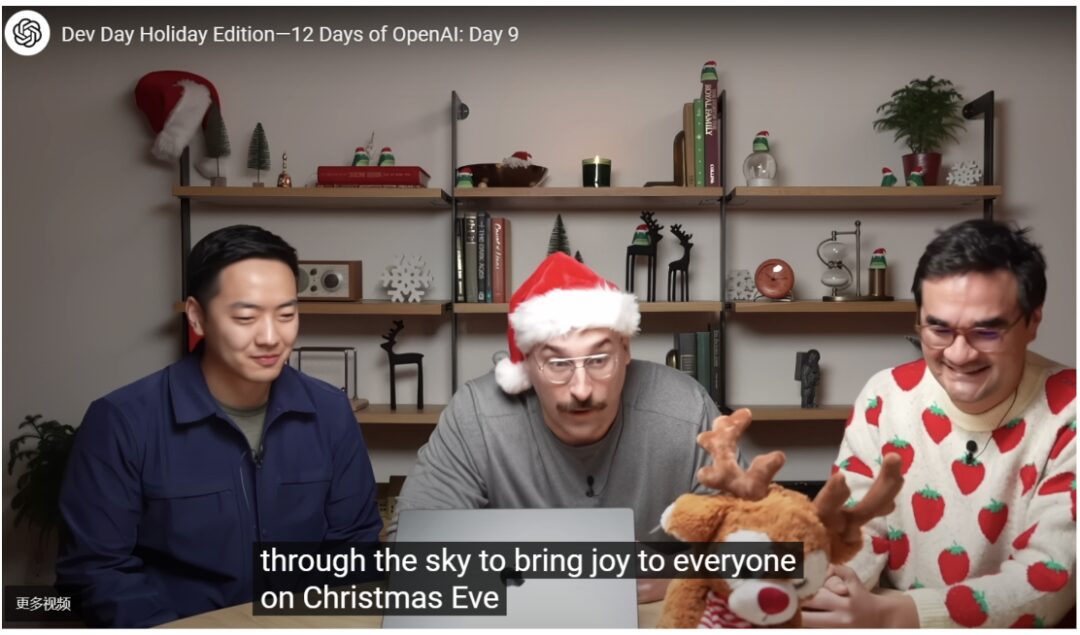

During the announcement, OpenAI showcased a Christmas-themed AI toy powered by an ESP32 MCU. In the demo, the engineer held several rounds of conversation with the toy; the exchange felt natural, with no noticeable lag, consistent with earlier web demos.

What’s Available on GitHub

According to the GitHub page (https://github.com/openai/openai-realtime-embedded-sdk), openai-realtime-embedded-sdk is an SDK tailored for microcontrollers that lets developers use the Realtime API on devices such as the ESP32.

The SDK has been developed and tested mainly on the ESP32-S3 and Linux; the Linux build lets developers try it without any physical hardware.

To run the SDK on hardware, you can buy either of the following boards. Other MCUs may also work, but the SDK was developed on these devices:

- Freenove ESP32-S3-WROOM;
- Sonatino – ESP32-S3 Audio Development Board.

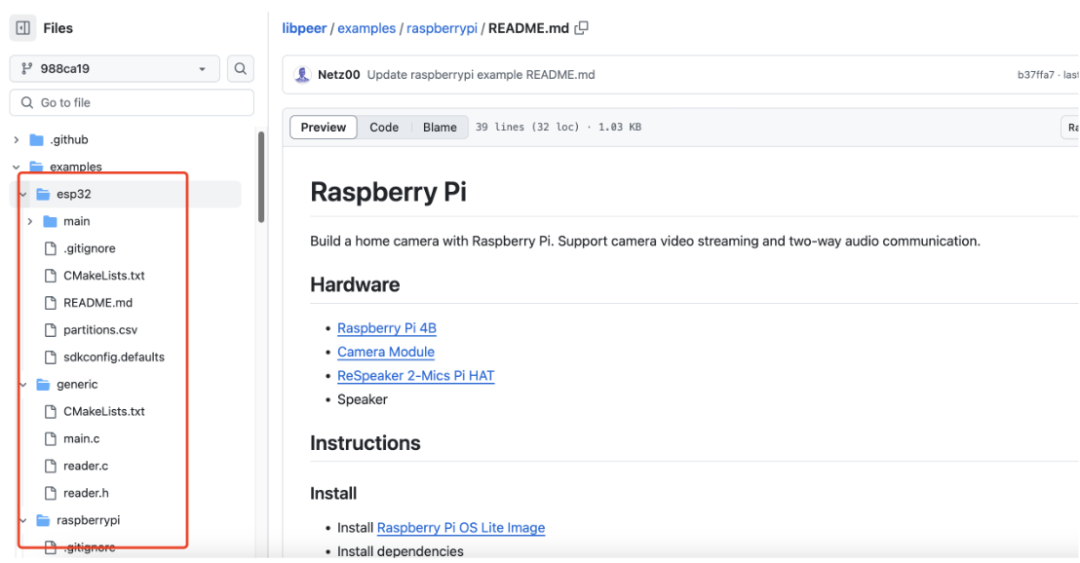

However, the examples folder also contains a generic example and a Raspberry Pi example. The Raspberry Pi example uses a Raspberry Pi 4B, a Camera Module, a ReSpeaker 2-Mics Pi HAT, and a speaker, which suggests that support for more embedded platforms may follow.

After setting the Wi-Fi SSID, password, and OpenAI API key, users can build and run the program. The key advantage of the SDK is that it gives microcontrollers direct access to a powerful API, expanding what they can do in real-time data processing and decision-making scenarios.

Target audience: embedded system developers, IoT device manufacturers, and researchers who need intelligent decision-making on microcontrollers. Because it is easy to integrate and use, the SDK is particularly suitable for those seeking advanced data processing capabilities on resource-constrained devices.

Examples of use cases:

- Smart Home: Implementing voice control features on the ESP32 using the SDK;
- Industrial Automation: Enabling microcontrollers to respond to sensor data in real time via the SDK;
- Research: Using the SDK for real-time inference with machine learning models.

According to engineers' analysis, the demo is essentially an engineering integration, and its biggest advantage is that the Realtime API's WebRTC interface greatly simplifies calling the API. Embedded development is done mainly in C/C++, which can be cumbersome, especially in real business scenarios where many cases must be handled by hand. With WebRTC, a few hundred lines of C are enough to build this demo.

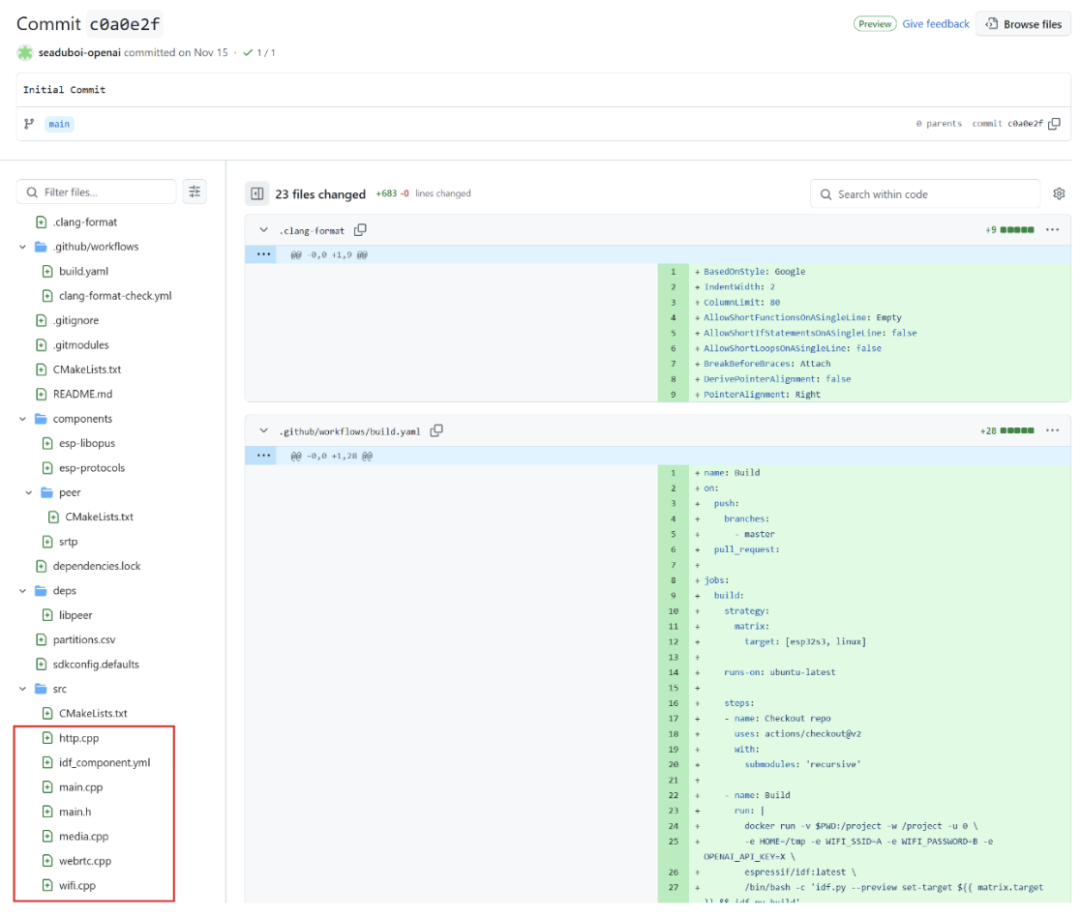

Specifically, the repository has only one commit, and the demo code consists of just six files. The project depends on several open-source libraries: libopus (audio encoding and decoding), esp-protocols (to control ESP-integrated hardware, connect to Wi-Fi, record audio, etc.), and libpeer (WebRTC communication).

The main program is fairly straightforward: it initializes the libraries, brings up Wi-Fi, starts audio recording and playback, and then opens a WebRTC connection to OpenAI's API. Each function is under 100 lines, and after removing the code that exists only for PC compatibility, the code actually compiled for the chip is only about 300 lines.

Why OpenAI Chose ESP32

Engineers noted that, from a product standpoint, the control chip in a voice-interactive AI toy has two basic requirements:

- Networking capability, whether via Wi-Fi or Bluetooth;
- Voice processing, supporting recording and playback.

These two are hard requirements; other functions matter less. In particular, the video processing capabilities of Arm-based chips, such as driving large displays, are unnecessary for AI toys.

Compared with traditional microcontrollers, the ESP32 is a relatively new player that has flourished in the smart home era, and it meets both needs well.

First, the ESP32 is inexpensive and highly integrated; a single chip costs only a few dollars.

Second, the ESP32 is designed for low-power scenarios, so a well-designed device can run for weeks or even months on a battery.

Third, the ESP32 integrates Wi-Fi, Bluetooth, and voice processing capabilities, eliminating external modules, which reduces PCB design complexity and cost while extending battery life.

There are many ways to build such a product with common microcontroller solutions, but the ESP32 is the simplest and least labor-intensive. Which hardware engineer can resist a design that needs only one chip?

More Embedded SDKs on the Way

At Volcano Engine's 2024 Winter FORCE Conference, several hardware manufacturers showcased product demos based on RTC technology. A ByteDance product manager also mentioned embedded SDKs at the conference; although the supported hardware models were not disclosed in detail, it is clear that such SDKs are on the way.

Apex.AI is also working on this. According to Apex.AI, Apex.Grace enhances ROS 2 and Apex.Ida enhances Eclipse iceoryx. Through its Apex.AI SDK for microprocessors, the company adds features, improved functionality, and safety certifications on top of these open-source projects, and the new Apex.AI SDK for microcontrollers will continue along the same path. Apex.AI has reportedly added the Xilinx UltraScale+ MPSoC and Infineon AURIX TC399 to the new platform as internal projects; based on its experience, adding a new platform takes only a few weeks.
