On May 19th, the 4th “China Smart Home Expo (CSHE2018)” and the 9th Shenzhen (International) Integrated Circuit Technology Innovation and Application Exhibition (CICE2018) successfully concluded at the Shenzhen Convention and Exhibition Center.

During the concurrent “Smart Home: IoT Embedded Technology Ecological Summit Forum,” Liu Zhe, Product Director of Zhongke Chuangda Software Co., Ltd., delivered a keynote speech titled “The Implementation of Embedded Intelligent Visual Algorithms in IoT Application Scenarios.” The content of the speech follows; it has not been reviewed by the speaker.

01

The Opportunity for Embedded Intelligent Visual AI Is Arriving

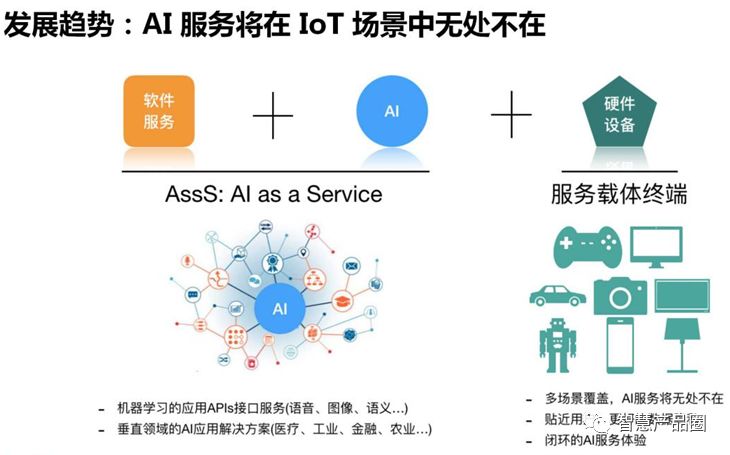

The explosive growth of the IoT requires intelligent algorithms to be embedded more deeply in terminal devices. The IoT trend itself is toward terminal devices that can act autonomously and offer intelligent functions, so that they respond quickly to the needs of their users while consuming less power and providing stronger guarantees of data privacy and security.

In other words, AI will become a service, and intelligent terminals will be the carriers of that service, covering specific scenarios comprehensively so as to get closer to users and offer a more convenient experience.

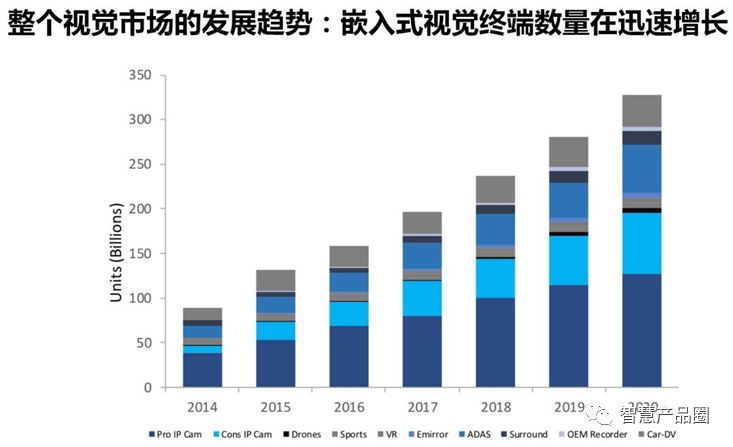

This is the development trend of the entire vision market. This year there will be nearly 250 billion camera terminals; if only 1% of them are given intelligent visual capabilities, that is a scale of 2.5 billion devices, which is a very large market. Moreover, embedded IoT has been growing rapidly. Some major international players have also started to work in this area, with products such as Amazon’s AWS DeepLens, Google’s AIY Vision, and the AI development boards Microsoft launched at its developer conference last week.

02

Development Methods for Embedded AI

Embedded AI has a very promising future, but applying it to real scenarios still poses many difficulties and challenges. What is the usual process for developing a good embedded AI algorithm?

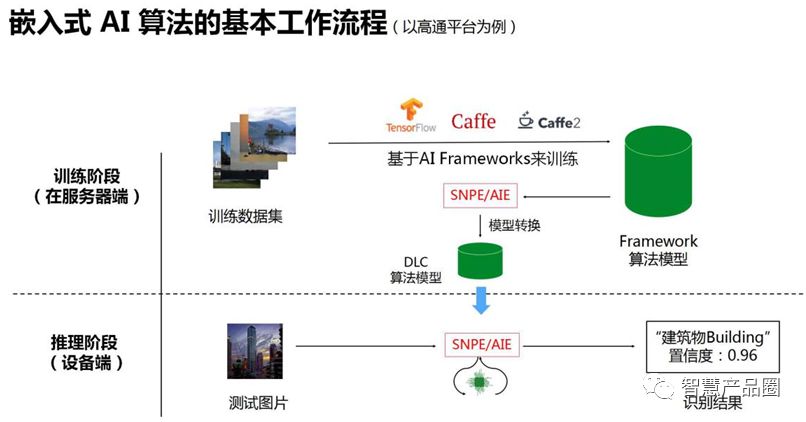

First, a model is trained on a dataset in the cloud or on a server using an open-source AI framework, producing a cloud-side algorithm model. Then a platform-specific tool from the chip vendor, such as Qualcomm’s SNPE model conversion tool, converts it into a small model that can run on the terminal, and the model’s size and parameters are trimmed as appropriate. This way, inference on the device can run quickly and in real time while meeting the requirements for low power consumption and recognition rate.
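The talk does not say how the model is trimmed. As one illustration only, the sketch below applies magnitude-based pruning, a common technique that zeroes the smallest weights of a layer. The function name `prune_by_magnitude`, the `keep_ratio` parameter, and the sample weights are hypothetical, and this is not a description of what the SNPE conversion tool does.

```python
def prune_by_magnitude(weights, keep_ratio):
    """Zero out the smallest-magnitude weights, keeping about `keep_ratio` of them."""
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    n_keep = max(1, round(len(weights) * keep_ratio))
    # Indices ordered by descending absolute value; the first n_keep survive.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    keep = set(order[:n_keep])
    return [w if i in keep else 0.0 for i, w in enumerate(weights)]


# Hypothetical weights of one small layer (illustrative values only).
layer = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.1, -0.9]
pruned = prune_by_magnitude(layer, keep_ratio=0.5)
nonzero = sum(1 for w in pruned if w != 0.0)
print(pruned, nonzero)
```

In a real workflow the pruned model would normally be fine-tuned and re-evaluated, since removing weights usually costs some accuracy.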

In practice, terminal deployments turn out to be highly fragmented: applications span industrial, smart city, smart home, and other settings, and the terminal hardware varies as well, including ARM platforms, DSPs, and NPU chips. Overall, the computing power of embedded AI chips is still quite limited. Another practical issue is that the embedded AI ecosystem is still very weak, so building an embedded AI product is relatively costly on both the chip side and the algorithm side.

Therefore, Zhongke Chuangda’s strategy is to address this from both the hardware and software dimensions, in combination with each customer’s specific application scenario.

First, the key on the hardware side is to accelerate AI computation. Many chips now include AI acceleration units, and various NPUs, TPUs, and DPUs are emerging; making use of them requires close cooperation with chip manufacturers.

Second, on the software side, the computational load of the algorithm model must be reduced and the architecture designed to fit the embedded platform as closely as possible. A general, relatively large teacher model is first built using supervised or reinforcement learning. For a given embedded IoT scenario, it is then trimmed, and a part of it is extracted as a student model.

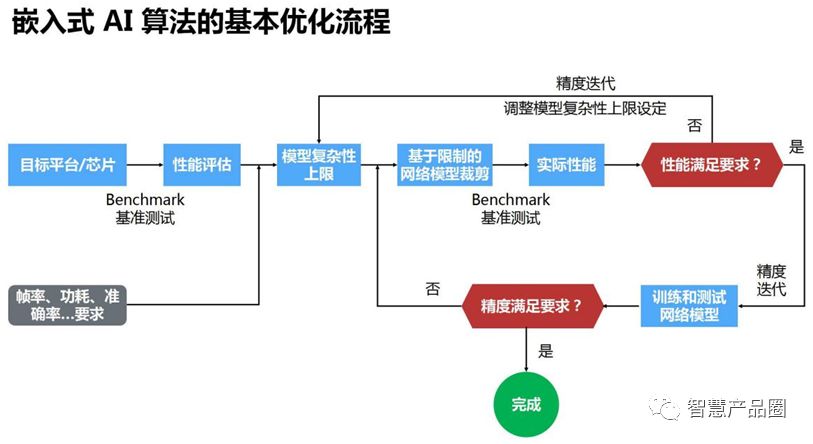
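The talk does not describe how the student model is trained once it has been extracted from the teacher. One widely used option, shown here purely as an assumption, is knowledge distillation: the student is trained to match the teacher’s temperature-softened output distribution. The function names, the temperature value, and the sample logits below are all hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened outputs, scaled by T^2 as is common."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)
    return kl * temperature ** 2


# Illustrative logits for a three-class problem.
teacher = [4.0, 1.0, 0.5]
student_good = [3.8, 1.1, 0.4]   # agrees with the teacher
student_bad = [0.5, 1.0, 4.0]    # prefers a different class
loss_good = distillation_loss(student_good, teacher)
loss_bad = distillation_loss(student_bad, teacher)
print(loss_good, loss_bad)
```

A lower loss means the student’s predictions are closer to the teacher’s; in practice this term is usually combined with the ordinary loss on labeled data.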

Third, the model’s performance indicators are adjusted to the requirements of the specific terminal.

Specifically, in this third step, debugging tests are run on the chip platform the customer wishes to use, followed by a performance evaluation on that platform. Customers may also set requirements such as frame rate and power consumption. These requirements determine a complexity limit for the algorithm model, and the model is trimmed to fit within it. When the requirements are not met, the model is iterated on and its accuracy adjusted, which is what is commonly called the tuning process.
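The selection part of this tuning process can be sketched as follows: given measurements for several trimmed variants of a model, keep the most accurate one that satisfies the customer’s frame rate and power requirements. This is a minimal sketch, not Zhongke Chuangda’s tooling; the `Candidate` type, the `pick_model` function, and every number below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    fps: float        # frames per second measured on the target chip
    power_w: float    # average power draw in watts
    accuracy: float   # validation accuracy in [0, 1]


def pick_model(candidates, min_fps, max_power_w):
    """Return the most accurate candidate within both budgets, or None."""
    feasible = [
        c for c in candidates
        if c.fps >= min_fps and c.power_w <= max_power_w
    ]
    return max(feasible, key=lambda c: c.accuracy, default=None)


# Illustrative measurements for three trimmed variants of one model.
candidates = [
    Candidate("large", fps=12.0, power_w=3.5, accuracy=0.96),
    Candidate("medium", fps=27.0, power_w=2.1, accuracy=0.93),
    Candidate("small", fps=45.0, power_w=1.2, accuracy=0.88),
]
chosen = pick_model(candidates, min_fps=25.0, max_power_w=2.5)
print(chosen.name if chosen else "no candidate fits; trim further and re-measure")
```

A `None` result corresponds to the case where the requirements are not met and another round of trimming and accuracy adjustment is needed.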

03

Several Embedded Visual AI Cases from Zhongke Chuangda

Zhongke Chuangda provides an extensible embedded AI platform framework and also offers workstations for embedded edge AI solutions, mainly to address how to adapt quickly across different hardware platforms and computing architectures, along with a complete solution for intelligent vision.

Here are several embedded visual AI cases developed by Zhongke Chuangda for customers.

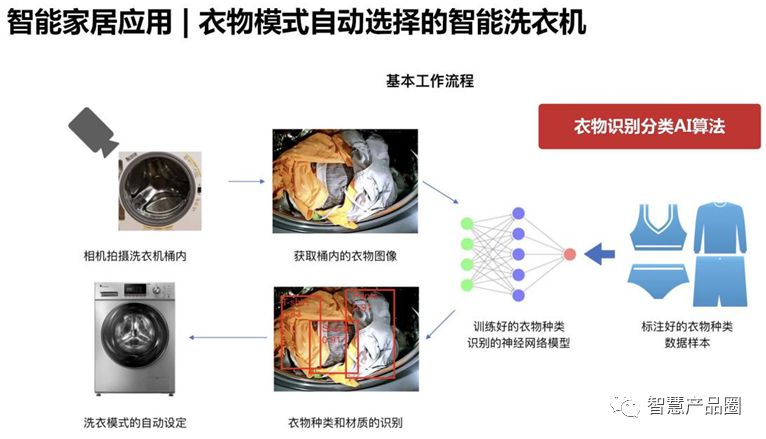

Case 1: Intelligent Washing Machine That Can Identify Clothes

Previously, doing laundry generally meant stuffing all the clothes into the washing machine and then manually selecting the washing mode, such as water volume and time. This washing machine manufacturer wanted a built-in camera to identify the material of the clothes placed in the machine, so that the machine could then select the washing mode autonomously.

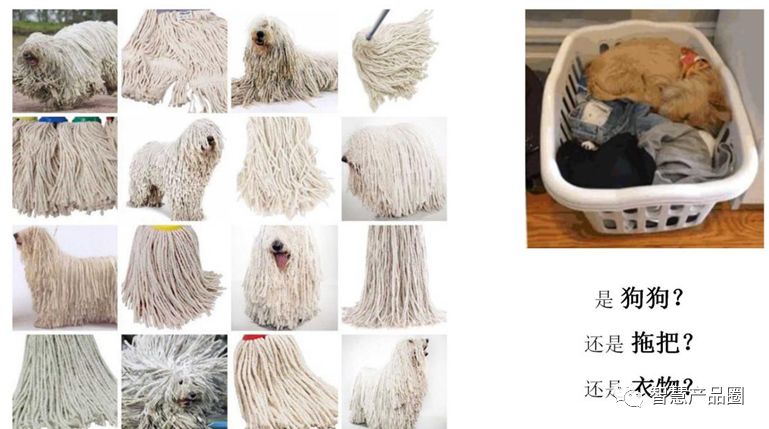

Zhongke Chuangda developed a clothing classification and recognition algorithm for this washing machine manufacturer. Several practical issues came up along the way, such as clothes being obscured; socks in particular are easily hidden by larger garments. In addition, training showed that some images in the dataset were inherently difficult to tell apart, as shown in the figure below, where it is hard to distinguish a mop, a puppy, and a piece of clothing. Testing the algorithm’s scalability was therefore necessary.

Case 2: Intelligent Microwave That Can Identify Food

This is a food recognition algorithm for microwaves, and it is quite similar to the washing machine case. Traditional microwaves also require the working mode to be selected manually. This microwave manufacturer wanted the appliance to identify the type of food and then select the working mode autonomously. Similar issues were encountered here, particularly in classifying the training data: as shown in the figure below, images of puppies and muffins can look very similar.

Case 3: Intelligent Detection of Cracks in Utility Poles

This is a smart city case, providing a crack detection solution for utility poles for a Japanese company that maintains utility poles. As Japan is a country prone to earthquakes and has a marine climate, the number of aging and cracked utility poles is quite high.

Previously, workers would carry a DSLR camera to photograph each utility pole and send the photos to the company’s data center, where professional evaluators assessed the shape and risk level of the cracks to decide whether repairs were necessary. Zhongke Chuangda provided an embedded camera terminal that, right after photographing a utility pole, identifies the cracks, extracts information such as their length, width, and depth, and determines the risk level of the pole. Only a small amount of data is sent back to the company’s data center: where several MB or even tens of MB were required before, only a few KB now need to be transmitted.

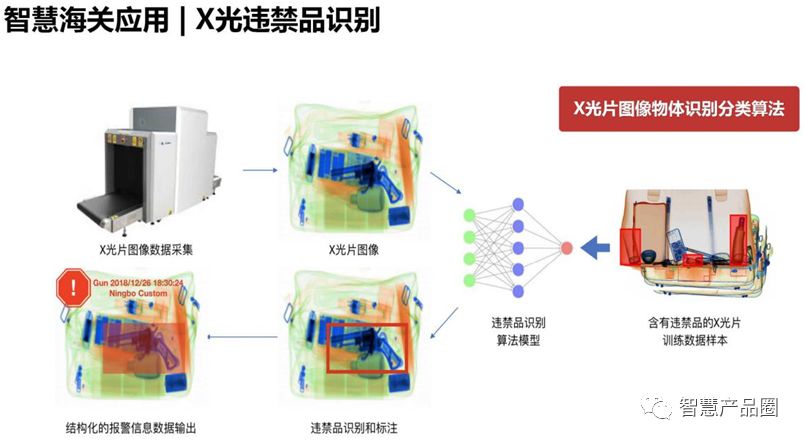
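To see why on-device analysis shrinks the upload from megabytes to kilobytes, consider sending only the extracted measurements instead of the photo. The sketch below packs one crack record into a fixed binary layout; the field layout, the function names, and the 5 MB photo size are assumptions for illustration, not the format used in the actual product.

```python
import struct

# Hypothetical record: pole id (uint32), crack length, width, and depth in
# millimetres (float32 each), and a risk level (uint8). Little-endian, unpadded.
RECORD = struct.Struct("<IfffB")


def encode(pole_id, length_mm, width_mm, depth_mm, risk):
    """Pack one crack measurement into a compact binary payload."""
    return RECORD.pack(pole_id, length_mm, width_mm, depth_mm, risk)


def decode(payload):
    """Unpack a payload produced by encode()."""
    return RECORD.unpack(payload)


payload = encode(1024, 120.0, 1.5, 4.0, 2)
photo_bytes = 5 * 1024 * 1024  # assumed size of one DSLR photo
print(len(payload), "bytes per record versus", photo_bytes, "bytes per photo")
```

Even with many records per pole plus metadata, a payload of this kind stays in the kilobyte range, which is consistent with the reduction described above.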

Case 4: Detection of Prohibited Items at Smart Customs

Currently, customs inspection relies on packages passing through X-ray machines while staff in the monitoring room watch the screens; they must check two packages within six seconds for prohibited items such as knives, guns, or gun parts. The solution provided by Zhongke Chuangda can quickly identify and mark these prohibited items with an accuracy of 95%, reducing the customs inspection workload by more than 90%.

✄———————————–

Smart Product Circle

China Smart Home Industry Professional Media Platform