
In today’s electronic information field, cross-industry integration is accelerating, and the links in the industrial chain are more tightly connected than ever. When examining a field or a project, one must therefore consider the entire industrial chain, including cloud management, hardware, software, algorithms, and data. Companies in any segment can integrate forward or backward at any time, so competitive relationships can change at any moment.

With the advent of a new hardware era, industry research faces higher demands and must broaden its dimensions of thinking. It is necessary to clarify the intricate relationships within the industry and its future development trends, which takes several times the work it once did. Researching the benchmark enterprises in each segment of the industrial chain is essential: only by understanding the strategies and trends of these large companies can one discover potential entrepreneurial or investment opportunities. I have therefore recently set out to study large enterprises in areas such as audio, vision, and IoT platforms. This article focuses on ADAS chip manufacturers, their main product lines, and their current status.

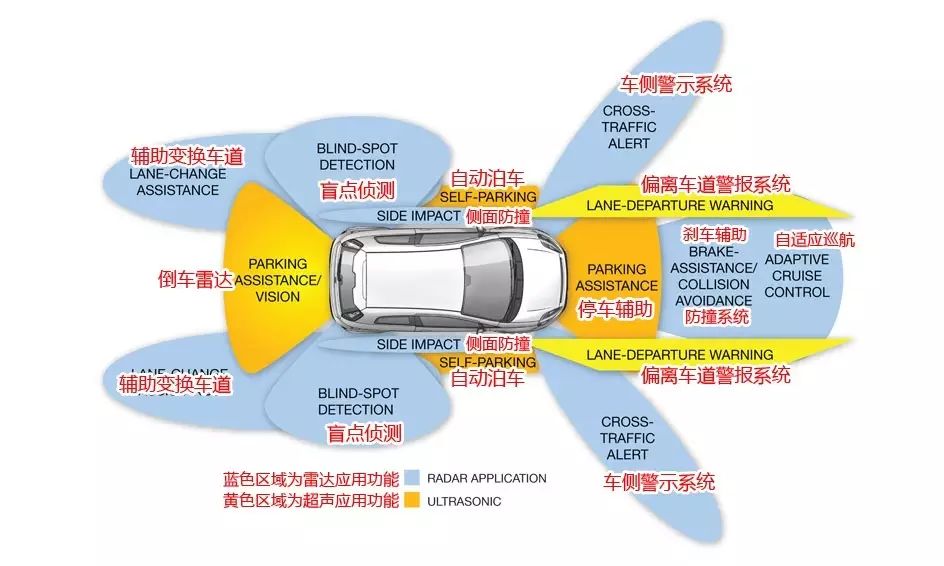

ADAS (Advanced Driver Assistance Systems) uses a variety of vehicle-mounted sensors to collect environmental data inside and outside the vehicle in real time, then processes that data to recognize, detect, and track static and dynamic objects so that drivers become aware of potential dangers as early as possible. Typical ADAS functions include navigation with real-time traffic information (TMC), intelligent speed adaptation (ISA, also known as the “electronic police” system), adaptive cruise control (ACC), lane departure warning (LDWS), lane keeping, collision avoidance or pre-collision systems, night vision, adaptive lighting control, pedestrian protection, automatic parking, traffic sign recognition, blind spot detection, driver fatigue detection, hill descent control, and electric vehicle warning systems, among others.

Most innovation in the automotive sector today comes from automotive electronics. Automotive electronic systems are gradually moving from a decentralized architecture, in which many separate ECUs each control a function, to a centralized architecture or even a single super-processing system, and ADAS shows the same trend. The benefits include fewer ECUs, lower power consumption, better processor and memory utilization, easier software development, and improved safety, and the shift gives automotive semiconductor manufacturers an increasingly important role in the automotive industry as a whole.

At the same time, ADAS processor chips currently come in two product forms, much like smart home products: blockbuster single-function products and multifunctional combinations. Mobileye’s vision-processing ADAS chips exemplify the former, while multi-sensor fusion represents the latter and is turning ADAS chips into platforms. Both forms have a market. Single-function products, provided they offer good cost-performance, will bring ADAS to mid-range and low-end vehicles and even to the aftermarket. Multi-sensor fusion, on the other hand, will raise autonomous driving toward Level 4 or even Level 5. Companies such as Google and Baidu are currently working on multi-sensor fusion, but so far there is no dedicated ASIC for Level 4/5 ADAS.

From a chip design perspective, the main challenges for ADAS processor chips currently lie in the following areas:

1. Automotive-grade qualification, ideally certification to ISO 26262 at ASIL B or even ASIL D.

2. Heavy computational load and high bandwidth. Chips that perform multi-sensor fusion in particular need higher clock frequencies and heterogeneous designs to process data quickly, and they also face high throughput requirements for data transfer.

3. As artificial intelligence is applied to ADAS, chip designs will add hardware for deep learning. How to balance hardware and software, and how to fit AI computing models into the existing hardware/software architecture and overall system design, is still at an early stage of exploration.
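The ASIL targets in item 1 come from a hazard analysis. Under ISO 26262-3, each hazardous event is rated on three classes: severity (S0 to S3), exposure (E0 to E4), and controllability (C0 to C3), and the standard’s lookup table maps them to QM or ASIL A to D. A widely cited shortcut reproduces that table by summing the three class numbers. The minimal Python sketch below assumes that shortcut; the function name `asil` is hypothetical, and it is an illustration, not a substitute for a real safety analysis.

```python
def asil(severity: int, exposure: int, controllability: int) -> str:
    """Return the ASIL for ISO 26262 classes S0-S3, E0-E4, C0-C3.

    Uses the widely cited sum shortcut, which matches the lookup table in
    ISO 26262-3; this is an illustrative sketch, not a safety tool.
    """
    if not (0 <= severity <= 3 and 0 <= exposure <= 4 and 0 <= controllability <= 3):
        raise ValueError("class index out of range")
    # A class of 0 on any axis means no ASIL is required (QM: quality management).
    if 0 in (severity, exposure, controllability):
        return "QM"
    total = severity + exposure + controllability
    # Sum 10 -> D, 9 -> C, 8 -> B, 7 -> A; anything lower is QM.
    return {10: "ASIL D", 9: "ASIL C", 8: "ASIL B", 7: "ASIL A"}.get(total, "QM")


# Worst case: life-threatening (S3), high exposure (E4), hard to control (C3).
print(asil(3, 4, 3))
# Light/moderate injuries (S1), high exposure (E4), normally controllable (C2).
print(asil(1, 4, 2))
```

The first call yields ASIL D and the second ASIL A, which is why a chip intended for the most safety-critical functions is pushed toward ASIL D.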

The following mainly introduces the products of major ADAS processor chip manufacturers, hoping to glimpse the current state of the ADAS processor chip field and future development trends from their products.

Qualcomm/NXP

Because Qualcomm was in the process of acquiring NXP at the time of writing, the two are introduced together here. Qualcomm has been entering the ADAS market gradually through its mobile processor chips, adapted to automotive grade. It started with surround view systems and recently partnered with Zongmu to launch the first prototype of a deep-learning ADAS product based on the Snapdragon 820A platform. The product runs on the 820A’s Snapdragon Neural Processing Engine (SNPE), recognizes objects such as vehicles, pedestrians, and bicycles, and performs real-time, pixel-level semantic segmentation of drivable areas. However, it is still some distance from commercialization. Overall, Qualcomm’s Snapdragon strategy should still center on in-vehicle infotainment while gradually expanding into more specialized ADAS.

Meanwhile, NXP, together with Freescale, which it acquired earlier, has a complete product line in automotive electronics and ADAS chips.

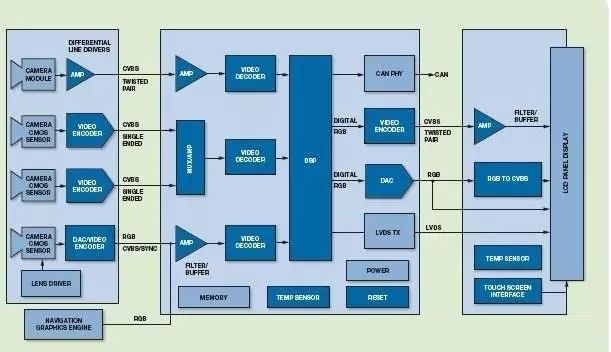

NXP has released the BlueBox platform, which provides OEMs with design, manufacturing, and sales solutions for SAE Level 4 autonomous vehicles. The ADAS system block diagram from NXP shows data from multiple video and 77 GHz radar sources being processed and then passed to the cloud and to vehicle systems. NXP can provide a complete set of reference solutions and its product line is comprehensive, although rather than integrating functions into fewer chips, it currently offers relatively discrete chips and solutions. Here we focus on the central processors S32V234 and MPC5775K: the MPC5775K processes radar data, while the S32V234 analyzes the fused data from multiple sensors and sends the results to the vehicle body systems over the CAN bus.

The S32V234, launched by NXP in 2015, is an ADAS processor in the S32V series. It supports heterogeneous computing with a CPU complex (four Armv8-A Cortex-A53 cores plus a Cortex-M4), a GPU (GC3000), and image recognition processing (CogniVue APEX2 processors), all within a low-power 5 W design. The APEX2 processors can handle four automotive cameras (front, rear, left, and right) simultaneously, extracting and classifying image features, while the GPU performs real-time 3D modeling, for a computing capacity of 50 GFLOPS. This hardware architecture can therefore deliver 360-degree surround view and automatic parking. The chip also reserves interfaces for millimeter-wave radar, lidar, and ultrasonic sensors to support multi-sensor fusion, and it meets ISO 26262 ASIL B.

The Qorivva MPC567xK series is a family of 32-bit MCUs based on Power Architecture®, and the MPC577xK is a dedicated radar-processing chip that increases on-chip memory to improve speed and performance. These chips support applications such as adaptive cruise control, intelligent headlight control, lane departure warning, and blind spot detection. At the system level, the radar combines a 77 GHz radar transceiver chipset, the Qorivva MPC567xK MCU, an FPGA, ADC, DAC, and SRAM, supporting long-, medium-, and short-range applications. The signal processing toolbox deserves attention; it includes FFT, DMA, COPY, and Scheduler units. A 77 GHz FMCW radar relies on the Fast Fourier Transform (FFT) for digital signal processing, typically with 512 to 2048 sampling points, and the chip architecture diagram shows a dedicated FFT circuit for this purpose.
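To show what that FFT step does in an FMCW radar, here is a minimal Python sketch of range processing for one chirp. Mixing the received echo with the transmitted chirp produces a beat tone whose frequency is proportional to target range, f_b = 2·R·S/c, where S is the chirp slope; an FFT turns that tone into a peak whose bin index gives the range. All chirp parameters (1 GHz sweep over 50 µs, 10.24 MHz sampling, 512 points, one noise-free target at 30 m, complex I/Q samples, c rounded to 3×10⁸ m/s) are hypothetical, and the textbook radix-2 FFT stands in for the dedicated hardware FFT; it does not model NXP’s circuit.

```python
import cmath
import math

C = 3e8  # speed of light in m/s (rounded; hypothetical simplification)


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]  # twiddle factor
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def beat_signal(range_m, slope_hz_per_s, fs_hz, n):
    """Ideal complex (I/Q) beat tone for a single point target, no noise."""
    f_beat = 2 * range_m * slope_hz_per_s / C
    return [cmath.exp(2j * math.pi * f_beat * i / fs_hz) for i in range(n)]


def bin_to_range(k, slope_hz_per_s, fs_hz, n):
    """Convert an FFT bin index to range: R = c * f_beat / (2 * slope)."""
    return k * (fs_hz / n) * C / (2 * slope_hz_per_s)


# Hypothetical chirp: 1 GHz sweep in 50 us, sampled at 10.24 MHz -> 512 samples.
BANDWIDTH = 1e9
CHIRP_TIME = 50e-6
FS = 10.24e6
N = 512
SLOPE = BANDWIDTH / CHIRP_TIME  # Hz per second

samples = beat_signal(30.0, SLOPE, FS, N)
spectrum = fft(samples)
# Search the positive-frequency half for the strongest bin.
peak = max(range(N // 2), key=lambda k: abs(spectrum[k]))
# Each bin spans c / (2 * BANDWIDTH) = 0.15 m with these parameters.
print(peak, round(bin_to_range(peak, SLOPE, FS, N), 3))
```

With these assumptions the peak lands in bin 200, which maps back to 30 m. Doubling the FFT length at the same sample rate lengthens the observation window and refines the bins, which is the accuracy-versus-computation trade-off behind the 512 to 2048 point figures.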

Besides the S32V series, Freescale’s well-known i.MX series can also serve as a central processor. The i.MX series, especially the i.MX 6, is widely used in automotive environments, particularly in in-vehicle infotainment systems, and many automakers use it.

Intel/Mobileye/Altera

Through a series of acquisitions, Intel has completed its ADAS processor lineup: Mobileye for ADAS vision processing, Altera for FPGA processing, and its own Xeon and other processors, together forming a complete centralized hardware solution for autonomous driving.

Notably, Mobileye’s EyeQ series has been adopted by many automakers, including Audi, BMW, Fiat, Ford, GM, Honda, Nissan, Peugeot, Citroën, Renault, Volvo, and Tesla. The latest EyeQ4 delivers about 2.5 trillion operations per second while consuming as little as 3 W. Architecturally, the chip includes a quad-core MIPS CPU cluster running at 1 GHz, with multithreading for better data control and management. Multiple dedicated vector microcode processors (VMPs) handle ADAS image processing tasks such as scaling and preprocessing, warping, tracking, lane marking detection, road geometry detection, filtering, and histogramming. A MIPS Warrior M-class CPU in the peripheral transport manager handles general-purpose data traffic on and off the chip.

Current sensor fusion mainly combines radar and cameras, and general-purpose ASICs can meet its bandwidth requirements. Fusing lidar, however, is best done on an FPGA, which makes the FPGA the most suitable sensor hub. Moreover, because sensor fusion is still not widely deployed and suitable ASICs are hard to find, FPGAs are currently the mainstream choice. Some high-precision radars use a single-precision floating-point 4096-point FFT: more sampling points reduce error but significantly increase computation. Such an FFT core accepts and outputs four complex samples per clock cycle, and each core runs at more than 80 GFLOPS, a load that is generally better implemented on an FPGA. Typical vehicle radars use 512 to 2048 sampling points, but military applications can reach 8192, which also calls for FPGA support. Below is an actual image of Audi’s zFAS, which uses Altera’s Cyclone V SoC FPGA for sensor fusion and to process millimeter-wave radar and lidar data.
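The cost of larger FFTs can be made concrete with the conventional flop estimate for an N-point complex FFT, 5·N·log2(N), which is the same convention FFT benchmarks use to report GFLOPS. The Python sketch below compares FFT sizes and estimates the sustained rate of a streaming core that takes four complex samples per clock. The 400 MHz clock is a hypothetical figure chosen for illustration, not a specification of any particular FPGA FFT core.

```python
import math


def fft_flops(n):
    """Conventional flop estimate for an n-point complex FFT: 5 * n * log2(n)."""
    return 5 * n * math.log2(n)


def streaming_gflops(n, samples_per_clock, clock_hz):
    """Sustained GFLOPS for a streaming FFT core that accepts samples_per_clock
    complex samples every cycle (each sample costs 5 * log2(n) flops)."""
    return samples_per_clock * clock_hz * 5 * math.log2(n) / 1e9


for n in (512, 2048, 4096, 8192):
    print(f"{n:5d}-point FFT: {fft_flops(n):>9.0f} flops, "
          f"{fft_flops(n) / fft_flops(512):5.1f}x the 512-point cost")

# Hypothetical 400 MHz clock, four complex samples per clock, 4096 points.
print(f"{streaming_gflops(4096, 4, 400e6):.1f} GFLOPS")
```

Under this model a 4096-point transform costs about 10.7 times a 512-point one, and the hypothetical 400 MHz core works out to 96 GFLOPS, the same order as the 80 GFLOPS quoted above; the estimate grows linearly with clock rate and samples per clock.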

Renesas

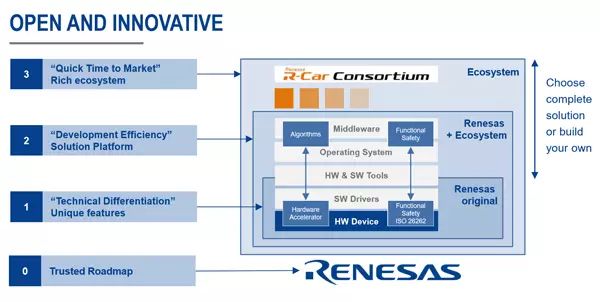

Renesas offers a relatively complete product line for ADAS processors, along with ADAS Kit development systems. Its best-known chip family is R-Car. The high-end products integrate Arm Cortex-A57/A53 cores, Arm Cortex-R series cores, a video codec, a 2D/3D GPU, and an ISP; they support multiple vision sensor inputs, are compatible with OpenGL and OpenCV, and meet the ASIL B automotive safety level. R-Car was first used in in-vehicle infotainment systems and was later adapted to surround view systems, instrument clusters, and ADAS. This development path is worth emulating for domestic semiconductor manufacturers looking to enter the automotive sector.

In addition to R-Car, Renesas, like NXP, offers dedicated processing chips for radar sensors, such as the RH850/V1R-M series. It is built on a 40 nm embedded flash (eFlash) process, and its optimized DSP performs FFT processing quickly.

Recently, reports indicate that Renesas has launched Renesas Autonomy, a newly designed ADAS and autonomous driving platform. Specific details are still unclear, but according to Amrit Vivekanand, Vice President of Automotive Business for Renesas Electronics America, this autonomous driving platform differs from competitors in that “it is an open platform, hoping to make it easier for users to port their algorithms, function libraries, and real-time operating systems (RTOS) to the platform.”

The first product released on the Renesas Autonomy platform is the R-Car V3M, a system-on-chip (SoC) for image recognition. Renesas describes this high-performance vision processing chip as “an optimized processing unit primarily used for smart camera sensors, but also applicable for surround vision systems and even lidar data processing.” Mike Demler, a senior analyst at the semiconductor industry analysis firm Linley Group, sees the open platform and product release as Renesas Electronics’ competitive move against Mobileye: “They hope to attract automotive manufacturers that have not partnered with Mobileye, especially Japanese manufacturers, as well as some Tier 1 manufacturers producing ADAS products.” In contrast to Mobileye’s “black box” platform, Renesas keeps emphasizing the “openness” of its solutions, a point that every processor maker competing with Mobileye stresses. Renesas states that all algorithms for its newly released R-Car V3M processing module will be open to its users.

Infineon

As a global market leader in automotive electronics, power semiconductors, and smart card chips, Infineon has long provided semiconductor and system solutions for automotive and other industrial applications. It has leading technology in 24/77/79 GHz radar, lidar, and other sensor devices and processing chips, and it also offers its own solutions for body control, airbag systems, electric power steering (EPS), tire pressure monitoring (TPMS), and other areas.

Texas Instruments (TI)

TI actually has two ADAS processor product lines: Jacinto and TDA. The Jacinto series evolved from the earlier OMAP processors; after TI exited the mobile processor market, it refocused its digital processors on automotive applications, primarily in-vehicle infotainment. With Jacinto 6, however, infotainment and ADAS functions are integrated. This chip includes dual Arm Cortex-A15 cores, two Arm Cortex-M4 cores, two C66x floating-point DSPs, multiple 3D/2D graphics processors (Imagination), and two EVE (Embedded Vision Engine) accelerators. The Jacinto 6 SoC is exceptionally powerful: whether processing entertainment media or assisting driving through in-vehicle cameras, it can use both internal and external cameras to provide functions such as object and pedestrian detection, augmented reality navigation, and driver identification.

The TDA series has always focused on ADAS. The TDA3x series supports a range of ADAS algorithms, including lane keep assist, adaptive cruise control, traffic sign recognition, pedestrian and object detection, forward collision warning, and rear collision warning. These algorithms are essential for making effective use of front cameras, full-vehicle surround view, sensor fusion, radar, and smart rear cameras across many ADAS applications.

NVIDIA

With the rise of artificial intelligence and autonomous driving technology, NVIDIA’s GPUs, with their strong parallel computing capabilities, are particularly suitable for deep learning. It is generally believed that compared to Mobileye, which focuses only on visual processing, NVIDIA’s solution emphasizes the fusion of different sensors. Reports suggest that Tesla has abandoned Mobileye in favor of NVIDIA.

NVIDIA’s Jensen Huang calls the Drive PX2 “a supercomputer designed for cars,” one that will become standard equipment in vehicles, able to perceive the car’s location, identify surrounding objects, and calculate the safest path in real time. “The Tegra X1 processor and 10GB memory can simultaneously process images captured at 60 frames per second from twelve 2-megapixel cameras, actively identifying various vehicles on the road through environmental visual computing technology and powerful deep neural networks, and even detecting whether the vehicle ahead is opening its door.”

Drive PX2 also incorporates chips from other partners. Avago’s PEX8724 (a 24-lane, 6-port PCIe Gen 3 switch) interconnects the two Parker SoCs. An FPGA from Altera, now part of Intel, runs real-time operating system tasks; the model is the Cyclone V 5CSXC6, one of Altera’s main products, with 110K logic elements and 166,036 registers. An Infineon AURIX TC297 MCU handles safety control and is said to allow the PX2 to reach ASIL C. Finally, Broadcom’s BCM89811 low-power physical layer transceiver (PHY) uses BroadR-Reach in-vehicle Ethernet technology to reach up to 100 Mbps over a single unshielded twisted pair. In effect, NVIDIA has launched a board-level ADAS system.
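The camera figures quoted for NVIDIA’s platform make the bandwidth challenge from the chip design list concrete. The Python sketch below computes the raw, uncompressed data rate of twelve 2-megapixel cameras at 60 frames per second and, for scale only, compares one camera with a 100 Mbps link such as BroadR-Reach. The pixel count (exactly 2,000,000, modelled as 2000 × 1000) and the 16 bits per pixel (for example YUV 4:2:2) are assumptions; this is not a description of how Drive PX2 actually connects its cameras.

```python
def raw_camera_bps(width, height, fps, bits_per_pixel):
    """Uncompressed video bit rate for one camera, in bits per second."""
    return width * height * fps * bits_per_pixel


# Hypothetical figures: "2-megapixel" taken as exactly 2,000,000 pixels
# (modelled as 2000 x 1000), 60 fps, 16 bits per pixel (e.g. YUV 4:2:2).
WIDTH, HEIGHT, FPS, BPP = 2000, 1000, 60, 16
CAMERAS = 12
LINK_BPS = 100_000_000  # a 100 Mbps link, used only as a point of comparison

per_camera = raw_camera_bps(WIDTH, HEIGHT, FPS, BPP)
total_pixels_per_s = CAMERAS * WIDTH * HEIGHT * FPS
total_bps = CAMERAS * per_camera

print(f"pixel rate: {total_pixels_per_s / 1e9:.2f} Gpixel/s")
print(f"raw data:   {total_bps / 1e9:.2f} Gbit/s")
print(f"one camera needs {per_camera / LINK_BPS:.1f}x a 100 Mbps link")
```

Under these assumptions the system must ingest 1.44 Gpixel/s, or about 23 Gbit/s of raw video, and a single camera alone needs roughly 19 times the capacity of a 100 Mbps link, which is why high-throughput interfaces and on-chip data movement matter as much as raw compute.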

ADI

Compared with the chip companies above, ADI’s ADAS strategy emphasizes cost-performance. ADAS is currently applied mainly in high-end models because overall costs are high. ADI targets high-, mid-, and low-end vehicles alike, selectively launching ADAS technologies at costs as low as 2 dollars or a few tens of dollars, which is undoubtedly good news for automakers and consumers.

In vision-based ADAS, ADI’s Blackfin processors are widely adopted. Low-end systems based on the BF592 implement lane departure warning (LDW); mid-range systems based on the BF53x/BF54x/BF561 implement LDW, high/low beam control (HBLB), and traffic sign recognition (TSR); and high-end systems based on the BF60x use a pipelined vision processor (PVP) to implement LDW, HBLB, TSR, forward collision warning (FCW), and pedestrian detection (PD). This integrated vision preprocessing unit significantly offloads the processor core, lowering the performance it requires.
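To illustrate the kind of fixed, per-pixel work a vision preprocessing unit can take off the processor core, here is a minimal Python sketch of Sobel edge detection with a threshold, a common first step in lane-marking detection. It is an assumption for illustration only: it does not model the blocks inside ADI’s PVP or any Blackfin API, and the function name `sobel_edges` is hypothetical.

```python
def sobel_edges(img, threshold):
    """Return a binary edge map (1 = edge) from 3x3 Sobel gradients.

    img is a list of equal-length rows of grayscale integers.
    Border pixels are left as 0 because their 3x3 window is incomplete.
    """
    h, w = len(img), len(img[0])
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]    # horizontal gradient kernel
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]    # vertical gradient kernel
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    p = img[y + dy - 1][x + dx - 1]
                    gx += kx[dy][dx] * p
                    gy += ky[dy][dx] * p
            # |gx| + |gy| is a cheap, common approximation of gradient magnitude.
            if abs(gx) + abs(gy) >= threshold:
                out[y][x] = 1
    return out


# A dark road surface (0) next to a bright vertical lane marking (100).
image = [[0, 0, 0, 100, 100, 100] for _ in range(5)]
for row in sobel_edges(image, 200):
    print("".join("#" if v else "." for v in row))
```

Every pixel needs 18 multiply-accumulates here regardless of image content, which is exactly the regular, data-independent workload that is cheaper in a dedicated pipeline than on a general-purpose core.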

Notably, ADI recently launched Drive360™ 28 nm CMOS radar technology (77/79 GHz), which brings high RF performance to target detection and classification and substantially improves sensor performance in ADAS applications. ADI’s high-performance radar solutions can detect small, fast-moving objects earlier, and extremely low phase noise lets small objects be detected clearly even next to large ones. ADI is working with Renesas on a system-level solution for this chip that combines ADI’s radar with the RH850/V1R-M microcontroller (MCU) from the Renesas Autonomy platform.

Fujitsu

Fujitsu’s ADAS technology mainly involves the combination of cameras and sensors to achieve image recognition assistance and target detection. The application fields mainly include 360-degree 3D panoramic assistance, visual parking assistance, monitoring blind spots while driving, safe door opening, and recognizing obstacles and pedestrians around the vehicle. This includes a panoramic video system based on the MB86R11 “Emerald-L” 2D/3D image SoC, supporting real-time panoramic video monitoring of the vehicle’s surroundings using four cameras. From public information, it seems Fujitsu is more keen on building virtual dashboards and in-car infotainment systems, but this area is the easiest for domestic chip companies to imitate and surpass.

Toshiba

The latest news is that Toshiba will split into four businesses: social infrastructure; thermal power generation and other energy; semiconductors (excluding memory) and storage; and information and communication technology (ICT) solutions. Toshiba’s future is therefore highly uncertain, but in ADAS processors it has the Visconti series of image recognition processors. The second-generation Visconti2 has been in mass production since November 2015; it ships in products from Japan’s Denso, is installed in the mass-produced Toyota Prius, and exceeds 30,000 units in global monthly shipments.

Visconti uses multi-core heterogeneous dedicated processors. Visconti2 can run four functions simultaneously in real time: lane keeping, front vehicle detection, pedestrian recognition, and traffic sign recognition. The Visconti4 image recognition processor readily recognizes vehicles and pedestrians, as well as traffic signals, obstacles, and lane markings, enabling ADAS applications such as lane departure warning, forward/rear collision warning, and traffic sign recognition, and it can process up to eight such functions simultaneously.

Xilinx

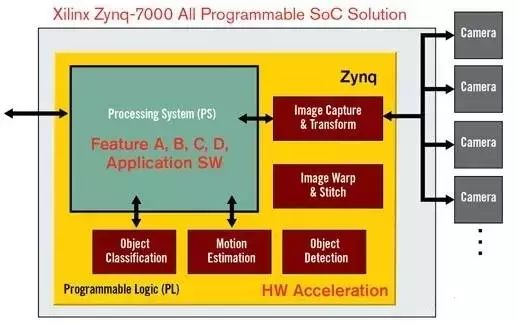

Xilinx is a well-known FPGA company whose products are widely used in many fields. FPGAs are programmable and flexibly configurable, can improve overall system performance, and significantly shorten the development cycle compared with developing a dedicated chip; their drawbacks include price and size. In automotive ADAS, Xilinx’s most widely used product is the Zynq®-7000 All Programmable SoC. This SoC platform can help system vendors shorten development time for surround view, 3D surround view, rearview cameras, dynamic calibration, pedestrian detection, rear lane departure warning, and blind spot detection.

Zynq makes single-chip ADAS solutions possible. The Zynq-7000 All Programmable SoC significantly improves performance, allows multiple applications to be bundled on one device, and scales across product lines. It also provides a platform optimized for ADAS, letting automakers and automotive electronics suppliers combine their own IP with ready-made IP from the Xilinx automotive ecosystem to build differentiated systems.

The article is reprinted from the WeChat public account “Electronic Industry Talk,” authored by Feng Zongxu.