Today, I present to you a work from creator X: an environmental perception device that assists blind people. The device uses the Grove Vision AI V2 camera module to identify objects in the environment. A XIAO ESP32S3 passes the results to a Raspberry Pi, which speaks them aloud. By combining object detection with text-to-speech, the device gives visually impaired people information about their surroundings.

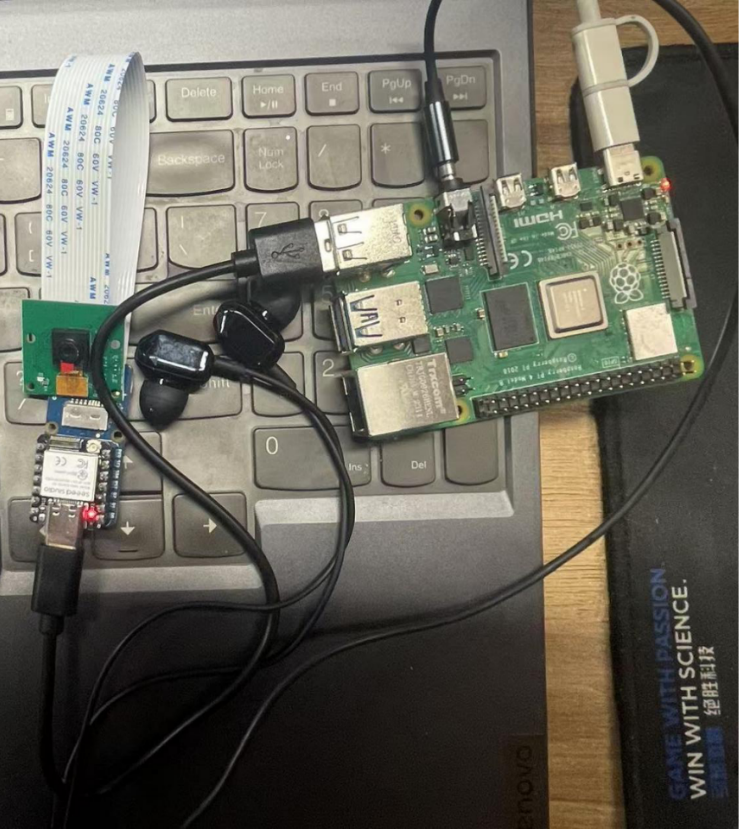

Hardware

- Seeed Studio XIAO ESP32S3 Sense
- Raspberry Pi 1 Model B+
- Raspberry Pi Case
- Grove – Vision AI Module V2
- OV5647-69.1 FOV Camera module for Raspberry Pi 3B+4B

Software

- SenseCraft AI
- Arduino IDE

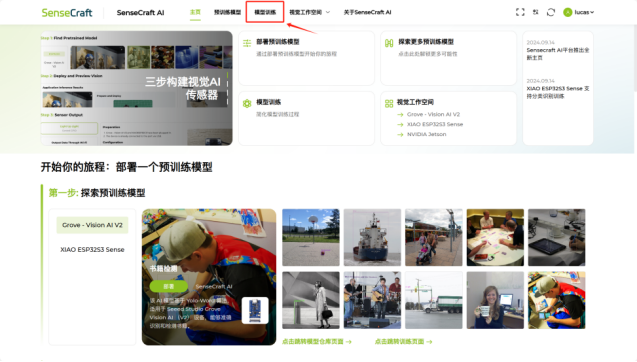

I used SenseCraft AI to train the object detection model. SenseCraft AI is a leading development platform for model training and deployment.

To start a project, you need to log in to SenseCraft AI with your account (or create a free account). Then you can use the models provided on the platform or create your own models, which is very convenient.

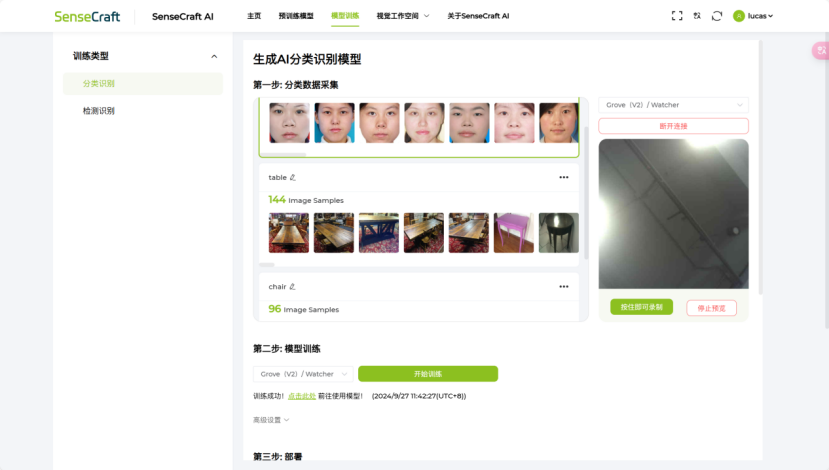

In SenseCraft AI, you can upload existing data or record new data with a connected device. For this project, I prepared a dataset of common items, such as chairs, tables, and pedestrians on the road. The more object types the dataset covers, the more the model can recognize. The size of the dataset also matters: the more images you capture of each object, the higher the accuracy tends to be.

I initially uploaded 312 images of 3 objects in my project. I will upload more images of more objects later to improve the model’s accuracy.
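Because accuracy depends on how many images each object has, it can help to check the class balance before uploading. The sketch below assumes a hypothetical local layout with one subfolder per class (for example `dataset/chair/`, `dataset/person/`, `dataset/table/`); SenseCraft AI itself does not require this layout, and `count_images_per_class` is an illustrative helper, not part of any tool.

```python
from pathlib import Path

# File extensions counted as images (an assumption; adjust to your data)
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}


def count_images_per_class(dataset_dir):
    """Return {class_name: image_count} for a folder-per-class dataset."""
    counts = {}
    for class_dir in sorted(Path(dataset_dir).iterdir()):
        if class_dir.is_dir():
            # Count only files whose extension looks like an image
            counts[class_dir.name] = sum(
                1
                for f in class_dir.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_EXTS
            )
    return counts
```

For example, `count_images_per_class("dataset")` would show whether the 312 images are spread evenly; a balanced split over 3 classes is 104 images each.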

Uploading Object Identification Model to Grove Vision AI

Open the SenseCraft AI homepage (Home – SenseCraft AI), find the model training option, and click to enter it.

In the model training interface, first select the classification type. Then, at step “2”, select the device Grove – Vision AI Module V2 and connect it. Finally, add the categories you need and give each one a name.

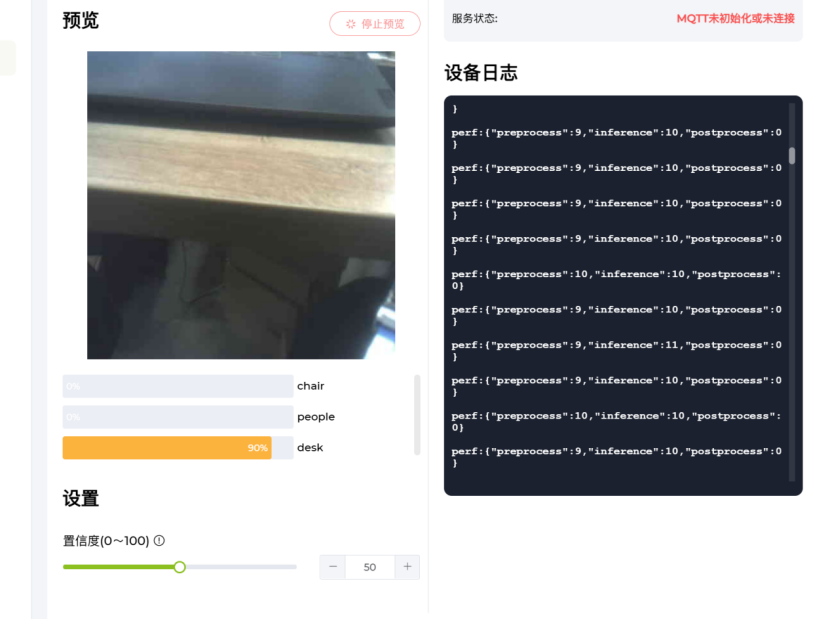

Next, with Grove – Vision AI Module V2 selected as the device in step two, click to start training. Adjust the advanced settings if needed, or leave them at their defaults. After training, select Grove – Vision AI Module V2 again to deploy the model. Once deployment completes, point the camera at an object: in the preview on the right side of the page, the confidence for the object changes in real time. This shows that the model was deployed successfully.

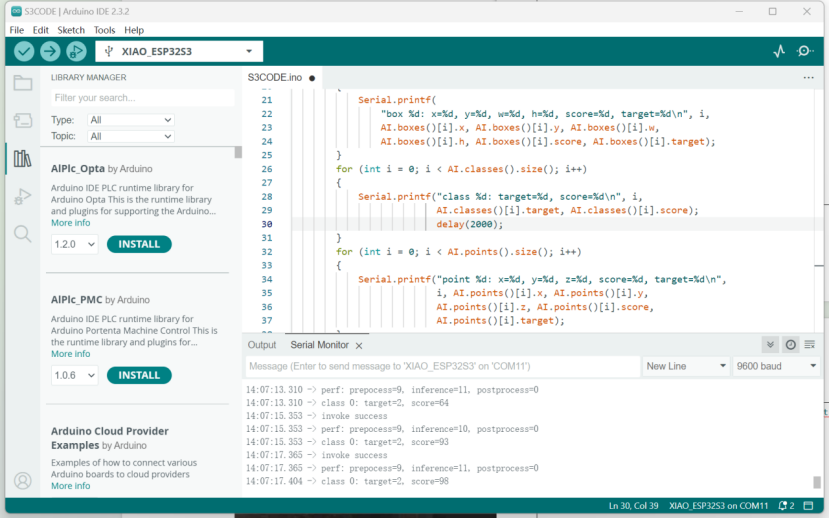

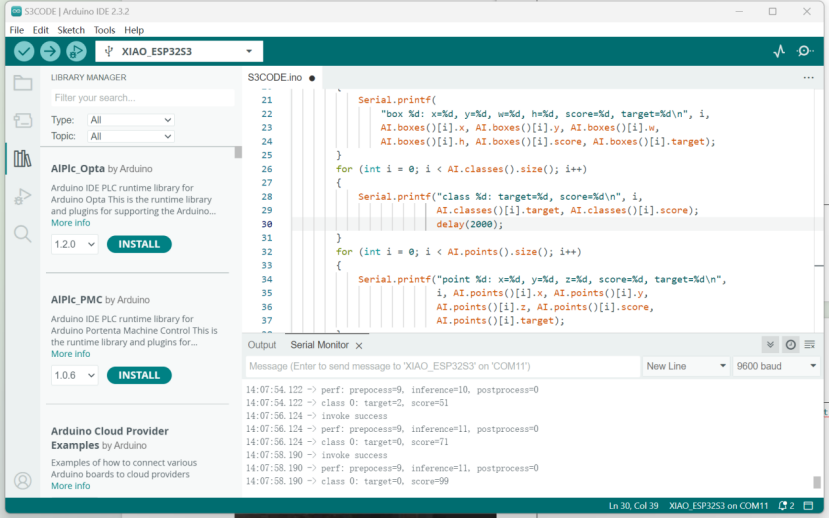

```cpp
#include <Seeed_Arduino_SSCMA.h>

SSCMA AI;

void setup()
{
    AI.begin();            // start the connection to the Grove Vision AI V2
    Serial.begin(115200);  // USB serial to the Raspberry Pi (same rate as the Pi script)
}

void loop()
{
    // invoke() returns 0 when an inference succeeds
    if (!AI.invoke())
    {
        Serial.println("invoke success");
        Serial.printf("perf: prepocess=%d, inference=%d, postprocess=%d\n",
                      AI.perf().prepocess, AI.perf().inference, AI.perf().postprocess);

        // Detection results: one bounding box per object
        for (int i = 0; i < AI.boxes().size(); i++)
        {
            Serial.printf("box %d: x=%d, y=%d, w=%d, h=%d, score=%d, target=%d\n",
                          i, AI.boxes()[i].x, AI.boxes()[i].y, AI.boxes()[i].w,
                          AI.boxes()[i].h, AI.boxes()[i].score, AI.boxes()[i].target);
        }

        // Classification results: target is the class ID
        for (int i = 0; i < AI.classes().size(); i++)
        {
            Serial.printf("class %d: target=%d, score=%d\n",
                          i, AI.classes()[i].target, AI.classes()[i].score);
        }

        // Keypoint results
        for (int i = 0; i < AI.points().size(); i++)
        {
            Serial.printf("point %d: x=%d, y=%d, z=%d, score=%d, target=%d\n",
                          i, AI.points()[i].x, AI.points()[i].y, AI.points()[i].z,
                          AI.points()[i].score, AI.points()[i].target);
        }

        delay(2000);  // pause between reports so the Pi has time to speak
    }
}
```

Output Results:

- When I pointed the camera at a person, the serial output showed target=1, indicating that a person was recognized.
- When I pointed the camera at a table, the serial output showed target=2, indicating that a table was recognized.
- When I pointed the camera at a chair, the serial output showed target=0, indicating that a chair was recognized.

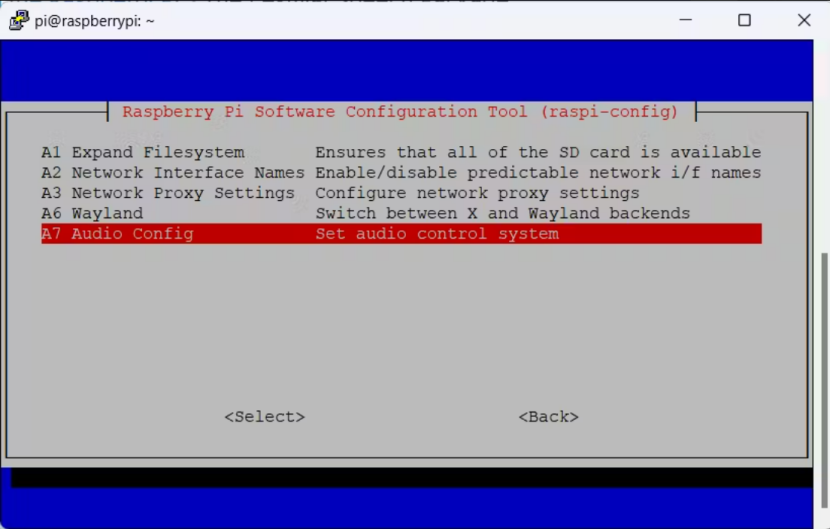
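Because every result line printed by the Arduino sketch has a fixed shape, the receiving side can parse it rather than search for single characters. A minimal sketch, assuming the exact `Serial.printf` formats above and the class IDs observed in this test (0 = chair, 1 = person, 2 = table); `parse_line` and `LABELS` are hypothetical names.

```python
import re

# Class IDs as observed in the test above
LABELS = {0: "chair", 1: "person", 2: "table"}

# Patterns matching the Serial.printf formats used in the Arduino sketch
CLASS_RE = re.compile(r"class (\d+): target=(\d+), score=(\d+)")
BOX_RE = re.compile(
    r"box (\d+): x=(-?\d+), y=(-?\d+), w=(\d+), h=(\d+), "
    r"score=(\d+), target=(\d+)"
)


def parse_line(line):
    """Turn one serial line into a dict, or return None for other lines."""
    m = CLASS_RE.search(line)
    if m:
        _, target, score = map(int, m.groups())
        return {"kind": "class",
                "label": LABELS.get(target, f"id {target}"),
                "score": score}
    m = BOX_RE.search(line)
    if m:
        _, x, y, w, h, score, target = map(int, m.groups())
        return {"kind": "box",
                "label": LABELS.get(target, f"id {target}"),
                "x": x, "y": y, "w": w, "h": h, "score": score}
    # perf lines, "invoke success", etc. carry no detection result
    return None
```

pyserial's `readline()` returns bytes, so a line would be decoded first, e.g. `parse_line(ser.readline().decode(errors="ignore"))`.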

The XIAO ESP32S3 Sense, paired with the Grove – Vision AI Module V2, detects objects in the surrounding environment and reports their class IDs and locations. The Raspberry Pi receives these results over the serial connection and converts them to speech. Here I used a Raspberry Pi 4 B, which performed satisfactorily. After installing the operating system on the Raspberry Pi, I configured the audio output and set the volume to 100%:

```bash
sudo raspi-config
```

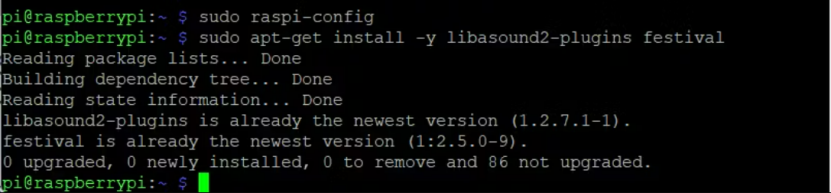

Then, I installed the free software package Festival on the Pi. Festival, developed at the Centre for Speech Technology Research (CSTR) at the University of Edinburgh, provides a framework for building speech synthesis systems. It offers full text-to-speech functionality through several interfaces: from the shell, through a Scheme command interpreter, as a C++ library, from Java, and from an Emacs interface.

Use the following command to install Festival:

```bash
sudo apt-get install -y libasound2-plugins festival
```

After installing Festival, I connected a wired headset and tested it with the following command:

```bash
echo "Hello World!" | festival --tts
```

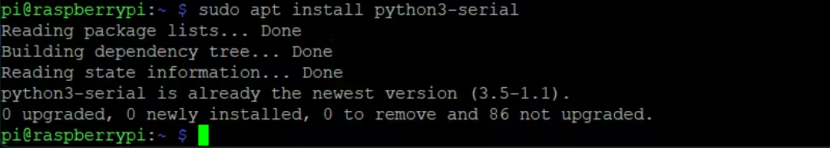
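From Python, the same `echo ... | festival --tts` pipe can be made without going through a shell, which avoids quoting problems if a phrase ever contains quote characters. A minimal sketch, assuming `festival` is on the PATH; `speak` is a hypothetical helper, and its `runner` parameter exists only so the function can be tried without audio hardware.

```python
import subprocess


def speak(text, runner=subprocess.run):
    """Pipe text to `festival --tts`, like `echo "..." | festival --tts`."""
    # Passing a list (no shell) means quotes inside `text` need no escaping;
    # check=True raises CalledProcessError if festival exits with an error
    return runner(["festival", "--tts"], input=text, text=True, check=True)
```

On the Pi, `speak("Hello World!")` should produce the same result as the shell test.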

Then, I installed the Python serial module, pyserial (packaged as python3-serial on Raspberry Pi OS), on the Raspberry Pi.

Connect the XIAO ESP32S3 Sense to the Raspberry Pi with a USB-C cable.

Writing Code for the Raspberry Pi

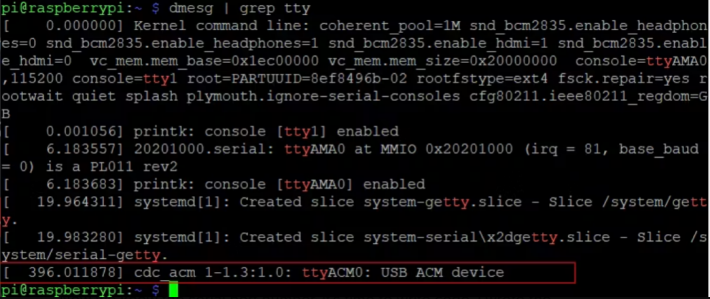

Before writing the code, we need to know which serial port the XIAO Sense board appears as.

With the XIAO Sense board plugged into the Raspberry Pi, run the following command in the terminal:

```bash
dmesg | grep tty
```

The result is:

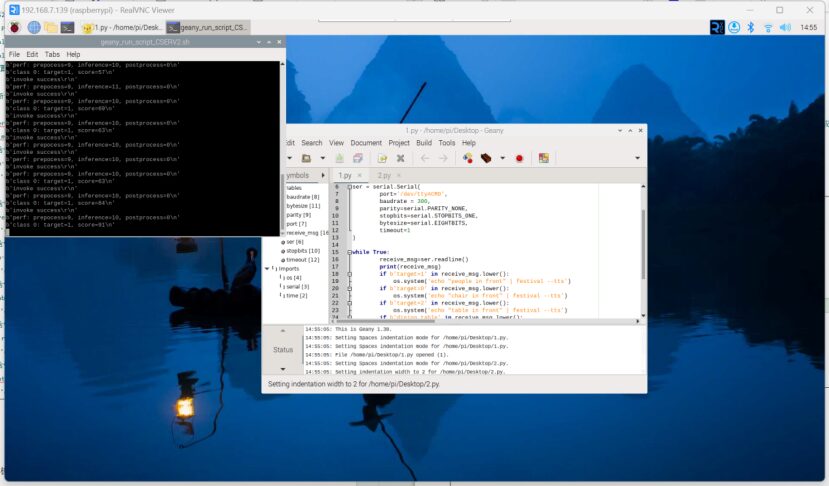
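The number after ttyACM can change between boots or when other USB devices are attached, so the script could also look the port up instead of hard-coding it. A minimal standard-library sketch, assuming the XIAO ESP32S3 enumerates as a USB CDC device named `ttyACM*` (as it does here); `find_serial_ports` is a hypothetical helper. pyserial also ships `serial.tools.list_ports` for the same purpose.

```python
import glob
import os


def find_serial_ports(dev_dir="/dev", patterns=("ttyACM*", "ttyUSB*")):
    """Return candidate USB serial device paths, sorted by name."""
    ports = []
    for pattern in patterns:
        ports.extend(glob.glob(os.path.join(dev_dir, pattern)))
    return sorted(ports)


if __name__ == "__main__":
    ports = find_serial_ports()
    # With only the XIAO attached, the first match is usually the right one
    print(ports[0] if ports else "no USB serial device found")
```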

Now that we know the serial port number, it’s time to write the code. I wrote the following code for the Raspberry Pi to convert the received text into speech.

```python
#!/usr/bin/env python3
# Read recognition results from the XIAO ESP32S3 Sense over USB serial
# and announce them with the Festival text-to-speech engine.
import os
import re

import serial  # pyserial

# Set the parameters for the serial connection to communicate with the device
ser = serial.Serial(
    port='/dev/ttyACM1',           # port the device is connected to (see dmesg)
    baudrate=115200,               # baud rate for serial communication
    parity=serial.PARITY_NONE,     # no parity bit
    stopbits=serial.STOPBITS_ONE,  # one stop bit
    bytesize=serial.EIGHTBITS,     # each byte has 8 data bits
    timeout=1,                     # readline() gives up after 1 second
)

# Phrase to speak for each class ID (target) reported by the model
PHRASES = {
    0: "chair in front",
    1: "people in front",
    2: "table in front",
    # And so on...
}

# Enter an infinite loop to continuously read serial data
while True:
    receive_msg = ser.readline()  # read one line from the serial port (bytes)
    print(receive_msg)            # print the received data

    # If the received data contains the word "basin", announce it
    if b'basin' in receive_msg.lower():
        os.system('echo "basin in front" | festival --tts')

    # Read the class ID from result lines such as b"class 0: target=1, score=85".
    # Testing for a bare b'1' would also fire on the perf and score numbers.
    match = re.search(rb'target=(\d+)', receive_msg)
    if match:
        phrase = PHRASES.get(int(match.group(1)))
        if phrase:
            # Use the Festival speech synthesis engine to read the prompt
            os.system(f'echo "{phrase}" | festival --tts')
```

Test Results:

Bashrc Configuration

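Modify the .bashrc file:

```bash
sudo nano /home/pi/.bashrc
```

Add the following lines at the end of the file, where sample.py is the script above:

```bash
echo Running at boot
sudo python3 /home/pi/sample.py
```

The echo command prints a message showing that the script has been started from the .bashrc file.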

The .bashrc file is executed every time an interactive Bash shell starts, so with the Raspberry Pi set to log in automatically, the script runs at boot. Note that it will also run again in every new terminal window you open.

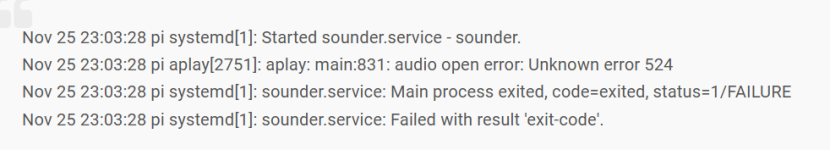

However, after this configuration I encountered the following error:

Solution: I had to create the file /etc/asound.conf and add the following content to it:

```
pcm.!default {
    type asym
    playback.pcm {
        type plug
        slave.pcm "hw:2,0"
    }
}
```

Thus, when the Raspberry Pi is powered on, this program runs automatically!
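In `hw:2,0`, the 2 is the ALSA card number and the 0 is the device number; the card number depends on which audio devices are attached, so it may differ on another setup. One way to find it is to read `/proc/asound/cards` (`aplay -l` lists the same cards). A minimal sketch, assuming the usual ` N [id]: driver - name` header-line format of that file; `list_alsa_cards` is a hypothetical helper.

```python
import re

# Matches header lines such as " 2 [Device         ]: USB-Audio - USB Audio Device"
CARD_RE = re.compile(r"^\s*(\d+)\s+\[([^\]]*)\]:\s*(.*?)\s+-\s+(.*)$")


def list_alsa_cards(text):
    """Parse /proc/asound/cards text into (number, id, driver, name) tuples."""
    cards = []
    for line in text.splitlines():
        m = CARD_RE.match(line)
        if m:  # indented continuation lines do not start with a card number
            num, card_id, driver, name = m.groups()
            cards.append((int(num), card_id.strip(), driver, name.strip()))
    return cards
```

On the Pi, `list_alsa_cards(open("/proc/asound/cards").read())` would show which number belongs to the headset, and that number goes into `hw:N,0`.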

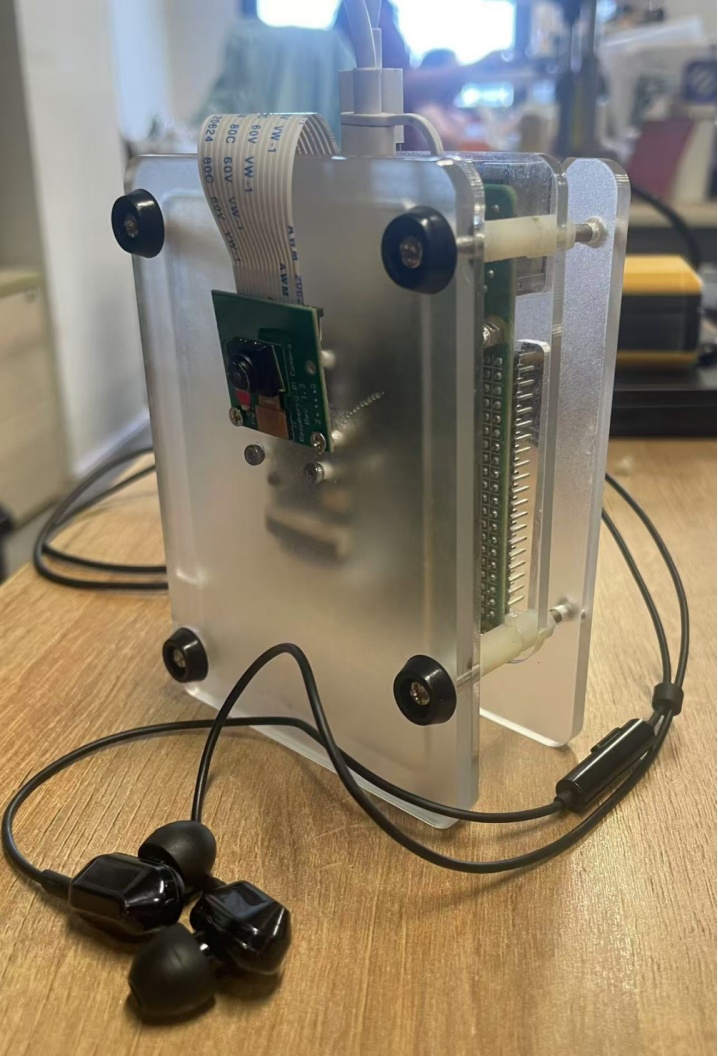

The enclosure is based on the Seeed Raspberry Pi basic case, with the XIAO ESP32S3 and the Grove Vision AI V2 mounted on the outside through drilled holes.

Final Presentation:

Demonstration Video:


Chaihuo x.factory|Shenzhen, Hebei