Background

AMOVLAB

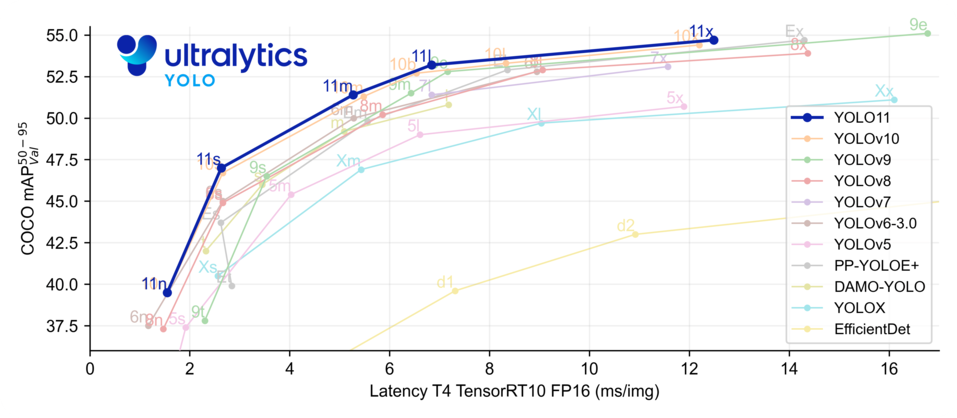

On September 30, 2024, Ultralytics officially released YOLOv11. It is another major upgrade to the YOLO series of real-time object detectors and reflects how quickly object detection continues to develop.

Small target detection has long been a challenge in object detection, because small targets have weak visual features and are easily affected by noise. This is especially true in drone applications. Drones fly at high altitude, so their images often contain many small targets with few extractable features. In addition, large fluctuations in flight altitude cause drastic changes in object scale, which sharply increases detection difficulty. Real flight scenes are also often complex, and dense small targets can be heavily occluded by other targets or by the background.

Principle

The AFOD (AutoFocusObjectDetector) algorithm is a new open-source algorithm developed by SpireCV specifically for detecting small targets from a drone's perspective. Its Chinese name translates as "Attention Object Detection". The figure below shows AFOD detecting distant vehicles with the GX40 pod and no zoom. The pixel size of these targets is much smaller than 32×32.
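The 32×32 figure presumably refers to the common COCO convention. Under that convention, an object counts as "small" if its area is below 32² = 1024 pixels, and as "medium" below 96² = 9216 pixels. The following minimal Python sketch shows these buckets. The function name `size_class` is ours, and box area stands in for COCO's mask area.

```python
# COCO-style size buckets (assumed to be the convention behind "32x32").
# COCO measures segmentation-mask area; box area is used here as an approximation.
SMALL_MAX_AREA = 32 * 32    # 1024 px
MEDIUM_MAX_AREA = 96 * 96   # 9216 px


def size_class(w: int, h: int) -> str:
    """Classify a w x h pixel box as 'small', 'medium' or 'large'."""
    area = w * h
    if area < SMALL_MAX_AREA:
        return "small"
    if area < MEDIUM_MAX_AREA:
        return "medium"
    return "large"


if __name__ == "__main__":
    print(size_class(12, 8))     # small  (96 px)
    print(size_class(40, 40))    # medium (1600 px)
    print(size_class(100, 100))  # large  (10000 px)
```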

The main advantage of Attention Object Detection is that it balances small-target detection accuracy against frame rate. It works in two stages, in temporal order:

- Global target search, generally at a resolution of 1280×1280.
- Sub-region detection, entered after a target has been detected, generally at a resolution of 640×640.
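The following arithmetic suggests why this split helps frame rate. It rests on a simplifying assumption of ours, not a measured SpireCV figure: that detector inference cost grows roughly in proportion to the number of input pixels. Under that assumption, a 640×640 sub-region pass processes a quarter of the pixels of a 1280×1280 global pass. A minimal Python sketch:

```python
# Rough cost comparison between the two AFOD stages.
# Assumption (ours, not from SpireCV): inference cost scales roughly
# linearly with the number of input pixels.

GLOBAL_RES = 1280     # global search input side (from the article)
SUBREGION_RES = 640   # sub-region input side (from the article)


def pixel_count(side: int) -> int:
    """Number of pixels in a square side x side network input."""
    return side * side


def relative_cost(global_side: int, sub_side: int) -> float:
    """How many times more pixels the global pass processes than a sub-region pass."""
    return pixel_count(global_side) / pixel_count(sub_side)


if __name__ == "__main__":
    print(f"global pass: {pixel_count(GLOBAL_RES)} px")         # 1638400 px
    print(f"sub-region pass: {pixel_count(SUBREGION_RES)} px")  # 409600 px
    print(f"ratio: {relative_cost(GLOBAL_RES, SUBREGION_RES):.1f}x")  # 4.0x
```

Real speedups depend on the model and hardware. The 4× figure is only a pixel-count bound under the stated assumption.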

As shown in the figure below:

This detector takes two general object detectors as input: one for the global search and one for the sub-region. The target categories are defined by the dataset each detector is trained on. For each target, the detector outputs its category and pixel position (bounding box).
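The following minimal Python sketch shows such an interface. It assumes a detector can be modelled as any function that maps an image to a list of labelled boxes. The names `Detection`, `Detector`, `to_frame_coords` and `fake_sub_detector` are hypothetical and are not SpireCV's API. The helper illustrates one step a sub-region stage needs: boxes detected on a resized crop have to be mapped back to full-frame pixel coordinates.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple


@dataclass(frozen=True)
class Detection:
    category: str                    # class name defined by the training dataset, e.g. "car"
    box: Tuple[int, int, int, int]   # (x, y, w, h) in pixels


# Any callable mapping an image to detections counts as a "general object
# detector" here; the image type is left opaque.
Detector = Callable[[Any], List[Detection]]


def to_frame_coords(dets: List[Detection], offset_x: int, offset_y: int,
                    scale: float) -> List[Detection]:
    """Map boxes from a resized sub-region crop back to full-frame pixels.

    offset_x, offset_y: top-left corner of the crop in the full frame.
    scale: crop side / network input side (a 320-px crop fed at 640x640 -> 0.5).
    """
    mapped = []
    for d in dets:
        x, y, w, h = d.box
        mapped.append(Detection(d.category, (
            round(offset_x + x * scale), round(offset_y + y * scale),
            round(w * scale), round(h * scale))))
    return mapped


def fake_sub_detector(image: Any) -> List[Detection]:
    """Stand-in for the 640x640 sub-region detector, returning one fixed box."""
    return [Detection("car", (320, 320, 40, 20))]


if __name__ == "__main__":
    sub: Detector = fake_sub_detector
    crop_dets = sub(None)  # a real detector would receive the resized crop
    print(to_frame_coords(crop_dets, offset_x=1000, offset_y=500, scale=320 / 640))
    # [Detection(category='car', box=(1160, 660, 20, 10))]
```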

The relevant configuration parameters are as follows:

- lock_thres: the number of consecutive frames in which the same target must be detected before switching to sub-region detection; default 5 frames.
- unlock_thres: the number of consecutive frames in which the target must be lost within the sub-region before returning to global detection; default 5 frames.
- lock_scale_init: controls the initial sub-region size, expressed as a multiple of the target's pixel width; default 12 times.
- lock_scale: controls the sub-region size once tracking is stable; default 8 times.
- categories_filter: the target category names to keep; if empty, no filtering is applied. Example: ["person", "car"]
- keep_unlocked: whether to also output targets that are not being attended to (locked); default false (not output).
- use_square_region: whether to run the initial detection on a square region. If enabled and the input image is not square, the strips on either side of the square are not searched; default false (not used).
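The parameters above can be illustrated with a small Python sketch. Everything in it is hypothetical: `AFODConfig`, `LockState` and the helper functions are not SpireCV's API. The sketch makes several simplifications:

- The lock logic uses a single "target found this frame" flag instead of per-target association.
- The sub-region is assumed to be a square whose side equals the scale factor times the target's pixel width.
- `square_region` is our reading of use_square_region as a centred square.
- keep_unlocked is stored but not acted on.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class AFODConfig:
    # Field names and defaults mirror the list above; the class is hypothetical.
    lock_thres: int = 5
    unlock_thres: int = 5
    lock_scale_init: float = 12.0
    lock_scale: float = 8.0
    categories_filter: List[str] = field(default_factory=list)
    keep_unlocked: bool = False       # stored only; not used in this sketch
    use_square_region: bool = False


class LockState:
    """Switches between global search and sub-region detection via hit/miss counters."""

    def __init__(self, cfg: AFODConfig) -> None:
        self.cfg = cfg
        self.locked = False  # False: global search, True: sub-region detection
        self.hits = 0        # consecutive frames with the target found (global mode)
        self.misses = 0      # consecutive frames with the target lost (sub-region mode)

    def update(self, target_found: bool) -> bool:
        """Feed one frame's result; return True if the next frame uses sub-region mode."""
        if not self.locked:
            self.hits = self.hits + 1 if target_found else 0
            if self.hits >= self.cfg.lock_thres:
                self.locked, self.misses = True, 0
        else:
            self.misses = 0 if target_found else self.misses + 1
            if self.misses >= self.cfg.unlock_thres:
                self.locked, self.hits = False, 0
        return self.locked


def region_side(target_width: float, scale: float, img_w: int, img_h: int) -> int:
    """Side of the square sub-region: scale x target pixel width, clamped to the image."""
    return int(min(scale * target_width, img_w, img_h))


def square_region(img_w: int, img_h: int) -> Tuple[int, int, int, int]:
    """Centred square (x, y, w, h) for use_square_region (our assumption)."""
    side = min(img_w, img_h)
    return ((img_w - side) // 2, (img_h - side) // 2, side, side)


def keep_category(name: str, cfg: AFODConfig) -> bool:
    """An empty categories_filter keeps every category."""
    return not cfg.categories_filter or name in cfg.categories_filter


if __name__ == "__main__":
    cfg = AFODConfig(categories_filter=["person", "car"])
    state = LockState(cfg)
    # Five frames with the target, then five frames without it.
    modes = [state.update(found) for found in [True] * 5 + [False] * 5]
    print(modes)  # locks on frame 5, unlocks on frame 10
    print(region_side(20, cfg.lock_scale_init, 1920, 1080))  # 240
    print(region_side(20, cfg.lock_scale, 1920, 1080))       # 160
    print(square_region(1920, 1080))                          # (420, 0, 1080, 1080)
    print(keep_category("truck", cfg), keep_category("car", cfg))  # False True
```

In this sketch, a single missed frame in global mode resets the count toward lock_thres, and a single hit in sub-region mode resets the count toward unlock_thres. That is a design choice for illustration only. SpireCV may count frames differently.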

General Object Detectors:

The two general object detectors used with AFOD are yolov11s and yolov11s6, trained on the VisDrone2019-DET dataset at 640×640 and 1280×1280 respectively. The figure below shows the detection results of yolov11s6 with the GX40 pod at 10× optical zoom, while a P600 drone hovers at a height of 40 meters. In this test, the detector identified vehicles at distances of up to 1600 meters and pedestrians at up to 1400 meters.

Usage

How to get the AFOD algorithm wiki link:

1. Please star and bookmark SpireCV's repository on Gitee or GitHub. Thank you for your support!

➡GitHub: https://github.com/amov-lab/SpireCV

➡Gitee: https://gitee.com/amovlab/SpireCV

2. Long-press the QR code below to add us and get the AFOD algorithm wiki link. The wiki includes the models used in this experiment, as well as instructions for training, converting, and deploying YOLOv11 models on custom datasets.