Failure is the mother of success. This article is a real debugging record of failure. Through this article, you will deeply experience the following concepts in the Linux system:

-

Real-time processes and normal process scheduling strategies;

-

How the chaotic process priority is calculated in Linux;

-

Testing CPU affinity;

-

Program design for multiprocessor (SMP) systems encountering real-time and normal processes;

-

A short-circuit experience of Dao Ge’s head getting caught in the door;

Regarding process scheduling strategies, different operating systems have different overall goals, so the scheduling algorithms vary.

This needs to be chosen based on factors such as the type of process (CPU-intensive? IO-intensive?), priority, etc.

For the Linux x86 platform, the commonly used algorithm is CFS: Completely Fair Scheduler.

It is called completely fair because the operating system dynamically calculates based on the CPU usage ratio of each thread, hoping that every process can equally use the CPU resource, sharing the benefits.

When we create a thread, the default scheduling algorithm is SCHED_OTHER, with a default priority of 0.

PS: In the Linux operating system, the kernel object of a thread is very similar to that of a process (which is actually just some structure variables), so a thread can be considered a lightweight process.

In this article, a thread can be roughly equated to a process, and in some contexts, it may also be referred to as a task, with some different customary terms in different contexts.

It can be understood this way: if there are a total of N processes in the system, each process will get 1/N of the execution opportunity. After each process executes for a period of time, it is scheduled out, and the next process is executed.

If the number N is too large, causing each process to run out of time just as it starts executing, and if scheduling occurs at this time, the system’s resources will be wasted on process context switching.

Therefore, the operating system introduces a minimum granularity, which means that each process has a guaranteed minimum execution time, called a time slice.

In addition to the SCHED_OTHER scheduling algorithm, the Linux system also supports two real-time scheduling strategies:

1. SCHED_FIFO: Schedules based on the priority of the process; once it preempts the CPU, it runs until it voluntarily gives up or is preempted by a higher-priority process;

2. SCHED_RR: Based on SCHED_FIFO, it adds the concept of time slices. When a process preempts the CPU, after running for a certain amount of time, the scheduler will place this process at the end of the current priority process queue and choose another process of the same priority to execute;

This article aims to test the mixed situation of SCHED_FIFO and normal SCHED_OTHER scheduling strategies.

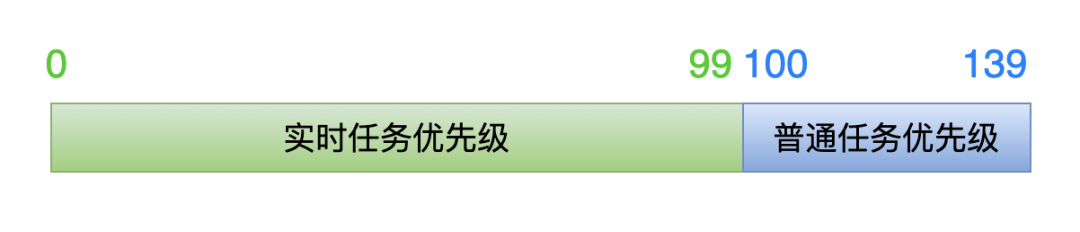

In the Linux system, the management of priorities appears somewhat chaotic. First, look at the following image:

This image represents the priorities in the kernel, divided into two segments.

The values 0-99 are for real-time tasks, while the values 100-139 are for normal tasks.

The lower the value, the higher the priority of the task.

The lower the value, the higher the priority of the task.

The lower the value, the higher the priority of the task.

To emphasize again, the above is the priority from the kernel perspective.

Now, the key point:

When we create threads at the application layer, we set a priority value, which is the priority value from the application layer perspective.

However, the kernel does not directly use this value set at the application layer; instead, it undergoes certain calculations to obtain the priority value used in the kernel (0 ~ 139).

1. For real-time tasks

When we create a thread, we can set the priority value like this (0 ~ 99):

struct sched_param param;

param.__sched_priority = xxx;

When the thread creation function enters the kernel level, the kernel calculates the actual priority value using the following formula:

kernel priority = 100 - 1 - param.__sched_priority

If the application layer passes the value 0, then in the kernel, the priority value is 99 (100 – 1 – 0 = 99), which is the lowest priority among all real-time tasks.

If the application layer passes the value 99, then in the kernel, the priority value is 0<span>(100 - 1 - 99 = 0</span><span>)</span>, which is the highest priority among all real-time tasks.

Therefore, from the application layer perspective, the larger the priority value passed, the higher the thread’s priority; the smaller the value, the lower the priority.

This is completelyopposite!

2. For normal tasks

Adjusting the priority of normal tasks is done through the nice value, and the kernel also has a formula to convert the nice value passed from the application layer into the kernel perspective priority value:

kernel priority = 100 + 20 + nice

The valid values for nice are: -20 ~ 19.

If the application layer sets the thread nice value to -20, then in the kernel, the priority value is 100<span>(100 + 20 + (-20) = 100</span><span>)</span>, which is the highest priority among all normal tasks.

If the application layer sets the thread nice value to 19, then in the kernel, the priority value is 139<span>(100 + 20 + 19 = 139</span><span>)</span>, which is the lowest priority among all normal tasks.

Therefore, from the application layer perspective, the smaller the priority value passed, the higher the thread’s priority; the larger the value, the lower the priority.

This is completelythe same!

With the background knowledge clarified, we can finally proceed to code testing!

Points to note:

#define _GNU_SOURCEmust be defined before#include <sched.h>;

#include <sched.h>must be included before#include <pthread.h>;This order ensures that the CPU_SET and CPU_ZERO functions can be successfully located when setting the inherited CPU affinity later.

// filename: test.c

#define _GNU_SOURCE

#include <unistd.h>

#include <stdio.h>

#include <stdlib.h>

#include <sched.h>

#include <pthread.h>

// Function to print current thread information: What is the scheduling policy? What is the priority?

void get_thread_info(const int thread_index)

{

int policy;

struct sched_param param;

printf("\n====> thread_index = %d \n", thread_index);

pthread_getschedparam(pthread_self(), &policy, ¶m);

if (SCHED_OTHER == policy)

printf("thread_index %d: SCHED_OTHER \n", thread_index);

else if (SCHED_FIFO == policy)

printf("thread_index %d: SCHED_FIFO \n", thread_index);

else if (SCHED_RR == policy)

printf("thread_index %d: SCHED_RR \n", thread_index);

printf("thread_index %d: priority = %d \n", thread_index, param.sched_priority);

}

// Thread function,

void *thread_routine(void *args)

{

// Parameter is: thread index number. Four threads, index numbers from 1 to 4, used in printed information.

int thread_index = *(int *)args;

// To ensure all threads are created, let the thread sleep for 1 second.

sleep(1);

// Print thread-related information: scheduling policy, priority.

get_thread_info(thread_index);

long num = 0;

for (int i = 0; i < 10; i++)

{

for (int j = 0; j < 5000000; j++)

{

// No real meaning, purely simulating CPU-intensive computation.

float f1 = ((i+1) * 345.45) * 12.3 * 45.6 / 78.9 / ((j+1) * 4567.89);

float f2 = (i+1) * 12.3 * 45.6 / 78.9 * (j+1);

float f3 = f1 / f2;

}

// Print counting information to see which thread is executing

printf("thread_index %d: num = %ld \n", thread_index, num++);

}

// Thread execution ends

printf("thread_index %d: exit \n", thread_index);

return 0;

}

void main(void)

{

// Create a total of four threads: 0 and 1 - real-time threads, 2 and 3 - normal threads (non-real-time)

int thread_num = 4;

// Allocated thread index numbers, will be passed to thread parameters

int index[4] = {1, 2, 3, 4};

// To save the IDs of the 4 threads

pthread_t ppid[4];

// To set the attributes of the 2 real-time threads: scheduling policy and priority

pthread_attr_t attr[2];

struct sched_param param[2];

// Real-time threads must be created by the root user

if (0 != getuid())

{

printf("Please run as root \n");

exit(0);

}

// Create 4 threads

for (int i = 0; i < thread_num; i++)

{

if (i <= 1) // The first 2 create real-time threads

{

// Initialize thread attributes

pthread_attr_init(&attr[i]);

// Set scheduling policy to: SCHED_FIFO

pthread_attr_setschedpolicy(&attr[i], SCHED_FIFO);

// Set priority to 51, 52.

param[i].__sched_priority = 51 + i;

pthread_attr_setschedparam(&attr[i], ¶m[i]);

// Set thread attributes: do not inherit the scheduling policy and priority of the main thread.

pthread_attr_setinheritsched(&attr[i], PTHREAD_EXPLICIT_SCHED);

// Create thread

pthread_create(&ppid[i], &attr[i],(void *)thread_routine, (void *)&index[i]);

}

else // The last two create normal threads

{

pthread_create(&ppid[i], 0, (void *)thread_routine, (void *)&index[i]);

}

}

// Wait for the 4 threads to finish execution

for (int i = 0; i < 4; i++)

pthread_join(ppid[i], 0);

for (int i = 0; i < 2; i++)

pthread_attr_destroy(&attr[i]);

}

Command to compile into an executable program:

gcc -o test test.c -lpthread

First, let’s talk about the expected results. If there are no expected results, then there is no point in discussing any other issues.

There are a total of 4 threads:

Thread index 1 and 2: are real-time threads (scheduling policy is SCHED_FIFO, priority is 51, 52);

Thread index 3 and 4: are normal threads (scheduling policy is SCHED_OTHER, priority is 0);

My testing environment is: Ubuntu16.04, a virtual machine installed on Windows10.

I expect the results to be:

First, print the information of threads 1 and 2, as they are real-time tasks and need to be scheduled first;

Thread 1 has a priority of 51, which is less than thread 2’s priority of 52, so thread 2 should finish before thread 1 gets executed;

Threads 3 and 4 are normal processes, and they need to wait until threads 1 and 2 have finished executing before they can start executing, and threads 3 and 4 should execute alternately since they have the same scheduling policy and priority.

With high hopes, I tested on my work computer, and the printed results were as follows:

====> thread_index = 4

thread_index 4: SCHED_OTHER

thread_index 4: priority = 0

====> thread_index = 1

thread_index 1: SCHED_FIFO

thread_index 1: priority = 51

====> thread_index = 2

thread_index 2: SCHED_FIFO

thread_index 2: priority = 52

thread_index 2: num = 0

thread_index 4: num = 0

====> thread_index = 3

thread_index 3: SCHED_OTHER

thread_index 3: priority = 0

thread_index 1: num = 0

thread_index 2: num = 1

thread_index 4: num = 1

thread_index 3: num = 0

thread_index 1: num = 1

thread_index 2: num = 2

thread_index 4: num = 2

thread_index 3: num = 1

The subsequent printed content need not be output, as the problem has already appeared.

The problem is obvious: Why are all 4 threads executing simultaneously?

Threads 1 and 2 should be executed first because they are real-time tasks!

How come the result is like this? I am completely confused, not at all as expected!

Unable to figure it out, I could only seek help from the internet! However, I found no valuable clues.

One piece of information involves the Linux system’s scheduling strategy, which I will record here.

In the Linux system, to prevent real-time tasks from completely occupying CPU resources, it allows normal tasks a small time gap to execute.

In the directory /proc/sys/kernel, there are 2 files that limit the time real-time tasks can occupy the CPU:

sched_rt_runtime_us: default value 950000

sched_rt_period_us: default value 1000000

This means: within a period of 1000000 microseconds (1 second), real-time tasks can occupy 950000 microseconds (0.95 seconds), leaving 0.05 seconds for normal tasks.

If there were no this limit, if a certain SCHED_FIFO task had a particularly high priority and happened to have a bug: continuously occupying CPU resources without giving up, we would have no chance to kill that real-time task because the system would not be able to schedule any other processes to execute.

With this limit, we can utilize that 0.05 seconds of execution time to kill the buggy real-time task.

Back to the point: the documentation says that if real-time tasks are not prioritized for scheduling, you can remove this time limit. The method is:

sysctl -w kernel.sched_rt_runtime_us=-1

I followed this, but it was still ineffective!

Could it be an issue with the computer environment? So, I tested the code on another virtual machine Ubuntu14.04 on a laptop.

During compilation, there was a small issue, prompting an error:

error: ‘for’ loop initial declarations are only allowed in C99 mode

Just add the C99 standard to the compilation command:

gcc -o test test.c -lpthread -std=c99

Executing the program, the printed information is as follows:

====> thread_index = 2

====> thread_index = 1

thread_index 1: SCHED_FIFO

thread_index 1: priority = 51

thread_index 2: SCHED_FIFO

thread_index 2: priority = 52

thread_index 1: num = 0

thread_index 2: num = 0

thread_index 2: num = 1

thread_index 1: num = 1

thread_index 2: num = 2

thread_index 1: num = 2

thread_index 2: num = 3

thread_index 1: num = 3

thread_index 2: num = 4

thread_index 1: num = 4

thread_index 2: num = 5

thread_index 1: num = 5

thread_index 2: num = 6

thread_index 1: num = 6

thread_index 2: num = 7

thread_index 1: num = 7

thread_index 2: num = 8

thread_index 1: num = 8

thread_index 2: num = 9

thread_index 2: exit

====> thread_index = 4

thread_index 4: SCHED_OTHER

thread_index 4: priority = 0

thread_index 1: num = 9

thread_index 1: exit

====> thread_index = 3

thread_index 3: SCHED_OTHER

thread_index 3: priority = 0

thread_index 3: num = 0

thread_index 4: num = 0

thread_index 3: num = 1

thread_index 4: num = 1

thread_index 3: num = 2

thread_index 4: num = 2

thread_index 3: num = 3

thread_index 4: num = 3

thread_index 3: num = 4

thread_index 4: num = 4

thread_index 3: num = 5

thread_index 4: num = 5

thread_index 3: num = 6

thread_index 4: num = 6

thread_index 3: num = 7

thread_index 4: num = 7

thread_index 3: num = 8

thread_index 4: num = 8

thread_index 3: num = 9

thread_index 3: exit

thread_index 4: num = 9

thread_index 4: exit

Threads 1 and 2 executed simultaneously, and after that, threads 3 and 4 executed.

However, this also does not conform to expectations: thread 2 has a higher priority than thread 1, so it should execute first!

I didn’t know how to investigate this issue, and could only consult the Linux kernel experts, who suggested checking the kernel version.

At this point, I remembered that on the Ubuntu16.04 virtual machine, I had downgraded the kernel version for some reason.

I checked this direction, and ultimately confirmed that it was not the difference in kernel versions that caused the problem.

So I had to go back and look at the differences in the printed information from these two tests:

In Ubuntu16.04 on my work computer: all 4 threads were scheduled and executed simultaneously, and the scheduling policy and priority did not take effect;

In Ubuntu14.04 on my laptop: threads 1 and 2 real-time tasks were executed first, indicating that the scheduling policy took effect, but the priority did not take effect;

Suddenly, the CPU affinity popped into my mind!

Immediately, I felt where the problem lay: this is most likely an issue caused by multicore!

So I bound all 4 threads to CPU0, which means setting CPU affinity.

At the beginning of the thread entry function thread_routine, I added the following code:

cpu_set_t mask;

int cpus = sysconf(_SC_NPROCESSORS_CONF);

CPU_ZERO(&mask);

CPU_SET(0, &mask);

if (pthread_setaffinity_np(pthread_self(), sizeof(mask), &mask) < 0)

{

printf("set thread affinity failed! \n");

}

Then I continued to verify in the Ubuntu16.04 virtual machine, and the printed information was perfect, completely conforming to expectations:

====> thread_index = 1

====> thread_index = 2

thread_index 2: SCHED_FIFO

thread_index 2: priority = 52

thread_index 2: num = 0

。。。

thread_index 2: num = 9

thread_index 2: exit

thread_index 1: SCHED_FIFO

thread_index 1: priority = 51

thread_index 1: num = 0

。。。

thread_index 1: num = 9

thread_index 1: exit

====> thread_index = 3

thread_index 3: SCHED_OTHER

thread_index 3: priority = 0

====> thread_index = 4

thread_index 4: SCHED_OTHER

thread_index 4: priority = 0

thread_index 3: num = 0

thread_index 4: num = 0

。。。

thread_index 4: num = 8

thread_index 3: num = 8

thread_index 4: num = 9

thread_index 4: exit

thread_index 3: num = 9

thread_index 3: exit

Thus, the truth of the problem became clear: it was caused by the multicore processor!

Moreover, the two testing virtual machines had different CPU core allocations during installation, which led to the different printed results.

Finally, let’s confirm the CPU information in these 2 virtual machines:

Ubuntu 16.04 cpuinfo information:

$ cat /proc/cpuinfo

processor : 0

vendor_id : GenuineIntel

cpu family : 6

model : 158

model name : Intel(R) Core(TM) i5-8400 CPU @ 2.80GHz

stepping : 10

cpu MHz : 2807.996

cache size : 9216 KB

physical id : 0

siblings : 4

core id : 0

cpu cores : 4

。。。other information

processor : 1

vendor_id : GenuineIntel

cpu family : 6

model : 158

model name : Intel(R) Core(TM) i5-8400 CPU @ 2.80GHz

stepping : 10

cpu MHz : 2807.996

cache size : 9216 KB

physical id : 0

siblings : 4

core id : 1

cpu cores : 4

。。。other information

processor : 2

vendor_id : GenuineIntel

cpu family : 6

model : 158

model name : Intel(R) Core(TM) i5-8400 CPU @ 2.80GHz

stepping : 10

cpu MHz : 2807.996

cache size : 9216 KB

physical id : 0

siblings : 4

core id : 2

cpu cores : 4

。。。other information

processor : 3

vendor_id : GenuineIntel

cpu family : 6

model : 158

model name : Intel(R) Core(TM) i5-8400 CPU @ 2.80GHz

stepping : 10

cpu MHz : 2807.996

cache size : 9216 KB

physical id : 0

siblings : 4

core id : 3

cpu cores : 4

。。。other information

In this virtual machine, there are exactly 4 cores, and my test code created exactly 4 threads, so each core was assigned a thread, and none were idle, executing simultaneously.

Therefore, the printed information shows that 4 threads are executing in parallel.

At this point, no scheduling policy or priority takes effect! (To be precise: the scheduling policy and priority take effect within the CPU where the thread is located.)

If I had created 10 threads in the test code from the beginning, I might have discovered the problem even faster!

Now let’s look at the CPU information in the virtual machine Ubuntu14.04 on my laptop:

$ cat /proc/cpuinfo

processor : 0

vendor_id : GenuineIntel

cpu family : 6

model : 142

model name : Intel(R) Core(TM) i5-7360U CPU @ 2.30GHz

stepping : 9

microcode : 0x9a

cpu MHz : 2304.000

cache size : 4096 KB

physical id : 0

siblings : 2

core id : 0

cpu cores : 2

。。。other information

processor : 1

vendor_id : GenuineIntel

cpu family : 6

model : 142

model name : Intel(R) Core(TM) i5-7360U CPU @ 2.30GHz

stepping : 9

microcode : 0x9a

cpu MHz : 2304.000

cache size : 4096 KB

physical id : 0

siblings : 2

core id : 1

cpu cores : 2

。。。other information

In this virtual machine, there are 2 cores, so threads 1 and 2 real-time tasks are executed first (because there are 2 cores executing simultaneously, the priority of these 2 tasks is not very meaningful), and after they finish, threads 3 and 4 are executed.

After this round of testing, I really want to hit my head with the keyboard, why didn’t I consider the multicore factor earlier?!

The deeper reasons are:

Many previous projects were on single-core situations like ARM, MIPS, STM32, and my fixed thinking did not allow me to realize the multicore factor earlier;

Some x86 platform projects I have worked on did not involve real-time task requirements. Generally, the default scheduling policy of the system is used, which is also an important indicator of Linux x86 as a general-purpose computer: to allow every task to use CPU resources fairly.

As the x86 platform gradually applies in the industrial control field, the issue of real-time performance becomes more prominent and important.

Thus, there are intime in the Windows system, and preempt, xenomai and other real-time patches in the Linux system.

Recommended Reading

【1】C Language Pointers – From Basic Principles to Various Techniques, Explained Thoroughly with Diagrams and Code【2】Step-by-Step Analysis – How to Implement Object-Oriented Programming in C【3】It Turns Out the Underlying Debugging Principles of GDB are So Simple【4】Is Inline Assembly Scary? After Reading This Article, End It!【5】It is said that software architecture should be layered and modular, but how should it be done specifically?