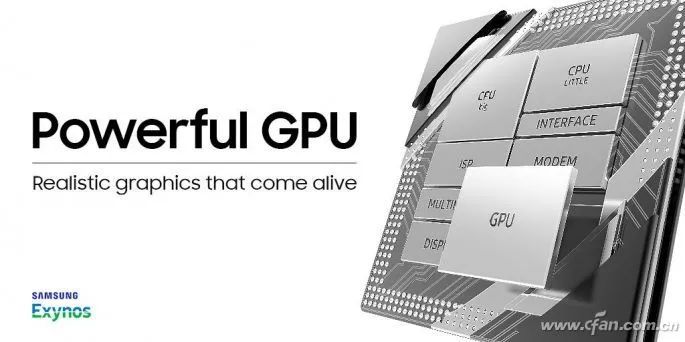

With the rise of competitive mobile games, smartphones face increasingly demanding requirements for 3D rendering and compute performance. At the same time, 4K ultra-high-definition recording, on-device video editing, and AR and VR entertainment all lean heavily on the GPU (as well as the CPU and ISP). In other words, now that CPU performance is largely sufficient, an arms race on the GPU side is unavoidable. So which smartphone GPUs deserve our attention?

Let’s Start with the Partnership Between Samsung and AMD

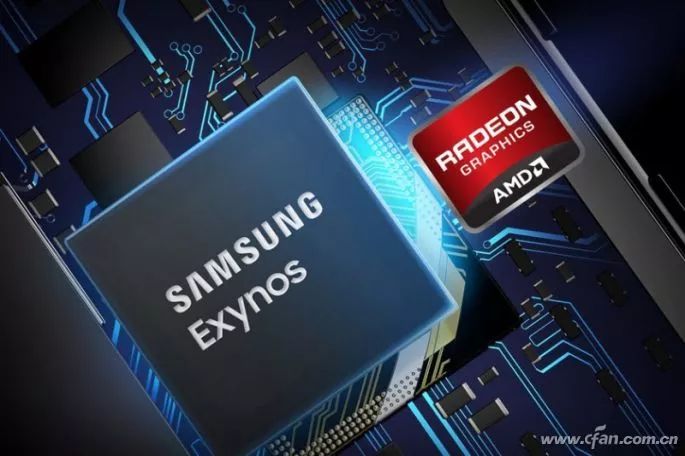

In early June 2019, AMD and Samsung made a significant announcement for the mobile industry: a multi-year strategic partnership. Samsung will license AMD Radeon graphics IP and focus on advancing the graphics technologies and solutions that are crucial to innovation in mobile devices (limited to smartphones and tablets, in markets where AMD does not compete). Few official details have been released, but it is confirmed that AMD will license its “highly scalable RDNA graphics architecture” to Samsung.

In simple terms, RDNA (Radeon DNA) is the latest GPU architecture AMD has launched for graphics cards. It succeeds “GCN” (2011-2019), one of the longest-lived GPU architectures in history, and brings changes to the compute units (CUs), caches, and pipelines. It is positioned to offer better performance (for modern gaming workloads), energy efficiency (optimized power consumption and bandwidth utilization), functionality (a stronger surrounding ecosystem), and scalability (serving mobile, desktop, and cloud). RDNA is a completely redesigned architecture, and it marks the beginning of the fifth major architectural era in AMD’s graphics history.

Will Samsung’s future Exynos (Orion) mobile platform directly integrate GPUs based on the RDNA architecture?

The answer is almost certainly no. AMD has little experience in the ARM ecosystem (it once ventured into ARM servers but soon abandoned the effort), and the core requirement for a GPU integrated into a mobile SoC is low power consumption and high energy efficiency, which AMD’s existing GPU technology clearly does not meet.

In fact, Samsung has been developing its own CPUs and GPUs in-house for years. On the CPU side, it developed the “Mongoose” cores (such as the Exynos M3 and M4) based on the ARM instruction set and earned a considerable reputation with mobile platforms such as the Exynos 9810 and Exynos 9820.

On the GPU side, Samsung reportedly began its in-house “S-GPU” project as early as 2012. This collaboration only requires integrating some of AMD Radeon’s graphics IP into Samsung’s GPU; it will not replicate the RDNA architecture in full. The cooperation also covers patent licensing to head off potential legal disputes in the future, and MediaTek is a good cautionary tale here.

At MWC 2015, there were reports that MediaTek had reached a cooperation agreement with AMD on mobile SoC graphics, but the news was never officially confirmed. In early 2019, AMD sued MediaTek, claiming that MediaTek’s smart devices infringed several of its APU- and GPU-related patents. Notably, MediaTek’s SoCs all integrate GPUs from ARM or Imagination.

With AMD graphics IP on board, Samsung can concentrate on refining its self-developed “Mongoose” CPU cores, while AMD’s reputation in the PC world helps Samsung stand apart from Qualcomm, Huawei, and MediaTek: “Look, I’ve integrated an AMD GPU!” Meanwhile, pairing Samsung’s Exynos CPUs with a GPU carrying AMD RDNA genes could also shake up the handheld game console market represented by the Nintendo Switch. Can Samsung + AMD replace NVIDIA’s Tegra X1 and its successors in handheld consoles? Let’s wait and see.

The Story of Qualcomm and AMD

The Adreno GPU integrated into Qualcomm’s Snapdragon mobile platforms is probably the strongest in the Android smartphone field and the only one that can compete with the GPUs in Apple’s A-series chips of the same period. But did you know that Adreno, Qualcomm’s “self-developed” GPU, also carries AMD “blood”?

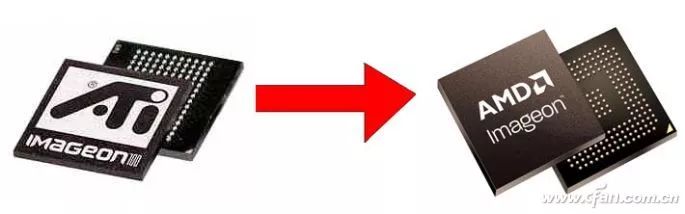

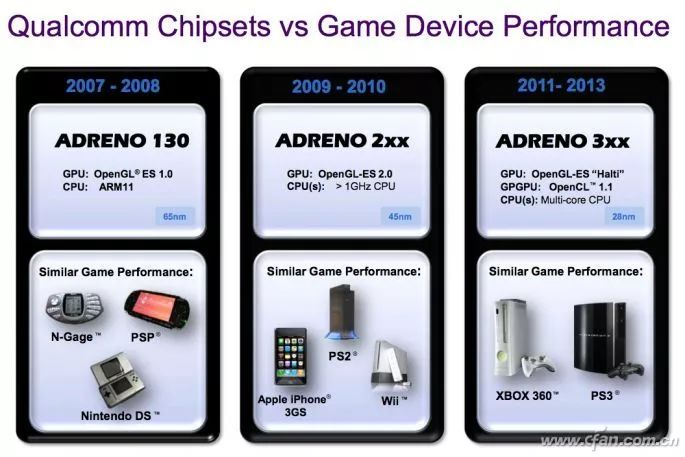

Adreno actually traces back to ATI’s Imageon series of low-power GPUs, launched in 2002 and originally used in the Zodiac handheld console, which ran Palm OS. After AMD acquired ATI, it packaged the related mobile device assets and sold them to Qualcomm in early 2009. Qualcomm thereby obtained ATI’s vector graphics and 3D graphics technology and the related intellectual property, and built the familiar Adreno GPU on that foundation.

If AMD had not sold the former ATI Imageon business, and Intel had not sold XScale (which held a full StrongARM and ARM architecture license) along with its handheld chip business, these two x86 giants might have achieved far greater success in the mobile internet era.

How to Judge the Strength of an Adreno GPU

Qualcomm’s Snapdragon platforms hold a large share of the Android smartphone market, and Adreno is both familiar and unfamiliar to us. Familiar, because every time Qualcomm releases a new Snapdragon platform, the media emphasize that it integrates an Adreno xxx GPU that improves significantly on the previous generation. Unfamiliar, because Adreno models are so numerous and chaotic that it is hard to judge the relative performance of Adreno GPUs within the same generation from their names alone.

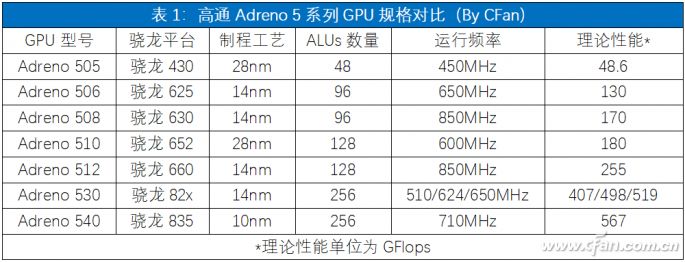

This is particularly evident during the Adreno 5 series GPU period (see the table below).

For example, the Adreno 506 (Snapdragon 625) is numbered only one higher than the Adreno 505 (Snapdragon 430), yet it performs nearly twice as well. The Adreno 508 (Snapdragon 630) is numbered two higher than the Adreno 506, but its performance improves by only 30%. The numbering follows no consistent pattern.

To make sense of this, we need to know the key parameters that govern Adreno GPU performance. Setting aside the core architecture, rendering method, and supported graphics APIs, Adreno performance is constrained mainly by the process technology, the number of ALUs (arithmetic logic units), and the GPU clock frequency.

The ALUs in an Adreno GPU can be thought of as the equivalent of the “stream processors” in PC graphics cards: within the same architecture, more ALUs mean higher performance. Unfortunately, Qualcomm has never publicly disclosed ALU counts for Adreno GPUs; the figures in this article and elsewhere online all come from user testing and should be treated as reference only.

For GPUs, a more advanced process allows a higher clock frequency within the same thermal and power envelope, which also benefits performance considerably. The large jump from the Adreno 505 to the Adreno 506 comes from both a greater number of ALUs and the higher clock enabled by a process upgrade. Likewise, the gaps between the Adreno 508 and Adreno 512, and between the Adreno 512 and Adreno 530, are shaped by the same factors.
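
The interplay of ALU count and clock described above is often summarized as a theoretical peak throughput. The sketch below is a minimal illustration, assuming each ALU completes one fused multiply-add (two floating-point operations) per clock, a common convention for peak-FLOPS figures but not something Qualcomm has confirmed for Adreno. The function name `peak_gflops` and all clock values are hypothetical; the 512-ALU figure is the community estimate cited in this article.

```python
# Theoretical peak FP32 throughput of a GPU, as a first-order estimate.
# Assumption: each ALU retires one fused multiply-add (2 FLOPs) per clock.


def peak_gflops(alus: int, freq_mhz: float, flops_per_alu_per_clock: int = 2) -> float:
    """Return theoretical peak GFLOPS for an ALU count and a clock in MHz."""
    return alus * flops_per_alu_per_clock * freq_mhz / 1000.0


if __name__ == "__main__":
    # Hypothetical clocks; 512 ALUs is the community estimate for Adreno 630,
    # not a Qualcomm specification.
    configs = {
        "512 ALUs @ 700 MHz": (512, 700),
        "256 ALUs @ 700 MHz": (256, 700),
        "256 ALUs @ 850 MHz": (256, 850),  # same ALUs, higher clock from a newer process
    }
    for name, (alus, mhz) in configs.items():
        print(f"{name}: {peak_gflops(alus, mhz):.1f} GFLOPS")
```

Peak figures like these ignore memory bandwidth, drivers, and thermal throttling, so they are only useful for ranking GPUs within the same architecture.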

Adreno has now fully entered the Adreno 6 series era, whose headline feature is complete support for graphics and compute APIs such as OpenCL 2.0 FP (Full Profile), OpenGL ES 3.2, DX12, and Vulkan 1.1.

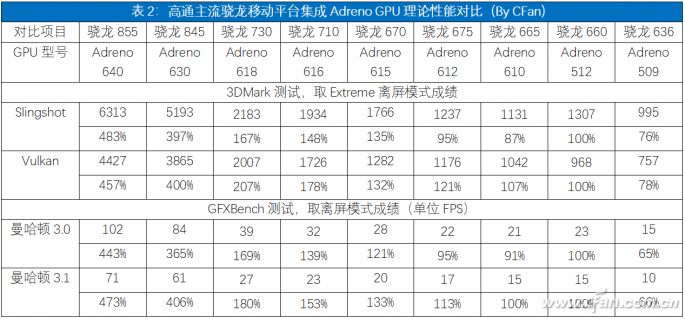

Unfortunately, I could not find accurate ALU counts or clock frequencies for these GPUs, so this article compares only the synthetic benchmark performance of Adreno 6 series GPUs, using two professional benchmarks, 3DMark and GFXBench (see the table below).

Taking the Adreno 512 in the Snapdragon 660 as the baseline (100%) makes the differences among Adreno 6 series GPUs easier to see. The Adreno 610 and Adreno 612, despite their higher model numbers, deliver overall performance not much different from the Adreno 512. Performance rises modestly from the Adreno 615 to the Adreno 618, with the differences stemming from different combinations of ALU count and GPU clock.
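
Normalizing scores against a baseline GPU is simple arithmetic. The sketch below assumes the index is the average of per-benchmark score ratios; the article does not say how the 3DMark and GFXBench results were combined, and every score and GPU name here is a hypothetical placeholder rather than a measured result.

```python
# Express benchmark scores relative to a baseline GPU (baseline = 100%).
# All scores below are hypothetical placeholders, not measured results.
from statistics import mean


def relative_index(scores: dict[str, float], baseline: dict[str, float]) -> float:
    """Average the per-benchmark ratios against the baseline, as a percentage."""
    ratios = [scores[test] / baseline[test] for test in baseline]
    return 100.0 * mean(ratios)


baseline_512 = {"3DMark": 1000.0, "GFXBench": 20.0}  # hypothetical Adreno 512 scores
candidates = {
    "GPU A": {"3DMark": 1050.0, "GFXBench": 21.0},
    "GPU B": {"3DMark": 1500.0, "GFXBench": 32.0},
}

for name, scores in candidates.items():
    print(f"{name}: {relative_index(scores, baseline_512):.0f}%")
```

Averaging ratios, rather than raw scores, keeps a benchmark with large absolute numbers from dominating the index.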

The Snapdragon 845 (Adreno 630), Qualcomm’s 2018 flagship, outperforms the newer Snapdragon 730 (Adreno 618) in 3D because the Adreno 630 integrates up to 512 ALUs, whereas the Snapdragon 730 likely has fewer than 256 (sorry, I could not find the exact figure).

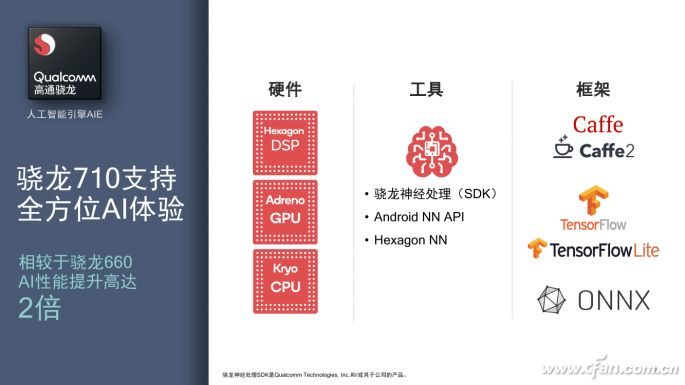

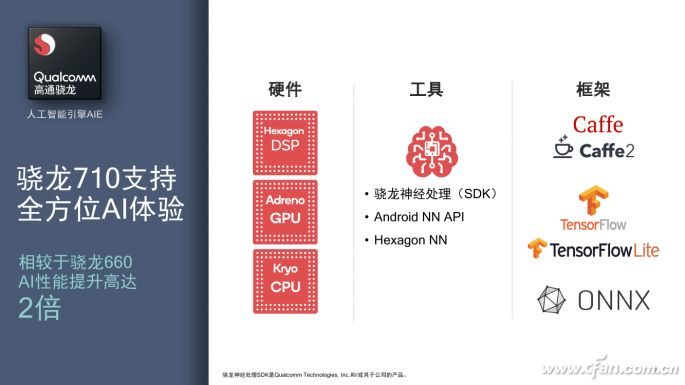

Note that since the second half of 2018, Qualcomm’s latest Snapdragon platforms have strengthened AI computing: some of the GPU’s ALUs work together with the HVX (Hexagon Vector eXtensions) units in the Hexagon DSP and with the CPU to form Qualcomm’s Snapdragon AI Engine.

In other words, on the latest Snapdragon platforms, more ALUs do not translate into a proportional performance gain. Take the Snapdragon 855: Qualcomm stated that, compared with the Snapdragon 845, it has 50% more arithmetic logic units, which would raise the ALU count from 512 to 768. Yet judging by the actual performance gap between the Adreno 640 and Adreno 630, the extra 50% of ALUs yields only about a 20% gain, suggesting that many of them are likely allocated to AI computing. Testing and analysis by some professionals indicate that half (256) of the Adreno 630’s 512 ALUs serve heterogeneous AI computing, compensating for the Snapdragon platform’s lack of a dedicated NPU.
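
The reasoning in this paragraph can be made explicit with a deliberately naive model. It assumes graphics performance scales linearly with ALU count and that clocks and everything else stay equal between the Adreno 630 and Adreno 640; neither assumption is confirmed by Qualcomm, and `effective_alus` is a hypothetical helper, so treat the result as a back-of-the-envelope check, not a measurement.

```python
# Naive estimate of how many of Adreno 640's ALUs "show up" in graphics performance.
# Assumptions (illustrative only): graphics performance scales linearly with ALU
# count, clocks are equal, and nothing else changed between Adreno 630 and 640.


def effective_alus(base_alus: int, observed_gain: float) -> float:
    """ALU count a purely linear model needs to explain the observed gain."""
    return base_alus * (1.0 + observed_gain)


base = 512   # Adreno 630 (community estimate quoted in the article)
new = 768    # Adreno 640: base + 50%, per Qualcomm's stated ALU increase
gain = 0.20  # ~20% graphics gain cited in the article

needed = effective_alus(base, gain)
print(f"linear model needs ~{needed:.0f} ALUs; ~{new - needed:.0f} unexplained")
```

Because clock speeds, memory bandwidth, and drivers also differ between the two GPUs, the “unexplained” ALUs are consistent with the AI-allocation hypothesis but do not prove it.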

In summary, the most capable GPUs in the Snapdragon lineup are undoubtedly those in the Snapdragon 845 and Snapdragon 855, and there is also a significant GPU gap between the Snapdragon 7 series and 6 series. If you want a phone that runs most games at full frame rate, you really need a Snapdragon 8 series device. As for the Snapdragon 6 series: now that Snapdragon 710 phones have dropped to the thousand-yuan price range, it is best avoided unless you rarely play games.

The Grievances Between Apple and Imagination

In the smartphone field today, the only chip designers building their own GPUs are Qualcomm and Apple. Qualcomm’s Adreno originated from AMD, while Apple’s GPUs have their roots in Imagination Technologies.

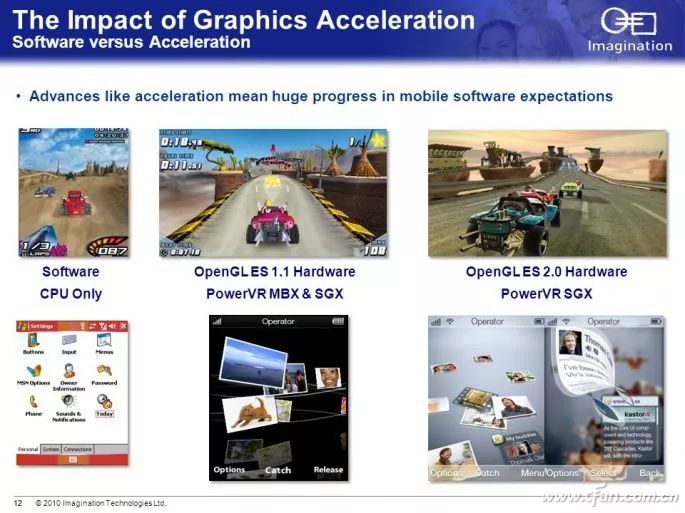

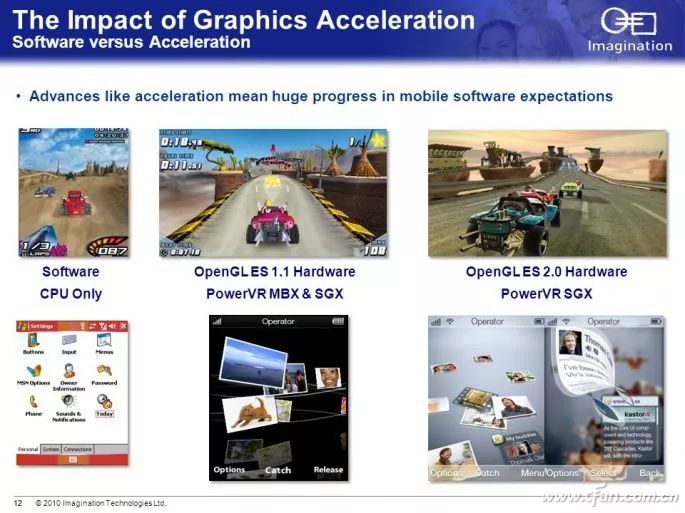

Imagination was also one of the earliest companies to enter the GPU market, but to avoid competing head-on with NVIDIA and ATI, it concentrated on ultra-low-power mobile GPUs. Its PowerVR MBX GPU, launched in 2001, was integrated into SoCs from Intel, Freescale, Texas Instruments, and Samsung, powering classic devices such as the first-generation iPhone, the Nokia N95, and the Dell Axim X50v PDA.

The fifth-generation PowerVR SGX series (such as the SGX530 and SGX535) is probably Imagination’s best-known GPU line. Apple’s A4, Samsung’s Exynos, and Texas Instruments’ OMAP3 series SoCs were all customers, and Sony’s PS Vita handheld integrated a quad-core PowerVR SGX543MP4+. PowerVR subsequently became Apple’s near-“exclusive” GPU; other brands (such as MediaTek, Allwinner, Rockchip, and Amlogic) adopted it occasionally, but mostly as low-end models, and received far less attention than Imagination’s “golden sponsor” Apple. Yet this very over-reliance on Apple sowed the seeds of Imagination’s decline.

The Apple A10 can be considered the last collaboration between Apple and Imagination. Its integrated PowerVR GT7600 GPU could even outperform the contemporaneous Adreno 540 (Snapdragon 835), making the iPhone 7 series the most powerful smartphones of that year (in fact, nearly every iPhone generation has been the strongest of its time).

Unfortunately, starting with the A11, Apple officially dropped Imagination in favor of a self-developed GPU configured with 6 shader cores, every 2 of which share a texture unit. Because this design resembles PowerVR, many enthusiasts claim that Apple’s GPU rose on Imagination’s back. Once news of Apple’s in-house GPU broke, Imagination quickly fell into an operational crisis. Today, only a few SoCs, such as MediaTek’s Helio P60 (PowerVR GM9446), P35, and P22 (PowerVR GE8320), still integrate PowerVR GPUs, and Imagination has gone from sole ruler of the iOS ecosystem to an “other” in the GPU field, which is a sad state of affairs.

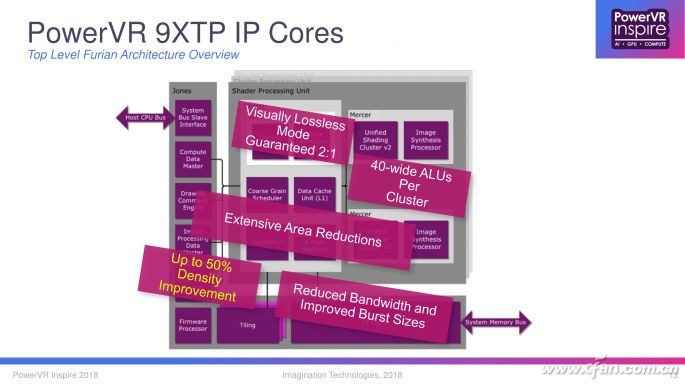

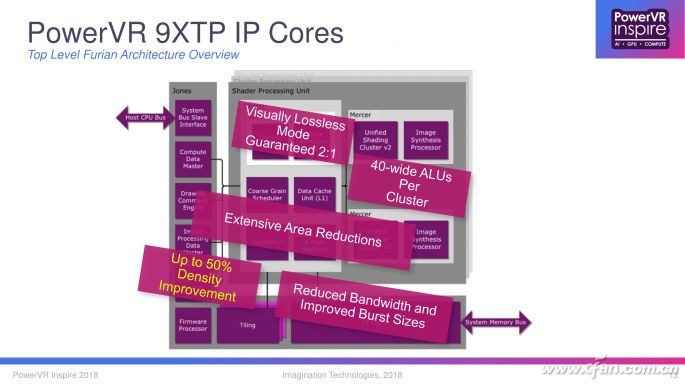

Imagination’s latest GPU solutions are the PowerVR 9XEP, 9XMP, and 9XTP (in ascending order of positioning). The 9XEP and 9XMP use the older Rogue architecture and mainly compete with ARM’s Mali-G72. The 9XTP, by contrast, is built on the newer Furian architecture, widens the ALUs to 40 pipelines, and supports advanced features such as 4K at 120 FPS, HDR, the Vulkan API, and the Android Neural Networks API, theoretically allowing it to compete with ARM’s Mali-G76 and Mali-G77.

ARM’s “Favorite Son” Mali GPU

Next comes ARM’s own “favorite son,” the Mali GPU series. As the companion to the Cortex-A CPU architectures, Mali is the second most popular GPU in the Android smartphone market after Qualcomm’s Adreno. Most of the non-Qualcomm SoCs we know, such as HiSilicon Kirin, Samsung Exynos, and MediaTek Helio, pair their CPUs directly with Mali GPUs.

CFan explained the features of ARM’s latest Cortex-A77 CPU and Mali-G77 GPU in the article “The Biggest Regret of Kirin 990! What’s So Good About ARM Cortex-A77 Architecture?”, so this article will not repeat that material and will only briefly cover what makes Mali GPUs distinctive.

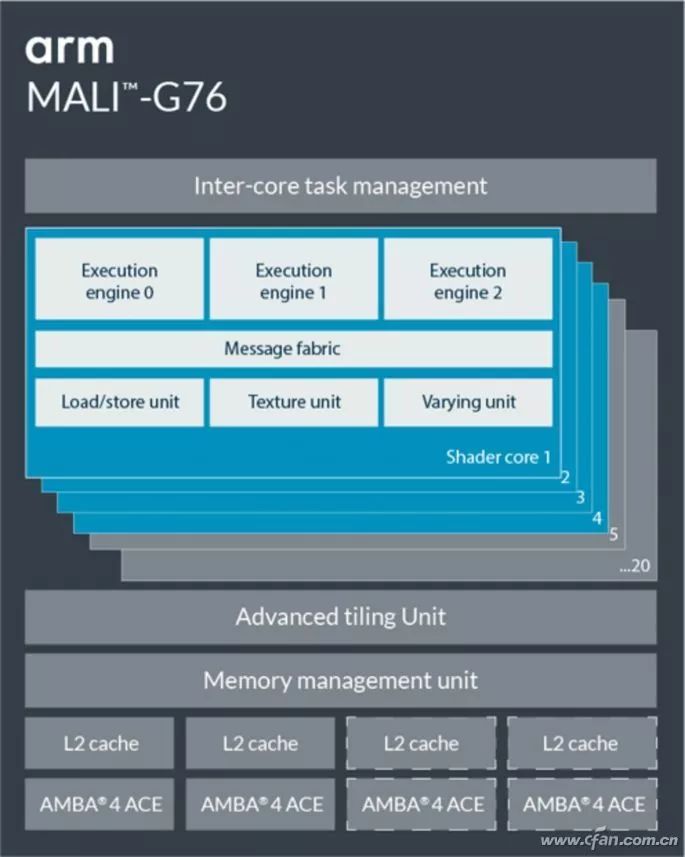

Compared with Adreno, Mali relies more on GPU core count: packing more cores into a larger GPU array is the key to raising Mali performance.

Mali-G76 Can Have Up to 20 Compute Cores

For example, in the Mali-G72 generation, the Kirin 970 integrated a 12-core Mali-G72MP12, and Samsung’s Exynos 9810 went further, cramming in 18 cores (Mali-G72MP18). However, more GPU cores mean more heat and higher power consumption, so SoC vendors typically lower GPU clocks and disable some cores to keep power in check.

In other words, Mali GPU core counts have a critical threshold. Beyond it, GPU clocks must come down, so performance no longer grows in step with core count (1+1<2).
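
The threshold effect can be illustrated with a toy power model. The sketch assumes a fixed GPU power budget, dynamic power per core proportional to f * V^2, and voltage roughly proportional to frequency, so per-core power grows with the cube of the clock. These are first-order textbook approximations, not Mali measurements; the function names and the budget of 12 units are hypothetical.

```python
# Toy model of why adding Mali cores eventually yields diminishing returns (1+1<2).
# Assumptions (first-order, illustrative): per-core dynamic power ~ f * V^2 with
# V roughly proportional to f, so per-core power ~ f^3; the GPU has a fixed power
# budget; clocks are normalized so f_max = 1.0 and one core at f_max draws 1 unit.


def sustained_freq(cores: int, power_budget: float, f_max: float = 1.0) -> float:
    """Highest normalized clock at which `cores` cores stay within the budget."""
    f_budget = (power_budget / cores) ** (1.0 / 3.0)
    return min(f_max, f_budget)


def relative_perf(cores: int, power_budget: float) -> float:
    """Throughput ~ cores x clock under the toy model."""
    return cores * sustained_freq(cores, power_budget)


BUDGET = 12.0  # hypothetical: enough for exactly 12 cores at full clock

for n in (6, 12, 18, 24):
    print(f"{n:2d} cores: clock {sustained_freq(n, BUDGET):.2f}, perf {relative_perf(n, BUDGET):.1f}")
```

Below the threshold every core runs at full clock and performance scales linearly; above it, the clock must drop, so doubling the cores from 12 to 24 yields well under twice the performance in this model.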

How to divide limited die area between Cortex-A CPUs and Mali GPUs, and how to size and clock the Mali GPU for the best energy efficiency, is therefore the final exam ARM has handed to SoC vendors.

In summary, Qualcomm Adreno and ARM Mali are the main players in the Android smartphone field today. Adreno specifications and performance are tied to the Snapdragon platform model, which makes them relatively easy to compare. Comparing Mali GPUs takes more effort, because the Mali model (such as Mali-G52, Mali-G72, or Mali-G76), the core count (the MPx suffix), and the CPU architecture and clock they are paired with must all be weighed to judge overall performance.
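
Since a Mali designation encodes the model and core count in one string, a small parser makes the naming convention concrete. This is a hypothetical helper, assuming names follow the “Mali-<model>MP<cores>” pattern described above; it reports a missing MP suffix as `None` rather than guessing, and it does not capture the paired CPU or clock, which still need to be looked up separately.

```python
import re
from typing import Optional, Tuple

# Split a Mali designation such as "Mali-G72MP12" into (model, core count).
# Assumption: names follow "Mali-<G|T><digits>" with an optional "MP<cores>" suffix.
MALI_NAME = re.compile(r"^Mali-(?P<model>[GT]\d+)(?:\s*MP(?P<cores>\d+))?$")


def parse_mali(name: str) -> Tuple[str, Optional[int]]:
    match = MALI_NAME.match(name.strip())
    if match is None:
        raise ValueError(f"not a recognized Mali designation: {name!r}")
    cores = match.group("cores")
    return match.group("model"), (int(cores) if cores else None)


for gpu in ("Mali-G72MP12", "Mali-G72MP18", "Mali-G76MP10", "Mali-G52"):
    print(gpu, "->", parse_mali(gpu))
```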

With Samsung preparing in-house GPUs built on licensed AMD IP, and Imagination winning support from more SoC vendors, the landscape is getting overwhelming, isn’t it? If you want the latest news and buying advice on mobile GPUs, stay tuned for CFan’s upcoming reports.
