First, use the RTC interrupt to keep time. I prefer a short interrupt period of about 125us, which is needed for decoding infrared remote control codes. RTC timing is quite accurate, so we should make the most of it.

Second, place three timers (the number can be adjusted) in the RTC interrupt service routine; they are essentially counters. My habit is to use 2ms, 5ms, and 500ms as the benchmark times that the whole system relies on, so they must be accurate; checking and adjusting them with an oscilloscope works well.

Third, place a dedicated time-handling subroutine in the main program loop. (Note: a microcontroller program does not stop; it runs continuously in a loop, which differs from what we learned in school; I was asked about this in an interview…) All time handling is done in this one subroutine, which is very convenient. A microcontroller system needs to handle at least 10 to 20 different time intervals and so requires 10 to 20 timers, many of which must run simultaneously and asynchronously. Handling each one separately would be quite troublesome.

Fourth, “programs run to wait, not stand still to wait”. This sounds a bit abstract; it means that while a delay elapses, the program keeps executing other code instead of waiting in place. An engineer I worked with once mentioned this concept, and I believe it is a fairly important idea in a time-slice system, hence the name.

Fifth, let the program speak for itself. Comments should be as detailed as possible, so that one can understand the code just by reading the comments.

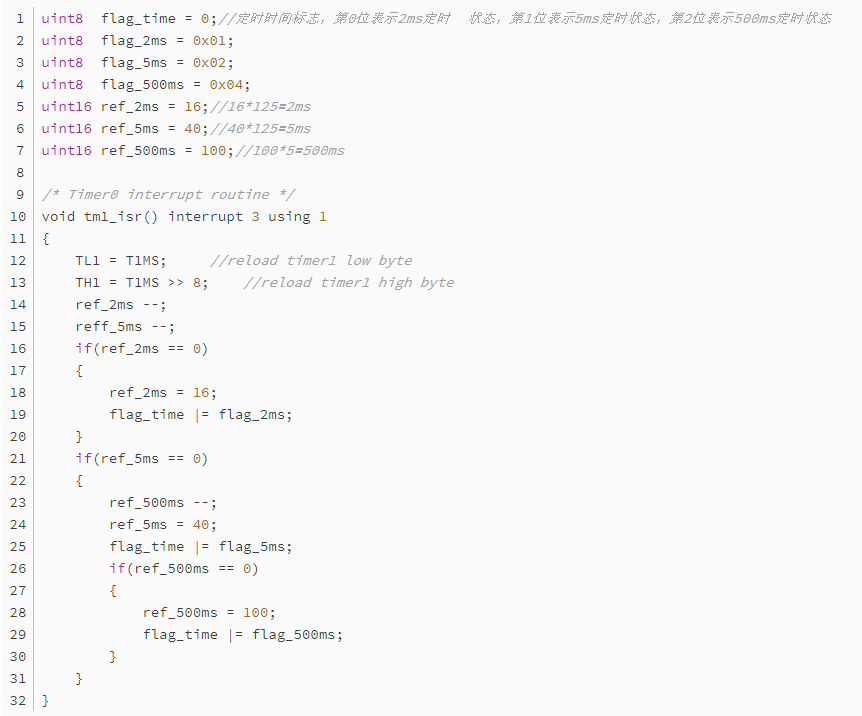

Interrupt Service Routine Part

The interrupt fires every 125us and generates several benchmark times.

(1) The ref_2ms register is decremented by 1 on every interrupt. After 16 decrements the elapsed time is 125us × 16 = 2ms; this is what we call a timer/counter. In this way, the system’s RTC interrupt can provide many of the timing intervals we need. (2) Set the 2ms timing-complete flag, which is provided for the time-handling program. This is the framework of one timer; the 5ms timer is identical.

This program also uses a block framework, which is quite convenient, although it is unrelated to today’s topic; I will write about it later when I feel like it. The program above is the timer portion of the interrupt service routine, timing 2ms, 5ms, and 500ms. When an interval completes, the overflow is recorded in the flag_time flag, and the program can read this flag to tell whether the specified interval has elapsed.
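Since the original listing survives only as an image, here is a minimal C sketch of the interrupt-side counters described above. The names `ref_2ms` and `flag_time` come from the text; `ref_5ms`, `ref_500ms`, the `FLAG_*` bit layout, and the function name `rtc_isr` are assumptions made for illustration, and how the function is attached to the real RTC interrupt vector depends on the chip and compiler.

```c
#include <stdint.h>

/* Reload values, assuming one RTC interrupt every 125 us. */
#define TICKS_2MS    16u     /*   16 x 125 us = 2 ms   */
#define TICKS_5MS    40u     /*   40 x 125 us = 5 ms   */
#define TICKS_500MS  4000u   /* 4000 x 125 us = 500 ms */

/* Bit positions inside flag_time (this layout is an assumption). */
#define FLAG_2MS     0x01u
#define FLAG_5MS     0x02u
#define FLAG_500MS   0x04u

/* Written by the ISR, read and cleared by the main loop. */
volatile uint8_t flag_time = 0;

static uint8_t  ref_2ms   = TICKS_2MS;
static uint8_t  ref_5ms   = TICKS_5MS;
static uint16_t ref_500ms = TICKS_500MS;

/* Hypothetical ISR body: call this once per 125 us RTC interrupt. */
void rtc_isr(void)
{
    if (--ref_2ms == 0) {           /* (1) count down 16 interrupts  */
        ref_2ms = TICKS_2MS;        /*     reload for the next round */
        flag_time |= FLAG_2MS;      /* (2) report "2 ms elapsed"     */
    }
    if (--ref_5ms == 0) {
        ref_5ms = TICKS_5MS;
        flag_time |= FLAG_5MS;
    }
    if (--ref_500ms == 0) {
        ref_500ms = TICKS_500MS;
        flag_time |= FLAG_500MS;
    }
}
```

The ISR only decrements, reloads, and sets a bit, so it stays short; everything that reacts to the flags runs in the main loop.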

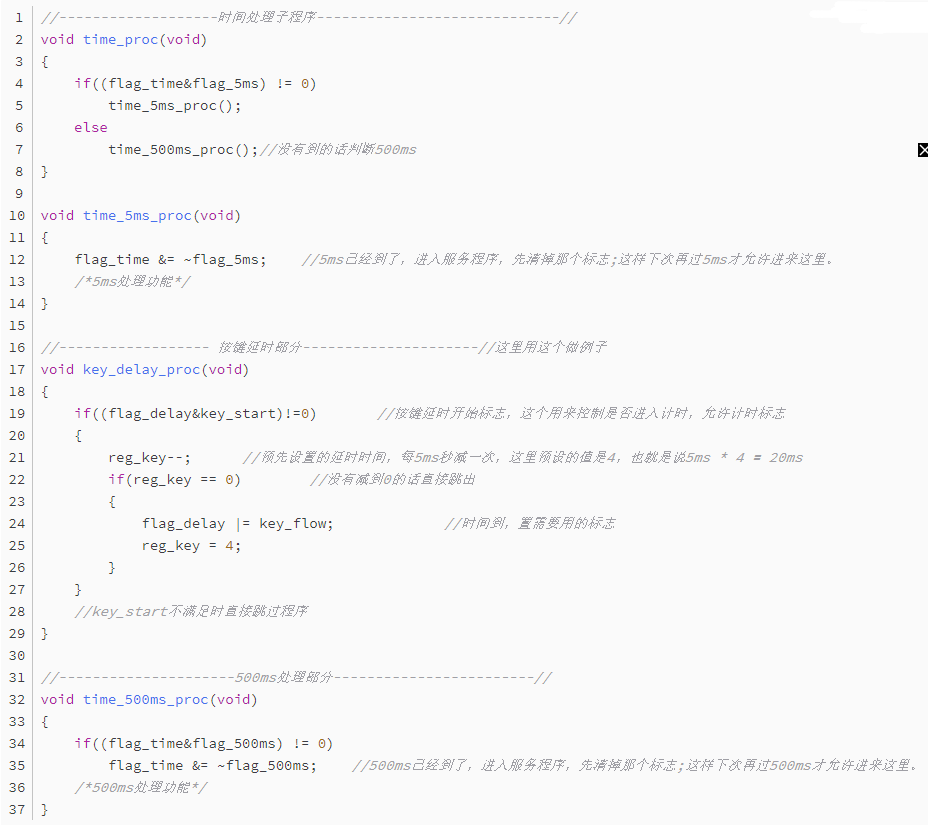

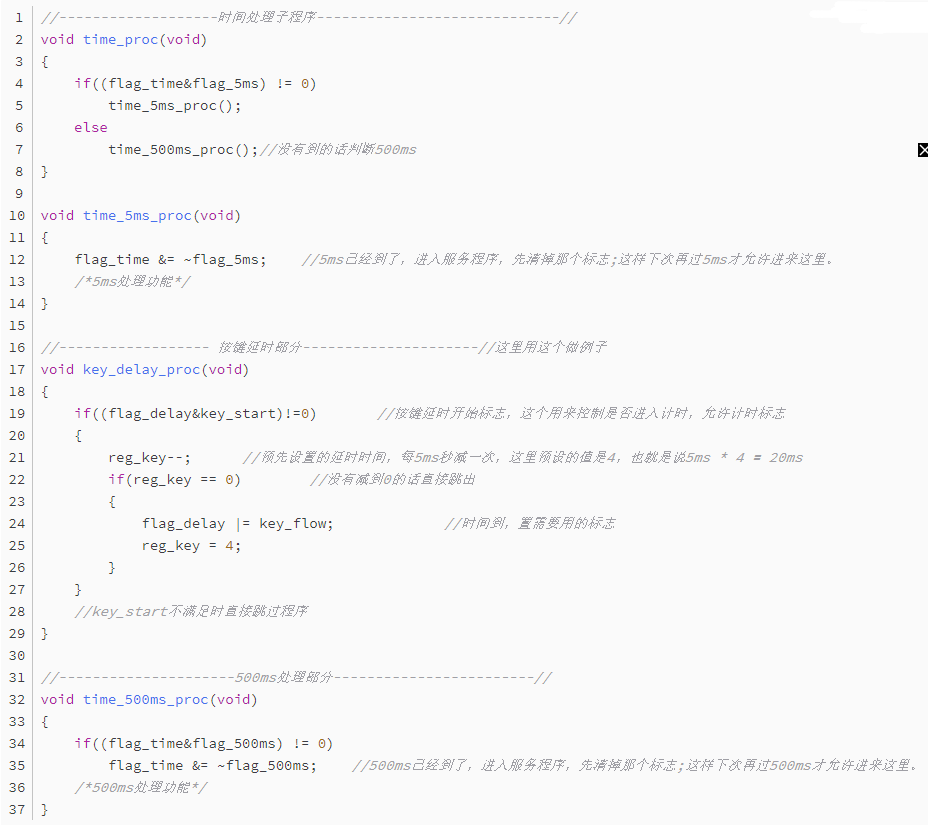

Now Let’s Look at the Unified Time Service Subroutine

The above uses a 20ms debounce timer for the key as an example. Once you understand it, you can see that we can copy that timer and place many more timers below it, each counting on the same 5ms tick. Whichever finishes first turns itself off and sets its flag for other programs to use, without affecting the other timers! We can therefore place many timers here; ten to twenty is generally no problem and fully meets the timing needs of a microcontroller system.

The structure of a single timer is quite simple: first check whether its enable flag is set, then increment or decrement a dedicated register by 1. When the count reaches the required time, the timer turns itself off and sets whatever flags are needed. At this point we are almost done; all the required timing intervals are conveniently available.

Now, let’s see what the microcontroller is doing during this time. The overflow flag becomes valid only when the interrupt count reaches 5ms or 500ms, and only then does the program enter the timer code above; the rest of the time it is performing other tasks. Even when it does enter a timer, it is clearly not stuck in a dead loop: it simply increments or decrements a register and exits. This takes very little time, about 5us to 20us, and does not affect the execution of the main program.

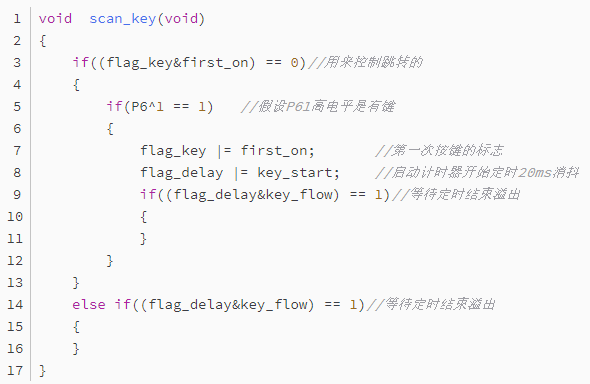
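The timer structure described above can be sketched in C roughly as follows. This is an illustration under stated assumptions, not the author's listing: `flag_time` and `flag_delay` are names from the text, while `time_service`, the `FLAG_*` bits, the `key_timer_*` variables, and the whole 10-second `lamp_*` timer are hypothetical, added only to show a second timer running on the 500ms base alongside the 20ms key timer.

```c
#include <stdbool.h>
#include <stdint.h>

#define FLAG_5MS    0x02u   /* assumed bit layout of flag_time */
#define FLAG_500MS  0x04u

volatile uint8_t flag_time;       /* set by the RTC ISR */

/* Timer 1: key debounce, 4 x 5 ms = 20 ms. */
#define KEY_DELAY_TICKS 4u
bool    key_timer_on;             /* enable flag, set by the key code */
uint8_t key_timer_cnt;
bool    flag_delay;               /* result: 20 ms have elapsed       */

/* Timer 2 (hypothetical): lamp timeout, 20 x 500 ms = 10 s. */
#define LAMP_OFF_TICKS 20u
bool    lamp_timer_on;
uint8_t lamp_timer_cnt;
bool    flag_lamp_off;

/* Called once per main-loop pass; returns at once if no tick is pending. */
void time_service(void)
{
    if (flag_time & FLAG_5MS) {
        /* On a real part this read-modify-write may need interrupts masked. */
        flag_time &= (uint8_t)~FLAG_5MS;

        if (key_timer_on && ++key_timer_cnt >= KEY_DELAY_TICKS) {
            key_timer_on  = false;    /* the timer turns itself off */
            key_timer_cnt = 0;
            flag_delay    = true;     /* and reports completion     */
        }
        /* further 5 ms timers follow the same pattern */
    }

    if (flag_time & FLAG_500MS) {
        flag_time &= (uint8_t)~FLAG_500MS;

        if (lamp_timer_on && ++lamp_timer_cnt >= LAMP_OFF_TICKS) {
            lamp_timer_on  = false;
            lamp_timer_cnt = 0;
            flag_lamp_off  = true;
        }
    }
}
```

Each timer costs one enable flag, one counter, and one result flag, so adding another timer means copying a few lines rather than touching the interrupt routine.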

Next, Let’s Look at How to Call This

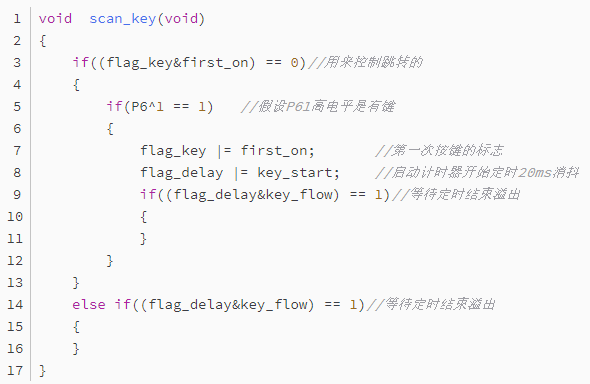

We previously discussed the debounce time handling for keys; now let’s see how to solve this issue using the methods introduced above.

Essentially, it works like this: check whether a key is pressed; if not, exit; if so, start the debounce timer. On later passes, the flags control the check of whether enough time has elapsed. Here we are waiting, but as mentioned earlier, we are running to wait, not standing still to wait. Compare this with dead-loop timing: what is the microcontroller doing before the 20ms is up? In a dead loop, it would wait in place, doing nothing. The program above, however, merely checks whether the time is up; the counting itself is handled in the unified time subroutine. If the time has not yet been reached, it exits and continues running other programs. Only once the time is up and the flags flag_delay and key_flow meet the conditions does the microcontroller start the key-handling program. In the meantime it is free to run other tasks; it simply checks once on each pass through the main loop.
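A minimal C sketch of this non-blocking key check might look like the following. `flag_delay` and `key_flow` are named in the text, but the meaning given to the `key_flow` values here is an assumption, and `key_scan`, `key_is_down`, `key_pin_pressed`, `key_timer_on`, and `key_events` are hypothetical names; on real hardware `key_is_down` would read the input pin, and the time service would set `flag_delay` after 20ms.

```c
#include <stdbool.h>
#include <stdint.h>

bool    key_timer_on;    /* asks the time service to start counting 20 ms */
bool    flag_delay;      /* set by the time service when 20 ms are up     */
uint8_t key_flow;        /* assumed: 0 idle, 1 debouncing, 2 wait release */
uint8_t key_events;      /* confirmed presses, for illustration           */

/* Hypothetical stand-in for reading the key's input pin. */
bool key_pin_pressed;
static bool key_is_down(void) { return key_pin_pressed; }

/* Called once per main-loop pass; never waits in place. */
void key_scan(void)
{
    switch (key_flow) {
    case 0:                          /* idle: look for a press          */
        if (key_is_down()) {
            flag_delay   = false;
            key_timer_on = true;     /* start the 20 ms debounce timer  */
            key_flow     = 1;
        }
        break;

    case 1:                          /* debouncing                      */
        if (!flag_delay)
            break;                   /* not yet: go run other tasks     */
        flag_delay = false;
        if (key_is_down()) {
            key_events++;            /* key handling would start here   */
            key_flow = 2;
        } else {
            key_flow = 0;            /* it was only a glitch            */
        }
        break;

    default:                         /* pressed: wait for release       */
        if (!key_is_down())
            key_flow = 0;
        break;
    }
}
```

For brevity the release edge is not debounced in this sketch; a second software timer of the same kind could cover it.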

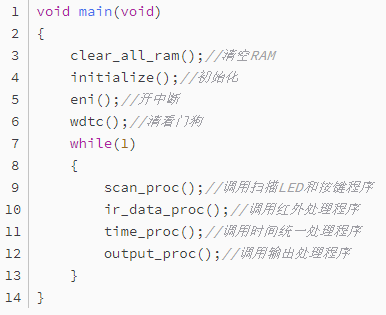

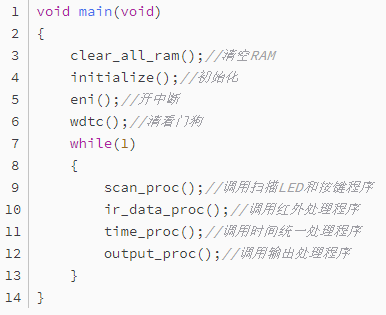

This is the loop body used. All functions are written as subroutines, making it convenient to attach them as needed. The microcontroller executes this loop continuously. If the entire program adopts the time-slice scanning approach described above, each pass through the loop is quite short, and it is somewhat similar to how a computer works, isn’t it?
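As a rough C illustration of such a loop body, the sketch below uses hypothetical stub tasks that only count their calls; `loop_pass`, `run_forever`, `display_update`, and the counters are assumptions, and in a real program the stubs would be the actual time service, key scan, and other subroutines.

```c
#include <stdint.h>

/* Hypothetical task stubs; each real task must return quickly. */
uint32_t time_calls, key_calls, display_calls;

static void time_service(void)   { time_calls++;    }  /* software timers */
static void key_scan(void)       { key_calls++;     }  /* key handling    */
static void display_update(void) { display_calls++; }  /* any other task  */

/* One pass through the loop body: every task gets one short turn. */
void loop_pass(void)
{
    time_service();
    key_scan();
    display_update();
    /* attach further subroutines here as needed */
}

/* What main() would call after initialization; it never returns. */
void run_forever(void)
{
    for (;;) {
        loop_pass();
    }
}
```

Because no task waits in place, the time for one pass is roughly the sum of the short task times, which is what keeps every task responsive.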

No matter how fast a single-core computer is, it does not process multiple tasks simultaneously; rather, it processes one at a time, cycling through them very quickly, giving us the impression that it is handling multiple programs at once. This is really the idea I want to express. With this approach behind it, programming microcontrollers becomes relatively easy; the remaining task is simply to focus on using code to realize our ideas. Of course, this is just one feasible method, not the only one.

Writing programs is an art; it is easy to write but challenging to write elegantly.