In recent years, a major theme in the AI market has been edge AI, or more specifically AI on end devices, and at the smallest scale TinyML. Competitors in this market include not only traditional MCU/MPU suppliers and IP providers but also many startups focused on edge AI chips.

The advantages of edge AI have been covered in many articles: it shifts part of the inference workload to local devices, which removes the need to exchange data with data centers or gateways and so saves power and cost; it avoids network latency and bandwidth problems; and it strengthens privacy protection, among other benefits.

However, we believe the fundamental reason edge AI will stay popular in the near future is that no market participant can ignore the number of edge devices and the size of the market. Just as the number of IoT devices is expected to reach the tens of billions, an edge AI market that takes off would be a significant revenue opportunity for every participant.

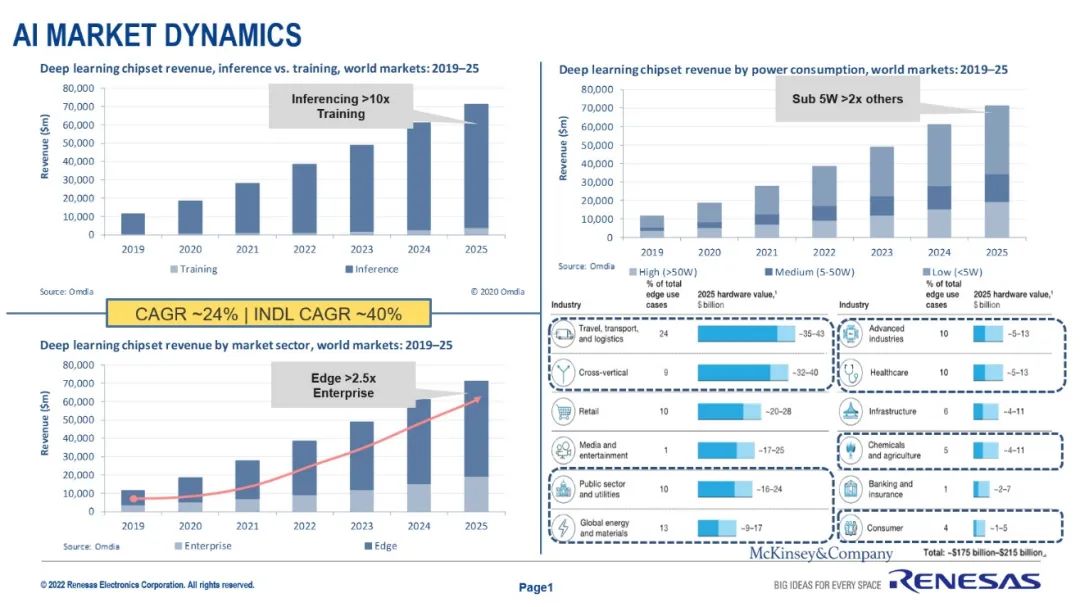

In a recent interview, Dr. Sailesh Chittipeddi, Executive Vice President of Renesas Electronics Group and head of the IoT and Infrastructure Business Unit, presented us with data from Omdia, shown in the accompanying figure.

By 2025, market revenue for deep learning chips used for inference is expected to reach ten times that of AI training chips. Among inference chips, those consuming less than 5 W are expected to bring in twice the revenue of the other categories; the <5 W class corresponds to AI chips used at the edge. The figure also shows how revenue changes for enterprise and edge AI chips.

Growth on this scale is certainly worth investing in. Selling AI chips, however, takes more than building good silicon.

Earlier this month, Renesas announced the acquisition of Reality Analytics, Inc. (Reality AI), an embedded AI solution provider. Although acquisitions are routine for Renesas, this is likely the first one it has made with AI technology as the explicit goal. Looking at Renesas’s AI technology strategy and its outlook for this market helps us better understand the market’s current state and potential.

(Note: In this article, the term “edge” will be narrowed down to edge devices, excluding components like edge data centers.)

Renesas is a typical example of a traditional MCU manufacturer building out edge AI technology; companies such as Infineon and TI are making similar moves. This suggests that these large multinational chip companies share a broadly similar view of the edge AI market, although each has its own focus.

When it comes to Renesas’s edge AI technology, the first thing that comes to mind is its e-AI/DRP (Dynamic Reconfigurable Processor) technology. Renesas has showcased this dedicated AI inference accelerator at many past technology exhibitions, and DRP-AI has been used in Renesas’s RZ/V series MPUs for the past two years.

However, Sailesh told us that DRP-AI is not the entirety of Renesas’s edge AI layout: “AI can be implemented in multiple ways. One method is using CPU + NPU (neural processing unit), which can also involve hardware combinations like CPU and FPGA. The second is to use only general MPUs (CPU/MCU), such as a 64-bit MPU for calculations. The third is DRP-AI.”

When we think of AI computation, we tend to think of dedicated neural network accelerator chips or units. In reality, general-purpose processors can also perform AI computation, albeit with relatively limited performance and power efficiency. On edge devices in particular, which are constrained by power, computing capability, and cost, most AI inference still runs on general-purpose processors. This is why, for example, Arm added the Helium vector processing unit to the Cortex-M55 to improve its DSP and ML capabilities; similarly, the Raspberry Pi Pico released last year, which is built on Cortex-M0+ cores, can run TensorFlow Lite Micro.

As TinyML rises, the edge AI ecosystem is also working to shrink AI models and lower their memory and compute requirements. Sailesh specifically noted that the AI models developed by Reality AI, combined with Renesas’s translator libraries, use Renesas’s general-purpose MCUs and MPUs as the computing platform. We return to this later.

By singling out DRP-AI as a separate direction for edge AI, Sailesh aims to show what is unique about Renesas’s approach. “DRP-AI, as a fully flexible option, is the solution we provide.” DRP-AI may well attract the most interest, since it is a technology Renesas has high hopes for. “We are confident about this. In the high-end robotics market, we believe that DRP-AI technology and some of our new products will play a very important role,” Sailesh said. “This field is worth watching, as there is a lot of interesting work on visual AI under way.”

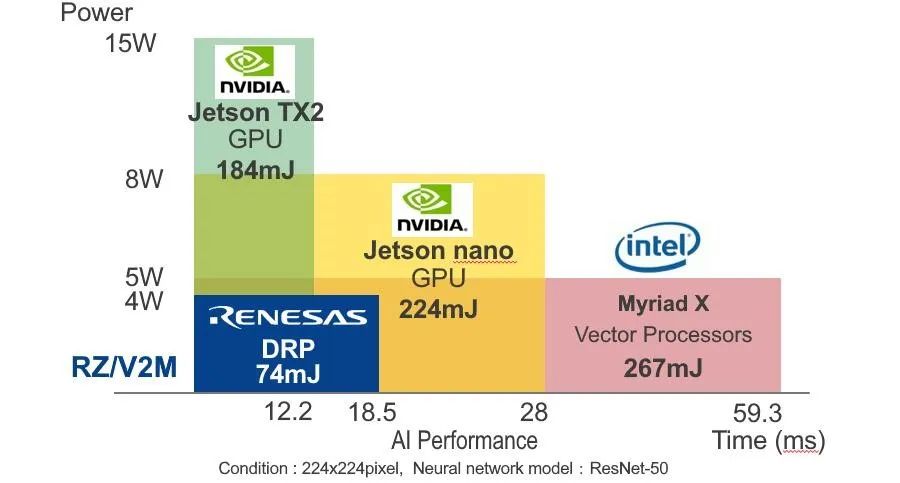

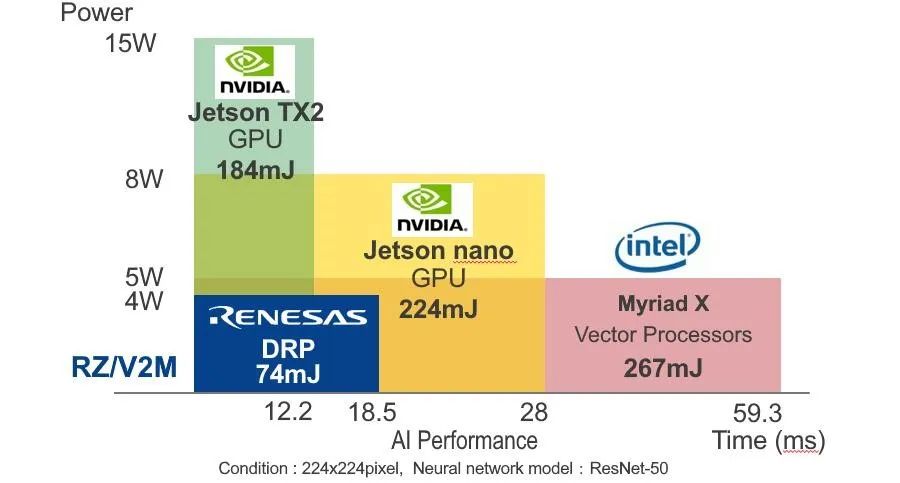

Over the past two years, Renesas has developed the DRP-AI accelerator and the DRP-AI translator software. Together they form a neural network solution aimed mainly at visual AI, for example predictive analytics in industrial production (such as using AI analysis to predict a motor failure before it occurs). Renesas has previously promoted DRP-AI as a typical feed-forward neural network acceleration solution with a significant efficiency advantage over other types of processors, including the NVIDIA Jetson Nano GPU and the Intel Myriad X vector processor.

Sailesh stated in the interview, “DRP-AI technology offers computing power from 0.5 TOPS to nearly 10 TOPS, and it operates at very low power consumption. This neural network can configure itself based on the load, optimizing itself in complex environments, making it very intelligent.” He added, “The appeal of DRP technology lies in its ability to adjust processor performance based on the information fed into it, making it a dynamically self-reconfiguring solution. Therefore, DRP has higher efficiency.”

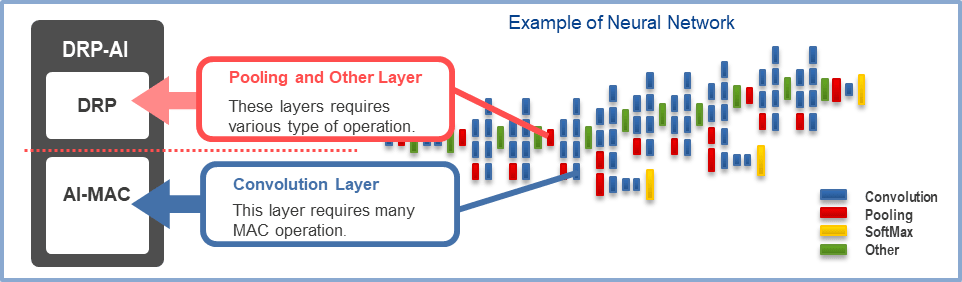

According to the current official DRP-AI introduction page, the accelerator consists of two parts: the AI-MAC, which handles the multiply-accumulate operations common in AI workloads, and the DRP reconfigurable processor, which handles preprocessing and pooling-layer tasks.

Summarized in four points, Sailesh sees the value of DRP-AI in (1) energy efficiency; (2) efficiency; (3) cost and flexibility (area, chip, and board area); and (4) dynamic reconfigurability.
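To make the two kinds of work in that split concrete, here is a minimal pure-Python sketch of the operation types involved: a convolution built from explicit multiply-accumulate steps, plus a preprocessing step and a max-pooling step. It only illustrates what these operations compute. It is not Renesas's implementation or the DRP-AI translator API, and the function names (`normalize`, `conv2d_mac`, `max_pool2d`) are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def normalize(image: Matrix, scale: float = 255.0) -> Matrix:
    """Preprocessing example: scale raw pixel values into the range [0, 1]."""
    return [[pixel / scale for pixel in row] for row in image]


def conv2d_mac(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution (no padding, stride 1) written as explicit MACs."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for r in range(out_h):
        for c in range(out_w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    # One multiply-accumulate step: acc = acc + a * b
                    acc += image[r + i][c + j] * kernel[i][j]
            out[r][c] = acc
    return out


def max_pool2d(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    out_h = len(fmap) // size
    out_w = len(fmap[0]) // size
    return [
        [
            max(
                fmap[r * size + i][c * size + j]
                for i in range(size)
                for j in range(size)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


if __name__ == "__main__":
    # Hypothetical 3x3 input and 2x2 kernel, chosen only for illustration.
    raw = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    kernel = [[1, 0], [0, 1]]
    features = conv2d_mac(raw, kernel)  # MAC-heavy step
    pooled = max_pool2d(features)       # pooling step
    print(features, pooled)
```

In this sketch the innermost loop of `conv2d_mac` is the kind of dense arithmetic the article attributes to the AI-MAC, while `normalize` and `max_pool2d` stand in for the preprocessing and pooling work it attributes to the DRP.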

Building an AI Ecosystem Takes Time

Despite DRP-AI’s advantages in hardware efficiency, Sailesh acknowledged that “compared to some cloud-based competitors with an established ecosystem, we currently lack the breadth of ecosystem partners.” The “cloud” competitors mentioned here likely refer to NVIDIA, with its CUDA and NVIDIA AI ecosystem, even though we believe NVIDIA and Renesas are not running the same race. “We are currently developing the ecosystem, and by the end of this year, we will have hundreds of partners.”

“My expectation is that over time, DRP technology will be increasingly optimized in edge visual AI solutions,” Sailesh said. “If the performance demand is only moderate, then a general CNN (convolutional neural network) solution will suffice. However, if you are looking for a highly optimized solution for energy efficiency and power consumption in visual AI, then you should consider using feed-forward neural network products that can dynamically reconfigure themselves.”

Renesas is still in the early stages of building its AI ecosystem. This is why we see Reality AI as Renesas’s first AI-related acquisition. However, the entire field of edge AI is still in a relatively early development stage, and Renesas has been quite active in the past two years. For example, Sailesh told us that Renesas has an internal team focused on developing AI ecosystem partners, which includes technical marketing and business development teams—they collaborate with different customers.

Moreover, following the acquisition of Reality AI, Renesas also plans to establish an AI excellence center in Maryland, USA. “This is something we are working on, and we expect the team to continue to expand.”

At the end of last month, Renesas announced a partnership with embedded voice solution provider Cyberon, under which the two companies will jointly provide VUI (voice user interface) solutions. “Cyberon is a strong market participant in the voice field. There are many such collaborations, working together with ecosystem partners to solve problems in different end markets. Such applications are implemented on our MCUs and MPUs, and this collaboration will continue,” Sailesh stated. “We expect to expand the scope of cooperation across MCU, MPU, and DRP-AI to maximize their application.”

Another typical ecosystem-building activity was the Renesas Cup National College Student Electronic Design Competition held two years ago, where Renesas encouraged all participants to make effective use of DRP-AI resources in their projects. There are presumably many more technical promotion activities of this kind.

“We are tracking Design-In data for DRP-AI and other AI-related solutions. Although I cannot provide exact numbers, the number of Design-Ins for DRP-AI is steadily increasing. Moreover, I now expect the team to hit a target of multiplying Design-Ins each year.”

Completing the Ecosystem and Differentiated Competition

Against this background, Renesas’s acquisition of Reality AI is easy to understand: expanding its AI ecosystem and capabilities is naturally a priority. “We have previously collaborated with Reality AI. This company is one of our ecosystem partners, and we have been collaborating on HVAC (heating, ventilation, and air conditioning) load balancing applications.”

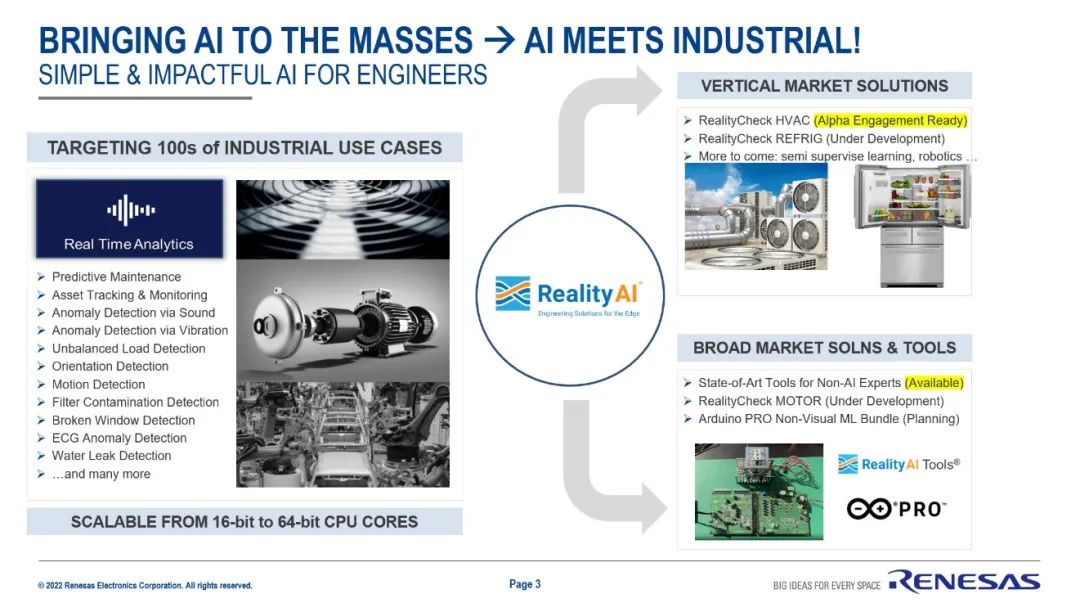

The image above shows the markets and products that Reality AI is involved in. “What sets Reality AI apart from other competitors is that they focus on the industrial sector.” This is also Renesas’s stronghold. “For us, this is quite a match,” Sailesh said. “At the same time, they are also involved in the automotive sector.” Renesas’s two major business units are IIBU (Industrial/Infrastructure/IoT) and ABU (Automotive).

“I think, based on our overall strategy, both parties are quite aligned. Moreover, talent in this field is very hard to find. The Reality AI team is highly skilled at developing AI models and has DSP experts. This team is not large, but we expect it to grow rapidly.”

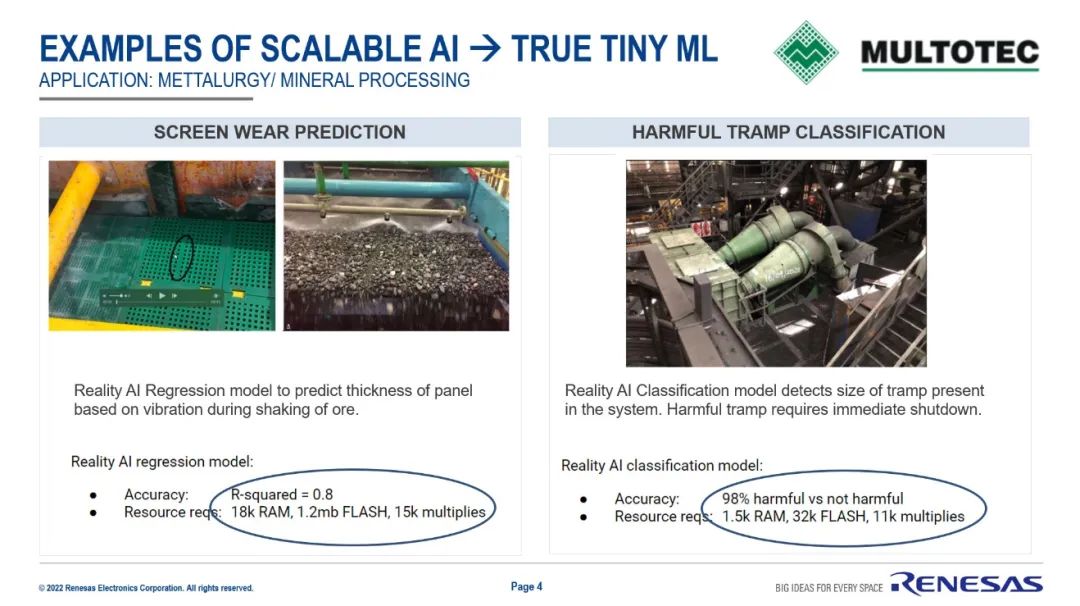

More specifically, Renesas cited two examples of Reality AI models in a presentation slide and referred to them as “True Tiny ML.” The regression model and classification model shown are typical industrial use cases. The key point is the last row, which lists the storage and computing resource requirements and is likely why Renesas calls this “True Tiny ML.” “Reality AI works well with our MCU and MPU workflow: they develop front-end AI models, and we provide translator libraries. After integration (via the e² studio tool), the models can run on MCU and MPU.”

“This way, we can provide customers with a more complete product. Moreover, if customers need to develop AI models but lack the capability, we can also offer Reality AI’s model-as-a-service.” This explanation makes it clear that Reality AI is an important part of strengthening Renesas’s ability to build an AI ecosystem. “The problems that the Reality AI team can solve are crucial for our customers. This is why we worked hard to close this deal.” The acquisition of Reality AI can readily be seen as a horizontal expansion of Renesas’s edge AI technology stack.

“Reality AI’s original customers can use our MCU and MPU; we can also provide AI models to our existing customers through Reality AI’s tools, offering a complete package of end-to-end solutions, from problem definition to AI model implementation on MCU.” This is also the key to how Renesas differentiates itself in the edge AI market; the AI excellence center mentioned earlier is likewise meant to extend the reach of its AI solutions.

“I have already seen the basic demo of how to integrate the tools and ultimately apply them to our MCU,” Sailesh said. “This is still in the early stages, but I expect that in the future we will be able to provide a complete suite. We certainly cannot cover every field. Therefore, our idea is to make the (developer-facing) process seamless and integrate well with our translator libraries and e² studio on the backend. This is a very challenging task, but the team already has a clear idea and early demos.”

Reality AI’s products may be better suited to TinyML devices with less computing power. For the DRP-AI ecosystem, which targets higher computing power, Sailesh likewise spoke of Renesas’s commitment to giving developers a more complete, full-stack, system-level solution that makes AI development more seamless. This appears to have been a consistent theme in Renesas’s pursuit of product differentiation over the years.

At a higher level, the company’s IIBU business is also pursuing its Go Broader and Go Deeper strategies, so collaborations and expansions of various kinds have become commonplace. In this interview, Renesas did not touch on its coverage goals for more vertical edge AI markets (including voice, video, preventive detection, healthcare, etc.). Although Renesas does not intend to cover every application market comprehensively, its collaboration with Syntiant last year on voice-controlled multi-modal AI solutions is a typical example of this approach.

“We believe that edge AI is a rapidly growing field. We can find differentiated directions compared to our competitors in this area. We provide customers with a complete tool suite, which is difficult for some market competitors to match,” Sailesh summarized. “This is not just about acquiring a company; it is about building a comprehensive solution and a user experience that is friendlier than our competitors’. If we can achieve this, we can win in the competition.”

At the end of the interview, Sailesh reiterated what Reality AI gives Renesas that competitors in edge AI cannot easily replace: customers can obtain AI models as a service, which matters especially for enterprises that lack the capability to develop models themselves. “This is a choice that many of our competitors cannot offer.” Although integrating Renesas’s and Reality AI’s products will take some time, Sailesh believes this capability is already enough to set Renesas apart in the market.

“When we talk about 2024 and 2025, you will see significant differences brought by these technologies. We are just getting started, but we are already on the path we expect.” Sailesh said, “We have decided to become a leader in this market. I believe that when the time comes, you will see our imprint in this field, not only from the acquisition of Reality AI but also from our internal development efforts, better toolchains, and end-to-end user experiences.”

Author: Huang Yefeng, Senior Industry Analyst

EET Electronic Engineering Magazine Original
