Welcome to our technical case study column.

Here, we regularly publish open-source technical case studies built on six-axis desktop collaborative robots, each accompanied by a detailed development walkthrough and source code.

Through creative projects, technical development, and hands-on application cases, we showcase the extensibility, scalability, and playability of six-axis desktop collaborative robots, and highlight how well six-axis robotic arms perform as development assistants and learning tools.

We hope these cases inspire robotics enthusiasts, help them better understand robotic technology and its applications, and promote innovation and development in the robotics industry.

Long-press the QR code at the bottom to add our assistant on WeChat and get access to the source code.

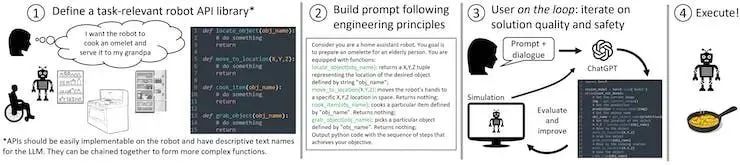

ChatGPT Application for Robots: Design Principles and Model Capabilities

We extend ChatGPT’s capabilities to robots, making it possible to control multiple platforms, such as robotic arms, drones, and home assistant robots, intuitively through natural language.

Have you ever thought about telling a robot what to do in your own words, just like you would with a human? Just tell your home assistant robot: “Please heat my lunch,” and let it find the microwave by itself. Isn’t that amazing? Although language is the most intuitive way for us to express our intentions, we still heavily rely on handwritten code to control robots. Our team has been exploring how to change this reality and achieve natural human-robot interaction using OpenAI’s new AI language model, ChatGPT.
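One common way to structure this kind of language-driven control is to expose a small library of high-level robot functions, describe them to the model in the prompt, and execute only calls to those functions. The sketch below illustrates that pattern under our own assumptions: `move_to`, `grab`, `release`, the JSON reply format, and the millimetre units are all hypothetical, no language model is actually called, and the stub functions only log what a real arm would do.

```python
import inspect
import json
from typing import Callable, Dict, List

# Hypothetical high-level robot API exposed to the language model.
# The stubs only record each call; a real system would command the arm here.
CALL_LOG: List[str] = []


def move_to(x: float, y: float, z: float) -> None:
    """Move the end effector to (x, y, z), in millimetres."""
    CALL_LOG.append(f"move_to({x}, {y}, {z})")


def grab() -> None:
    """Close the gripper."""
    CALL_LOG.append("grab()")


def release() -> None:
    """Open the gripper."""
    CALL_LOG.append("release()")


# Whitelist: the only functions a model reply is allowed to invoke.
API: Dict[str, Callable[..., None]] = {f.__name__: f for f in (move_to, grab, release)}


def build_prompt(task: str) -> str:
    """Describe the available functions and the task in plain language."""
    lines = ["You control a robotic arm using only these functions:"]
    for name, fn in API.items():
        lines.append(f"- {name}{inspect.signature(fn)}: {fn.__doc__}")
    lines.append('Reply with a JSON list of steps like {"name": "grab", "args": []}.')
    lines.append(f"Task: {task}")
    return "\n".join(lines)


def execute_plan(reply: str) -> None:
    """Run a model reply step by step, rejecting any function not in API."""
    steps = json.loads(reply)
    # Validate the whole plan before moving anything.
    for step in steps:
        if step.get("name") not in API:
            raise ValueError(f"unknown function requested: {step.get('name')}")
    for step in steps:
        API[step["name"]](*step.get("args", []))
```

The prompt from `build_prompt` would be sent to a chat model, and its reply passed to `execute_plan`. Validating every step before running any of them means a single invalid call stops the plan before the robot moves at all.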

3D Depth Vision and Unordered Grasping with Robotic Arms

2D cameras (the most common type of camera) capture two-dimensional images, that is, arrays of pixel values along the horizontal and vertical axes. They are typically used to capture static scenes or moving objects, but they cannot provide depth information. In machine vision applications, 2D cameras are used for image classification, object detection, and recognition tasks.

In contrast, depth cameras capture depth information, from which the three-dimensional structure of objects can be recovered. They measure depth using techniques such as structured light, time-of-flight, and stereo vision. In machine vision applications, these 3D cameras are used for point cloud segmentation, object recognition, and 3D reconstruction tasks.
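To make the link between a depth image and 3D information concrete, here is a minimal sketch that back-projects every pixel into a camera-frame point with the standard pinhole camera model. It assumes the depth values are in metres and that the intrinsics `fx`, `fy`, `cx`, `cy` are known from calibration; the function name `depth_to_points` is our own, and real camera SDKs usually provide an optimized version of this step.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth: Sequence[Sequence[float]],
                    fx: float, fy: float, cx: float, cy: float) -> List[Point]:
    """Back-project a depth image (metres) into camera-frame 3D points.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with depth <= 0 are treated as invalid and skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):      # v: row index (vertical pixel coordinate)
        for u, z in enumerate(row):      # u: column index (horizontal pixel coordinate)
            if z <= 0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

The resulting list is a point cloud, the input to the segmentation, recognition, and reconstruction tasks mentioned above.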

For some tasks, the information captured by a 2D camera is no longer sufficient; switching to a depth camera provides additional information, such as the length, width, and height of objects.
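Once the points belonging to one object are isolated, its length, width, and height can be estimated from their extent. This sketch assumes the cloud has already been segmented to a single object and expressed in a frame whose axes line up with the object (for example, z pointing up from the table); `bounding_box_size` is a hypothetical helper, and a rotated object would need an oriented bounding box instead.

```python
from typing import Iterable, Tuple

Point = Tuple[float, float, float]


def bounding_box_size(points: Iterable[Point]) -> Tuple[float, float, float]:
    """Return the extent of a point cloud along x, y and z.

    This is an axis-aligned bounding box, so the values equal the object's
    length, width and height only when the object is aligned with the axes.
    """
    pts = list(points)
    if not pts:
        raise ValueError("empty point cloud")
    xs, ys, zs = zip(*pts)
    return (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))
```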

Object Recognition and Tracking with myCobot 280 Jetson Nano

Our goal is to develop a robotic arm system capable of accurately recognizing and tracking objects to perform effectively in real-world applications. This project involves many technologies and algorithms, including visual recognition, hand-eye coordination, and robotic arm control.
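Hand-eye coordination ultimately means expressing what the camera sees in the robot's own coordinate frame. The minimal sketch below assumes a fixed camera (eye-to-hand setup) whose 4x4 homogeneous transform `T_base_cam` to the robot base has already been obtained from a hand-eye calibration; the function name and example values are illustrative, not taken from the project.

```python
from typing import Sequence, Tuple

Matrix4 = Sequence[Sequence[float]]
Vec3 = Tuple[float, float, float]


def camera_to_base(T_base_cam: Matrix4, p_cam: Vec3) -> Vec3:
    """Map a point from the camera frame to the robot base frame.

    T_base_cam = [[R, t], [0, 1]] is assumed to come from a prior hand-eye
    calibration, so that p_base = R @ p_cam + t.
    """
    x, y, z = p_cam
    ph = (x, y, z, 1.0)  # homogeneous coordinates
    out = [sum(T_base_cam[i][j] * ph[j] for j in range(4)) for i in range(3)]
    return (out[0], out[1], out[2])
```

For a camera mounted on the arm (eye-in-hand), calibration instead gives the camera pose relative to the end effector, so the arm's current end-effector pose must be composed with it before this step.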

The robotic arm used is the myCobot 280 Jetson Nano.

It is a small six-axis robotic arm produced by Elephant Robotics, with a Jetson Nano as its main processor, an ESP32 for auxiliary control, and a UR-style collaborative structure. The myCobot 280 Jetson Nano weighs 1030 g, has a payload of 250 g and a working radius of 280 mm, and is compact, portable, and easy to operate. Despite its small size it is capable, and it can work safely alongside humans.

Learning the SLAM Algorithm of myAgv and Dynamic Obstacle Avoidance

As technology advances, we hear terms like artificial intelligence and autonomous navigation more and more often. However, autonomous driving is not yet widespread and is still undergoing continuous development and testing. Having been a car enthusiast since childhood, I am very interested in this technology.

By chance, I bought a SLAM car online that uses a 2D LiDAR for mapping, autonomous navigation, dynamic obstacle avoidance, and other functions. Today, I will document how I implemented dynamic obstacle avoidance on this SLAM car using the DWA (Dynamic Window Approach) and TEB (Timed Elastic Band) algorithms.
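DWA works by sampling velocity commands the robot can actually reach within the next control period (the dynamic window), simulating each one over a short horizon, discarding those that collide with obstacles, and scoring the rest. The sketch below is a simplified, textbook-style version for a unicycle (differential-drive) model; the limits, weights, and unnormalized scoring are our own assumptions, it is not the ROS planner running on the car, and TEB is not shown.

```python
import math
from typing import List, Optional, Sequence, Tuple

Pose = Tuple[float, float, float]   # x (m), y (m), heading (rad)
Obstacle = Tuple[float, float]      # obstacle point (m), e.g. a LiDAR hit


def simulate(pose: Pose, v: float, w: float, dt: float, steps: int) -> List[Pose]:
    """Roll out a constant (v, w) command with a unicycle motion model."""
    x, y, th = pose
    traj = []
    for _ in range(steps):
        x += v * math.cos(th) * dt
        y += v * math.sin(th) * dt
        th += w * dt
        traj.append((x, y, th))
    return traj


def dwa_choose(pose: Pose, v0: float, w0: float, goal: Tuple[float, float],
               obstacles: Sequence[Obstacle],
               v_lim=(0.0, 0.5), w_lim=(-1.0, 1.0), acc=(0.5, 2.0),
               dt=0.1, horizon=10, samples=11, robot_radius=0.2,
               weights=(1.0, 0.3, 0.1)) -> Optional[Tuple[float, float]]:
    """Return the best (v, w) command, or None if every sample collides."""
    # 1. Dynamic window: speeds reachable from (v0, w0) within one period dt.
    v_lo, v_hi = max(v_lim[0], v0 - acc[0] * dt), min(v_lim[1], v0 + acc[0] * dt)
    w_lo, w_hi = max(w_lim[0], w0 - acc[1] * dt), min(w_lim[1], w0 + acc[1] * dt)
    best, best_score = None, -math.inf
    for i in range(samples):
        v = v_lo + (v_hi - v_lo) * i / (samples - 1)
        for j in range(samples):
            w = w_lo + (w_hi - w_lo) * j / (samples - 1)
            # 2. Predict the trajectory and reject it if it hits an obstacle.
            traj = simulate(pose, v, w, dt, horizon)
            clearance = min((math.hypot(px - ox, py - oy)
                             for px, py, _ in traj for ox, oy in obstacles),
                            default=math.inf)
            if clearance <= robot_radius:
                continue
            # 3. Score: heading toward the goal, clearance (capped), forward speed.
            ex, ey, eth = traj[-1]
            err = math.atan2(goal[1] - ey, goal[0] - ex) - eth
            heading = math.pi - abs(math.atan2(math.sin(err), math.cos(err)))
            score = (weights[0] * heading + weights[1] * min(clearance, 1.0)
                     + weights[2] * v)
            if score > best_score:
                best, best_score = (v, w), score
    return best
```

Calling `dwa_choose` once per control cycle with the latest pose, current speeds, and LiDAR obstacle points yields the next command; a `None` result signals that the robot should brake or replan. Re-running it every cycle is what lets DWA react to obstacles that move.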

Competing with Artificial Intelligence! Playing Connect 4 with the myCobot 280 Open-Source Six-Axis Robotic Arm

Research on artificial intelligence playing chess dates back to the 1950s, when computer scientists began exploring how to write programs that let computers play chess. One of the most famous examples is IBM's Deep Blue, which defeated reigning world chess champion Garry Kasparov in 1997 with a score of 3.5-2.5.

An AI that plays chess is essentially a computer that has been given a way of thinking that leads it to victory. There are many ways of thinking, and most of them are built on well-designed algorithms. Deep Blue's core algorithm is brute-force search: it generates all possible moves, searches as deeply as possible, and continually evaluates the resulting positions to find the best move.
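Deep Blue's brute-force search is commonly described as minimax with alpha-beta pruning, which skips branches that cannot change the final decision. As a minimal sketch of that idea applied to Connect 4 (not the article's code; the board layout, win score, placeholder evaluation, and search depth are our own assumptions), the negamax search below generates every legal move, searches to a fixed depth, and scores positions along the way.

```python
import math
from typing import List, Optional, Tuple

ROWS, COLS = 6, 7
Board = List[List[int]]  # board[r][c]: 0 empty, 1 or -1 a player's disc; row 0 is the bottom
DIRECTIONS = ((0, 1), (1, 0), (1, 1), (1, -1))


def new_board() -> Board:
    return [[0] * COLS for _ in range(ROWS)]


def valid_moves(b: Board) -> List[int]:
    """Non-full columns, centre first (better move ordering prunes more branches)."""
    order = sorted(range(COLS), key=lambda c: abs(c - COLS // 2))
    return [c for c in order if b[ROWS - 1][c] == 0]


def drop(b: Board, col: int, player: int) -> int:
    """Place a disc in the lowest empty cell of `col` and return its row."""
    for r in range(ROWS):
        if b[r][col] == 0:
            b[r][col] = player
            return r
    raise ValueError("column is full")


def is_win(b: Board, player: int) -> bool:
    """True if `player` has four in a row in any direction."""
    for r in range(ROWS):
        for c in range(COLS):
            for dr, dc in DIRECTIONS:
                if all(0 <= r + k * dr < ROWS and 0 <= c + k * dc < COLS
                       and b[r + k * dr][c + k * dc] == player for k in range(4)):
                    return True
    return False


def evaluate(b: Board, player: int) -> int:
    """Placeholder evaluation: own centre-column discs minus the opponent's."""
    return sum(b[r][COLS // 2] * player for r in range(ROWS))


def negamax(b: Board, depth: int, alpha: float, beta: float,
            player: int) -> Tuple[float, Optional[int]]:
    """Depth-limited negamax with alpha-beta pruning, scored for `player`."""
    if is_win(b, -player):            # the opponent's last move won
        return -1000 - depth, None    # losing sooner (more depth left) is worse
    moves = valid_moves(b)
    if not moves:
        return 0, None                # board full: draw
    if depth == 0:
        return evaluate(b, player), None
    best_score, best_col = -math.inf, moves[0]
    for col in moves:
        row = drop(b, col, player)
        score = -negamax(b, depth - 1, -beta, -alpha, -player)[0]
        b[row][col] = 0               # undo the move
        if score > best_score:
            best_score, best_col = score, col
        alpha = max(alpha, score)
        if alpha >= beta:             # the opponent will avoid this line: prune
            break
    return best_score, best_col


def best_move(b: Board, player: int, depth: int = 4) -> Optional[int]:
    return negamax(b, depth, -math.inf, math.inf, player)[1]
```

A deeper search or a richer `evaluate` (for example, counting open three-in-a-rows) makes the player stronger at the cost of more computation, which is the same trade-off Deep Blue addressed with dedicated hardware.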

Today, I will introduce how an AI-powered robotic arm plays Connect 4.